Hello,

I need to back up about 30 files with a total size of 800 GB. The files are stored on a NAS device, and the backup destination is Azure. The backup speed is about 2 MB/s, which I believe is very slow.

Duplicati is running on a Windows Server 2012 machine with 32 GB of RAM and a 12-core Intel Xeon processor. The site's Internet connection is 600 Mbps (75 MB/s), and a test upload to Azure used most of this bandwidth, so the connection speed does not seem to be the issue. I have also checked the transfer speed from the NAS to the computer running Duplicati, and it is higher than 100 MB/s. During the backup, RAM usage stays below 50% and CPU usage below 20%.
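For scale, the figures above can be turned into rough transfer-time estimates. This is a minimal sketch that assumes decimal units (1 GB = 1000 MB, 1 byte = 8 bits), a constant rate, and no protocol overhead:

```python
# Rough transfer-time estimates from the figures in this post.
# Assumption: decimal units (1 GB = 1000 MB, 1 MB = 8 Mb) and a constant rate.

def mbps_to_mb_per_s(megabits_per_second: float) -> float:
    """Convert a link speed in megabits/s to megabytes/s."""
    return megabits_per_second / 8


def transfer_hours(total_gb: float, rate_mb_per_s: float) -> float:
    """Hours needed to move total_gb gigabytes at rate_mb_per_s megabytes/s."""
    return total_gb * 1000 / rate_mb_per_s / 3600


link = mbps_to_mb_per_s(600)  # 600 Mbps connection
print(f"link: {link:.0f} MB/s")
print(f"at observed 2 MB/s: {transfer_hours(800, 2):.1f} h")
print(f"at full link speed: {transfer_hours(800, link):.1f} h")
```

Under these assumptions, 800 GB takes about 111 hours (roughly 4.6 days) at 2 MB/s, compared with about 3 hours at the full 75 MB/s, a gap of roughly 37x.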

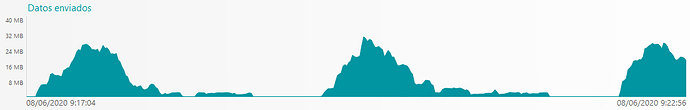

I have observed that during the backup, outbound network traffic is not continuous: Duplicati seems to upload for a couple of minutes, then stop uploading for a couple of minutes, and so on. The plot above shows the computer's upload network usage over a 5-minute span. No other software is running while the backup is in progress.

The Azure ingress metric shows a similar pattern, which confirms this upload behavior.
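One way to put numbers on the on/off pattern is to sample the machine's cumulative bytes-sent counter once per second and count the idle intervals. Below is a minimal sketch. The counter source is left to the caller, and the 1 MB/s idle threshold and the function name `upload_profile` are hypothetical choices made for illustration:

```python
from typing import Dict, Sequence


def upload_profile(cumulative_bytes: Sequence[int], interval_s: float = 1.0,
                   idle_threshold_mb_s: float = 1.0) -> Dict[str, float]:
    """Summarize upload activity from cumulative bytes-sent samples.

    cumulative_bytes: counter readings taken every interval_s seconds.
    idle_threshold_mb_s: hypothetical cut-off below which an interval counts as idle.
    """
    if len(cumulative_bytes) < 2:
        raise ValueError("need at least two samples")
    # Per-interval throughput in MB/s (decimal megabytes).
    rates = [(b - a) / interval_s / 1_000_000
             for a, b in zip(cumulative_bytes, cumulative_bytes[1:])]
    idle = sum(1 for r in rates if r < idle_threshold_mb_s)
    return {
        "average_mb_s": sum(rates) / len(rates),
        "peak_mb_s": max(rates),
        "idle_fraction": idle / len(rates),
    }
```

To collect the samples, one option is to read `psutil.net_io_counters().bytes_sent` (from the third-party `psutil` package) once per second over the same 5-minute span as the plot. A peak close to the link speed combined with a large idle fraction would be consistent with the bursty pattern described above.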

I’m running Duplicati with the following commands (192.168.13.14 is the IP address of the NAS that holds the files to be backed up):

net use R: \\192.168.13.14\Volume-1 <password> /user:***** /persistent:no

"C:\Program Files\Duplicati 2\Duplicati.CommandLine.exe" backup azure://** R:\ --parameters-file=DuplicatiParameters.txt --dbpath=mydb.sqlite --backup-name=Volum-1

net use R: /delete /yes
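The three commands above could also be driven from a script so that the drive mapping is removed even if the backup step raises an error or is interrupted. This is a minimal Python sketch using the standard `subprocess` module. The function names `build_commands` and `run_backup` are hypothetical. The masked values (`azure://**`, the user name and the password) are supplied by the caller rather than filled in here, and the script runs the same commands shown above:

```python
import subprocess
from typing import List

DUPLICATI = r"C:\Program Files\Duplicati 2\Duplicati.CommandLine.exe"


def build_commands(share: str, drive: str, user: str, password: str,
                   target_url: str) -> List[List[str]]:
    """Return the map, backup and unmap commands as argument lists."""
    mount = ["net", "use", drive, share, password, f"/user:{user}", "/persistent:no"]
    backup = [DUPLICATI, "backup", target_url, drive + "\\",
              "--parameters-file=DuplicatiParameters.txt",
              "--dbpath=mydb.sqlite", "--backup-name=Volum-1"]
    unmount = ["net", "use", drive, "/delete", "/yes"]
    return [mount, backup, unmount]


def run_backup(share: str, drive: str, user: str, password: str,
               target_url: str) -> int:
    """Map the share, run the backup, and always unmap afterwards."""
    mount, backup, unmount = build_commands(share, drive, user, password, target_url)
    subprocess.run(mount, check=True)  # fail early if the NAS share cannot be mapped
    try:
        # Duplicati's exit code is returned to the caller unchanged.
        return subprocess.run(backup).returncode
    finally:
        subprocess.run(unmount)  # remove the mapping even on error or Ctrl+C
```

Passing argument lists instead of one command string lets `subprocess` handle the quoting of the Duplicati path, which contains spaces.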

Contents of DuplicatiParameters.txt file:

--azure_access_key="****"

--azure_account_name=****

--encryption-module=aes

--compression-module=zip

--dblock-size=50MB

--passphrase=****

--keep-versions=5

--auto-cleanup=true

--auto-vacuum=true

--asynchronous-concurrent-upload-limit=16

--asynchronous-upload-limit=16

--concurrency-block-hashers=20

--concurrency-compressors=20

--zip-compression-level=9

--console-log-level=Verbose

--disable-module=console-password-input
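To see what some of these settings imply at this scale, the file can be parsed and the number of remote volumes estimated. This is a minimal sketch with several assumptions. It expects one `--option=value` per line, ignores blank lines, and strips surrounding double quotes. It uses decimal units and ignores compression and deduplication, so the volume count is only a rough estimate. The product of `--asynchronous-upload-limit` and `--dblock-size` is shown as a rough indication of how much volume data may wait locally for upload; that reading of the option is an assumption and should be checked against the Duplicati documentation.

```python
import re
from typing import Dict


def parse_parameters(text: str) -> Dict[str, str]:
    """Parse '--name=value' lines into a dict, skipping blank lines."""
    options = {}
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("--"):
            continue  # blank or unrelated line
        name, _, value = line[2:].partition("=")
        options[name] = value.strip('"')
    return options


def size_in_mb(size: str) -> float:
    """Convert '50MB' / '1GB' style sizes to MB (decimal units assumed)."""
    match = re.fullmatch(r"(\d+(?:\.\d+)?)\s*(KB|MB|GB)", size.strip(), re.IGNORECASE)
    if not match:
        raise ValueError(f"unrecognized size: {size!r}")
    factor = {"KB": 0.001, "MB": 1.0, "GB": 1000.0}[match.group(2).upper()]
    return float(match.group(1)) * factor


params_text = """
--dblock-size=50MB

--asynchronous-upload-limit=16
"""
opts = parse_parameters(params_text)
dblock_mb = size_in_mb(opts["dblock-size"])
volumes = 800 * 1000 / dblock_mb  # 800 GB source, no compression/dedup
staged_mb = int(opts["asynchronous-upload-limit"]) * dblock_mb
print(f"~{volumes:.0f} dblock volumes, up to ~{staged_mb:.0f} MB queued for upload")
```

On the backup machine, `params_text` would come from reading `DuplicatiParameters.txt`. With the values above, the estimate is about 16,000 volumes of 50 MB, with up to about 800 MB queued at a time.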

I can’t find the reason why the backup is so slow. Any help would be appreciated, and please let me know if more information is needed.