Hi!

I am using Unraid OS with docker duplicati version 2.0.2.1_beta_2017-08-01.

I selected the folder /photos, which is about 870 GB, and set up a backup job to B2.

I have a 1 Mbit upload connection, so I know it will take a lot of time!
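For a rough sense of scale, the upload time can be estimated from the folder size and the link speed mentioned above. This is only a back-of-the-envelope sketch: it assumes decimal units (1 GB = 10^9 bytes, 1 Mbit = 10^6 bits), a fully saturated link, and no savings from compression or deduplication. The function name is made up for illustration and is not part of Duplicati.

```python
def estimate_upload_days(size_gb: float, upload_mbit_per_s: float) -> float:
    """Return the approximate number of days needed to upload size_gb.

    Assumes decimal units and a link that is fully used the whole time.
    """
    bits_total = size_gb * 1e9 * 8                    # gigabytes -> bits
    seconds = bits_total / (upload_mbit_per_s * 1e6)  # bits / (bits per second)
    return seconds / 86_400                           # 86,400 seconds per day


if __name__ == "__main__":
    days = estimate_upload_days(870, 1)
    print(f"about {days:.0f} days")
```

Under these assumptions the full upload takes roughly 80 days, so interruptions along the way are to be expected.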

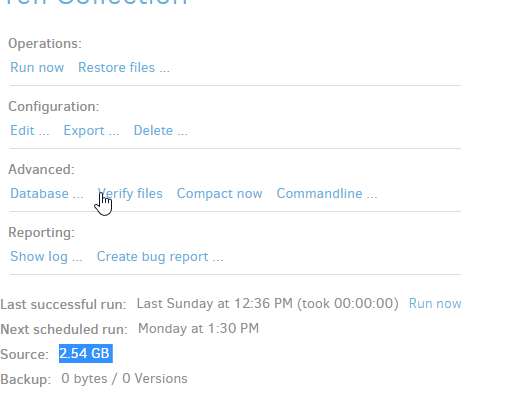

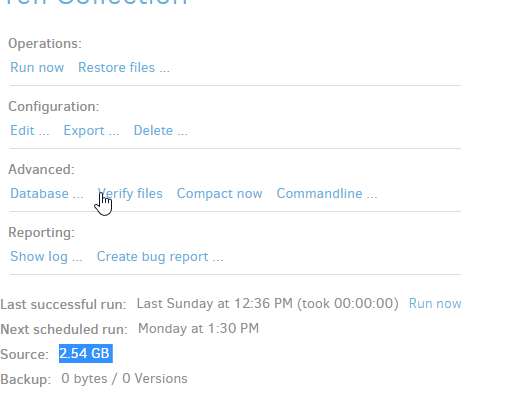

My problem is that the backup source size is wrong, as you can see from the screenshot.

The process has stopped and started several times (router restart, server restart, etc.), just in case it matters. Also, the size does not change from day to day.

Lastly, I have set the permissions of /photos to read-only (in the Docker settings) to be sure Duplicati doesn’t mess anything up (I am new to this, so excuse me for being cautious!)

Now the progress is: 72169 files (856.30 GB) to go

I think this happens because it never finishes. The number reported here is the sum of all fully backed up files. If you have one large file that is repeatedly being processed, this number will not be updated until all parts of that file have been uploaded.

Ok it makes sense this way.

It’s a bit misleading, because the sum of all fully backed up files would be the backup (or destination) size, not the source!

I will wait a few days and see if that number goes up.

Thank you

Hi again,

Today the progress is at: 71131 files (850.31 GB) to go

and I know that I don’t have any file bigger than 3GB.

And the source is still

Source: 2.54 GB

Can I change the source folders to a small one so that the backup completes, and see what the Source field changes to? Or is there a better way to troubleshoot this? I can’t wait until the end only to find out that something is wrong!

Personally, when setting up a new backup job I start with a small subfolder of my source and run that a few times. Once I’m happy with the settings I “embiggen” my Source settings by selecting folders higher up in the tree to include more of what I ultimately want to back up. A similar thing can be done using Filters, though I find it easier to work at the folder level myself.

As for the source size appearing wrong (too big?) I expect the “to go” number is for ALL files found while the “Source” number is for what was actually included in the backup. Things that could make these two numbers vary widely include:

- Do you have any file or folder filters being applied? (e.g. Exclude *.jpg)

- Do you have any filtering parameters set? (e.g. --skip-files-larger-than=10MB)

- Are you excluding temp, hidden, or system files (e.g. --exclude-files-attributes="temporary,system,hidden") when you might have a lot of space used by files of that type?
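One way to check these numbers independently of Duplicati is to count the files and bytes under the source folder yourself and compare the result with the “to go” and “Source” figures. The sketch below is a hypothetical helper, not Duplicati’s own logic: it only mimics a size limit in the spirit of --skip-files-larger-than, ignores attribute-based filters, and reports decimal gigabytes, which may differ slightly from the units Duplicati displays.

```python
import os
from typing import Optional, Tuple


def summarize_tree(root: str, skip_larger_than: Optional[int] = None) -> Tuple[int, int]:
    """Return (file_count, total_bytes) for the files found under root.

    If skip_larger_than is given, files above that many bytes are left out.
    """
    count = 0
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                size = os.path.getsize(path)
            except OSError:
                continue  # unreadable or vanished file: leave it out
            if skip_larger_than is not None and size > skip_larger_than:
                continue  # over the size limit: leave it out
            count += 1
            total += size
    return count, total


if __name__ == "__main__":
    # "/photos" is the source folder used in this thread; adjust as needed.
    files, size = summarize_tree("/photos")
    print(f"{files} files ({size / 1e9:.2f} GB)")
```

If the totals here are close to the “to go” figure while “Source” stays far lower, filters are unlikely to be the cause, and the “Source” value is more likely just not updated yet.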


My config

mono "/app/duplicati/Duplicati.CommandLine.exe" backup "b2://xxxxxxx//photos/?auth-username=xxxxxxx&auth-password=xxxxxxxx" "/photos/" --backup-name="xxxxxxxn" --dbpath="/config/xxxxxxxx.sqlite" --encryption-module="aes" --compression-module="zip" --dblock-size="50mb" --keep-time="1Y" --passphrase="xxxxxxxxxxx" --disable-module="console-password-input"

But I have news!

The source size changed. What I did was click stop and check “stop after upload”, so it probably finished the proper way.

I did test the process before I started the 800GB upload using a small folder of 1GB.

Now that I have no filters and have already uploaded 24GB, can I deselect all folders except one, in order to upload in smaller steps rather than all together?

Yes. You can add/remove folders as you please.


It sounds like between using “stop after upload” when cancelling and/or removing filters you managed to get it working, glad we could help!

It was fixed before I touched filters, so it must have been the proper finish that did the trick!

Thank you all!
