Hi all,

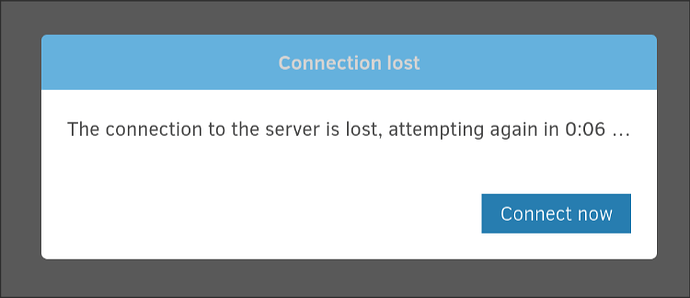

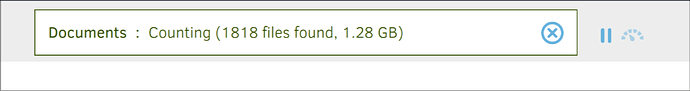

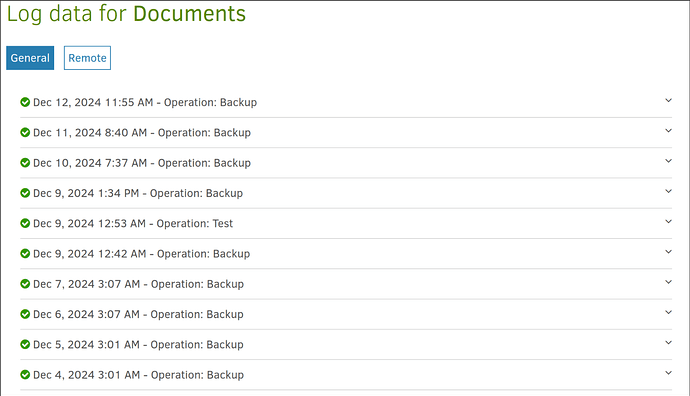

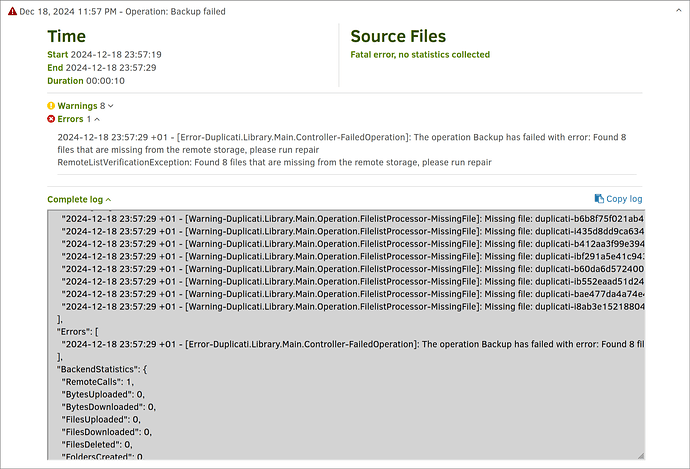

For roughly a week now, I have noticed the UI behaving strangely. As soon as I log in, a popup tells me the connection was lost, then a countdown appears (still a popup), then it logs in again automatically (without me clicking anything). After that, the top of the page shows that it is trying to “verify the backend data” and it starts counting files, but before it completes it says again that the connection is lost. Only one of the two configurations seems to be problematic.

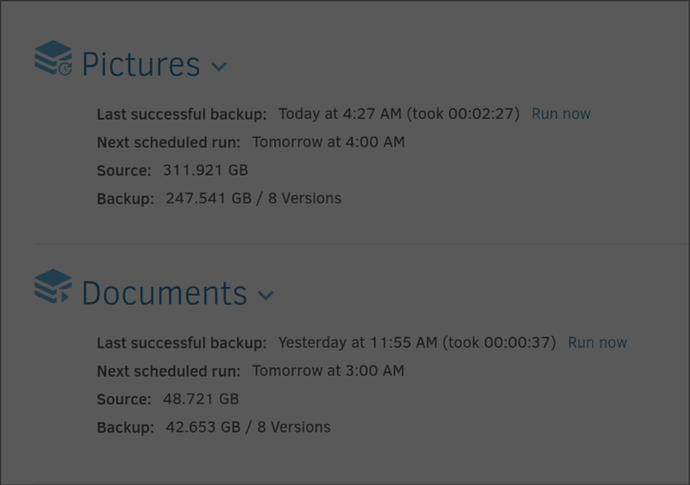

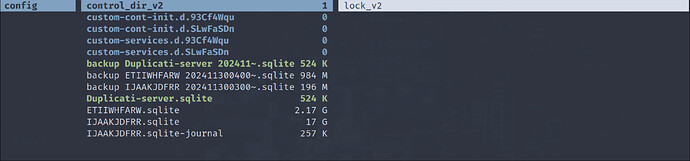

My configuration is quite simple: I run the linuxserver/duplicati Docker image (the “latest” tag uses the “beta” branch), with only two identical jobs pointing to two identical buckets on a remote provider.

The Docker container logs can be summarized as a long list of:

...

Connection to localhost (127.0.0.1) 8200 port [tcp/*] succeeded!

Server has started and is listening on port 8200

Inside getter

Connection to localhost (127.0.0.1) 8200 port [tcp/*] succeeded!

Server has started and is listening on port 8200

Inside getter

Connection to localhost (127.0.0.1) 8200 port [tcp/*] succeeded!

Server has started and is listening on port 8200

Inside getter

Connection to localhost (127.0.0.1) 8200 port [tcp/*] succeeded!

Server has started and is listening on port 8200

Inside getter

...

I am not sure whether those lines come from my uptime checker, which pings Duplicati regularly. However, scrolling up a bit, I find:

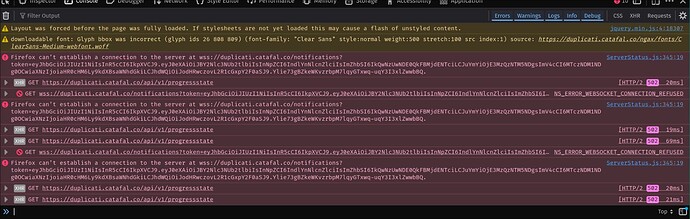

fail: Microsoft.AspNetCore.Diagnostics.ExceptionHandlerMiddleware[1]

An unhandled exception has occurred while executing the request.

Duplicati.WebserverCore.Exceptions.NotFoundException: No active backup

at Duplicati.WebserverCore.Endpoints.V1.ProgressState.Execute()

at lambda_method245(Closure, EndpointFilterInvocationContext)

at Duplicati.WebserverCore.Middlewares.HostnameFilter.InvokeAsync(EndpointFilterInvocationContext context, EndpointFilterDelegate next)

at Duplicati.WebserverCore.Middlewares.LanguageFilter.InvokeAsync(EndpointFilterInvocationContext context, EndpointFilterDelegate next)

at Microsoft.AspNetCore.Http.RequestDelegateFactory.<ExecuteValueTaskOfObject>g__ExecuteAwaited|129_0(ValueTask`1 valueTask, HttpContext httpContext, JsonTypeInfo`1 jsonTypeInfo)

at Duplicati.WebserverCore.Middlewares.WebsocketExtensions.<>c__DisplayClass0_0.<<UseNotifications>b__0>d.MoveNext()

--- End of stack trace from previous location ---

at Microsoft.AspNetCore.Diagnostics.ExceptionHandlerMiddlewareImpl.<Invoke>g__Awaited|10_0(ExceptionHandlerMiddlewareImpl middleware, HttpContext context, Task task)

…and I am not sure whether this is a cause or a consequence of something else.
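In case it helps to narrow this down, here is a minimal Python sketch for summarizing the container log: it counts how often each distinct line occurs and how many times the "Server has started" message appears. The function names are made up for illustration, and the sample lines are just the excerpt quoted in this post. Whether each "Server has started" line really means a server restart (rather than a repeated health check) is an assumption I have not verified.

```python
from collections import Counter

# Marker text copied from the log excerpt in this post.
START_MARKER = "Server has started and is listening on port"


def summarize_log(lines):
    """Count occurrences of each distinct, non-empty log line."""
    counts = Counter()
    for line in lines:
        text = line.strip()
        if text and text != "...":  # skip blank lines and elision markers
            counts[text] += 1
    return counts


def count_server_starts(lines, marker=START_MARKER):
    """Count lines containing the startup message (assumed, not confirmed, to mean a restart)."""
    return sum(1 for line in lines if marker in line)


# Sample input: the three-line cycle quoted above, repeated four times.
sample = [
    "Connection to localhost (127.0.0.1) 8200 port [tcp/*] succeeded!",
    "Server has started and is listening on port 8200",
    "Inside getter",
] * 4

for text, n in summarize_log(sample).most_common():
    print(f"{n:4d}  {text}")
print("startup messages:", count_server_starts(sample))
```

To run it against the real log, the `sample` list could be replaced with lines read from the saved output of `docker logs` for the container. If the number of startup messages keeps growing between two runs, that would at least hint that the cycle is still repeating.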

Do you have any idea?

The server has been running more or less unmaintained for years now, never with an issue. The Docker image is updated automatically in the background (I am now on Duplicati - 2.1.0.2_beta_2024-11-29).