Hi Forum,

I am new to Duplicati. Great software, thanks to all the developers!

Here is my question:

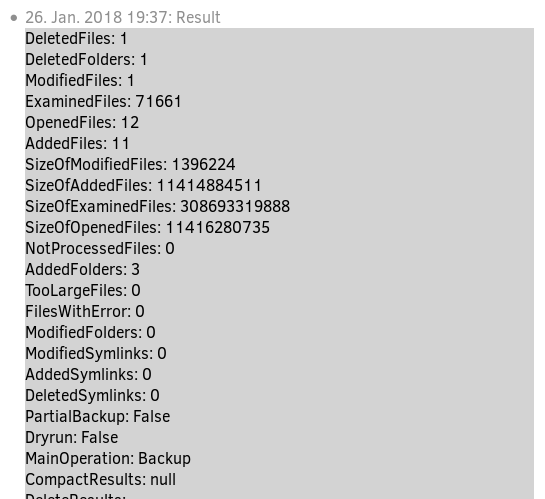

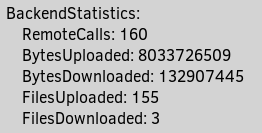

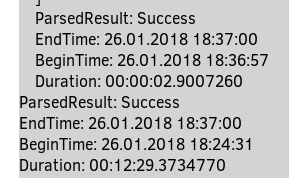

I have 1 TB of data, mainly large files. I ran a backup to a WebDAV share on the same network. It took more than 48 h, which is OK for me.

Now I am running a second backup with only 2 files (2 x 2.5 GB) added. This backup is going to take approx. 24 h, which is much too slow for my needs. The CPU load is at about 95%.
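To put rough numbers on this, here is a small Python sketch of the average throughput implied by the figures above. The durations are only my estimates ("more than 48 h", "approx. 24 h"), and the last case assumes the whole source (the `SourceFilesSize` value from the config below) is being re-read during the second run, which I cannot confirm.

```python
def throughput_mb_s(num_bytes, hours):
    """Average throughput in MB/s (decimal megabytes) over the given duration."""
    return num_bytes / (hours * 3600) / 1e6

# First run: about 1 TB in (at least) 48 h.
first_run = throughput_mb_s(1e12, 48)

# Second run, counting only the two new 2.5 GB files over about 24 h.
second_run_new_only = throughput_mb_s(2 * 2.5e9, 24)

# Second run, ASSUMING the whole source is read again
# (SourceFilesSize taken from the exported config).
second_run_full_read = throughput_mb_s(1205697367501, 24)

print(f"first run:            {first_run:.2f} MB/s")
print(f"second run, new only: {second_run_new_only:.2f} MB/s")
print(f"second run, full read: {second_run_full_read:.2f} MB/s")
```

If only the new files were being processed, the rate would be far below that of the first run, so my guess is that the second run is re-reading much more than the 5 GB that was added.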

Can someone

- tell me whether that is normal or whether something is going wrong?

- give me some tips for tuning things?

This is what I have already done:

- Increased blocksize to 2MB

- Increased dblock size to 1GB

- Changed file-hash-algorithm to MD5 (not sure whether this may be counterproductive)

- Set zip-compression-method to “none”

- Turned encryption off
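Since I am not sure whether the MD5 change helps at all, one way to get a feeling for it on this CPU would be a quick hashing benchmark like the sketch below. It uses Python's `hashlib`, not Duplicati's own hashing code, so the absolute numbers will not match what Duplicati achieves; `hash_throughput` is just a name I made up for the illustration.

```python
import hashlib
import time

def hash_throughput(algorithm, total_mb=64, chunk_mb=1):
    """Hash total_mb MiB of zero bytes in chunk_mb MiB pieces; return MiB/s."""
    chunk = b"\0" * (chunk_mb * 1024 * 1024)
    hasher = hashlib.new(algorithm)
    start = time.perf_counter()
    for _ in range(total_mb // chunk_mb):
        hasher.update(chunk)
    hasher.digest()
    elapsed = time.perf_counter() - start
    return total_mb / elapsed

# Compare the two algorithms on the machine that runs the backup.
for name in ("md5", "sha256"):
    print(f"{name}: {hash_throughput(name):.0f} MiB/s")
```

If both values are far above the backup's overall rate, the choice of hash algorithm is probably not the bottleneck.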

Is there a way to make Duplicati skip the hashing if the metadata (mtime, file size) is unchanged? The data in that fileset is not very important, so I probably do not need all the safety Duplicati can provide.
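To illustrate the kind of check I mean, here is a minimal Python sketch. It is not how Duplicati works internally; `file_signature`, `needs_rehash` and the `known_signatures` dictionary are hypothetical names used only for this example.

```python
import os

def file_signature(path):
    """Return the (mtime in nanoseconds, size in bytes) pair for a file."""
    st = os.stat(path)
    return (st.st_mtime_ns, st.st_size)

def needs_rehash(path, known_signatures):
    """True if the file is new or its mtime/size differ from the stored pair."""
    return known_signatures.get(path) != file_signature(path)
```

A file whose mtime and size both match the values recorded during the previous run would be skipped without reading its contents. The trade-off is that a change which preserves both values would go unnoticed, which I could accept for this data.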

I use Duplicati 2.0.2.1 on Debian Jessie, Celeron CPU J1900 @ 1.99GHz

I have pasted the exported config below.

Thank you for any advice.

Gerd

------ config ------

{

“CreatedByVersion”: “2.0.2.1”,

“Schedule”: {

“ID”: 1,

“Tags”: [

“ID=3”

],

“Time”: “2018-01-25T20:00:00Z”,

“Repeat”: “1D”,

“LastRun”: “2018-01-24T01:00:00Z”,

“Rule”: “”,

“AllowedDays”: null

},

“Backup”: {

“ID”: “3”,

“Name”: “medienunenc”,

“Tags”: [],

“TargetURL”: “webdav://192.168.X.X:8080//duplicati/light/medien?auth-username=XXXXXXX&auth-password=XXXXXXXXX”,

“DBPath”: “/root/.config/Duplicati/QKSQLICPJU.sqlite”,

“Sources”: [

"/mnt/data/shares/medien/"

],

“Settings”: [

{

“Filter”: “”,

“Name”: “encryption-module”,

“Value”: “”,

“Argument”: null

},

{

“Filter”: “”,

“Name”: “compression-module”,

“Value”: “zip”,

“Argument”: null

},

{

“Filter”: “”,

“Name”: “dblock-size”,

“Value”: “1GB”,

“Argument”: null

},

{

“Filter”: “”,

“Name”: “keep-time”,

“Value”: “6M”,

“Argument”: null

},

{

“Filter”: “”,

“Name”: “--no-encryption”,

“Value”: “true”,

“Argument”: null

},

{

“Filter”: “”,

“Name”: “--blocksize”,

“Value”: “2MB”,

“Argument”: null

},

{

“Filter”: “”,

“Name”: “--tempdir”,

“Value”: “/mnt/tmp2”,

“Argument”: null

},

{

“Filter”: “”,

“Name”: “--file-hash-algorithm”,

“Value”: “MD5”,

“Argument”: null

},

{

“Filter”: “”,

“Name”: “--zip-compression-method”,

“Value”: “None”,

“Argument”: null

},

{

“Filter”: “”,

“Name”: “--run-script-before-required”,

“Value”: “/usr/local/bin/weck-nas.sh”,

“Argument”: null

},

{

“Filter”: “”,

“Name”: “--run-script-timeout”,

“Value”: “200s”,

“Argument”: null

}

],

“Filters”: [

{

“Order”: 0,

“Include”: false,

“Expression”: “/mnt/data/tmp2/”

},

{

“Order”: 1,

“Include”: false,

“Expression”: “/mnt/data/shares/medien/stuffnotinbackup/”

},

{

“Order”: 2,

“Include”: false,

“Expression”: “**/.Trash-1000*/”

}

],

“Metadata”: {

“LastErrorDate”: “20180124T123703Z”,

“LastErrorMessage”: “The remote server returned an error: (401) Unauthorized.”,

“LastDuration”: “00:06:02.9563580”,

“LastStarted”: “20180124T124027Z”,

“LastFinished”: “20180124T124629Z”,

“LastBackupDate”: “20180124T124341Z”,

“BackupListCount”: “2”,

“TotalQuotaSpace”: “0”,

“FreeQuotaSpace”: “0”,

“AssignedQuotaSpace”: “-1”,

“TargetFilesSize”: “1185487153487”,

“TargetFilesCount”: “2214”,

“TargetSizeString”: “1,08 TB”,

“SourceFilesSize”: “1205697367501”,

“SourceFilesCount”: “61513”,

“SourceSizeString”: “1,10 TB”,

“LastBackupStarted”: “20180124T124027Z”,

“LastBackupFinished”: “20180124T124629Z”

},

“IsTemporary”: false

},

“DisplayNames”: {

"/mnt/data/shares/medien/": “medien”

}

}
