Hi all.

Brand new to Duplicati (and pretty clueless with AWS - S3 & Glacier - as well) and my first attempt to create a backup is running at a super-slow speed (3.32 MB/s).

I just ran a speedtest on my connection and it has a download of 75 Mbps & upload of 12.36Mbps.

My upload is normally 30+Mbps, so I am assuming duplicati is hogging that extra bandwidth.

Given I have 3TB to upload, that’s going to take forever at 3MB/s…

It did just occur to me that Mbps and MB/s are different (from memory, there’s 8Mbps per MBps?) so perhaps that explains my “slow” speed?

Anyway, if I have it wrong (ie it’s not 8Mbps to 1MBps), how do I speed things up?

Also, I went with the default of 50MB blocks. Wondering if I’d be better off increasing that size, and if so, to what?

Thanks in advance for any advice.

Hello and welcome!

If your upload is normally 30Mbps and Duplicati is doing 3.32MB/s, it is using almost all your upload. As you noted, you need to multiply the MB/s number by 8 to get the Mbps value. So 3.32MB/sec is like 26Mbps.

When you ran your speed test, it was competing with Duplicati for the same upload bandwidth. In other words, Duplicati was slowed down during the test; the speed test wasn't simply showing you the bandwidth Duplicati left unused (if that makes sense).

A 3TB backup will take a long time with a 30Mbps upload speed, but it will eventually finish. The 50MB volume size is probably not causing you any issue as you’re already almost getting max speed for your DSL.
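To put rough numbers on that, here is a small Python sketch of the MB/s to Mbps conversion and the resulting upload time. It assumes 3TB means 3 × 10^12 bytes and that Duplicati holds a steady 3.32MB/s. Compression and deduplication may shrink what actually gets uploaded, so treat the result as a ballpark only.

```python
def mbytes_to_mbits(mb_per_s: float) -> float:
    """Convert megabytes per second to megabits per second (8 bits per byte)."""
    return mb_per_s * 8


def upload_days(total_bytes: float, bytes_per_s: float) -> float:
    """Days needed to transfer total_bytes at a constant bytes_per_s."""
    seconds = total_bytes / bytes_per_s
    return seconds / 86_400  # 86,400 seconds per day


rate_mb = 3.32             # MB/s as reported by Duplicati
backup_bytes = 3 * 10**12  # 3 TB in decimal units (assumption)

print(f"{mbytes_to_mbits(rate_mb):.2f} Mbps")                       # 26.56 Mbps
print(f"{upload_days(backup_bytes, rate_mb * 10**6):.1f} days")     # 10.5 days
```

At that rate the initial upload is roughly a week and a half of continuous transfer.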

Hopefully you saw the other threads about what care you should take when using archive storage tiers on S3.

Beyond that, have you customized your deduplication block size? The default of 100KB is too small for a 3TB backup. I would probably suggest a block size of 5MB. Unfortunately you cannot change this setting once you’ve started your backup. If you want to change it you have to start over.

Also for large backups I prefer to do it in sections. Select a subset of the data to back up first, let it finish, and then slowly add more data to the selection list. This way you’ll have opportunities to reboot your machine, if needed. (You don’t want to reboot during the middle of a backup.)

You may also consider having multiple backup jobs instead of one huge one. This gives you an opportunity to set different retention values on different types of data, if desired.


Hi Rod & thanks heaps for your super-prompt and helpful reply!

Yeh, 3TB is gonna take forever. I think I just need to buy a big backup hard drive, put most of the files on that, and then only back up the regularly changing stuff to Amazon.


Re: the dedup block size, I’m not sure what I set that to. I’ll go check it out. What’s the benefit of increasing it?

As for the other threads about the care needed when using S3 archive tiers, I'm not sure whether I've seen the ones you have in mind. I assume you're referring to the difference between warm and cold storage, plus the higher cost of restoring files out of Glacier?

Any tips that might help?

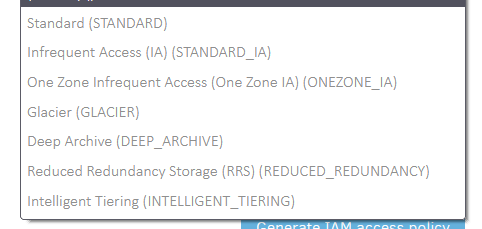

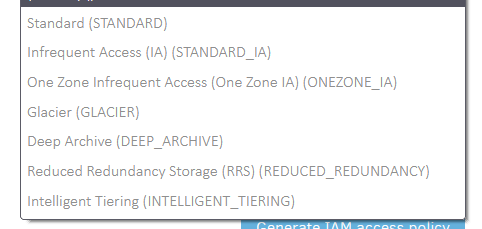

Lastly, I couldn't figure out how to change which storage class to use. I'm currently using S3 One Zone-IA but was thinking about switching to a cheaper option. I didn't know how to do that, though, since Duplicati doesn't seem to list all the current Amazon options.

Thanks again for your help.

Eran

Increasing the deduplication block size reduces the number of blocks that need to be tracked. When the database has to track too many blocks, some operations slow down and the local SQLite database gets pretty large. The downside to larger block sizes is that deduplication efficiency is reduced to some degree.
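For a sense of scale, this rough Python estimate counts how many blocks 3TB of source data would be split into at the default 100KB block size versus the suggested 5MB. It assumes KB and MB are 1024-based and ignores deduplication, per-file partial blocks and metadata, so real counts will differ somewhat; the ratio is the point.

```python
import math

KIB = 1024
MIB = 1024 * KIB


def block_count(total_bytes: int, block_size: int) -> int:
    """Approximate number of blocks if total_bytes were one contiguous stream."""
    return math.ceil(total_bytes / block_size)


backup_bytes = 3 * 10**12  # 3 TB of source data, decimal units (assumption)

for label, size in [("100KB", 100 * KIB), ("5MB", 5 * MIB)]:
    print(f"{label}: ~{block_count(backup_bytes, size):,} blocks")
# 100KB: ~29,296,875 blocks
# 5MB: ~572,205 blocks
```

That is about 51 times fewer rows for the database to track, which is where the speed and database size savings come from.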

Please see the "Choosing Sizes in Duplicati" page in the Duplicati 2 User's Manual for more information.

If you are using archive tier storage with S3, you'll need to use unlimited retention, disable compaction, and disable backend verification. This is because files stored in Glacier cannot be read instantly, and deleting files early incurs a storage cost penalty.
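To illustrate the early deletion penalty, here is a Python sketch of how S3 bills objects removed before a storage class's minimum storage duration: you pay as if the object had been kept for the full minimum. The durations in the table are AWS's published minimums as I understand them (the keys are S3 API storage class names); check the current S3 pricing page before relying on them. This is why compaction, which deletes and rewrites partially used volumes, can get expensive on archive tiers.

```python
# Minimum storage duration in days per S3 storage class.
# Assumed values; verify against current AWS documentation.
MIN_STORAGE_DAYS = {
    "STANDARD": 0,
    "STANDARD_IA": 30,
    "ONEZONE_IA": 30,
    "GLACIER_IR": 90,
    "GLACIER": 90,
    "DEEP_ARCHIVE": 180,
}


def billed_days(storage_class: str, days_stored: int) -> int:
    """Days you pay for: early deletion is billed as if kept for the minimum."""
    return max(days_stored, MIN_STORAGE_DAYS[storage_class])


def early_delete_penalty_days(storage_class: str, days_stored: int) -> int:
    """Extra days billed beyond the time the object actually existed."""
    return billed_days(storage_class, days_stored) - days_stored


# A volume compacted away after 20 days in Deep Archive is still billed for 180 days.
print(early_delete_penalty_days("DEEP_ARCHIVE", 20))  # 160
```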

So set your retention to unlimited and set the following options: --no-backend-verification and --no-auto-compact.
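If you want to double-check a job's advanced options, the Python sketch below reports the two settings above if they are missing, plus any retention option that would limit versions. The helper name and the dict-of-strings representation are hypothetical, just for illustration. --keep-time, --keep-versions and --retention-policy are Duplicati's retention options, and the check is deliberately simplistic: it treats any retention option as limiting.

```python
# Hypothetical helper: the function name and option-dict shape are illustrative only.
RETENTION_OPTIONS = ("keep-time", "keep-versions", "retention-policy")
REQUIRED_FLAGS = ("no-backend-verification", "no-auto-compact")


def archive_tier_problems(options: dict[str, str]) -> list[str]:
    """List problems with a job's advanced options for archive-tier storage."""
    problems = []
    # Any retention option means old volumes may be deleted early.
    for name in RETENTION_OPTIONS:
        if name in options:
            problems.append(f"--{name} is set; archive tiers need unlimited retention")
    # Both flags must be explicitly enabled.
    for name in REQUIRED_FLAGS:
        if options.get(name, "false").lower() != "true":
            problems.append(f"--{name} should be set to true")
    return problems


job = {"no-auto-compact": "true", "keep-versions": "5"}
for problem in archive_tier_problems(job):
    print(problem)
# Prints one line about --keep-versions and one about --no-backend-verification.
```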

Doing restores from Glacier may be tricky. You might need to move at least some objects to a higher tier first, which incurs a cost. I haven't actually done that myself, but there are threads about it. (I personally am testing using Glacier to hold a copy of my backup data. If I need to restore, it will be from the primary copy on my NAS. The Glacier copy is only for a worst-case scenario where my NAS has failed.)

As for changing the storage class, you can configure that on the backup job's Destination tab. To change the class of objects that are already uploaded, you can do that in the AWS console.

Here is what I see… is it missing something?
