I read all of that the other day and wasn’t sure if I would need it, so I did borrow an extra Windows computer from a friend in case it came to that. I only have the Mac, and it doesn’t look like the tool is Mac compatible.

This tool can be used in very specific situations, where you have to restore data from a corrupted backup. The procedure for recovering from this scenario is covered in Disaster Recovery.

It’s not yet clear what’s going on, and RecoveryTool itself has limitations, maybe on memory usage, however whatever’s up with dindex won’t bother it at all because it only uses dblock and dlist. It possibly is slower than regular restore (I don’t know if anyone has benchmarked), but it does exist…

I’m still hoping that the download problems were just a destination weirdness, and that the extra dindex files are actually extra. A dindex indexes a dblock and names it inside, using a file that looks like the dblock but is actually the index to its dblock. Ideally I think it’s one-to-one, but sometimes it’s different.

The topic “Things that make you go, ‘Uh-oh.’” was opened by someone who noticed that their counts were odd. The pictures and explanations there also give a glimpse of the internal tables. I think other topics do too.

Downloads page macOS section shows Intel, Apple Silicon, and Homebrew, but Windows works too. Pictures I’ll post are Windows. I assume any OS differences are minor, but I don’t know for certain…

I don’t use Macs, but I’d assumed that basically all C# programs work the same way whatever the platform. Under Linux, all Duplicati executables are .exe files that would work as-is under Windows; it’s just that under Linux it’s necessary to launch them with

mono myprog.exe

About using it at all: repairing seems to take an awful lot of time in some cases anyway (my idea is that when its internal data structures on the backend are badly broken, Duplicati starts to download everything), so if several attempts are necessary, it could be faster to bite the bullet and start using the recovery tool.

If you have another computer available, you can even start the process in parallel.

I don’t want to derail the main effort here, but the bug report failed with the error attempted to write a stream that is larger than 4GiB without setting the zip64 option.

I likely got various tool discussions confused. My post was about macOS SQLite browser.

Yikes. How big was Source on home screen or log, and how big is current local database?

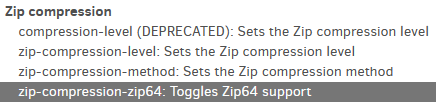

In Options → Advanced options (and we can just hope that bug report respects the option):

Duplicati.CommandLine.exe help zip-compression-zip64

--zip-compression-zip64 (Boolean): Toggles Zip64 support

The zip64 format is required for files larger than 4GiB, use this flag to

toggle it

* default value: False
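To make the 4GiB limit in that help text concrete, here is a minimal sketch of the size check behind the error message. The function name and the sample sizes are made up for illustration, and this is not how Duplicati itself decides; it only shows the arithmetic.

```python
# Roughly 4 GiB in bytes: the threshold named in the error message above.
ZIP_LIMIT_BYTES = 4 * 1024**3


def needs_zip64(size_bytes: int) -> bool:
    """Return True when a single stream of this size is over the 4 GiB limit."""
    return size_bytes > ZIP_LIMIT_BYTES


# Hypothetical sizes, for illustration only.
print(needs_zip64(10 * 1000**3))  # a file of about 10 GB -> True
print(needs_zip64(75 * 1024**2))  # a file of about 75 MB -> False
```

Anything that puts a single stream larger than this into a zip would need the option turned on.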

That’s the 90% to 100% range, where it looks through all remaining dblock files. Downloading everything locally once (ideally with a fast downloader that uses concurrency to keep the Internet connection full) can be faster, and then there are no more downloads. RecoveryTool does not download concurrently.

I suppose we can ask about the theoretical Internet speed (almost any speed test is concurrent) to guess whether running another download in parallel would hurt both downloads, and slow down the end restore.

source is about 1 TB and backup says about 1.2 TB. The DB is just under 10 Gig.

Internet speed fluctuates between 200 and 300 Mbps, but in reality it seems to be downloading the files from the explorer at about half that. I’m around 350 GB in out of the 1.2 TB currently, so I’ve got a while to go.
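For a rough idea of how long "a while" is, the numbers above can be turned into an estimate. This is only a sketch: it assumes the speed is in megabits per second, uses decimal units, takes "about half" of 200 to 300 as 100 to 150, and ignores per-file overhead. The function name is made up.

```python
def hours_to_download(remaining_gb: float, mbps: float) -> float:
    """Estimate hours to download remaining_gb gigabytes at mbps megabits/second."""
    bits = remaining_gb * 1000**3 * 8       # gigabytes -> bits
    seconds = bits / (mbps * 1000**2)       # megabits/s -> bits/s
    return seconds / 3600


remaining_gb = 1200 - 350  # about 1.2 TB total, about 350 GB already downloaded

for mbps in (100, 150):
    print(f"{mbps} Mbps: {hours_to_download(remaining_gb, mbps):.1f} hours")
# 100 Mbps: 18.9 hours
# 150 Mbps: 12.6 hours
```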

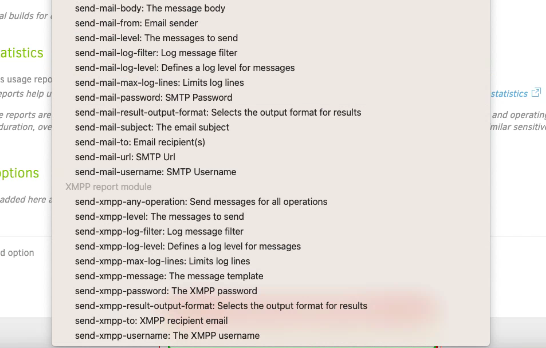

I don’t have a zip option category, nor does it list that in the core section. The last item is the XMPP settings

That’s larger than default blocksize can handle efficiently, so database operations will sadly be slow.

The 1 MB suggested in “Please change default blocksize to at least 1MB #4629” would be just about right for a 1 TB backup.

There are other thoughts, but that’s probably a part of why the database is big. Lots of blocks in it…
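As a back-of-the-envelope illustration of "lots of blocks", this sketch counts how many blocks a 1 TB source splits into at two block sizes. It assumes a 100 KB default blocksize for this Duplicati version and binary units, and it ignores deduplication, compression, and multiple versions, so treat the results as rough orders of magnitude only.

```python
def block_count(source_bytes: int, blocksize_bytes: int) -> int:
    """Number of fixed-size blocks needed to cover source_bytes (ceiling division)."""
    return -(-source_bytes // blocksize_bytes)


source = 1 * 1024**4  # about 1 TB of source data

# 100 KB is the assumed default; 1 MB is the size suggested in issue #4629.
for label, blocksize in (("100 KB", 100 * 1024), ("1 MB", 1024**2)):
    print(f"blocksize {label}: about {block_count(source, blocksize):,} blocks")
# blocksize 100 KB: about 10,737,419 blocks
# blocksize 1 MB: about 1,048,576 blocks
```

Roughly ten times fewer blocks means roughly ten times fewer rows for the database to track.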

This increases my wish to see if we can avoid having to recreate the database, and instead keep using it with the downloaded files to avoid the download error (which RecoveryTool would possibly also run into).

I guess I’ll leave it to you on whether you want to start a simultaneous download with RecoveryTool.

The remoteurl can be had from Export As Command-line, but a less disruptive test with it would be Duplicati.CommandLine.BackendTool.exe to see if get of a previous failing download works with it.

As mentioned, you may need to put mono before the program path if macOS won’t run it directly.

For a heavier workout, edit URL to an empty folder for Duplicati.CommandLine.BackendTester.exe.

You might be in Default options on Settings screen (looking at background). You want Options screen, then Advanced options. Perhaps Settings doesn’t offer Zip because it’s not always used…

I think I’ll wait for the bucket download to finish first just for fear of maybe messing something up before trying the Recovery Tool.

I don’t see this anywhere… I just have Settings on the left. I also went to edit the backup job and go to options, and it’s the same list as the one in the main settings

I get Core options then destination specific ones, then Zip compression, then Password prompt.

Zip is not required, but almost nobody uses anything else, because 7z doesn’t really work properly…

It looks the same way on Linux as Windows. I don’t know if there’s something on macOS that differs.

You could start down the path of a completely new backup, to look at Options. Don’t actually Save it.

The CREATE-REPORT command might be an alternative if the test on the last line also fails to offer the option.

Possibly somebody else with macOS can also test, but such helpers are rare. It’s possible it’s a bug.

You could also do the file counting while waiting, or download a DB browser to look in table yourself.

We’d try to stay away from the deep stuff which someone else might try. It’d just be type and counts.
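The file counting could be done with a few lines of script instead of by hand. This is a minimal sketch, assuming the backup files follow the usual `duplicati-….<type>.zip.aes` naming, where the type is dblock, dindex, or dlist. The helper names and the folder path in the comment are hypothetical.

```python
from collections import Counter
from pathlib import Path

VOLUME_TYPES = ("dblock", "dindex", "dlist")


def volume_type(filename: str) -> str:
    """Return dblock, dindex, or dlist based on the dotted parts of the name."""
    for part in filename.split(".")[1:]:
        if part in VOLUME_TYPES:
            return part
    return "other"


def count_by_type(names) -> Counter:
    """Count file names per volume type."""
    return Counter(volume_type(name) for name in names)


def count_folder(folder: str) -> Counter:
    """Count the files directly inside a local folder per volume type."""
    return count_by_type(p.name for p in Path(folder).iterdir() if p.is_file())


# Hypothetical names, for illustration only.
sample = [
    "duplicati-20220101T000000Z.dlist.zip.aes",
    "duplicati-b0001.dblock.zip.aes",
    "duplicati-i0001.dindex.zip.aes",
    "duplicati-i0002.dindex.zip.aes",
]
print(count_by_type(sample))
```

For a real check, something like `count_folder("/path/to/downloaded/bucket")` would give the numbers to compare against what the storage reports.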

EDIT:

Or just wait for download to finish and see if restore works.

Based on my Mac running Ventura 13.0.1 with Duplicati 2.0.6 (current beta), those options are there just as you describe.

As noted previously in other threads, the “Local folder or drive” advanced options in Step 5 are missing on initial job creation but they do show up once the job has been saved.

I didn’t update the group. I did find the zip settings. I was thinking the sections were alphabetic and had skipped right past it. I did get the bug report to create, but it didn’t download (at least I couldn’t find where it stored it if it did), and I couldn’t seem to download it doing a right-click save as.

The bucket is still downloading… pretty close now, with only about 300 GB left. So I may just try a direct restore from the local files as a first step. I assume it will still take 24 hours or more to build the DB even though it will be local?

That’s probably the fastest simple path if it works, because this database is partial and temporary, but that also means it’s easier to lose messages, so please take some notes. Possibly you can also leave a tab at About → Show log → Live, switching to Verbose if you want a good look, and Warning the rest of the time which will also catch Error level (as will Verbose – levels are hierarchical, but you don’t want to get floods).
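What "levels are hierarchical" means for the live log can be sketched as a simple filter: selecting a level shows that level and everything more severe. Only the level names that appear in this thread are used here; Duplicati has more levels than these, and the function is made up for illustration.

```python
# Ordered from least to most severe; a subset of the real levels.
LEVELS = ["Verbose", "Information", "Warning", "Error"]


def is_shown(message_level: str, selected_level: str) -> bool:
    """True when a message at message_level appears with selected_level chosen."""
    return LEVELS.index(message_level) >= LEVELS.index(selected_level)


print(is_shown("Error", "Warning"))        # True: Warning also catches Error
print(is_shown("Information", "Warning"))  # False: filtered out, so no flood
print(is_shown("Error", "Verbose"))        # True: Verbose shows everything here
```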

I’m not going to guess at timing. I did one yesterday, and download time was far less than DB processing. Possibly having DB on an SSD will help if you have one, but procedure would get a bit more complicated.

There are other, harder ways to do this, such as giving it a job just to do its restore. The source is a dummy, as a backup is never done. The advantage is that the database is in a known spot if it needs inspecting or fiddling. Advanced option setup is slightly simpler, but the database build for a partial database takes some special work.

Shoutout to @ts678 and @gpatel-fr for sticking with me as I had been calmly freaking out. Don’t want to speak too soon, but I think I am in the home stretch.

Once I got the files locally, the db process looks like it took 2 hours to do a direct restore. It’s now “scanning for local blocks…” which looks like it’s going through all the files and I have been seeing things getting restored as it’s going through it. Maybe 20% done if I had a guess.

I did receive one error on the database rebuild saying it couldn’t find 1 dblock. I’m not sure how important that is or not. I have 75MB blocks. I looked for the file name on the bucket storage, and it was not found.

One behavior that I’m not sure is normal: I had a total of about 37k files in my bucket, but I was only able to download around 33k, as I received errors saying “no content” in the S3 logs. It appears all of those were dindex files. When I tried to download a single one directly from the UI of the storage, it found the file name, but the object wouldn’t download, citing something like “object doesn’t exist”. I’m wondering if during the revisions the dindex files were cleared but somehow their shells remained? Maybe that’s a storage thing.
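One way to confirm that only dindex files failed to download is to compare the bucket listing against the local folder. This is a minimal sketch, assuming both lists of file names are already available (for example from a listing export and a directory listing). The function names and sample names are hypothetical.

```python
def missing_locally(bucket_names, local_names):
    """Names present in the bucket listing but absent from the local download."""
    return sorted(set(bucket_names) - set(local_names))


def all_of_type(names, volume_type: str) -> bool:
    """True when every name contains the given type, e.g. '.dindex.'."""
    marker = f".{volume_type}."
    return all(marker in name for name in names)


# Hypothetical names, for illustration only.
bucket = [
    "duplicati-b1.dblock.zip.aes",
    "duplicati-i1.dindex.zip.aes",
    "duplicati-i2.dindex.zip.aes",
]
local = ["duplicati-b1.dblock.zip.aes", "duplicati-i1.dindex.zip.aes"]

missing = missing_locally(bucket, local)
print(missing)                         # ['duplicati-i2.dindex.zip.aes']
print(all_of_type(missing, "dindex"))  # True
```

Note that `all_of_type` also returns True for an empty list, so check that `missing` is not empty before drawing conclusions.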

So far the only thing I know of is missing one dblock. I can copy up that log, but it looks like so far so good!!

If you have exact messages, might as well post them, or at least record them.

I forget how well Direct restore does logs, so having live log at warning might be safest.

If the dblock has blocks that you need, I think you’ll get a warning or error about the issue…

Or maybe there was just an extra dindex file pointing to a missing dblock that wasn’t in use.

Missing dindex files aren’t a disaster because the dblocks can be read to know what’s inside.

This would probably sit at the 90% to 100% range on progress bar, but local files read faster.

Duplicati doesn’t empty dindex files. It can ask to delete the file, and the storage should do so.

Here’s the error from the first steps of the direct restore:

{

“MainOperation”: “Repair”,

“RecreateDatabaseResults”: {

“MainOperation”: “Repair”,

“ParsedResult”: “Success”,

“Version”: “2.0.6.3 (2.0.6.3_beta_2021-06-17)”,

“EndTime”: “2022-12-25T22:18:06.088798Z”,

“BeginTime”: “2022-12-25T20:32:51.90889Z”,

“Duration”: “01:45:14.1799080”,

“MessagesActualLength”: 0,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 0,

“Messages”: null,

“Warnings”: null,

“Errors”: null,

“BackendStatistics”: {

“RemoteCalls”: 17401,

“BytesUploaded”: 0,

“BytesDownloaded”: 2580529768,

“FilesUploaded”: 0,

“FilesDownloaded”: 17400,

“FilesDeleted”: 0,

“FoldersCreated”: 0,

“RetryAttempts”: 0,

“UnknownFileSize”: 0,

“UnknownFileCount”: 0,

“KnownFileCount”: 0,

“KnownFileSize”: 0,

“LastBackupDate”: “0001-01-01T00:00:00”,

“BackupListCount”: 0,

“TotalQuotaSpace”: 0,

“FreeQuotaSpace”: 0,

“AssignedQuotaSpace”: 0,

“ReportedQuotaError”: false,

“ReportedQuotaWarning”: false,

“MainOperation”: “Repair”,

“ParsedResult”: “Success”,

“Version”: “2.0.6.3 (2.0.6.3_beta_2021-06-17)”,

“EndTime”: “0001-01-01T00:00:00”,

“BeginTime”: “2022-12-25T20:32:51.858842Z”,

“Duration”: “00:00:00”,

“MessagesActualLength”: 0,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 0,

“Messages”: null,

“Warnings”: null,

“Errors”: null

}

},

“ParsedResult”: “Error”,

“Version”: “2.0.6.3 (2.0.6.3_beta_2021-06-17)”,

“EndTime”: “2022-12-25T22:18:32.396674Z”,

“BeginTime”: “2022-12-25T20:32:51.858834Z”,

“Duration”: “01:45:40.5378400”,

“MessagesActualLength”: 34807,

“WarningsActualLength”: 2,

“ErrorsActualLength”: 1,

“Messages”: [

“2022-12-25 15:32:51 -05 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Repair has started”,

“2022-12-25 15:32:51 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()”,

“2022-12-25 15:32:54 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (33.97 KB)”,

“2022-12-25 15:33:04 -05 - [Information-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-RebuildStarted]: Rebuild database started, downloading 17 filelists”,

“2022-12-25 15:33:04 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-20220623T140000Z.dlist.zip.aes (79.32 MB)”,

“2022-12-25 15:33:07 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-20220623T140000Z.dlist.zip.aes (79.32 MB)”,

“2022-12-25 15:33:07 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-20220714T220001Z.dlist.zip.aes (79.32 MB)”,

“2022-12-25 15:33:10 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-20220714T220001Z.dlist.zip.aes (79.32 MB)”,

“2022-12-25 15:35:25 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-20220715T100001Z.dlist.zip.aes (78.19 MB)”,

“2022-12-25 15:35:28 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-20220715T100001Z.dlist.zip.aes (78.19 MB)”,

“2022-12-25 15:36:56 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-20220715T180730Z.dlist.zip.aes (78.69 MB)”,

“2022-12-25 15:36:58 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-20220715T180730Z.dlist.zip.aes (78.69 MB)”,

“2022-12-25 15:38:31 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-20220722T141512Z.dlist.zip.aes (79.51 MB)”,

“2022-12-25 15:38:33 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-20220722T141512Z.dlist.zip.aes (79.51 MB)”,

“2022-12-25 15:40:00 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-20220726T140001Z.dlist.zip.aes (79.22 MB)”,

“2022-12-25 15:40:03 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-20220726T140001Z.dlist.zip.aes (79.22 MB)”,

“2022-12-25 15:41:48 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-20220812T180000Z.dlist.zip.aes (71.11 MB)”,

“2022-12-25 15:41:50 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-20220812T180000Z.dlist.zip.aes (71.11 MB)”,

“2022-12-25 15:43:39 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-20220812T220000Z.dlist.zip.aes (77.16 MB)”,

“2022-12-25 15:43:41 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-20220812T220000Z.dlist.zip.aes (77.16 MB)”

],

“Warnings”: [

“2022-12-25 16:59:20 -05 - [Warning-Duplicati.Library.Main.Database.LocalRecreateDatabase-MissingVolumesDetected]: Found 1 missing volumes; attempting to replace blocks from existing volumes”,

“2022-12-25 17:12:28 -05 - [Warning-Duplicati.Library.Main.Database.LocalRecreateDatabase-MissingVolumesDetected]: Found 1 missing volumes; attempting to replace blocks from existing volumes”

],

“Errors”: [

“2022-12-25 16:27:52 -05 - [Error-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-MissingFileDetected]: Remote file referenced as duplicati-b066fa89dd686481fbcd3c17b7814ed8f.dblock.zip.aes by duplicati-ibf17a583f84b48688e951c0f9d375be9.dindex.zip.aes, but not found in list, registering a missing remote file”

],

“BackendStatistics”: {

“RemoteCalls”: 17401,

“BytesUploaded”: 0,

“BytesDownloaded”: 2580529768,

“FilesUploaded”: 0,

“FilesDownloaded”: 17400,

“FilesDeleted”: 0,

“FoldersCreated”: 0,

“RetryAttempts”: 0,

“UnknownFileSize”: 0,

“UnknownFileCount”: 0,

“KnownFileCount”: 0,

“KnownFileSize”: 0,

“LastBackupDate”: “0001-01-01T00:00:00”,

“BackupListCount”: 0,

“TotalQuotaSpace”: 0,

“FreeQuotaSpace”: 0,

“AssignedQuotaSpace”: 0,

“ReportedQuotaError”: false,

“ReportedQuotaWarning”: false,

“MainOperation”: “Repair”,

“ParsedResult”: “Success”,

“Version”: “2.0.6.3 (2.0.6.3_beta_2021-06-17)”,

“EndTime”: “0001-01-01T00:00:00”,

“BeginTime”: “2022-12-25T20:32:51.858842Z”,

“Duration”: “00:00:00”,

“MessagesActualLength”: 0,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 0,

“Messages”: null,

“Warnings”: null,

“Errors”: null

}

}
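The one entry under Errors names both the missing dblock and the dindex that references it. To pull those names out of such a line without copying by hand, a regular expression is enough. This is a sketch that assumes the message wording stays exactly as in the log above; the function name is made up.

```python
import re

# Matches the wording of the MissingFileDetected message quoted in the log.
PATTERN = re.compile(
    r"Remote file referenced as (?P<dblock>\S+) by (?P<dindex>\S+), "
    r"but not found in list"
)


def parse_missing(message: str):
    """Return (dblock_name, dindex_name), or None if the line does not match."""
    match = PATTERN.search(message)
    if match is None:
        return None
    return match["dblock"], match["dindex"]


line = (
    "[Error-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-"
    "MissingFileDetected]: Remote file referenced as "
    "duplicati-b066fa89dd686481fbcd3c17b7814ed8f.dblock.zip.aes by "
    "duplicati-ibf17a583f84b48688e951c0f9d375be9.dindex.zip.aes, "
    "but not found in list, registering a missing remote file"
)
print(parse_missing(line))
```

The two names can then be searched for in the bucket listing and in the local download folder.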

I do have the warning type selected for the live log currently. It has been for a few hours or so, and nothing has popped up. Initially I didn’t have it up.

Weird, storage must not have followed directions then.

Appreciate all the help. Appears I was able to get everything back! It’s now running through a “verifying restored files…” step. I did receive a bunch of these errors in the warning feed for maybe 100 files.

message: Unterminated string. Expected delimiter: ". Path ‘[‘unix-ext:com.apple.ResourceFork’]’, line 1, position 102397.

When I spot checked a few on the restored files, they seemed fine, so maybe it’s something that could be ignored. So aside from that and the error I got posted above on the dblock, I think I’m good to go.

I appreciate all the help and sticking with me! I do have a follow-up question about supporting the newer versions of macOS, but I’m going to make a new thread for that.

I’m quite worried about any storage which can do this from its UI above, and Duplicati transfers below:

Duplicati.CommandLine.BackendTester.exe with a URL from Export As Command-line but edited to an empty folder can do a short test by default, and a longer one if you set the parameters to request one…

backup-test-samples or backup-test-percentage can do a larger sample of downloads than default does.

You can also try Internet search or whatever forum or support your storage uses, to look for such issues.