I started a database delete and recreate at 6 pm yesterday, after saving a copy of the AppData/Local/Duplicati folder. It finished with an error after 14 hours:

Recreated database has missing blocks and 1 broken filelists.

Consider using "list-broken-files" and "purge-broken-files" to purge broken data from the remote store and the database.

I then ran list-broken-files, with this result:

Finished!

0 : 11.09.2022 03:22:01 (15 match(es))

E:\Privatdaten\Backup_PrivatDatenVonRyzenLogs\Backup_PrivatDatenVonRyzen_2022_09_09_ 847.log (1,08 KB)

E:\Privatdaten\Backup_PrivatDatenVonRyzenLogs\Backup_PrivatDatenVonRyzen_2022_09_09_ 931.log (1,08 KB)

E:\Privatdaten\Backup_PrivatDatenVonRyzenLogs\Backup_PrivatDatenVonRyzen_2022_09_09_1919.log (1,08 KB)

E:\Privatdaten\thomas\PioProjects\RST2022_Testaufbau2\.pio\build\nodemcu-32s\idedata.json (28,95 KB)

E:\Privatdaten\thomas\PioProjects\RST2022_Testaufbau2\.vscode\c_cpp_properties.json (53,71 KB)

E:\Privatdaten\thomas\PioProjects\RST2022_Testaufbau2\.vscode\extensions.json (274 Bytes)

E:\Privatdaten\thomas\PioProjects\RST2022_Testaufbau2\.vscode\launch.json (1,80 KB)

E:\Privatdaten\thomas\Rhinoceros\Fenster_Einpressprofil\Fenster_Einpressprofil 001.3dm (142,35 KB)

E:\Privatdaten\thomas\Rhinoceros\Fenster_Einpressprofil\Fenster_Einpressprofil.3dm (176,84 KB)

E:\Privatdaten\thomas\Rhinoceros\Fenster_Einpressprofil\Fenster_Einpressprofil.3dmbak (139,64 KB)

E:\Privatdaten\thomas\Rhinoceros\Fenster_Einpressprofil\Fenster_Einpressprofil.3mf (16,83 KB)

E:\Privatdaten\thomas\Rhinoceros\Fenster_Einpressprofil\Fenster_Einpressprofil.stl (3,40 KB)

Return code: 0

OK, this is data created on Sep 9th, the day the problems started, so something must have gone wrong during that backup. Not nice that it led to this error, but anyway. …

Next I ran purge-broken-files, with this result:

Finished!

Uploading file (13,18 MB) ...

Deleting file duplicati-20220911T012201Z.dlist.zip.aes ...

Return code: 0

That worked. So far so good; everything should be in sync again.

Then I started the backup task:

Error while running Privatdaten

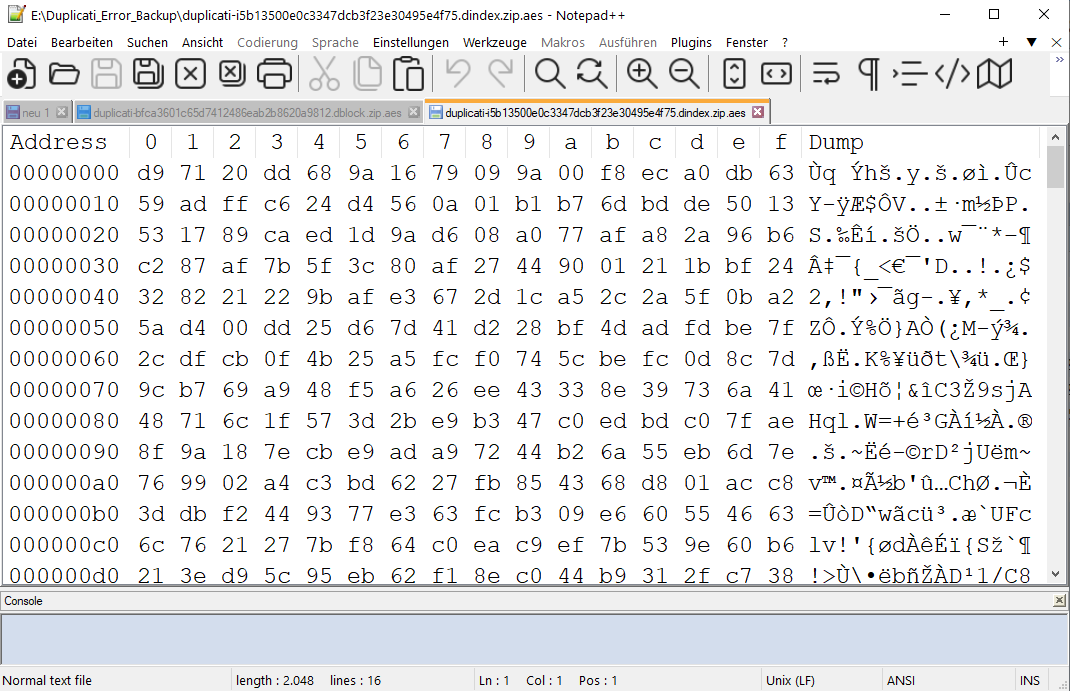

2022-09-17 13:11:02 +02 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-i5b13500e0c3347dcb3f23e30495e4f75.dindex.zip.aes

At this point I'm losing a bit of confidence in this really great program. Any suggestions?

Task log and full log:

Time

Start 2022-09-17 12:19:21

End 2022-09-17 13:11:19

Duration 00:51:58

Source files

Examined 113807 (83.38 GB)

Opened 89843 (80.63 GB)

Added 197 (71.38 MB)

Modified 27 (11.09 MB)

Deleted 1

Test phase

Compact phase

Delete phase (old backup versions)

Warnings 2

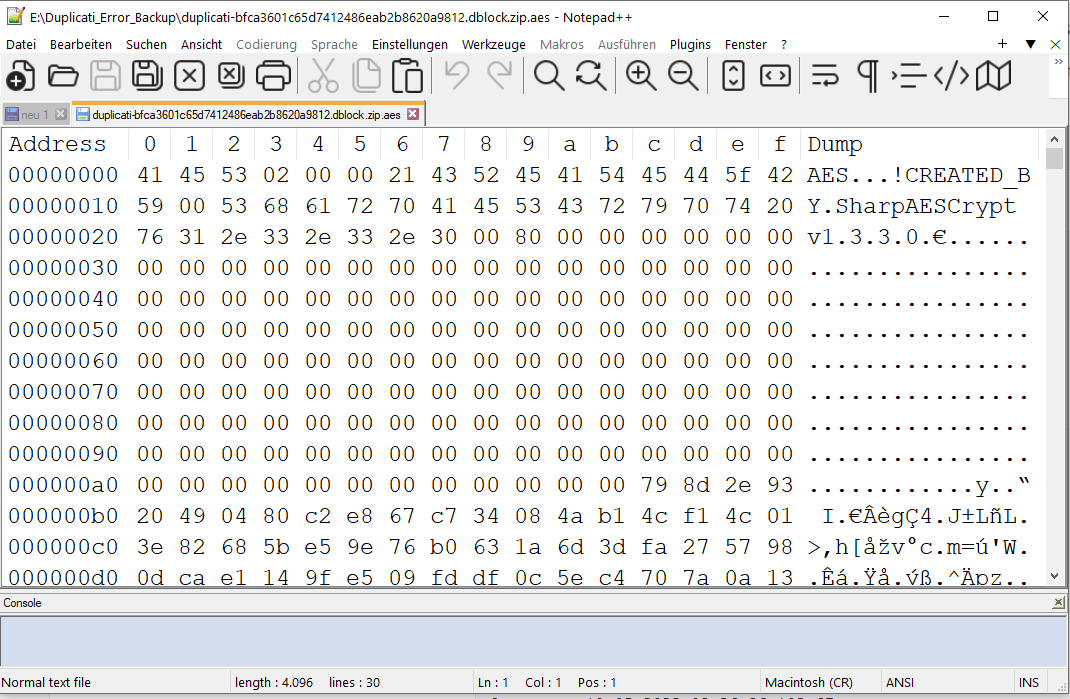

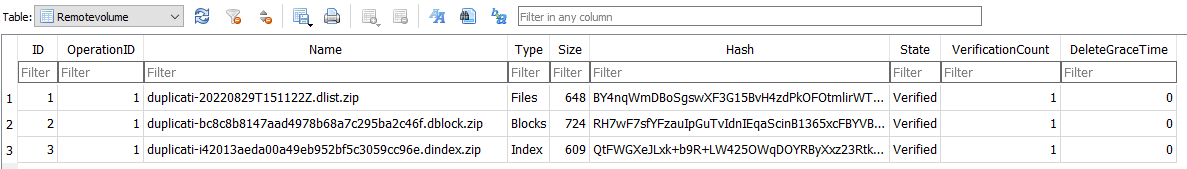

2022-09-17 12:20:26 +02 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingRemoteHash]: remote file duplicati-bfca3601c65d7412486eab2b8620a9812.dblock.zip.aes is listed as Verified with size 4096 but should be 24813, please verify the sha256 hash "nE+sklcKP2E10/H93nUmEao4mRacK228TG71znudLhQ="

2022-09-17 13:10:20 +02 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingRemoteHash]: remote file duplicati-bfca3601c65d7412486eab2b8620a9812.dblock.zip.aes is listed as Verified with size 4096 but should be 24813, please verify the sha256 hash "nE+sklcKP2E10/H93nUmEao4mRacK228TG71znudLhQ="
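The warning asks for the remote file's SHA-256 hash to be verified. A minimal sketch of doing that by hand, assuming the dblock file has first been downloaded from the backend to a local path. The hash in the warning is 44 base64 characters (32 bytes), which fits a base64-encoded SHA-256 digest, so that encoding is assumed here. `file_size_and_hash` and `matches_expected` are hypothetical helpers, not part of Duplicati.

```python
import base64
import hashlib
import os
from typing import Tuple

# Values copied from the MissingRemoteHash warning in the log.
EXPECTED_SIZE = 24813
EXPECTED_HASH = "nE+sklcKP2E10/H93nUmEao4mRacK228TG71znudLhQ="


def file_size_and_hash(path: str) -> Tuple[int, str]:
    """Return (size in bytes, base64-encoded SHA-256 digest) of a local file."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in 1 MiB chunks so large dblock files need not fit in memory.
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return os.path.getsize(path), base64.b64encode(digest.digest()).decode("ascii")


def matches_expected(path: str) -> bool:
    """True if the local copy has the size and hash the warning expects."""
    size, b64 = file_size_and_hash(path)
    print(f"size:   {size} (expected {EXPECTED_SIZE})")
    print(f"sha256: {b64} (expected {EXPECTED_HASH})")
    return size == EXPECTED_SIZE and b64 == EXPECTED_HASH
```

Usage, after downloading the file: `matches_expected("duplicati-bfca3601c65d7412486eab2b8620a9812.dblock.zip.aes")`. If the copy on the backend really is 4096 bytes, that would suggest the remote file itself is truncated or damaged rather than just mis-recorded in the database; the log alone does not say which.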

Errors 1

2022-09-17 13:11:02 +02 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-i5b13500e0c3347dcb3f23e30495e4f75.dindex.zip.aes
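In the full log's Test results, this failure is reported as "Invalid header marker" for the same dindex file. That message appears to come from the decryption step rejecting a file that does not start with the expected header. A small sketch for inspecting the downloaded file, assuming Duplicati's `.aes` files use the AES Crypt container format (the ASCII bytes "AES" followed by a version byte); `describe_header` is a hypothetical helper.

```python
# Assumption: .aes files use the AES Crypt container format, which begins
# with the three ASCII bytes "AES" followed by a version byte.
AESCRYPT_MAGIC = b"AES"


def describe_header(path: str) -> str:
    """Describe the first bytes of a file relative to the AES Crypt header."""
    with open(path, "rb") as f:
        head = f.read(5)
    if not head:
        return "empty file"
    if head[:3] == AESCRYPT_MAGIC:
        version = head[3] if len(head) > 3 else None
        return f"AES Crypt header present (version byte: {version})"
    if set(head) == {0}:
        # All-zero leading bytes often indicate a truncated or zero-filled file.
        return "starts with zero bytes, no AES Crypt header"
    return f"no AES Crypt header (first bytes: {head.hex()})"
```

Usage: `describe_header("duplicati-i5b13500e0c3347dcb3f23e30495e4f75.dindex.zip.aes")`. If the copy on the backend lacks the header, that would point to the remote file being damaged rather than the local database.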

Complete log

{

"DeletedFiles": 1,

"DeletedFolders": 0,

"ModifiedFiles": 27,

"ExaminedFiles": 113807,

"OpenedFiles": 89843,

"AddedFiles": 197,

"SizeOfModifiedFiles": 11624446,

"SizeOfAddedFiles": 74843242,

"SizeOfExaminedFiles": 89531491432,

"SizeOfOpenedFiles": 86576528170,

"NotProcessedFiles": 0,

"AddedFolders": 11,

"TooLargeFiles": 0,

"FilesWithError": 0,

"ModifiedFolders": 0,

"ModifiedSymlinks": 0,

"AddedSymlinks": 0,

"DeletedSymlinks": 0,

"PartialBackup": false,

"Dryrun": false,

"MainOperation": "Backup",

"CompactResults": {

"DeletedFileCount": 2,

"DownloadedFileCount": 0,

"UploadedFileCount": 0,

"DeletedFileSize": 2829,

"DownloadedFileSize": 0,

"UploadedFileSize": 0,

"Dryrun": false,

"VacuumResults": null,

"MainOperation": "Compact",

"ParsedResult": "Success",

"Version": "2.0.6.3 (2.0.6.3_beta_2021-06-17)",

"EndTime": "2022-09-17T11:09:45.3727646Z",

"BeginTime": "2022-09-17T11:09:37.5813042Z",

"Duration": "00:00:07.7914604",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null,

"BackendStatistics": {

"RemoteCalls": 26,

"BytesUploaded": 88059390,

"BytesDownloaded": 64067351,

"FilesUploaded": 6,

"FilesDownloaded": 3,

"FilesDeleted": 9,

"FoldersCreated": 0,

"RetryAttempts": 5,

"UnknownFileSize": 0,

"UnknownFileCount": 0,

"KnownFileCount": 2531,

"KnownFileSize": 63844284109,

"LastBackupDate": "2022-09-17T12:19:21+02:00",

"BackupListCount": 12,

"TotalQuotaSpace": 0,

"FreeQuotaSpace": 0,

"AssignedQuotaSpace": -1,

"ReportedQuotaError": false,

"ReportedQuotaWarning": false,

"MainOperation": "Backup",

"ParsedResult": "Success",

"Version": "2.0.6.3 (2.0.6.3_beta_2021-06-17)",

"EndTime": "0001-01-01T00:00:00",

"BeginTime": "2022-09-17T10:19:21.8485653Z",

"Duration": "00:00:00",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null

}

},

"VacuumResults": null,

"DeleteResults": {

"DeletedSetsActualLength": 7,

"DeletedSets": [

{

"Item1": 7,

"Item2": "2022-09-04T03:22:00+02:00"

},

{

"Item1": 6,

"Item2": "2022-09-05T03:22:00+02:00"

},

{

"Item1": 5,

"Item2": "2022-09-06T03:22:00+02:00"

},

{

"Item1": 4,

"Item2": "2022-09-07T03:22:00+02:00"

},

{

"Item1": 3,

"Item2": "2022-09-08T03:22:00+02:00"

},

{

"Item1": 2,

"Item2": "2022-09-09T03:22:00+02:00"

},

{

"Item1": 11,

"Item2": "2022-08-19T03:22:00+02:00"

}

],

"Dryrun": false,

"MainOperation": "Delete",

"CompactResults": {

"DeletedFileCount": 2,

"DownloadedFileCount": 0,

"UploadedFileCount": 0,

"DeletedFileSize": 2829,

"DownloadedFileSize": 0,

"UploadedFileSize": 0,

"Dryrun": false,

"VacuumResults": null,

"MainOperation": "Compact",

"ParsedResult": "Success",

"Version": "2.0.6.3 (2.0.6.3_beta_2021-06-17)",

"EndTime": "2022-09-17T11:09:45.3727646Z",

"BeginTime": "2022-09-17T11:09:37.5813042Z",

"Duration": "00:00:07.7914604",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null,

"BackendStatistics": {

"RemoteCalls": 26,

"BytesUploaded": 88059390,

"BytesDownloaded": 64067351,

"FilesUploaded": 6,

"FilesDownloaded": 3,

"FilesDeleted": 9,

"FoldersCreated": 0,

"RetryAttempts": 5,

"UnknownFileSize": 0,

"UnknownFileCount": 0,

"KnownFileCount": 2531,

"KnownFileSize": 63844284109,

"LastBackupDate": "2022-09-17T12:19:21+02:00",

"BackupListCount": 12,

"TotalQuotaSpace": 0,

"FreeQuotaSpace": 0,

"AssignedQuotaSpace": -1,

"ReportedQuotaError": false,

"ReportedQuotaWarning": false,

"MainOperation": "Backup",

"ParsedResult": "Success",

"Version": "2.0.6.3 (2.0.6.3_beta_2021-06-17)",

"EndTime": "0001-01-01T00:00:00",

"BeginTime": "2022-09-17T10:19:21.8485653Z",

"Duration": "00:00:00",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null

}

},

"ParsedResult": "Success",

"Version": "2.0.6.3 (2.0.6.3_beta_2021-06-17)",

"EndTime": "2022-09-17T11:09:45.3727646Z",

"BeginTime": "2022-09-17T11:09:23.8981129Z",

"Duration": "00:00:21.4746517",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null,

"BackendStatistics": {

"RemoteCalls": 26,

"BytesUploaded": 88059390,

"BytesDownloaded": 64067351,

"FilesUploaded": 6,

"FilesDownloaded": 3,

"FilesDeleted": 9,

"FoldersCreated": 0,

"RetryAttempts": 5,

"UnknownFileSize": 0,

"UnknownFileCount": 0,

"KnownFileCount": 2531,

"KnownFileSize": 63844284109,

"LastBackupDate": "2022-09-17T12:19:21+02:00",

"BackupListCount": 12,

"TotalQuotaSpace": 0,

"FreeQuotaSpace": 0,

"AssignedQuotaSpace": -1,

"ReportedQuotaError": false,

"ReportedQuotaWarning": false,

"MainOperation": "Backup",

"ParsedResult": "Success",

"Version": "2.0.6.3 (2.0.6.3_beta_2021-06-17)",

"EndTime": "0001-01-01T00:00:00",

"BeginTime": "2022-09-17T10:19:21.8485653Z",

"Duration": "00:00:00",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null

}

},

"RepairResults": null,

"TestResults": {

"MainOperation": "Test",

"VerificationsActualLength": 4,

"Verifications": [

{

"Key": "duplicati-i5b13500e0c3347dcb3f23e30495e4f75.dindex.zip.aes",

"Value": [

{

"Key": "Error",

"Value": "Invalid header marker"

}

]

},

{

"Key": "duplicati-20220227T012914Z.dlist.zip.aes",

"Value": []

},

{

"Key": "duplicati-iac00f557df8d4f8f85e8674c5a265f51.dindex.zip.aes",

"Value": []

},

{

"Key": "duplicati-b01650f97bcfa471d87fcd24ab6f928b1.dblock.zip.aes",

"Value": []

}

],

"ParsedResult": "Success",

"Version": "2.0.6.3 (2.0.6.3_beta_2021-06-17)",

"EndTime": "2022-09-17T11:11:19.6095205Z",

"BeginTime": "2022-09-17T11:10:20.1618168Z",

"Duration": "00:00:59.4477037",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null,

"BackendStatistics": {

"RemoteCalls": 26,

"BytesUploaded": 88059390,

"BytesDownloaded": 64067351,

"FilesUploaded": 6,

"FilesDownloaded": 3,

"FilesDeleted": 9,

"FoldersCreated": 0,

"RetryAttempts": 5,

"UnknownFileSize": 0,

"UnknownFileCount": 0,

"KnownFileCount": 2531,

"KnownFileSize": 63844284109,

"LastBackupDate": "2022-09-17T12:19:21+02:00",

"BackupListCount": 12,

"TotalQuotaSpace": 0,

"FreeQuotaSpace": 0,

"AssignedQuotaSpace": -1,

"ReportedQuotaError": false,

"ReportedQuotaWarning": false,

"MainOperation": "Backup",

"ParsedResult": "Success",

"Version": "2.0.6.3 (2.0.6.3_beta_2021-06-17)",

"EndTime": "0001-01-01T00:00:00",

"BeginTime": "2022-09-17T10:19:21.8485653Z",

"Duration": "00:00:00",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null

}

},

"ParsedResult": "Error",

"Version": "2.0.6.3 (2.0.6.3_beta_2021-06-17)",

"EndTime": "2022-09-17T11:11:19.6251332Z",

"BeginTime": "2022-09-17T10:19:21.8485653Z",

"Duration": "00:51:57.7765679",

"MessagesActualLength": 66,

"WarningsActualLength": 2,

"ErrorsActualLength": 1,

"Messages": [

"2022-09-17 12:19:21 +02 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: Die Operation Backup wurde gestartet",

"2022-09-17 12:19:43 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()",

"2022-09-17 12:19:44 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Retrying: ()",

"2022-09-17 12:19:54 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()",

"2022-09-17 12:20:26 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (2,47 KB)",

"2022-09-17 12:20:26 +02 - [Information-Duplicati.Library.Main.Operation.Backup.RecreateMissingIndexFiles-RecreateMissingIndexFile]: Re-creating missing index file for duplicati-b2a297cd9c6624c7891eb1aa8d731ce0e.dblock.zip.aes",

"2022-09-17 12:20:27 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-ibd7ac9340ae5429eb03f2e484b9ba345.dindex.zip.aes (781 Bytes)",

"2022-09-17 12:20:27 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-ibd7ac9340ae5429eb03f2e484b9ba345.dindex.zip.aes (781 Bytes)",

"2022-09-17 13:08:21 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b1a152d09aad54606a51091cdea438f4a.dblock.zip.aes (49,97 MB)",

"2022-09-17 13:08:28 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b7f2390bfa6db4877b2cb5c2c497c31d7.dblock.zip.aes (20,75 MB)",

"2022-09-17 13:08:57 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b7f2390bfa6db4877b2cb5c2c497c31d7.dblock.zip.aes (20,75 MB)",

"2022-09-17 13:08:58 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-ia4f18baa1e3e422796ba25d28b3585e7.dindex.zip.aes (14,58 KB)",

"2022-09-17 13:08:58 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-ia4f18baa1e3e422796ba25d28b3585e7.dindex.zip.aes (14,58 KB)",

"2022-09-17 13:09:12 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b1a152d09aad54606a51091cdea438f4a.dblock.zip.aes (49,97 MB)",

"2022-09-17 13:09:12 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-ia3fcfd67021349f998a855732cfcd620.dindex.zip.aes (56,48 KB)",

"2022-09-17 13:09:13 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-ia3fcfd67021349f998a855732cfcd620.dindex.zip.aes (56,48 KB)",

"2022-09-17 13:09:13 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-20220917T101921Z.dlist.zip.aes (13,19 MB)",

"2022-09-17 13:09:23 +02 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-20220917T101921Z.dlist.zip.aes (13,19 MB)",

"2022-09-17 13:09:23 +02 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-StartCheck]: Start checking if backups can be removed",

"2022-09-17 13:09:23 +02 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-FramesAndIntervals]: Time frames and intervals pairs: 7.00:00:00 / 1.00:00:00, 28.00:00:00 / 7.00:00:00, 365.00:00:00 / 31.00:00:00"

],

"Warnings": [

"2022-09-17 12:20:26 +02 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingRemoteHash]: remote file duplicati-bfca3601c65d7412486eab2b8620a9812.dblock.zip.aes is listed as Verified with size 4096 but should be 24813, please verify the sha256 hash \"nE+sklcKP2E10/H93nUmEao4mRacK228TG71znudLhQ=\"",

"2022-09-17 13:10:20 +02 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-MissingRemoteHash]: remote file duplicati-bfca3601c65d7412486eab2b8620a9812.dblock.zip.aes is listed as Verified with size 4096 but should be 24813, please verify the sha256 hash \"nE+sklcKP2E10/H93nUmEao4mRacK228TG71znudLhQ=\""

],

"Errors": [

"2022-09-17 13:11:02 +02 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-i5b13500e0c3347dcb3f23e30495e4f75.dindex.zip.aes"

],

"BackendStatistics": {

"RemoteCalls": 26,

"BytesUploaded": 88059390,

"BytesDownloaded": 64067351,

"FilesUploaded": 6,

"FilesDownloaded": 3,

"FilesDeleted": 9,

"FoldersCreated": 0,

"RetryAttempts": 5,

"UnknownFileSize": 0,

"UnknownFileCount": 0,

"KnownFileCount": 2531,

"KnownFileSize": 63844284109,

"LastBackupDate": "2022-09-17T12:19:21+02:00",

"BackupListCount": 12,

"TotalQuotaSpace": 0,

"FreeQuotaSpace": 0,

"AssignedQuotaSpace": -1,

"ReportedQuotaError": false,

"ReportedQuotaWarning": false,

"MainOperation": "Backup",

"ParsedResult": "Success",

"Version": "2.0.6.3 (2.0.6.3_beta_2021-06-17)",

"EndTime": "0001-01-01T00:00:00",

"BeginTime": "2022-09-17T10:19:21.8485653Z",

"Duration": "00:00:00",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null

}

}