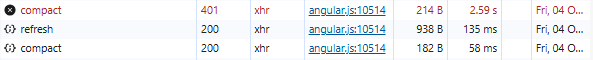

This new log confirms that the DataErrorException you previously got during compact is persistent:

"Errors": [

"2024-09-30 13:23:32 +02 - [Error-Duplicati.Library.Main.Operation.BackupHandler-FatalError]: Fatal error\r\nDataErrorException: Data Error",

"2024-09-30 13:23:32 +02 - [Error-Duplicati.Library.Main.Operation.BackupHandler-RollbackError]: Rollback error: Cannot access a disposed object.\r\nObject name: 'SQLiteConnection'.\r\nObjectDisposedException: Cannot access a disposed object.\r\nObject name: 'SQLiteConnection'.",

"2024-09-30 13:23:32 +02 - [Error-Duplicati.Library.Main.Controller-FailedOperation]: The operation Backup has failed with error: Data Error\r\nDataErrorException: Data Error"

],

The compact information is not available this time. I was hoping for more info rather than less.
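If it helps to pull the same fields out of a longer log, each entry above follows the pattern `timestamp - [Level-Component-MessageId]: message`. Below is a minimal sketch of a hypothetical helper (not part of Duplicati). It assumes exactly that layout, inferred only from these lines, and also lists the exception type names found in the message text.

```python
import re
from typing import List, NamedTuple, Optional

# Layout inferred from the log lines quoted above (an assumption, not a spec):
#   "<date> <time> <utc offset> - [<Level>-<Component>-<MessageId>]: <message>"
LOG_RE = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2} [+-]\d{2}(?::?\d{2})?)"
    r" - \[(?P<level>[A-Za-z]+)-(?P<component>[\w.]+)-(?P<msgid>\w+)\]: "
    r"(?P<message>.*)$",
    re.DOTALL,
)

# An exception type name at the start of a line, e.g. "DataErrorException: Data Error".
EXC_RE = re.compile(r"^(\w+Exception):", re.MULTILINE)


class LogEntry(NamedTuple):
    timestamp: str
    level: str
    component: str
    message_id: str
    message: str


def parse_entry(line: str) -> Optional[LogEntry]:
    """Split one log entry into its fields; return None if it doesn't match."""
    m = LOG_RE.match(line)
    if m is None:
        return None
    return LogEntry(m["timestamp"], m["level"], m["component"], m["msgid"], m["message"])


def exception_types(message: str) -> List[str]:
    """List exception type names, treating escaped "\\r\\n" sequences as line breaks."""
    text = message.replace("\\r\\n", "\n").replace("\r\n", "\n")
    return EXC_RE.findall(text)


if __name__ == "__main__":
    sample = (
        "2024-09-30 13:23:32 +02 - [Error-Duplicati.Library.Main.Operation.BackupHandler-FatalError]: "
        "Fatal error\\r\\nDataErrorException: Data Error"
    )
    entry = parse_entry(sample)
    print(entry.level, entry.component, entry.message_id, exception_types(entry.message))
```

Matching on the bracketed `Level-Component-MessageId` part rather than on free text makes it easy to group entries such as `FatalError` and `RollbackError` from the same `BackupHandler` component.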

One interesting thing is the warnings. Are you using VSS shadow copy (snapshot-policy), or is

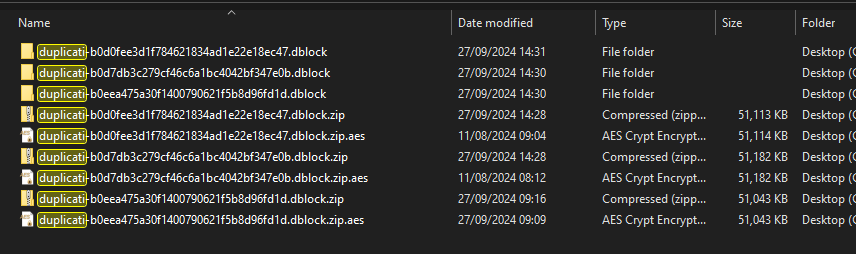

"2024-09-30 09:57:33 +02 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: C:\Users\administrator.MYDOMAIN\Desktop\duplicati-b0d7db3c279cf46c6a1bc4042bf347e0b.dblock\Xh_6jfv4J415W33JnRUHyj04MKaKlS0vX5Wt4EJAME0=\r\nIOException: A device which does not exist was specified. : '\\?\GLOBALROOT\Device\HarddiskVolumeShadowCopy13\\Users\administrator.MYDOMAIN\Desktop\duplicati-b0d7db3c279cf46c6a1bc4042bf347e0b.dblock\Xh_6jfv4J415W33JnRUHyj04MKaKlS0vX5Wt4EJAME0='",

"2024-09-30 09:57:33 +02 - [Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: C:\Users\administrator.MYDOMAIN\Desktop\duplicati-b0d7db3c279cf46c6a1bc4042bf347e0b.dblock\xi0RJGCWWeeqjb8ofJaaEBju1NUUMlBMvQ38n63IiRM=\r\nIOException: A device which does not exist was specified. : '\\?\GLOBALROOT\Device\HarddiskVolumeShadowCopy13\\Users\administrator.MYDOMAIN\Desktop\duplicati-b0d7db3c279cf46c6a1bc4042bf347e0b.dblock\xi0RJGCWWeeqjb8ofJaaEBju1NUUMlBMvQ38n63IiRM='"

(and more like these, which look like the block contents of a single dblock file) actually on a desktop?
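The `\\?\GLOBALROOT\Device\HarddiskVolumeShadowCopy13` prefix in those warnings is a VSS snapshot device path. To sort a long list of such warnings, a small heuristic check may help. The sketch below uses hypothetical helpers (not part of Duplicati) that flag paths read through a snapshot device, and file names shaped like block hashes inside a folder whose name ends in `.dblock`. It assumes the default SHA-256 block hash encoded as URL-safe Base64, which gives 44-character names like the ones above.

```python
import ntpath
import re

# Device-path prefix of a Volume Shadow Copy snapshot, as seen in the warnings above.
VSS_PREFIX = r"\\?\GLOBALROOT\Device\HarddiskVolumeShadowCopy"

# Assumption: block files are named by the default SHA-256 block hash in URL-safe
# Base64, so a 32-byte digest becomes 43 alphabet characters plus one "=" pad.
BLOCK_NAME_RE = re.compile(r"[A-Za-z0-9_-]{43}=")


def is_shadow_copy_path(path: str) -> bool:
    """True if the path is read through a VSS snapshot device (case-insensitive)."""
    return path.lower().startswith(VSS_PREFIX.lower())


def looks_like_extracted_block(path: str) -> bool:
    """Heuristic: a block-hash-shaped file name inside a folder whose name ends in .dblock."""
    name = ntpath.basename(path)
    parent = ntpath.basename(ntpath.dirname(path))
    return BLOCK_NAME_RE.fullmatch(name) is not None and parent.lower().endswith(".dblock")


if __name__ == "__main__":
    source = (r"C:\Users\administrator.MYDOMAIN\Desktop"
              r"\duplicati-b0d7db3c279cf46c6a1bc4042bf347e0b.dblock"
              r"\Xh_6jfv4J415W33JnRUHyj04MKaKlS0vX5Wt4EJAME0=")
    # Same file as seen through the snapshot device (drive letter replaced).
    snapshot = r"\\?\GLOBALROOT\Device\HarddiskVolumeShadowCopy13" + source[2:]
    print(is_shadow_copy_path(source), is_shadow_copy_path(snapshot))
    print(looks_like_extracted_block(source))
```

If most flagged paths pass both checks, that would suggest an unpacked dblock folder on the Desktop is part of the backup source and is being read through the snapshot.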