Hi - I’m looking to move my backup process from a local external drive to the cloud. I run a Linux machine and have used Back In Time (BIT) quite successfully for many years. The feature I really enjoy in BIT is the smart purge, which lets me keep incremental backups like this:

- 6 per week
- 1 per week for the last month (4)
- 1 per month for the last year (12)
- 1 per year for each previous year

This, of course, keeps my backup space to a minimum.

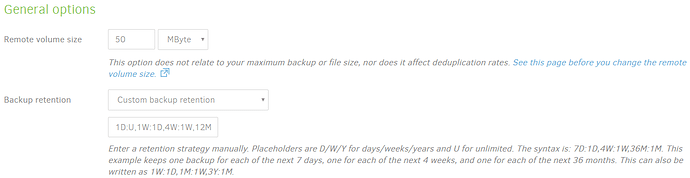
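To make the tiered schedule above concrete, here is a minimal Python sketch of how a smart purge could decide which backups to keep. The tier boundaries (7 days, 4 weeks, 365 days) and the rule "keep the newest backup in each day/week/month/year bucket" are assumptions for illustration; this is not Back In Time’s actual implementation, and `bucket_key` and `backups_to_keep` are hypothetical helpers.

```python
from datetime import datetime, timedelta

# Assumed tier boundaries (illustrative, not BIT's actual settings):
# ages under 7 days -> one per day, under 4 weeks -> one per week,
# under 365 days -> one per month, anything older -> one per year.
TIERS = [
    (timedelta(days=7), "day"),
    (timedelta(weeks=4), "week"),
    (timedelta(days=365), "month"),
]


def bucket_key(ts: datetime, now: datetime) -> tuple:
    """Return the retention bucket that a backup taken at `ts` falls into."""
    age = now - ts
    for limit, tier in TIERS:
        if age < limit:
            if tier == "day":
                return (tier, ts.date())
            if tier == "week":
                iso_year, iso_week, _ = ts.isocalendar()
                return (tier, iso_year, iso_week)
            return (tier, ts.year, ts.month)
    return ("year", ts.year)


def backups_to_keep(backups: list[datetime], now: datetime) -> list[datetime]:
    """Keep the newest backup in each bucket; all others are purge candidates."""
    newest: dict[tuple, datetime] = {}
    for ts in backups:
        key = bucket_key(ts, now)
        if key not in newest or ts > newest[key]:
            newest[key] = ts
    return sorted(newest.values())


# Example: one backup per day for the last 400 days.
now = datetime(2024, 6, 1)
backups = [now - timedelta(days=d) for d in range(400)]
kept = backups_to_keep(backups, now)
print(f"kept {len(kept)} of {len(backups)} backups")
```

With daily backups over 400 days, this keeps 24 of them: 7 daily, 4 weekly, 12 monthly and 1 yearly. Keeping the newest backup per bucket (rather than the oldest) is a design choice made here so the most recent state of each period survives.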

I see Duplicati has some automatic purge options, but choosing “older than X” would mean losing access to any file version that’s older than X but newer than the initial backup. (Yes, I know that a file that only existed for part of a month will also be lost with BIT.)

So I’m wondering whether I can do a manual version of this. For instance, at the start of each new month, could I batch-select all of the previous month’s incremental backups except the last one and delete them? That way, I’d have one incremental backup for each month. At the end of the first year, my backups would show 13 instances: the initial backup plus one incremental for each month.
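The monthly thinning rule described above can be sketched in Python as follows, assuming each backup version is identified only by its timestamp. `versions_to_delete` is a hypothetical helper: it only computes which versions the rule would remove, and it does not touch any backup or call Duplicati.

```python
from datetime import datetime, timedelta


def versions_to_delete(versions: list[datetime], month_start: datetime) -> list[datetime]:
    """Return the previous month's backups except its newest one.

    The initial (oldest) backup is never returned, so it is always kept.
    """
    if not versions:
        return []
    # Find the calendar month immediately before `month_start`.
    if month_start.month == 1:
        prev = (month_start.year - 1, 12)
    else:
        prev = (month_start.year, month_start.month - 1)
    initial = min(versions)
    in_prev = [ts for ts in versions if (ts.year, ts.month) == prev and ts != initial]
    if not in_prev:
        return []
    last_of_month = max(in_prev)
    return sorted(ts for ts in in_prev if ts != last_of_month)


# Example: a daily backup for every day of 2024, thinned at the start of
# each month from February 2024 through January 2025.
versions = [datetime(2024, 1, 1) + timedelta(days=d) for d in range(366)]
for year, month in [(2024, m) for m in range(2, 13)] + [(2025, 1)]:
    doomed = set(versions_to_delete(versions, datetime(year, month, 1)))
    versions = [v for v in versions if v not in doomed]
print(len(versions))  # 13: the initial backup plus one per month
```

After a year of this, the surviving versions are January 1 (the initial backup) and the last day of each month, matching the 13 instances counted above.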

It doesn’t seem like this would break any continuity between the remaining monthly incremental backups. Will this work?

Thanks!