I suppose what I’m really looking for is an ETA for completion.

I feel an ETA is difficult to provide because, for a backup operation, it depends on the file types and how fast they can be processed. The ETA would keep fluctuating; other factors include bandwidth, system performance, etc.

In this case, though, we’re only talking about rebuilding the database, so I think that’s just analysing the blocklist files and comparing them to the source filesystem.

At least some indicator of how many it has processed would be helpful.
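A count-based indicator is easy to report even when a reliable ETA isn't. Here is a minimal Python sketch (the `ProgressTracker` class and its methods are hypothetical, not part of Duplicati) that reports how many items have been processed plus a naive ETA. The ETA assumes the average rate so far holds for the rest of the job, which is exactly the assumption that makes ETAs fluctuate when file types, bandwidth, or system load change.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ProgressTracker:
    """Hypothetical progress counter for a rebuild step; not Duplicati code."""
    total: int  # e.g. number of blocklist files to analyse (assumed known up front)
    processed: int = 0
    start: float = field(default_factory=time.monotonic)

    def advance(self, n: int = 1) -> None:
        self.processed += n

    def eta_seconds(self, now: Optional[float] = None) -> Optional[float]:
        """Naive ETA: assumes the average rate so far holds for the remainder."""
        now = time.monotonic() if now is None else now
        elapsed = now - self.start
        if self.processed == 0 or elapsed <= 0:
            return None  # no data yet, so any ETA would be a pure guess
        rate = self.processed / elapsed
        return (self.total - self.processed) / rate

    def status(self, now: Optional[float] = None) -> str:
        pct = 100.0 * self.processed / self.total if self.total else 100.0
        eta = self.eta_seconds(now)
        eta_text = "unknown" if eta is None else f"~{eta:.0f} s"
        return f"Processed {self.processed}/{self.total} ({pct:.1f}%), ETA {eta_text}"
```

For example, 25 of 100 files done after 50 s gives "ETA ~150 s"; if the next 25 files are slower and finish at 200 s, the estimate jumps to about 200 s even though the job is half done. The processed count, on the other hand, only ever moves forward.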

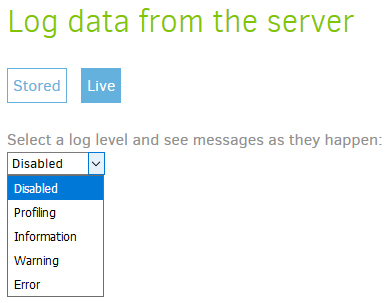

It sounds like you’re in the right place - does the droplist not show other options (such as Profiling)?

For a rebuild that could happen, but if all the files have already downloaded or the connection drops then reconnects between downloads then it shouldn’t be an issue.

The way I see it, if you cancel the repair you can be 100% sure it won’t work - letting it run improves those odds quite a bit.

As for an ETA, on a database repair that’s very hard to pin down. I personally haven’t had any long (to me) rebuild times but we’ve heard from other users who have waited a week or two for the repair to finish.

I don’t know that we’ve pinned down yet why there’s such a huge difference in repair times, so we can’t really say whether yours is likely to be fast or slow.

The “Profiling” view in the “Log data from the server” shown above should give you a step-by-step list of what is going on. If you’re not seeing anything other than “Disabled” try refreshing (F5 or Ctrl-F5) your browser window.

See, now that’s an interesting point… I noticed a number of times in the last 3 days or so that the droplists in Duplicati were not populating properly. I figured it was just a symptom of it being busy.

If you weren’t getting a “Missing XSRF Token” error then there’s definitely something else going on…

OK, so I tried the rebuild again, this time being careful not to touch anything else on the system (no apt update, etc.).

It ran for about 24 hours and appears to have stalled at this point yesterday:

Do I just leave it and hope it finishes?

Thanks for the detail - the “CommitRestoredBlocklist” message seems to be part of the process that is downloading block volumes from the destination so they can be scanned for what data to put into the recreated database.

Unfortunately, I’m unclear as to whether this is firing for the last time in a loop (meaning the code that runs next is where it’s apparently freezing) or looping again (meaning something in the loop is having a problem).

I think the loop itself downloads a file from the destination so it can be processed - so it’s possible there’s a problem downloading the next block for processing and the code just sits there waiting forever for the download to finish.
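If the stall really is a download that never completes, the usual defensive pattern is to put a timeout on each fetch so the loop fails loudly instead of waiting forever. The Python sketch below shows that pattern in general terms only; `fetch`, `process`, and the volume names are hypothetical stand-ins, and this is not how Duplicati’s own backend code is written.

```python
import concurrent.futures
import functools
import time
from typing import Callable, Iterable


def download_with_timeout(download: Callable[[], bytes], timeout_s: float) -> bytes:
    """Run a download callable; raise concurrent.futures.TimeoutError if it
    has not finished within timeout_s seconds."""
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(download)
    try:
        return future.result(timeout=timeout_s)
    finally:
        # A stalled worker thread is abandoned here, not killed.
        pool.shutdown(wait=False)


def process_volumes(
    names: Iterable[str],
    fetch: Callable[[str], bytes],          # hypothetical: downloads one volume
    process: Callable[[str, bytes], None],  # hypothetical: scans it into the DB
    timeout_s: float = 300.0,
) -> None:
    for name in names:
        try:
            data = download_with_timeout(functools.partial(fetch, name), timeout_s)
        except concurrent.futures.TimeoutError:
            # Surface the stall instead of sitting on one volume forever.
            raise RuntimeError(f"download of {name} stalled for {timeout_s}s") from None
        process(name, data)


if __name__ == "__main__":
    def stuck_fetch(name: str) -> bytes:
        time.sleep(2)  # simulates a transfer that doesn't finish in time
        return b""

    try:
        process_volumes(["vol-1"], stuck_fetch, lambda n, d: None, timeout_s=0.5)
    except RuntimeError as err:
        print(err)  # download of vol-1 stalled for 0.5s
```

The trade-off is choosing a timeout long enough for large volumes on a slow link; too short and healthy downloads get reported as stalls.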

If you go into the job “Show log…” menu and click on the “Remote” button, is there a log entry timestamped AFTER the “CommitRestoredBlocklist” message?

Oh, and does your repair “always” seem to die at this point in the process?

Hmmm… are you using Chrome? I know there’s an issue with a few versions where the CSS makes Chrome unhappy, so the log contents, while there, aren’t shown.

Firefox on Ubuntu 16.04.

I can SSH port forward and use IE if you think it will make a difference…

No need, FF doesn’t exhibit that issue so I guess you really don’t have anything in that log.

Unfortunately, unless @kenkendk has any other ideas, the only thing I can think of at this point is a custom build for you with step-by-step logging of that small piece of code so we can figure out where it’s really getting stuck.

ok, thanks.

I’m here as a willing guinea pig.

I’m wondering if the Windows Subsystem for Linux (WSL) could help here? I.e., if you need to seed from a Windows 10 machine a backup that will ultimately run from a Linux server, you could install Mono and Duplicati under WSL, seed the backup from there, then transfer the files to the Linux server. I am thinking of doing this to set up my very old QNAP+Debian server…

What’s the purpose of doing that - just “consistent” OS metadata / permissions in the backup versions?

Edit:

Never mind - my brain finally turned on and I realized you’re trying to avoid the path separator changes between the same source files as stored on the two different OSes.

I agree with Pectojin: this should work just fine as long as the full path (such as the username) doesn’t change, though you’ll likely get permission changes between the two locations (who knows what voodoo Microsoft is doing with WSL).
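To make the path point concrete, here is a small Python sketch using `pathlib`’s pure path classes. The example paths are made up; the only assumption about WSL is its default mounting of Windows drives under `/mnt/<drive letter>`. It shows that the same file has a different full path string natively on Windows, under WSL’s drive mount, and on a Linux server, which is why the seed should see the source data at the same path the Linux server will.

```python
from pathlib import PurePosixPath, PureWindowsPath


def to_wsl_mount_path(win_path: str) -> PurePosixPath:
    """Map a drive-letter path like C:\\x\\y to /mnt/c/x/y (WSL's default
    drive mount). UNC paths (\\\\server\\share) are not handled."""
    p = PureWindowsPath(win_path)
    drive = p.drive.rstrip(":").lower()  # "C:" -> "c"
    return PurePosixPath("/mnt", drive, *p.parts[1:])


if __name__ == "__main__":
    native = PureWindowsPath(r"C:\Users\alice\data\report.txt")  # made-up path
    wsl = to_wsl_mount_path(str(native))
    server = PurePosixPath("/home/alice/data/report.txt")  # made-up path
    print(native)  # C:\Users\alice\data\report.txt
    print(wsl)     # /mnt/c/Users/alice/data/report.txt
    print(server)  # /home/alice/data/report.txt
    # Three different strings for "the same" file: a backup keyed on full
    # paths would see them as different files.
```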

I’m willing to bet that WSL to Linux preseeding will work. The only real difference between Linux and a WSL system is that Microsoft created a fake Linux kernel to put GNU on top of.