I was going to mention this earlier but left it out for simplicity. With each backup on this machine I also back up a copy of the database. This probably isn’t the best approach: Duplicati runs as a service, so I’m essentially backing up the database while that same backup is writing to it. If that’s correct, VSS is only grabbing a partial database. Maybe I’m wrong.

I read up on those commands and tried LIST-BROKEN-FILES on the actual machine with the syntax below, but it kept coming up with the wrong database name. The name changed depending on the S3 folder location, but it was never the right one. I’m not sure how this mechanism works. Maybe I’m not pointing it at the database? **Now that I think about it, this may be the problem I’ve described: the wrong Windows location for the database.**

The computer being backed up is set up to run Duplicati as a service. In C:\ProgramData\Duplicati\Data I see “Duplicati-server.sqlite” and “10RandomCharacters.sqlite”. “Duplicati-server.sqlite” is small (about 52 KB); the file with the random-character name is the larger, actual job database.

When I run “Duplicati.CommandLine.exe list-broken-files https://bucketname.s3.wasabisys.com/folder”, it asks for the encryption key, which I enter, and then it says, “Database file does not exist: C:\Users\pat\AppData\Local\Duplicati\EBWOBJQYJE.sqlite”.

First, it’s the wrong location for the database, and second, it’s the wrong database name. The database should be in “C:\ProgramData\Duplicati\Data”. I’m not sure how to tell it that.
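A minimal sketch of the command-line route, assuming the Duplicati command line’s `--dbpath` option, which names the local database explicitly. Without it, the CLI appears to pick its own database under %LOCALAPPDATA%\Duplicati keyed on the storage URL, which would fit the name changing with the S3 folder. The install path, the `s3://` storage URL with its `s3-server-name` parameter, and the database file name are hypothetical placeholders (credential options are left out); the job’s Export > As Command-line output in the web UI should show the real values.

```python
import subprocess
from pathlib import PureWindowsPath

# Hypothetical placeholders: replace with the values from your own job,
# e.g. copied from its "Export > As Command-line" output.
CLI = r"C:\Program Files\Duplicati 2\Duplicati.CommandLine.exe"
STORAGE_URL = "s3://bucketname/folder?s3-server-name=s3.wasabisys.com"
DB_PATH = PureWindowsPath(r"C:\ProgramData\Duplicati\Data") / "10RandomCharacters.sqlite"


def build_list_broken_files_cmd(cli: str, storage_url: str, db_path: PureWindowsPath) -> list[str]:
    """Build a list-broken-files command that points at an explicit local database."""
    # --dbpath overrides the database the CLI would otherwise choose on its own.
    return [cli, "list-broken-files", storage_url, f"--dbpath={db_path}"]


def run_list_broken_files() -> int:
    """Run the command on the Windows machine; the CLI prompts for the passphrase."""
    cmd = build_list_broken_files_cmd(CLI, STORAGE_URL, DB_PATH)
    return subprocess.run(cmd).returncode
```

On the machine itself you would call `run_list_broken_files()`; keeping the builder separate just lets you check the exact command before running it.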

Major edit: OK, so it’s easier to run the LIST-BROKEN-FILES command from the GUI, since my command-line syntax was wayyyy off. I was able to run it on the actual machine and received the following output:

2022-10-05 13:28:29 -04 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation ListBrokenFiles has started,

2022-10-05 13:28:32 -04 - [Information-Duplicati.Library.Main.Operation.ListBrokenFilesHandler-NoBrokenFilesetsInDatabase]: No broken filesets found in database, checking for missing remote files,

2022-10-05 13:28:32 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: (),

2022-10-05 13:28:32 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (752 bytes),

2022-10-05 13:28:32 -04 - [Information-Duplicati.Library.Main.Operation.ListBrokenFilesHandler-NoMissingFilesFound]: Skipping operation because no files were found to be missing, and no filesets were recorded as broken.

Weird, since everything seems to be OK, but the issue still exists.

2nd edit:

You definitely cannot back up the Duplicati SQLite database as part of the same Duplicati job. The backed-up file is smaller than what’s actually on the computer once Duplicati is done. I assume this is because VSS copies it before Duplicati has finished the current backup (the one backing up the database).

Final edit:

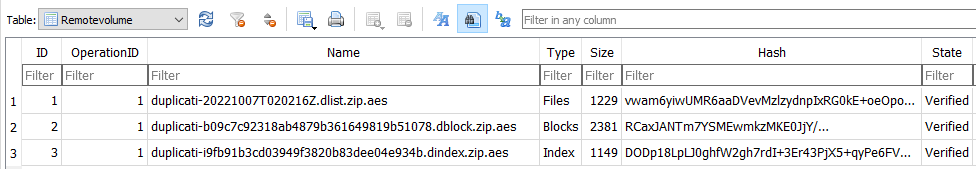

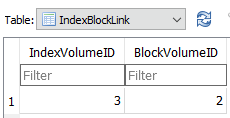

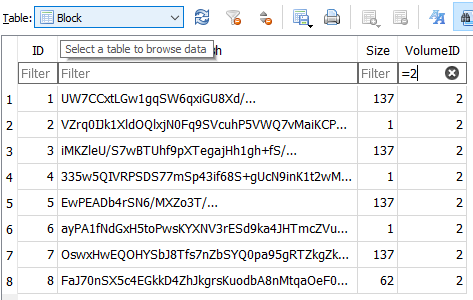

Problem resolved. Please note that Duplicati runs as a service in my example, so the folder location may differ in your setup. I was trying to back up the Duplicati SQLite database located in “C:\ProgramData\Duplicati\Data”. My thinking was that if anything happened to the actual dblock, dindex, and/or dlist files, I would at least have the database intact if I needed to run any commands against it.

My process was to include “C:\ProgramData\Duplicati\Data” in the backup of user files. However, when I imported that database into a virtual machine running Duplicati and pointed it at my S3 provider, it always came up one version short of what the actual machine showed. I would also see the error above about incorrectly referenced files if I tried to restore any of the files.
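One way to check the “one version short” symptom directly is to count the versions recorded in the live database and in the backed-up copy. This is a sketch, not a documented Duplicati interface: it assumes each backup version is a row in a table named `Fileset` in the job database, which should be confirmed (for example with an SQLite browser) before trusting the numbers. The file paths you pass in are your own; run it against copies, or while no job is running.

```python
import sqlite3
from contextlib import closing
from pathlib import Path


def count_versions(db_path: str) -> int:
    """Count backup versions in a Duplicati job database.

    ASSUMPTION: each version is stored as one row of a table named "Fileset".
    """
    # Open read-only via a URI so inspecting the file can never modify it,
    # and so a wrong path raises an error instead of creating an empty database.
    uri = Path(db_path).resolve().as_uri() + "?mode=ro"
    with closing(sqlite3.connect(uri, uri=True)) as conn:
        (count,) = conn.execute('SELECT COUNT(*) FROM "Fileset"').fetchone()
    return count


def version_gap(live_db: str, copied_db: str) -> int:
    """Return how many more versions the live database records than the copy."""
    return count_versions(live_db) - count_versions(copied_db)
```

A gap of 1 between the live database and the copy restored into the VM would match the symptom described above.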

It turns out you cannot back up the Duplicati database as part of the main backup job. My assumption is that VSS grabs a partial copy of the database before Duplicati can finish writing to it. When you then restore and point Duplicati at that database, it looks as if files are missing when in fact they aren’t.

Once TS678’s comment clicked, I realized what was happening. I ran a restore job on the actual machine with the issue (I wasn’t able to get to this machine directly at first) and was puzzled when the restore ran without incident.

This is why LIST-BROKEN-FILES kept coming back clean every time: there really was no issue. I just had a broken copy of the database file.

I’ve fixed this by running the main backup job and then, about an hour later, a separate job that backs up the database files in “C:\ProgramData\Duplicati\Data”. No more errors about missing files.
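For comparison, another way to get a clean copy of the database is SQLite’s online backup API, which Python exposes as `sqlite3.Connection.backup`. This is a swapped-in technique, not the approach described above and not a Duplicati feature: the idea would be to run it after the main job finishes (for example from a scheduled task) and let the separate job pick up the snapshot file it writes. The function name and any paths you feed it are hypothetical.

```python
import sqlite3
from contextlib import closing
from pathlib import Path


def snapshot_database(src_path: str, dest_path: str) -> None:
    """Write a consistent copy of an SQLite database to dest_path.

    Uses SQLite's online backup API. With the default pages=-1 the whole
    database is copied in a single step under a read lock, so the copy
    reflects one committed state rather than a half-written file. If another
    process is writing, backup() keeps retrying until it can read, so it may
    block while a job runs; running it after the job ends avoids that.
    """
    # Open the source read-only; a missing source raises instead of being created.
    src_uri = Path(src_path).resolve().as_uri() + "?mode=ro"
    with closing(sqlite3.connect(src_uri, uri=True)) as src, \
            closing(sqlite3.connect(dest_path)) as dest:
        src.backup(dest)
```

As a hypothetical usage, `snapshot_database(r"C:\ProgramData\Duplicati\Data\10RandomCharacters.sqlite", r"C:\DuplicatiDbSnapshot\10RandomCharacters.sqlite")` would write the snapshot into an existing staging folder that the separate job includes, so the copy no longer depends on the timing gap between jobs.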

I still see the error about the 13 missing files if I run a restore on the actual machine without using the database. In other words, if I point Duplicati directly at the S3 files and say “here ya go, do a restore,” I see the error about the 13 missing files. If I use the database, there’s no issue.