This was much more than requested, but since you’ve begun exploring, I’ll comment.

That’s surprising. Usually bad hash means damaged file, which means it won’t decrypt.

The vol file is the volume index of the dblock file that this dindex indexes. It’s JSON text.

They’ll all fail to decrypt because they’re not encrypted. Only the associated dblock file is.

This folder contains convenience copies of blocklist files which are in some dblock file too.

Database recreate can avoid reading any big dblock folder this way – just dlist and dindex.

Processing a large file describes how a multiblock file is represented via a list of its blocks.

A block is known by its SHA-256 hash. The blocklist file strings those 32-byte hashes together in a series.

The file name is the Base64 of the SHA-256 hash of file bytes. The technique is used a lot.
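As a rough illustration of the two points above, here is a minimal Python sketch of a blocklist built from 32-byte SHA-256 hashes, plus the Base64-of-SHA-256 naming. The function names and sample blocks are made up for the example, and it uses standard Base64. I have not checked whether names inside a real zip use a filename-safe variant.

```python
import base64
import hashlib


def block_hash(data: bytes) -> bytes:
    """Raw 32-byte SHA-256 digest of one block."""
    return hashlib.sha256(data).digest()


def build_blocklist(blocks: list[bytes]) -> bytes:
    """Concatenate the 32-byte hashes of the blocks, in order."""
    return b"".join(block_hash(b) for b in blocks)


def base64_name(data: bytes) -> str:
    """Base64 of the SHA-256 hash of the given bytes."""
    return base64.b64encode(hashlib.sha256(data).digest()).decode("ascii")


# Hypothetical sample data: three tiny "blocks" of one file.
blocks = [b"first block", b"second block", b"third block"]
blocklist = build_blocklist(blocks)

print(len(blocklist))          # 3 hashes * 32 bytes each
print(base64_name(blocklist))  # name derived from the blocklist bytes
```

The Hash value in duplicati-verification.json has the same shape: 44 Base64 characters for a 32-byte hash.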

Database rebuild gets into the external (destination) and internal (database) formats more.

Names should all match, but you might be confusing the dblock file with its index in dindex.

I opened a dindex.zip. In its vol folder is duplicati-bef7f8a54492b45ef9959fe75cb4b2b59.dblock.zip, which is the index for the dblock file of that name, which is also in the destination. That name is in the verification file:

"Name":"duplicati-bef7f8a54492b45ef9959fe75cb4b2b59.dblock.zip","Hash":"c/AC4voky1XOqGWgTMBh/deB2pqEH+BxAYe0tcbd/CE=","Size":752

Check the State value. The usual value in my short test is 3. If it’s something else, it might be a deleted file.

These are held in the database awhile, but shouldn’t actually be present at the destination.

I don’t think there’s any --dblock option. You put the file names (without any path portion) into the Commandline arguments box (on separate lines if you’re doing multiple at a time).

For any dindex file that failed the hash test yet somehow decrypted, its dblock name is in vol folder.
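If you would rather not open each zip by hand, something like the following Python sketch could list the dblock names. It assumes the file is an unencrypted (or already decrypted) zip and that the entries sit under a `vol/` prefix, which is how the vol folder looks to me. The function name is made up, and the demo builds a stand-in zip in memory rather than reading a real dindex.

```python
import io
import zipfile

VOL_PREFIX = "vol/"  # assumed entry prefix for the vol folder


def dblock_names_in_dindex(dindex_zip) -> list[str]:
    """Return the entry names found under vol/ in a dindex zip.

    dindex_zip may be a path or a file-like object.
    """
    with zipfile.ZipFile(dindex_zip) as zf:
        return [
            name[len(VOL_PREFIX):]
            for name in zf.namelist()
            if name.startswith(VOL_PREFIX) and len(name) > len(VOL_PREFIX)
        ]


# Demo with a stand-in zip (not a real dindex file).
demo = io.BytesIO()
with zipfile.ZipFile(demo, "w") as zf:
    zf.writestr("manifest", "{}")
    zf.writestr("vol/duplicati-bexample.dblock.zip", "{}")
demo.seek(0)
print(dblock_names_in_dindex(demo))
```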

Using the database, you find the dindex in Remotevolume table, look up its ID in IndexBlockLink as IndexVolumeID, and take the BlockVolumeID for that row back to Remotevolume to get a file Name.

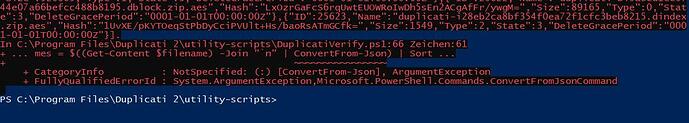
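The database lookup just described can be sketched as a single query. This is only an illustration using the table and column names mentioned above (Remotevolume with ID and Name, IndexBlockLink with IndexVolumeID and BlockVolumeID). The function name is made up, and I would run it against a copy of the job database, not the live one.

```python
import sqlite3

# Join dindex row -> IndexBlockLink -> dblock row, as described above.
QUERY = """
SELECT b.Name
FROM Remotevolume AS i
JOIN IndexBlockLink AS l ON l.IndexVolumeID = i.ID
JOIN Remotevolume AS b ON b.ID = l.BlockVolumeID
WHERE i.Name = ?
"""


def dblocks_for_dindex(db_path: str, dindex_name: str) -> list[str]:
    """Return the dblock file names linked to the given dindex file name."""
    con = sqlite3.connect(db_path)
    try:
        return [row[0] for row in con.execute(QUERY, (dindex_name,))]
    finally:
        con.close()
```

Call it with the path to the database copy and the dindex file name (no path portion). An empty list would mean no link row was found for that dindex.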

Did you try running the verification script? When I intentionally shortened a dindex file, it told me that

C:\>powershell -ExecutionPolicy Bypass -file "C:\Program Files\Duplicati 2\utility-scripts\DuplicatiVerify.ps1"

cmdlet DuplicatiVerify.ps1 at command pipeline position 1

Supply values for the following parameters:

FileOrDir: C:\ProgramData\Duplicati\duplicati-2.0.6.104_canary_2022-06-15\RUN\test 1

Verifying file: duplicati-verification.json

Verifying file duplicati-20230318T211945Z.dlist.zip

Verifying file duplicati-20230318T230243Z.dlist.zip

Verifying file duplicati-20230324T142351Z.dlist.zip

Verifying file duplicati-b0be1098d590f49ec8abf5ed48ff8c6a2.dblock.zip

Verifying file duplicati-b68cae76f5fb14adfa22ece3a0b21efa4.dblock.zip

Verifying file duplicati-bef7f8a54492b45ef9959fe75cb4b2b59.dblock.zip

Verifying file duplicati-i01af6a3a2aca4942b651e8052e733c6f.dindex.zip

Verifying file duplicati-i6917b5de06b3479abfa484d201785878.dindex.zip

Verifying file duplicati-i97990165a1864f1c9ff863261ba20ba2.dindex.zip

*** Hash check failed for file: duplicati-i97990165a1864f1c9ff863261ba20ba2.dindex.zip

Errors were found

so its answer is rather non-specific. Even though it can get the length, it only complains about hash.
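For a more specific answer, a check along these lines could report size mismatches separately from hash mismatches. This is my own sketch, not what DuplicatiVerify.ps1 does. It assumes duplicati-verification.json is a JSON array of objects with the Name, Hash and Size fields shown earlier, and that Hash is the Base64 of the file’s SHA-256. The function name is made up.

```python
import base64
import hashlib
import json
from pathlib import Path


def check_folder(folder: str,
                 verification_name: str = "duplicati-verification.json") -> list[str]:
    """Compare each listed file's size and hash with the recorded values."""
    root = Path(folder)
    # utf-8-sig tolerates a byte order mark if one is present.
    text = (root / verification_name).read_text(encoding="utf-8-sig")
    entries = json.loads(text)  # assumed: a list of objects

    problems = []
    for entry in entries:
        name = entry["Name"]
        path = root / name
        if not path.is_file():
            problems.append(f"{name}: missing")
            continue

        size = path.stat().st_size
        if size != entry["Size"]:
            problems.append(f"{name}: size {size}, expected {entry['Size']}")

        digest = hashlib.sha256()
        with path.open("rb") as f:
            for chunk in iter(lambda: f.read(1024 * 1024), b""):
                digest.update(chunk)
        actual = base64.b64encode(digest.digest()).decode("ascii")
        if actual != entry["Hash"]:
            problems.append(f"{name}: hash mismatch")
    return problems
```

Note that this sketch ignores State, so an entry for a deleted file would show up as missing.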

Being able to report errors suggests that it still knows about the files in Remotevolume table, which is probably more or less dumped into duplicati-verification.json. Does the verification tool give 14 errors?

If so, you could possibly just move on to hiding 14 dindex files (e.g. move them to a folder) and Repair.

A worrisome thing is that you were able to decrypt a dindex that gave a hash failure. That’s a surprise.

You didn’t give the length of any of the bad dindex files, but duplicati-verification.json would know them all.

The reason this matters is that if the problem is always truncation, there’s now a backup-time test for it.

Added check to detect partially written files on network drives, thanks @dkrahmer

but it’s only in the Canary releases so far. If the network drive has become unreliable, maybe use a different access method?

Truncation is commonly to a size that is a round number in binary (a multiple of some power of two). I think filesystems and SMB like binary. The calculator Windows supplies can do conversions, or if you want to tell me 14 actual file sizes, I can convert a few.
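If you prefer a script to the calculator, a small Python sketch like this shows a size in hex and the largest power of two that divides it. The function name is made up and the sample sizes are just examples (752 is the Size from the verification entry above, which is not a truncated file). A suspiciously round result, such as a multiple of 2**12 or more, would fit the truncation theory.

```python
def describe_size(size: int) -> str:
    """Show a file size in hex and the largest power of two dividing it."""
    if size <= 0:
        return f"{size}: not a positive size"
    low_bit = size & -size            # largest power of two dividing size
    exponent = low_bit.bit_length() - 1
    return f"{size} = {size:#x}, divisible by 2**{exponent} ({low_bit})"


# Example sizes only; substitute the actual sizes of the bad dindex files.
for s in (752, 65536, 1048576):
    print(describe_size(s))
```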

As a side note on verifying (assuming you can get the affected command to tell you what is affected after you give it the dblock names): no-local-blocks is needed to force Duplicati to go to the destination; otherwise it will use source blocks, if found.

Duplicati will attempt to use data from source files to minimize the amount of downloaded data. Use this option to skip this optimization and only use remote data.

Do you ever simulate disaster recovery with Direct restore from backup files? That creates a database; however, a somewhat more thorough test (because it does all versions) is to move your database aside and do Repair. Assuming it works fairly quickly (it would have trouble if a dindex is actually corrupted, because it would then have to read dblocks), you get your choice of putting your old database back (it has logs and such) or staying on the recreated DB.