https://github.com/duplicati/duplicati/blob/master/Duplicati/Library/Main/Operation/RestoreHandler.cs

is what gets run. The Logging.Log lines in that file show what gets logged at each level. The

shows the file being verified against the SHA-256 hash recorded at the time of backup. Can you see that line for your file?

This wouldn’t prove that backup damage hadn’t occurred, but database investigation could test that.

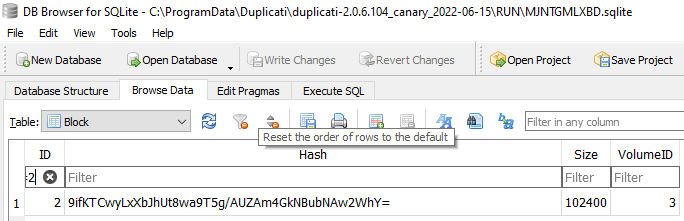
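If you want to check a restored file by hand, here is a minimal Python sketch of that kind of comparison. It assumes the recorded hash is a Base64-encoded SHA-256 digest (an assumption about how the value is stored), and the function names are made up for illustration. It is not Duplicati's own code.

```python
import base64
import hashlib


def file_sha256_base64(path, chunk_size=1024 * 1024):
    """Return the Base64-encoded SHA-256 digest of the file at `path`."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in chunks so large files do not have to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return base64.b64encode(digest.digest()).decode("ascii")


def matches_recorded_hash(path, recorded_hash):
    """True if the file's current hash equals the hash recorded at backup time."""
    return file_sha256_base64(path) == recorded_hash
```

A match only says the restored file equals what was recorded at backup time. It says nothing about whether the source file was already damaged when it was read.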

Here’s a backup of a 1024000 byte file of NULs at the default blocksize of 100 KiB (102400 bytes), so 10 blocks are expected.

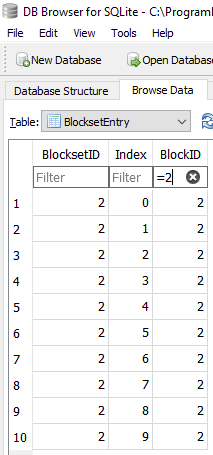
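The block count can be reproduced outside Duplicati. This is a rough Python sketch that cuts an all-NUL buffer into fixed-size blocks and hashes each one. The Base64 encoding of the SHA-256 digest is an assumption, and `block_hashes` is a made-up helper, not a Duplicati function.

```python
import base64
import hashlib

BLOCK_SIZE = 100 * 1024   # 100 KiB, the default blocksize mentioned above
FILE_SIZE = 1_024_000     # size of the all-NUL test file


def block_hashes(data, block_size=BLOCK_SIZE):
    """Split `data` into fixed-size blocks and hash each block."""
    return [
        base64.b64encode(
            hashlib.sha256(data[offset:offset + block_size]).digest()
        ).decode("ascii")
        for offset in range(0, len(data), block_size)
    ]


hashes = block_hashes(bytes(FILE_SIZE))  # bytes(n) is n NUL bytes
print(len(hashes))        # 10 blocks
print(len(set(hashes)))   # 1 distinct block
```

Ten blocks with a single distinct hash is the pattern to look for: the file is one block repeated ten times.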

Here’s the database view, and it’s easy to see that the file is made of 10 blocks which are the same.

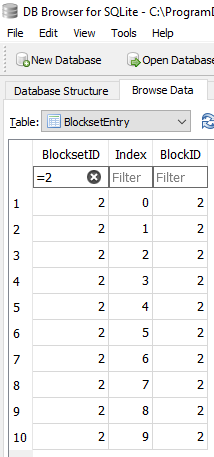

The BlocksetID represents the file, and the series of BlockID values represents the fixed-size blocks it is made of:

This could show whether a file really was backed up as (or the DB somehow turned it into) a run of the same block.
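Here is a sketch of that check in Python with `sqlite3`. It builds a small in-memory stand-in table rather than opening a real job database. The table and column names (`BlocksetEntry`, `BlocksetID`, `Index`, `BlockID`) are my assumption about the schema, and the BlocksetID values are invented. Work on a copy if you try the query against a real database.

```python
import sqlite3

db = sqlite3.connect(":memory:")
# Simplified stand-in for the real table; "Index" is quoted because it is an SQL keyword.
db.execute('CREATE TABLE BlocksetEntry (BlocksetID INTEGER, "Index" INTEGER, BlockID INTEGER)')

# Hypothetical data: blockset 3 is ten copies of block 2, blockset 4 is three different blocks.
entries = [(3, i, 2) for i in range(10)] + [(4, 0, 5), (4, 1, 6), (4, 2, 7)]
db.executemany("INSERT INTO BlocksetEntry VALUES (?, ?, ?)", entries)

# Multi-block blocksets in which every entry points at the same block.
suspects = db.execute("""
    SELECT BlocksetID, COUNT(*) AS Blocks, MIN(BlockID) AS RepeatedBlockID
    FROM BlocksetEntry
    GROUP BY BlocksetID
    HAVING COUNT(*) > 1 AND COUNT(DISTINCT BlockID) = 1
    ORDER BY BlocksetID
""").fetchall()

print(suspects)  # [(3, 10, 2)]
```

A legitimately repetitive file (such as the all-NUL test file) also shows up here, so a hit is a lead to follow up, not proof of damage.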

Knowing what BlockID 2 actually contains comes from knowing it was all NULs, so the hash for that is

and we could search the backup for other files that have NUL blocks in them by using column header:

In this test backup, of course, there is only one file, but if several of yours went NUL, they would show.
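Roughly, that search could look like the Python sketch below. It computes the hash of one all-NUL block (assuming Base64-encoded SHA-256 and the 100 KiB blocksize) and looks it up in a small in-memory stand-in database. The `Block`, `BlocksetEntry`, and `File` names and columns are assumptions about the schema, and the paths and IDs other than BlockID 2 are invented.

```python
import base64
import hashlib
import sqlite3

BLOCK_SIZE = 100 * 1024  # assumes the default 100 KiB blocksize
nul_hash = base64.b64encode(hashlib.sha256(bytes(BLOCK_SIZE)).digest()).decode("ascii")

db = sqlite3.connect(":memory:")
db.executescript("""
    CREATE TABLE Block (ID INTEGER PRIMARY KEY, Hash TEXT, Size INTEGER);
    CREATE TABLE BlocksetEntry (BlocksetID INTEGER, "Index" INTEGER, BlockID INTEGER);
    CREATE TABLE File (ID INTEGER PRIMARY KEY, Path TEXT, BlocksetID INTEGER);
""")

# Hypothetical rows: block 2 is the NUL block, block 3 is some other small block.
db.execute("INSERT INTO Block VALUES (2, ?, ?)", (nul_hash, BLOCK_SIZE))
db.execute("INSERT INTO Block VALUES (3, 'placeholder-hash', 5)")
db.executemany(
    "INSERT INTO BlocksetEntry VALUES (?, ?, ?)",
    [(3, i, 2) for i in range(10)] + [(4, 0, 3)],
)
db.executemany(
    "INSERT INTO File VALUES (?, ?, ?)",
    [(1, "/test/nul.bin", 3), (2, "/test/other.txt", 4)],
)

# Files that contain at least one all-NUL block, with the number of such blocks.
rows = db.execute("""
    SELECT File.Path, COUNT(*) AS NulBlocks
    FROM File
    JOIN BlocksetEntry ON BlocksetEntry.BlocksetID = File.BlocksetID
    JOIN Block ON Block.ID = BlocksetEntry.BlockID
    WHERE Block.Hash = ?
    GROUP BY File.Path
    ORDER BY File.Path
""", (nul_hash,)).fetchall()

print(rows)  # [('/test/nul.bin', 10)]
```

If your blocksize is not the default, the NUL-block hash will be different, so `BLOCK_SIZE` has to match the backup's setting.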

This behavior isn’t one I can recall having heard of (feel free to search), so something else is going on.

Whether you proceed with an investigation or a different restore is up to you. I can help with either one.