The backup was discontinued on March 8.

It started again, but it was also suspended on March 9th.

The logs below are in /usr/lib/duplicati.

/usr/lib/duplicati

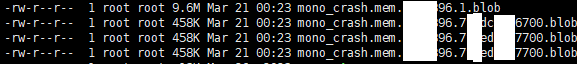

mono_crash.mem.~~~~.blob

I started the backup again…

What’s the problem?

When Mono crashes, it is a problem with Mono and Duplicati can only provide workarounds.

If you see no other error messages, I think it would require a deep dive into the crash files you found.

Since Mono is fairly stale at this point, I think the solution is to move forward to .NET instead of using Mono.

Do you have any description of what “discontinued” and “suspended” look like, e.g. in the web UI?

How do those two terms differ? Is this something like a daily scheduled backup that started again?

If it runs unattended, or the visible descriptions don’t say much, there are other options, e.g. making a log file.

Basically there’s no Duplicati information here, so not much can be said besides that mono has crashed.

Sometimes newer mono does better. What does mono --version say? Did you install as directed?

Possibly journalctl --unit=duplicati will show something interesting about the mono crashes.

EDIT:

Or maybe it was a manual start? The first sounds automatic and the second sounds manual (a good chance to look).

My Mono version is:

Mono JIT compiler version 6.8.0.105 (Debian 6.8.0.105+dfsg-2 Wed Feb 26 23:23:50 UTC 2020)

I saw the link you shared and installed it.

But I didn’t run this step:

sudo apt install apt-transport-https nano git-core software-properties-common dirmngr -y

journalctl --unit=duplicati

I checked the log with the command you gave me.

Should I try it like this?

sudo apt install apt-transport-https nano git-core software-properties-common dirmngr -y

And upgrade the mono version?

https://www.mono-project.com/download/stable/#download-lin-ubuntu

I’m really confused by what you saw and did, and in what order.

I linked to the Prerequisites section which gave the mono install.

You gave a link to a different section with an action I don’t know:

Do you mean you didn’t do that? Fine for now. Update mono first.

When? Was that your old situation, or after changes?

Regardless, splitting the top error line at 2257 (might vary) and searching Google for the left and right parts found a few reports. One was closed because the originator didn’t respond. One was closed because of an old version.

Another remains open, but by 2022 mono had already become stagnant. Maybe the latest mono will work for you.

If not, we probably shouldn’t expect a fix for mono, but there is a small chance of finding a workaround.

Is that a question or is that what you did? Is Ubuntu what you’re using? Duplicati has directions. Again:

Interestingly, the cited GitHub issue on mono was on FreeBSD. What OS exactly are you using for this?

OS: Ubuntu 20.04

I hadn’t done these Prerequisites.

So I’m proceeding as below and trying the backup again.

sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys 3FA7E0328081BFF6A14DA29AA6A19B38D3D831EF

echo "deb https://download.mono-project.com/repo/ubuntu stable-focal main" | sudo tee /etc/apt/sources.list.d/mono-official-stable.list

sudo apt update

sudo apt install mono-devel gtk-sharp2 libappindicator0.1-cil libmono-2.0-1

sudo apt install apt-transport-https nano git-core software-properties-common dirmngr -y

$ mono --version

Mono JIT compiler version 6.12.0.200

I could see in dmesg that there was an Out Of Memory kill.

52Gi was used for buffers and cache, so I emptied it with echo 1 > /proc/sys/vm/drop_caches and ran the backup again.

$ dmesg

[65354363.445777] Out of memory: Killed process 2661693 (mono-sgen) total-vm:134184716kB, anon-rss:63599468kB, file-rss:0kB, shmem-rss:4kB, UID:0 pgtables:269140kB oom_score_adj:0

[65354367.340391] oom_reaper: reaped process 2661693 (mono-sgen), now anon-rss:0kB, file-rss:0kB, shmem-rss:4kB
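The OOM-killer line reports sizes in kB (KiB), so converting them is a quick way to see how much memory mono-sgen actually held when it was killed. A minimal Python sketch; the regex and the function name `parse_oom_line` are illustrative assumptions, not part of any Duplicati or kernel tooling:

```python
import re

# The OOM-killer line from the dmesg output above, copied verbatim.
OOM_LINE = (
    "[65354363.445777] Out of memory: Killed process 2661693 (mono-sgen) "
    "total-vm:134184716kB, anon-rss:63599468kB, file-rss:0kB, shmem-rss:4kB, "
    "UID:0 pgtables:269140kB oom_score_adj:0"
)


def parse_oom_line(line: str) -> dict:
    """Return pid, process name, and every 'name:NNNkB' field converted to GiB."""
    proc = re.search(r"Killed process (\d+) \(([^)]+)\)", line)
    if proc is None:
        raise ValueError("not an OOM-killer 'Killed process' line")
    # Only fields ending in 'kB' are sizes; UID and oom_score_adj are skipped.
    sizes = {
        name: int(kb) / (1024 * 1024)  # KiB -> GiB
        for name, kb in re.findall(r"([\w-]+):(\d+)kB", line)
    }
    return {"pid": int(proc.group(1)), "name": proc.group(2), "gib": sizes}


info = parse_oom_line(OOM_LINE)
for field, gib in info["gib"].items():
    print(f"{info['name']} {field}: {gib:.2f} GiB")
```

On this line anon-rss works out to about 60.65 GiB on a machine whose free -h reports 62Gi total, so the mono process itself had taken nearly all of RAM. total-vm (about 128 GiB) is virtual address space, not memory actually in use.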

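$ free -h

| | total | used | free | shared | buff/cache | available |
|---|---|---|---|---|---|---|
| Mem: | 62Gi | 2.4Gi | 7.5Gi | 1.0Mi | 52Gi | 59Gi |
| Swap: | 6.9Gi | 987Mi | 5.9Gi | | | |

$ echo 1 > /proc/sys/vm/drop_caches

$ free -h

| | total | used | free | shared | buff/cache | available |
|---|---|---|---|---|---|---|
| Mem: | 62Gi | 2.5Gi | 58Gi | 1.0Mi | 2.0Gi | 59Gi |
| Swap: | 6.9Gi | 987Mi | 5.9Gi | | | |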

Maybe we should review your Duplicati configuration, and you could watch memory with top, ps, or similar tools to see whether it grows more than expected during the backup. Some growth is normal.

The top command also shows system memory and swap statistics, which might be helpful; however, if you happen to be an expert in the Linux OOM killer, feel free to interpret what it shows.

As for buff/cache and drop_caches, is that even relevant? Dropping didn’t change available.
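Putting the two free -h outputs side by side shows why drop_caches made no difference: the kernel’s available figure (MemAvailable) is an estimate of memory usable for new work without swapping, and it already counts most of buff/cache as reclaimable. A rough arithmetic sketch using the numbers above; comparing against "free + buff/cache" is an approximation chosen here for illustration, not the kernel’s exact formula:

```python
# Values copied from the two `free -h` outputs above, in Gi.
SNAPSHOTS = {
    "before drop_caches": {"free": 7.5, "buff_cache": 52.0, "available": 59.0},
    "after drop_caches": {"free": 58.0, "buff_cache": 2.0, "available": 59.0},
}


def free_plus_cache(row: dict) -> float:
    """Rough estimate of reclaimable memory: free pages plus buffers/page cache."""
    return row["free"] + row["buff_cache"]


for label, row in SNAPSHOTS.items():
    print(
        f"{label}: free + buff/cache = {free_plus_cache(row):.1f}Gi, "
        f"available = {row['available']:.1f}Gi"
    )
```

Both rows land near 59 to 60Gi, matching available staying at 59Gi: dropping caches only moved memory from the buff/cache column to the free column.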

Which is the trigger of the OOM killer, free or available memory in Linux?

It says available, but we may not know what the system memory situation was when the OOM kill happened.

You could certainly poll using a script and a loop, but polling is the kind of thing top already does.
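If a script is preferred over watching top, a minimal polling sketch could look like the following. It assumes Linux /proc, Python 3.8+ (the Ubuntu 20.04 default), and that Duplicati’s runtime appears under the process name mono-sgen, as in the dmesg line earlier; the function names are hypothetical. It logs both MemFree and MemAvailable, since which one the OOM killer cares about was the open question:

```python
import os
import time
from typing import Dict, Optional


def parse_kb_fields(text: str) -> Dict[str, int]:
    """Parse 'Key:   12345 kB' lines (format of /proc/meminfo and /proc/<pid>/status)."""
    fields = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        # Keep only "<number> kB" values; skip plain counters and text fields.
        if sep and len(parts) == 2 and parts[1] == "kB" and parts[0].isdigit():
            fields[key.strip()] = int(parts[0])
    return fields


def kb_to_gib(kb: int) -> float:
    return kb / (1024 * 1024)


def rss_by_name(comm: str = "mono-sgen", proc_root: str = "/proc") -> Dict[int, int]:
    """Return {pid: VmRSS in kB} for every process whose name (comm) equals `comm`."""
    result = {}
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # skip non-process entries such as meminfo or self
        try:
            with open(os.path.join(proc_root, entry, "comm")) as f:
                if f.read().strip() != comm:
                    continue
            with open(os.path.join(proc_root, entry, "status")) as f:
                rss = parse_kb_fields(f.read()).get("VmRSS")
        except OSError:
            continue  # the process exited between listdir() and open()
        if rss is not None:
            result[int(entry)] = rss
    return result


def poll(interval_s: float = 60.0, iterations: Optional[int] = None) -> None:
    """Print MemAvailable, MemFree and mono-sgen RSS (GiB) every interval_s seconds."""
    done = 0
    while iterations is None or done < iterations:
        with open("/proc/meminfo") as f:
            mem = parse_kb_fields(f.read())
        procs = ", ".join(
            f"pid {pid} {kb_to_gib(kb):.1f}" for pid, kb in rss_by_name().items()
        )
        print(
            f"{time.strftime('%Y-%m-%d %H:%M:%S')} "
            f"MemAvailable {kb_to_gib(mem['MemAvailable']):.1f} "
            f"MemFree {kb_to_gib(mem['MemFree']):.1f} "
            f"mono-sgen RSS [{procs or 'not running'}]",
            flush=True,
        )
        done += 1
        if iterations is None or done < iterations:
            time.sleep(interval_s)
```

For example, calling `poll(interval_s=60)` during the backup, with output redirected to a file, would leave a timestamped record up to the moment of an OOM kill.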

What is the Duplicati source size? Is it a physical drive on the system? What is the destination storage? What are the values of “Remote volume size” (Duplicati Options screen 5), dblock-size, and blocksize (if used)?

Source: NFS storage

Source size: about 45TB. When I start a backup, the existing progress is kept, so there were about 8TB left.

Destination: Backblaze B2

Remote volume size: 50MByte

No options

Yesterday, I changed /etc/default/duplicati and ran a backup:

MONO_GC_PARAMS="max-heap-size=24g"

But I had the same problem.

/var/log/syslog

/usr/lib/duplicati

That might be a record attempt. At the current 100 KB default blocksize, Duplicati slows down past 100 GB, because tracking all the blocks bogs down the SQL database (and inflates its size, which may matter here).
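A rough sketch of the arithmetic behind that remark, ignoring deduplication and compression: if 100 KB blocks start to slow things down around 100 GB, that is about a million tracked blocks, and a 45TB source at the same blocksize would mean hundreds of millions. Treating KB/GB/TB as binary units and using the million-block level as a target are assumptions made here for illustration; the helper names are hypothetical:

```python
import math

KIB = 1024
GIB = 1024 ** 3
TIB = 1024 ** 4


def block_count(source_bytes: int, blocksize_bytes: int) -> int:
    """Blocks to track for a source, ignoring deduplication and compression."""
    return math.ceil(source_bytes / blocksize_bytes)


def blocksize_for(source_bytes: int, target_blocks: int) -> int:
    """Smallest whole-KiB blocksize that keeps the block count <= target_blocks."""
    return math.ceil(source_bytes / target_blocks / KIB) * KIB


default_blocksize = 100 * KIB  # "current 100 KB default blocksize"
comfortable_blocks = block_count(100 * GIB, default_blocksize)  # "slows past 100 GB"
source = 45 * TIB  # "about 45TB", treated as TiB here

print(f"blocks for 100 GiB at 100 KiB: {comfortable_blocks:,}")
print(f"blocks for 45 TiB at 100 KiB:  {block_count(source, default_blocksize):,}")
print(f"blocksize for the same count:  {blocksize_for(source, comfortable_blocks) // KIB:,} KiB")
```

The resulting 46,080 KiB (45 MiB) simply keeps the block count at the level implied by "100 GB at 100 KB"; it is not an official recommendation, and it is close to the 50 MByte remote volume size reported in this thread, so both settings would likely need to be considered together.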

I’m not quite following. I thought there was a process crash. Do you mean the prior backup was resumed with 8 TB left to back up (which is still pretty impressive progress before that)?

I’m also still looking for the other monitoring requested in the previous post. Please provide it.

Screenshots of mono internals are slightly informative, but that’s not an area we work with.

EDIT:

How long did this backup take, and how much did it manage to put on B2? Fortunately, uploading to B2 is free, but fixing the blocksize and starting fresh will likely still take a while.