FYI, 2.0.3.9_canary_2018-06-30 doesn’t fix the problem.

I’ve run into this before even with prior versions of Duplicati (was using 2.0.3.5 for a while

before the latest updates to .7 and .8). I suspect for some people, prior versions were less picky but the newer versions are now picking up the permissions properly.

Every single time I found it was related to file/folder permissions. Duplicati saw the file/folder was there, but could not read anything from it since the permissions were set to “someone” else. In my case this error was common when file permissions were set so only (an example name) Susan and local system Admin had full access, but domain admin and Windows “network system” did not. Once I went in via local admin on the server the files were stored on, I changed permissions to allow domain admin full access (or at minimum read/write), then those errors went away.

I’ve run several of my smaller backups today under 2.0.3.9-canary and so far I have not seen this issue despite the files having very fragmented and specific permissions, but Duplicati also runs under the domain admin which should override lesser permissions.

The ownership / permission idea makes sense, especially for situations like @ethauvin’s where it happens every run.

But it doesn’t explain situations like mine where it only happens very rarely - even on files that haven’t changed in years.

Doesn’t explain it for me either, single user pc and the error happens on every single file in various backup sets, like a few others have reported.

And didn’t someone report the same problem on Linux?

I don’t use snapshots, wonder if that would make a difference. I’ll try it on a small backup and report here.

It took me a few weeks of digging through logs and info from Duplicati, and telling it to write the logs to a file which helped me track down the problematic files. In my case it was files from the late 90s and early 2000s where permissions were set by the user (via Citrix, linux servers, and other means), so only that specific user had permissions (and local admin). This meant the newer PCs, servers, and domain settings could not override the old permissions, so I had to log in to the local server. Once there I tracked down all the files and updated permissions to the more modern setup for local and domain admin access. After that no more errors relating to metadata.

Permissions are permissions regardless if it is Windows, Linux, DOS, or whatever, it is just how they are set differs among the OS. I would suggest looking at the error logs and check the permissions for the mentioned files versus what permissions Duplicati is set to run as. If Duplicati runs as “duplicati/duplicati” and the file permissions are set to something like 770 or even 755, then non-root users may not have full access to the files, thus the errors.

The permission idea make a lot more sense for you, @JonMikelV, than for the rest of us with thousands of files showing the warning, across multiple backups set, operating systems, etc.

Snapshots made no difference.

@JonMikelV, I am running Duplicati as a service, and I’m pretty sure @Chipmaster is too. Are you? Could that be the difference?

Yes, I’m running Duplicati as a service as well.

@mikaitech, how did you track down the specific files/folders affected? Did you look it up by the hash in the summary or were you writing to a log file?

I added small pull request Debug metadata by mnaiman · Pull Request #3312 · duplicati/duplicati · GitHub to show path affected. My suspicion is, that these are only folder paths because of length 137.

Cool, thanks!

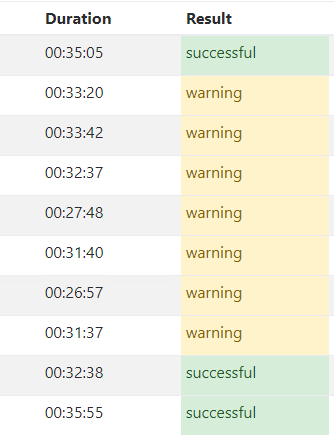

My experience with backuping Thunderbird Portable folder on Windows 7 with VSS:

Sometime is ok, sometimes warning, ratio may be 50:50

So in my case:

- No long paths

- No permissions changes/limitations

It looks like that coincidence is involved.

This is sample result for problematic backup job - no changes was made to backup job or OS

@JonMikelV I set it to write logs to a file, looked through the file to see what files was coming back with the various errors and 99% of them were due to permission errors. Permissions issues were caused by the items I mentioned previously:

with these specific files (that displayed the errors) permissions were set by the user (via Citrix, linux servers, and other means), so only that specific user had permissions (and local admin). This meant the newer PCs, servers, and domain settings could not override the old permissions, so I had to log in to the local server (Windows 2003). Once there I tracked down all the files and updated permissions to the more modern setup for local and domain admin access. After that no more errors relating to metadata.

I have same problems and no old permissions are involved.

Example of one I see with my latest backup, which I learned was not actually permissions related:

2018-07-06 00:21:32 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.MetadataPreProcess.FileEntry-TimestampReadFailed]: Failed to read timestamp on “Z:\ACCTG\HUD\zzzzz\Backup\mydoc\cdoc\doc.ico”,

2018-07-06 00:21:32 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.MetadataGenerator.Metadata-TimestampReadFailed]: Failed to read timestamp on “Z:\ACCTG\HUD\zzzzz\Backup\mydoc\cdoc\doc.ico”,

2018-07-06 00:21:32 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileBlockProcessor.FileEntry-UnexpextedMetadataLookup]: Metadata was reported as not changed, but still requires being added? Hash: c8M3XXXXVi/HJe3v29xxxxcGBMtVucpGZxbbM/SP5ZE=, Length: 106, ID: 382696

According to the actual log file, it gives more information:

2018-07-06 00:21:32 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.MetadataPreProcess.FileEntry-TimestampReadFailed]: Failed to read timestamp on “Z:\ACCTG\HUD\zzzzz\Backup\mydoc\cdoc\doc.ico”

System.ArgumentOutOfRangeException: Not a valid Win32 FileTime.

Parameter name: fileTime

-at System.DateTime.FromFileTimeUtc(Int64 fileTime)

-at Duplicati.Library.Snapshots.SystemIOWindows.FileGetLastWriteTimeUtc(String path)

-at Duplicati.Library.Main.Operation.Backup.MetadataPreProcess.<>c__DisplayClass2_0.<b__0>d.MoveNext()

In my case, the extended error message shows the reason:

Not a valid Win32 FileTime

In this case I checked the file, and it shows “Modified: Thursday, October 3, 24943 20:55:54”. Yes you read that right, something happened that set the year of the file to display 24943. Thus the reason for this error.

I could see this happening with virus infected files, other files with faulty timestamps, or any number of file related problems like this including permissions.

I scanned all my files and none have date which differs more than couple years from now. there needs to be yet another cause of issue.

This sounds like what is described here (where the “fix” was to manually adjust the timestamps to something more sensible):

I agree since if it was only the timestamp then the same files should be causing the error on every run, which isn’t the case for me, mr-fibble, etc.

What it does potentially tell us is that there are either multiple causes for that error or (more likely) that there are multiple failure points in the code that aren’t being caught until the metadata error check is reached.

Hopefully once your pull request reaches a canary version we’ll get enough additional detail to help narrow it down.

In that case I suggest going into the Duplicati settings and enable the log-file to something like C:\DuplicatiTemp\log.txt or /sda/home/DuplicatiTemp/log.txt so it logs the full errors, not just the truncated limited info shown on the web access page. That will tell you the full errors and why it is kicking back.

Yes, error is definitely bound to concurrent processing (c# async things). Multiple things are run in parallel in Duplicati which is awesome in terms of speed. Downside is, that is way harder to predict how code is behaving - reason of different causes.

More tests - 185 from 197 files in backup are affected.

I took same data as backup, same Duplicati, same OS, same backend, same DB and put to my virtual dev machine for debug. Guess what - backup went fine, no warnings.

Thanks for the tips to enable logging to a file. Didn’t realize that would have more detail.

After that, I see the following that looks a bit suspicious but I haven’t been able to glean a cause yet:

$ grep foobar.mp4

2018-07-09 19:48:14 -04 - [Verbose-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-IncludingPath]: Including path as no filters matched: /foobar.mp4

2018-07-09 19:48:14 -04 - [Verbose-Duplicati.Library.Main.Operation.Backup.FilePreFilterProcess.FileEntry-CheckFileForChanges]: Checking file for changes /foobar.mp4, new: False, timestamp changed: False, size changed: True, metadatachanged: False, 2/9/2016 1:05:41 AM vs 2/9/2016 1:05:41 AM

2018-07-09 19:50:16 -04 - [Verbose-Duplicati.Library.Main.Operation.Backup.FileBlockProcessor.FileEntry-FileMetadataChanged]: File has only metadata changes /foobar.mp4

In between the “Checking file for changes” and the “File has only metadata changes” there’s an associated “Metadata was reported as not changed, but still requires being added?” warning.

So it looks like it looks up the metadata for the file. Finds that everything is the same except the file size(!?). Determines that the metadata has not changed and then queries the database for the metadata id. That seems to fail to find a matching entry in the db, so it then reprocesses the file and finds that actually, nothing has changed.

Given this, I’m not quite sure what’s wrong. The file size mismatch is a clue perhaps, or it could be a red herring. Looking at the code and intuition would tell me that if the metadata hash mismatches then it would be updated in the database such that the next time it runs it would not mismatch. Unless some value in the metadata is uninitialized, random, or constantly changing, then it should be able to recover from these mismatches. Yet for me, every file reliably has these metadata hash mismatches.

I’m using xfs for a filesystem, and stat reports everything fine about these files:

$ stat /foobar.mp4

File: /foobar.mp4

Size: 2268649487 Blocks: 4430960 IO Block: 4096 regular file

Device: fd00h/64768d Inode: 8613937208 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 0/ root) Gid: ( 0/ root)

Access: 2018-07-09 19:48:14.675413810 -0400

Modify: 2016-02-08 20:05:41.318177515 -0500

Change: 2016-02-08 20:07:02.310180112 -0500

Birth: -

I suspect, the real hint here is that both myself and @ethauvin are using check-filetime-only. I see in code like: duplicati/MetadataPreProcess.cs at 4b9c0ab9a223334d52b0b9ad6c4fb0bbb9199f29 · duplicati/duplicati · GitHub that file size is set to -1 if that setting is selected. Yet the logic at duplicati/FilePreFilterProcess.cs at 914b17055ce244e2b336843c6afc6ffc8963d9c5 · duplicati/duplicati · GitHub seems to unconditionally check the file size of the given file and add it. And a -1 value according to the logic on line 71 would always indicate it has changed.