Well this is disconcerting!

I only have 175 versions right now on one machine and in the restore process it takes 45 each time I drill down into a deeper folder. Is this time a function of version count, # of files backed up, or both?

Just for fun I tried opening two web interfaces to Duplicati, one to the Live Log (Profiling) and the other to a backup job in which I clicked Restore.

The sum of the “took” times reported in the profiler was definitely less than the time it took for the browser to display the results, so there may be a third slowdown - the UI itself.

I know historically I’ve run into issues with browser performance when dealing with very large or complex select lists. One way to test this could be to try @mr-flibble’s idea and run the same thing in the command line to see if it spits out results any faster.

I had a similar approach to yours: backing up files every hour. I maxed out around 289 versions. At that time the backup time had increased by an hour (to 1h15m) and the time to render every click in the restore UI was around 29 minutes. I think the CPU usage of the service had also increased during backups.

At that point I decided that the 1 hour backup interval was not a practical configuration at this time. The volatile files are for work and if a restore was needed it would probably be a time sensitive issue so the prospect of waiting hours to find and restore a file was not acceptable. In the end I reverted to a once per day interval.

I wonder if it is possible to apply a retention policy in order to reduce the number of versions in the backup? Or is a “new” policy only applied to backups taken after the retention policy is defined?

It will be applied on old backups as well.

Just keep in mind that if the retention policy you defined leads to deleting a lot of backups, depending on your settings Duplicati might start compacting your backup files.

If your backup location is somewhere online and your download and upload speeds are rather slow, then this might take a “while”.

When is the policy executed? When starting the next backup? Or is it possible to run a command like “apply policy and compact now”?

--keep-time, --keep-versions and --retention-policy are part of the backup job configuration.

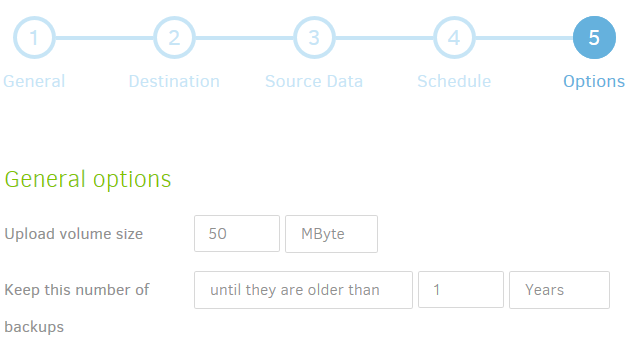

They are defined in step 5 of the Add/Edit Backup wizard.

--keep-time and --keep-versions can be supplied under “General Options”:

--retention-policy is an advanced option.

The --keep-time, --keep-versions and --retention-policy options are applied after completion of each backup job. Compacting is started automatically when needed. You can set thresholds for compacting with the advanced options --small-file-max-count and --small-file-size.

I have now run some tests on this backup set. The first backup was done in 2015.

I applied an acceptable retention policy and reduced the versions from 502 to 20. There are 130 000 files in the backup. The time for the initial listing on restore is now reduced from 40 min to 20 sec. Listing the next folder takes about the same time, 20 sec.
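
To put those numbers side by side, here is a rough back-of-the-envelope calculation in Python. It uses only the figures quoted above (502 and 20 versions, 40 min and 20 sec); the variable names are just for illustration, and two data points are not enough to draw firm conclusions about how listing time scales.

```python
# Figures taken from the post above; everything else is plain arithmetic.
versions_before, versions_after = 502, 20
listing_before_s, listing_after_s = 40 * 60, 20  # 40 min and 20 sec, in seconds

version_ratio = versions_before / versions_after   # how many times fewer versions
speedup = listing_before_s / listing_after_s       # how many times faster the listing

# Listing time divided by version count, before and after the retention run.
per_version_before = listing_before_s / versions_before
per_version_after = listing_after_s / versions_after

print(f"versions reduced by a factor of {version_ratio:.1f}")
print(f"listing time reduced by a factor of {speedup:.0f}")
print(f"seconds per version: {per_version_before:.2f} before, {per_version_after:.2f} after")
```

If these two measurements are representative, the listing time fell by a larger factor than the version count did, which would suggest the cost grows faster than linearly with the number of versions.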

Wow, that’s a serious improvement!

Looks to me that the local database is the bottleneck for large backups with many source files.

You significantly reduced the number of snapshots from 502 to 20. Did the space used at the backend also shrink a lot? If not much changed between 2 backup operations, deleting backups should not have much effect on the space used at the backend.

If only new data is added between 2 backups (for example if you back up a folder with pictures from your camera), deleting backups shouldn’t have any effect on the storage space used.

If browsing through folders in Duplicati improves in situations like these, I suppose it’s safe to say that queries to the local DB are causing the performance issues, not the number of blocks or the number of files backed up.

It had been several months since this backup set was last in use. The source data in the backup was 275 GB, and the backend held 255 GB / 502 versions.

After the run now with retention applied, the data in the backend has in fact increased. It is now 288 GB / 21 versions. The source data has decreased to 224 GB (51 GB was removed).

Thanks for doing (and logging) the testing!

I’m curious if you noticed whether or not any of the huge file browsing improvements due to fewer versions translated to faster backup times in any way.

Can you also share what the database size was before and what it is now?

I have looked at some old logs and tried to compare similar situations with the backup runs, and yes, I would say that there is a huge performance improvement after reducing the versions.

It should also be noted that there have been performance improvements in the software since then, so the comparison might not be entirely fair when it comes to the duration of the backup runs.

The size of the local database was 3.3 GB. I did not log exactly how much it changed after the “retention run”, but it did not change much. I did a “vacuum run” afterwards, and after that it shrank to 766 MB.
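
The pattern reported here (little change after deleting versions, a big drop after vacuuming) is typical SQLite behaviour: deleted rows free pages inside the file, but the file only shrinks when VACUUM rebuilds it. The sketch below shows this with Python's standard `sqlite3` module on a throwaway database. The `blocks` table and the `vacuum_demo` function are made up for the demonstration and have nothing to do with Duplicati's real schema.

```python
import os
import sqlite3
import tempfile


def vacuum_demo(rows=20_000):
    """Return the file size in bytes after insert, after delete, and after VACUUM."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "demo.sqlite")
        # isolation_level=None means autocommit; VACUUM cannot run inside a transaction.
        con = sqlite3.connect(path, isolation_level=None)
        try:
            # Make sure freed pages stay in the file until an explicit VACUUM.
            con.execute("PRAGMA auto_vacuum = NONE")
            con.execute("CREATE TABLE blocks (id INTEGER PRIMARY KEY, payload TEXT)")

            # Fill the table inside one explicit transaction for speed.
            con.execute("BEGIN")
            con.executemany(
                "INSERT INTO blocks (payload) VALUES (?)",
                (("x" * 200,) for _ in range(rows)),
            )
            con.execute("COMMIT")
            size_full = os.path.getsize(path)

            # Delete 90% of the rows; the pages are freed but the file keeps its size.
            con.execute("DELETE FROM blocks WHERE id % 10 != 0")
            size_after_delete = os.path.getsize(path)

            # VACUUM rewrites the database file without the free pages.
            con.execute("VACUUM")
            size_after_vacuum = os.path.getsize(path)
        finally:
            con.close()
    return size_full, size_after_delete, size_after_vacuum


if __name__ == "__main__":
    full, after_delete, after_vacuum = vacuum_demo()
    print(f"after insert: {full} bytes")
    print(f"after delete: {after_delete} bytes")
    print(f"after VACUUM: {after_vacuum} bytes")
```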

Doesn’t Duplicati automatically do that at the end of the job? I was watching the profiling live log and saw VACUUM executed at the end.

Probably you are in the Beta Update channel, v2.0.2.1 is the latest (and still only) beta version.

In v2.0.2.2 Canary, performing automatic VACUUM was dropped, because this could result in waiting for a long time after a backup has been completed and the local database is large.

Usually there is not much space released by the VACUUM operation, so starting from v2.0.2.2 this is a manual operation.

Yep I’m on the beta version. Thanks for clarifying!

Trying this with Duplicati version 2.0.6.3_beta_2021-06-17 results in an error “Setting multiple retention options (--keep-time, --retention-policy) is not permitted”.

Just for reference, I found another discussion stating that --retention-policy was changed in 2018 as follows:

--retention-policy now deletes backups that are outside of any time frame. No need to specify --keep-time as well. Help text was changed accordingly.

--retention-policy is now mutually exclusive with the other retention options --keep-time and --keep-versions. Help text was changed accordingly.

--retention-policy now complies with --allow-full-removal, deleting even the most recent backup if it’s outside of any time frame.

Refer to https://forum.duplicati.com/t/removing-files-from-backup/13014/3
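
To illustrate why a timeframe-based policy cuts the version count so sharply, here is a simplified Python model of the rule quoted above: keep at most one version per interval inside each time frame, and drop everything older than the longest time frame. This is a sketch, not Duplicati's actual code. The `thin_versions` function and its arguments are hypothetical, the policy is passed as `timedelta` pairs rather than parsed from an option string, and it assumes the newest version is always kept (that is, --allow-full-removal is not set).

```python
from datetime import datetime, timedelta


def thin_versions(versions, now, policy):
    """Return the versions a (timeframe, interval) policy would keep (simplified model).

    versions: iterable of datetime, one per backup version.
    policy:   list of (timeframe, interval) timedelta pairs.
    """
    ordered = sorted(versions)  # oldest first
    if not ordered:
        return []
    newest = ordered.pop()  # assumption: the newest version is always kept
    kept = [newest]
    for timeframe, interval in sorted(policy):  # shortest time frame first
        cutoff = now - timeframe
        in_frame = [v for v in ordered if v >= cutoff]
        ordered = [v for v in ordered if v < cutoff]  # left for the longer time frames
        last_kept = None
        for v in in_frame:  # oldest first within this time frame
            if last_kept is None or v - last_kept >= interval:
                kept.append(v)
                last_kept = v
    # Anything still in `ordered` is older than every time frame and is dropped.
    return sorted(kept)


# Three weeks of hourly backups, thinned to one per day for a week
# and one per week for four weeks.
now = datetime(2021, 6, 17, 12, 0)
hourly = [now - timedelta(hours=i) for i in range(504)]
policy = [
    (timedelta(days=7), timedelta(days=1)),
    (timedelta(days=28), timedelta(days=7)),
]
kept = thin_versions(hourly, now, policy)
print(len(hourly), "versions before,", len(kept), "after")
```

The policy in this example roughly corresponds to what an option value such as 1W:1D,4W:1W is meant to express; check the help text of your Duplicati version for the exact syntax and semantics.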

P.S. It is good practice to leave old threads open, so that such references can be added years later.

Yes, it changed. Don’t believe everything you see in old threads, because that happens sometimes.

I suppose that’s the counter-argument to the argument here that they be closed after long inactivity… Some forums seem to go that way, some leave them open, and there are pros and cons to each way.

I’m not aware of any problem referencing a closed thread in an open one. If you mean referencing from an ancient topic to bring it up to date, thank you for updating, as it may save someone from being misled.

There’s no specific goal to keep 60,000 posts in 6,000 topics updated though. It’s way too much work.