Unfortunately, this means that I killed the process and ran Duplicati 2 again!

Thanks for the advice, but at the point where this backup was stuck, I had no option left but to kill the process in Task Manager. (Neither "stop after file" nor "stop now" did anything for a long time, although at this point "a long time" means maybe 2 minutes.)

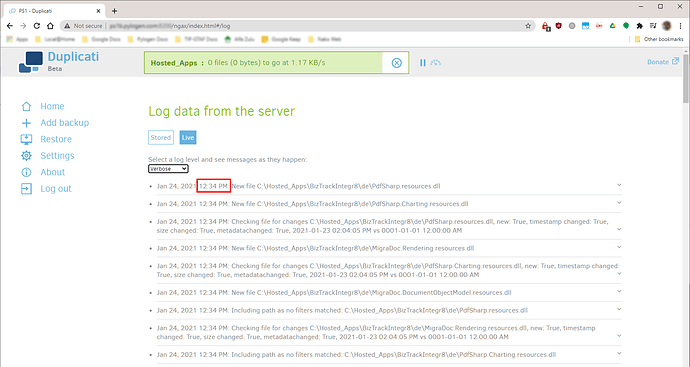

Thanks for this, I wasn’t sure which was the highest level of logging. When I track the “Live” logs, I use Verbose. I’d only heard of “Verbose”, so I suspected the other options were special cases. I’ll update my logging to “Profiling” then. I do have Process Monitor installed and use it a lot, but I can’t recall checking disk usage with it. Task Manager does not show disk usage, as this is in a VM.

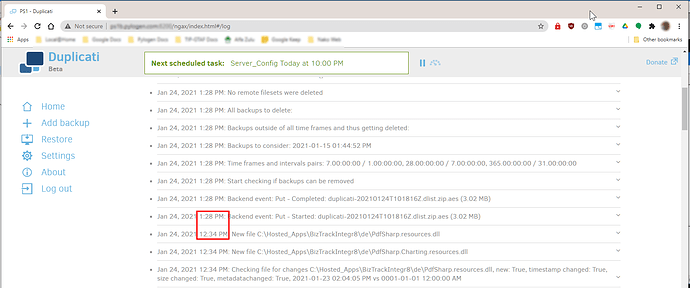

I do have this setting enabled, so it pauses for 10 minutes on startup before running backups. It ensures that when the server restarts, it doesn’t immediately start with backups. So the reason it is in a paused state is that this setting is on. I had actually forgotten about the setting, but once I saw on the About page that it was counting down, I remembered and checked it.

This backup is 335.89 MB in size, so not that big then.

I’ve enabled the advanced setting to send logs to a file in “Verbose” mode, and will see if I can spot anything else there. It is a 1.4 GB file, which is a bit slow to work through. I need to figure out how to tell Duplicati to start a new file after, say, 100 MB, or one each day.

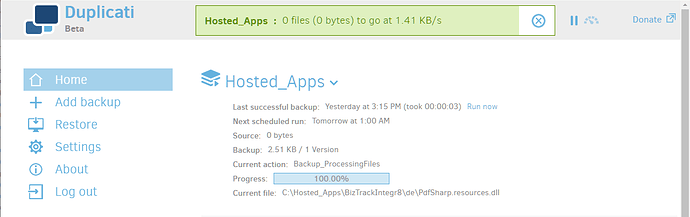
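In the meantime, a workaround for the 1.4 GB log file could be to split it into smaller pieces after the fact. This is only a rough sketch and not a Duplicati feature: the function name `split_log`, the part naming scheme and the 100 MB default are my own assumptions, and it should be run on a copy of the log or while Duplicati is not writing to it.

```python
from pathlib import Path


def split_log(src, max_bytes=100 * 1024 * 1024, out_dir=None):
    """Split a text log into numbered parts of at most about max_bytes each.

    Parts are broken only at line boundaries, so no log line is cut in half.
    A single line longer than max_bytes ends up alone in its own part.
    Returns the list of part paths (hypothetical naming: name.part001.ext).
    """
    src = Path(src)
    out_dir = Path(out_dir) if out_dir else src.parent
    out_dir.mkdir(parents=True, exist_ok=True)

    parts = []
    out = None
    written = 0
    with src.open("rb") as f:
        for line in f:
            # Start a new part for the first line, or when this line
            # would push the current part over the size limit.
            if out is None or (written > 0 and written + len(line) > max_bytes):
                if out is not None:
                    out.close()
                part = out_dir / f"{src.stem}.part{len(parts) + 1:03d}{src.suffix}"
                out = part.open("wb")
                parts.append(part)
                written = 0
            out.write(line)
            written += len(line)
    if out is not None:
        out.close()
    return parts
```

Calling it with the path of the log file (for example `split_log(r"C:\logs\duplicati.log")`, where the path is just a placeholder) would write `duplicati.part001.log`, `duplicati.part002.log` and so on next to the original, each small enough to open in a normal text editor.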

Edit: Also check my post just before yours for the final live log screenshot. The backup eventually completed. I also just realised that the backup contains a very large number of small text-based files, so everything “fits” inside a few backup “blocks” (or whatever they are called), which only upload once they reach 100 MB (I’ve set it that way). So the backup process may be hard at work on a huge number of small files without yet being ready to break off and “upload”. It also now seems that the process “hangs” at the end of the last file: AFTER it had iterated over all the files in the sources, it took very long to start uploading the changed data (only a 3.02 MB file).

Edit: OK, this log file shows the same things as the “live” log, since both were in Verbose mode.