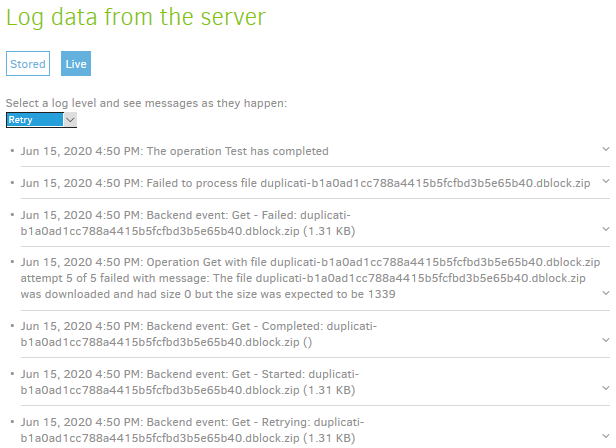

On a reattempt, I got a much more detailed error message:

{

"DeletedFiles": 0,

"DeletedFolders": 0,

"ModifiedFiles": 0,

"ExaminedFiles": 113427,

"OpenedFiles": 0,

"AddedFiles": 0,

"SizeOfModifiedFiles": 0,

"SizeOfAddedFiles": 0,

"SizeOfExaminedFiles": 996095516696,

"SizeOfOpenedFiles": 0,

"NotProcessedFiles": 0,

"AddedFolders": 0,

"TooLargeFiles": 0,

"FilesWithError": 0,

"ModifiedFolders": 0,

"ModifiedSymlinks": 0,

"AddedSymlinks": 0,

"DeletedSymlinks": 0,

"PartialBackup": false,

"Dryrun": false,

"MainOperation": "Backup",

"CompactResults": null,

"VacuumResults": null,

"DeleteResults": null,

"RepairResults": null,

"TestResults": {

"MainOperation": "Test",

"VerificationsActualLength": 5,

"Verifications": [

{

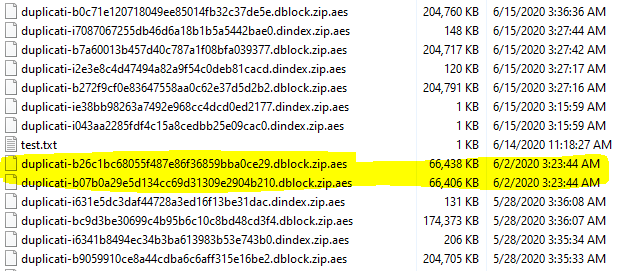

"Key": "duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes",

"Value": [

{

"Key": "Error",

"Value": "File length is invalid"

}

]

},

{

"Key": "duplicati-b26c1bc68055f487e86f36859bba0ce29.dblock.zip.aes",

"Value": [

{

"Key": "Error",

"Value": "File length is invalid"

}

]

},

{

"Key": "duplicati-20191118T100742Z.dlist.zip.aes",

"Value": []

},

{

"Key": "duplicati-i19fbd785e868445e8cd6790aa8f96dfd.dindex.zip.aes",

"Value": []

},

{

"Key": "duplicati-b23543a0128c84464b5a11fbf5605d646.dblock.zip.aes",

"Value": []

}

],

"ParsedResult": "Success",

"Version": "2.0.5.1 (2.0.5.1_beta_2020-01-18)",

"EndTime": "2020-06-15T18:23:04.078949Z",

"BeginTime": "2020-06-15T17:56:05.035603Z",

"Duration": "00:26:59.0433460",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null,

"BackendStatistics": {

"RemoteCalls": 15,

"BytesUploaded": 0,

"BytesDownloaded": 219938775,

"FilesUploaded": 0,

"FilesDownloaded": 3,

"FilesDeleted": 0,

"FoldersCreated": 0,

"RetryAttempts": 8,

"UnknownFileSize": 8,

"UnknownFileCount": 1,

"KnownFileCount": 8825,

"KnownFileSize": 877229435996,

"LastBackupDate": "2020-06-15T03:00:00-06:00",

"BackupListCount": 167,

"TotalQuotaSpace": 0,

"FreeQuotaSpace": 0,

"AssignedQuotaSpace": -1,

"ReportedQuotaError": false,

"ReportedQuotaWarning": false,

"MainOperation": "Backup",

"ParsedResult": "Success",

"Version": "2.0.5.1 (2.0.5.1_beta_2020-01-18)",

"EndTime": "0001-01-01T00:00:00",

"BeginTime": "2020-06-15T17:31:14.061439Z",

"Duration": "00:00:00",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null

}

},

"ParsedResult": "Error",

"Version": "2.0.5.1 (2.0.5.1_beta_2020-01-18)",

"EndTime": "2020-06-15T18:23:04.322979Z",

"BeginTime": "2020-06-15T17:31:14.061424Z",

"Duration": "00:51:50.2615550",

"MessagesActualLength": 33,

"WarningsActualLength": 0,

"ErrorsActualLength": 2,

"Messages": [

"2020-06-15 11:31:14 -06 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started",

"2020-06-15 11:48:37 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()",

"2020-06-15 11:48:51 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (8.62 KB)",

"2020-06-15 11:55:57 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()",

"2020-06-15 11:56:02 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (8.62 KB)",

"2020-06-15 11:56:05 -06 - [Information-Duplicati.Library.Main.Operation.TestHandler-MissingRemoteHash]: No hash or size recorded for duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes, performing full verification",

"2020-06-15 11:56:05 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes ()",

"2020-06-15 11:57:55 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Retrying: duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes ()",

"2020-06-15 11:58:06 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes ()",

"2020-06-15 12:02:18 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Retrying: duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes ()",

"2020-06-15 12:02:28 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes ()",

"2020-06-15 12:06:42 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Retrying: duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes ()",

"2020-06-15 12:06:53 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes ()",

"2020-06-15 12:07:47 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Retrying: duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes ()",

"2020-06-15 12:07:57 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes ()",

"2020-06-15 12:08:50 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Failed: duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes ()",

"2020-06-15 12:08:50 -06 - [Information-Duplicati.Library.Main.Operation.TestHandler-MissingRemoteHash]: No hash or size recorded for duplicati-b26c1bc68055f487e86f36859bba0ce29.dblock.zip.aes, performing full verification",

"2020-06-15 12:08:50 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b26c1bc68055f487e86f36859bba0ce29.dblock.zip.aes ()",

"2020-06-15 12:09:46 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Retrying: duplicati-b26c1bc68055f487e86f36859bba0ce29.dblock.zip.aes ()",

"2020-06-15 12:09:56 -06 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b26c1bc68055f487e86f36859bba0ce29.dblock.zip.aes ()"

],

"Warnings": [],

"Errors": [

"2020-06-15 12:08:50 -06 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes",

"2020-06-15 12:14:19 -06 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-b26c1bc68055f487e86f36859bba0ce29.dblock.zip.aes"

],

"BackendStatistics": {

"RemoteCalls": 15,

"BytesUploaded": 0,

"BytesDownloaded": 219938775,

"FilesUploaded": 0,

"FilesDownloaded": 3,

"FilesDeleted": 0,

"FoldersCreated": 0,

"RetryAttempts": 8,

"UnknownFileSize": 8,

"UnknownFileCount": 1,

"KnownFileCount": 8825,

"KnownFileSize": 877229435996,

"LastBackupDate": "2020-06-15T03:00:00-06:00",

"BackupListCount": 167,

"TotalQuotaSpace": 0,

"FreeQuotaSpace": 0,

"AssignedQuotaSpace": -1,

"ReportedQuotaError": false,

"ReportedQuotaWarning": false,

"MainOperation": "Backup",

"ParsedResult": "Success",

"Version": "2.0.5.1 (2.0.5.1_beta_2020-01-18)",

"EndTime": "0001-01-01T00:00:00",

"BeginTime": "2020-06-15T17:31:14.061439Z",

"Duration": "00:00:00",

"MessagesActualLength": 0,

"WarningsActualLength": 0,

"ErrorsActualLength": 0,

"Messages": null,

"Warnings": null,

"Errors": null

}

}
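For a long report like this one, the useful part is the `TestResults.Verifications` list: each entry pairs a remote volume name (`Key`) with a list of findings (`Value`), and an empty list means that volume passed. A minimal Python sketch for pulling out only the failing volumes might look like the following. It assumes the report is available as JSON with the same field names shown above; the helper name `failed_verifications` and the trimmed `REPORT` excerpt are hypothetical and not part of Duplicati.

```python
import json

# Trimmed excerpt of the report above, keeping only the fields this sketch reads.
REPORT = """
{
  "TestResults": {
    "Verifications": [
      {"Key": "duplicati-b07b0a29e5d134cc69d31309e2904b210.dblock.zip.aes",
       "Value": [{"Key": "Error", "Value": "File length is invalid"}]},
      {"Key": "duplicati-b26c1bc68055f487e86f36859bba0ce29.dblock.zip.aes",
       "Value": [{"Key": "Error", "Value": "File length is invalid"}]},
      {"Key": "duplicati-20191118T100742Z.dlist.zip.aes", "Value": []},
      {"Key": "duplicati-i19fbd785e868445e8cd6790aa8f96dfd.dindex.zip.aes", "Value": []},
      {"Key": "duplicati-b23543a0128c84464b5a11fbf5605d646.dblock.zip.aes", "Value": []}
    ]
  }
}
"""


def failed_verifications(result: dict) -> dict:
    """Map each remote volume name to the error messages reported for it.

    Hypothetical helper: walks TestResults.Verifications, where each entry's
    "Value" is a list of {"Key": ..., "Value": ...} pairs, and keeps only the
    entries that contain at least one pair whose Key is "Error".
    """
    failures = {}
    test_results = result.get("TestResults") or {}
    for entry in test_results.get("Verifications") or []:
        errors = [
            item.get("Value")
            for item in entry.get("Value") or []
            if item.get("Key") == "Error"
        ]
        if errors:
            failures[entry["Key"]] = errors
    return failures


if __name__ == "__main__":
    # Print one line per failing volume with its error messages.
    for name, errors in failed_verifications(json.loads(REPORT)).items():
        print(f"{name}: {'; '.join(errors)}")
```

On this report it would list only the two `dblock` volumes that failed with "File length is invalid"; the `dlist`, `dindex`, and third `dblock` entries have empty `Value` lists and are skipped.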