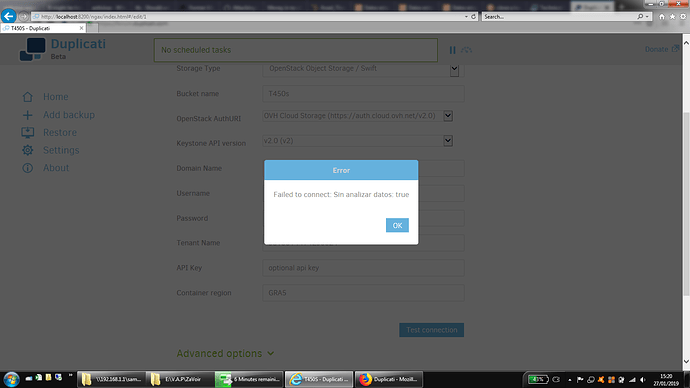

It sounds like this is the backup from your first full paragraph, the one that used to work. I don't see how running the new backup could have broken the old one, though. Have you checked that its parameters are still correct (perhaps using Export as Command-line), and tried the "Test connection" button on the Destination page?

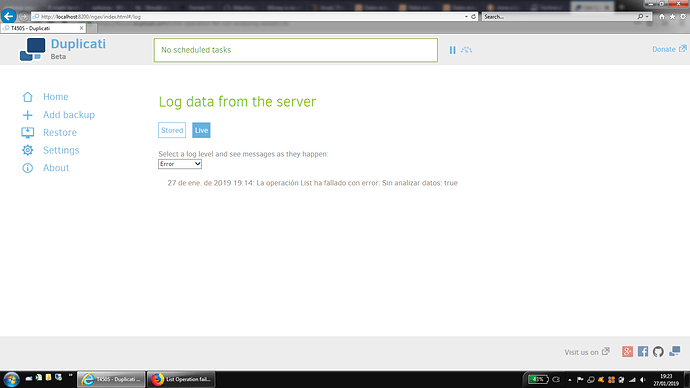

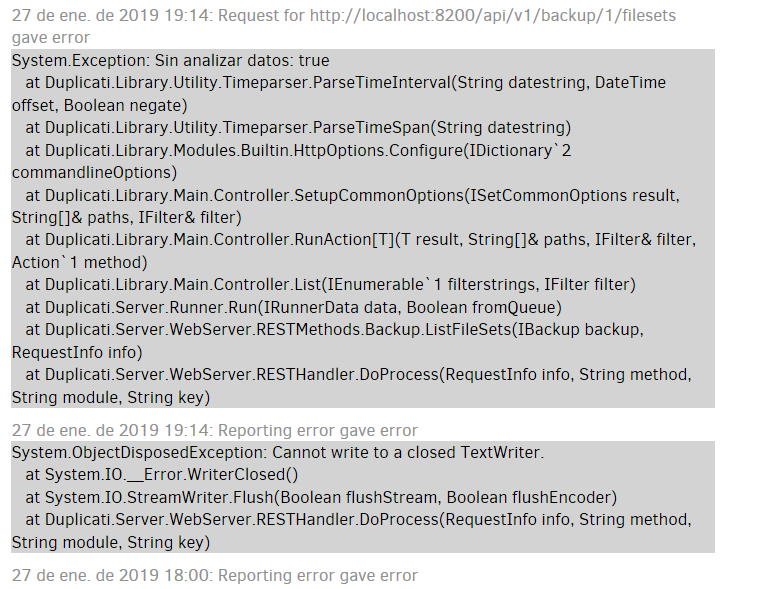

In the live log, clicking a one-line message sometimes expands it to show details. More details are needed here.

The message might be a translation, but it doesn't make sense for it to show up where yours does. It usually comes from something unrecognized in a custom retention time string, as was discovered in this post.
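To spot the kind of unrecognized piece described above, here is a minimal Python sketch that checks a retention string against the format assumed from Duplicati's `--retention-policy` help: comma-separated `timeframe:interval` pairs such as `1W:1D,4W:1W,12M:1M`, units `s m h D W M Y`, and `U` for unlimited. The helper name `find_bad_retention_parts` is hypothetical. The check is deliberately strict and case-sensitive, so it may flag something Duplicati's own parser would accept.

```python
import re

# Assumed units from the --retention-policy help text: s, m, h, D, W, M, Y.
# Case matters here: "m" is minutes and "M" is months.
_SPAN = re.compile(r"(?:\d+[smhDWMY])+")


def find_bad_retention_parts(policy: str) -> list[str]:
    """Return the comma-separated parts that don't look like "timeframe:interval".

    Hypothetical helper: each side must be "U" (unlimited) or one or more
    number+unit pairs such as "12M" or "1W".
    """
    bad = []
    for part in policy.split(","):
        part = part.strip()
        pieces = part.split(":")
        if len(pieces) != 2:
            bad.append(part)  # missing or extra colon
            continue
        if not all(p == "U" or _SPAN.fullmatch(p) for p in pieces):
            bad.append(part)  # unknown unit or stray characters
    return bad


print(find_bad_retention_parts("1W:1D,4W:1Wk,12M:1M"))  # ['4W:1Wk']
```

Pasting the custom retention string from the job's Options page into this check is a quick way to narrow down which part to look at.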

You could also check whether you can list the destination with a command-line tool. Duplicati.CommandLine.BackendTool.exe accepts the target URL from the Export as Command-line output I mentioned earlier. Don't modify anything; just list.

```
C:\Program Files\Duplicati 2>Duplicati.CommandLine.BackendTool.exe help

Usage: <command> <protocol>://<username>:<password>@<path> [filename]
Example: LIST ftp://user:pass@server/folder

Supported backends: aftp,amzcd,azure,b2,box,cloudfiles,dropbox,file,ftp,googledrive,gcs,hubic,jottacloud,mega,msgroup,onedrive,onedrivev2,sharepoint,openstack,rclone,s3,od4b,mssp,sia,ssh,tahoe,webdav

Supported commands: GET PUT LIST DELETE CREATEFOLDER

C:\Program Files\Duplicati 2>
```
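Combining the export and the usage line above, a minimal Python sketch could pull the target URL out of an exported command line and build a read-only LIST invocation. It assumes the export has the shape `"<exe>" backup "<target URL>" "<source>" --options` and uses a simplified tokenizer (double-quoted strings or whitespace-separated words), not the full Windows quoting rules. `target_url_from_export`, `build_list_command`, and the sample URL are hypothetical; the backend list is copied from the help output.

```python
import re

# Protocol prefixes copied from the "Supported backends" line of the help output.
SUPPORTED_BACKENDS = set(
    "aftp,amzcd,azure,b2,box,cloudfiles,dropbox,file,ftp,googledrive,gcs,hubic,"
    "jottacloud,mega,msgroup,onedrive,onedrivev2,sharepoint,openstack,rclone,s3,"
    "od4b,mssp,sia,ssh,tahoe,webdav".split(",")
)

DEFAULT_TOOL = r"C:\Program Files\Duplicati 2\Duplicati.CommandLine.BackendTool.exe"


def target_url_from_export(exported: str) -> str:
    """Return the token right after "backup" in an exported command line.

    Assumption: tokens are either double-quoted strings or runs of non-space
    characters. Raises ValueError if no "backup" token is found.
    """
    tokens = [quoted or bare for quoted, bare in re.findall(r'"([^"]*)"|(\S+)', exported)]
    return tokens[tokens.index("backup") + 1]


def build_list_command(target_url: str, tool: str = DEFAULT_TOOL) -> list[str]:
    """Build the argument list for a read-only LIST; nothing is executed here."""
    protocol, sep, _ = target_url.partition("://")
    if not sep or protocol not in SUPPORTED_BACKENDS:
        raise ValueError(f"unrecognized backend in target URL: {protocol!r}")
    return [tool, "LIST", target_url]


if __name__ == "__main__":
    # Hypothetical export; paste your own from Export as Command-line.
    exported = (
        r'"C:\Program Files\Duplicati 2\Duplicati.CommandLine.exe" backup '
        r'"ftp://server/folder?auth-username=user&auth-password=pass" '
        r'"C:\Data" --backup-name=Test'
    )
    cmd = build_list_command(target_url_from_export(exported))
    print(cmd)
    # To actually run it: import subprocess; subprocess.run(cmd, check=True)
```

Only LIST is ever built, which keeps the check read-only.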