Good afternoon. Is there any way to optimize the listing of files to restore? It takes too long for me to list the items to restore.

This is my version: 2.0.5.110_canary_2020-08-10

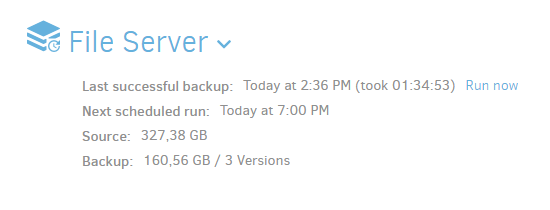

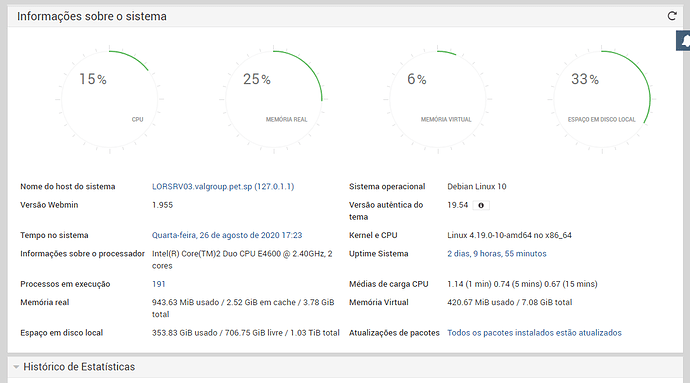

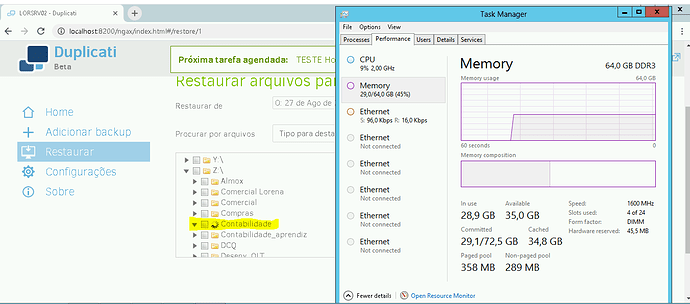

How many files are in this backup job? How large is it? How many versions? Also what kind of CPU is running the Duplicati engine? I think all of these affect the speed of the restore browser.

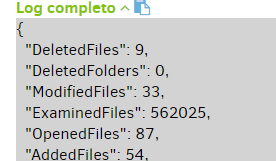

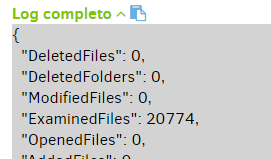

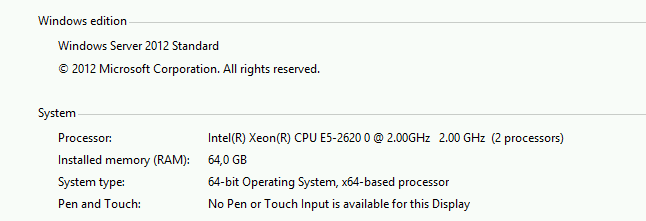

Your data set is pretty large and the processor is 13 years old. How many files are in the backup set, do you know? Check your backup history and look at “examined files” count. I believe that is also a factor.

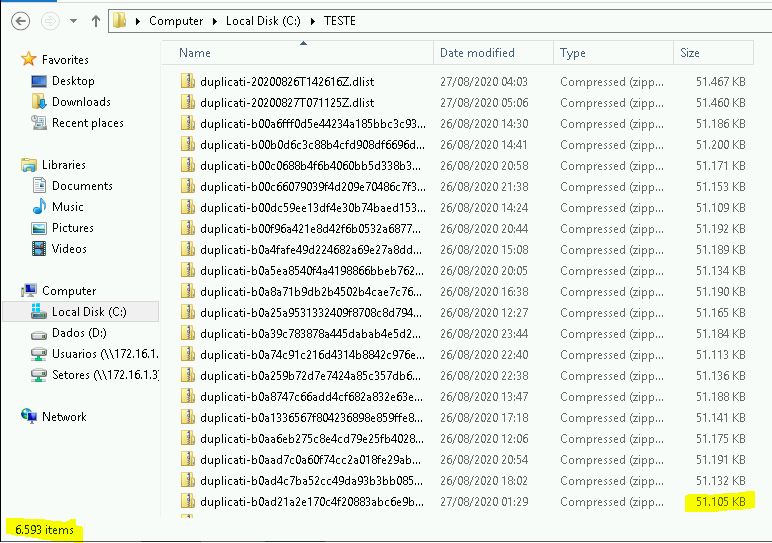

These are the scanned files. I was using 5 GB blocks and have now reduced them to 1 GB. If I reduce the size, will the verification convert the existing 5 GB blocks into 1 GB blocks?

I even tried creating a single 200 GB block so that everything would fit in one block, but without success; it takes even longer.

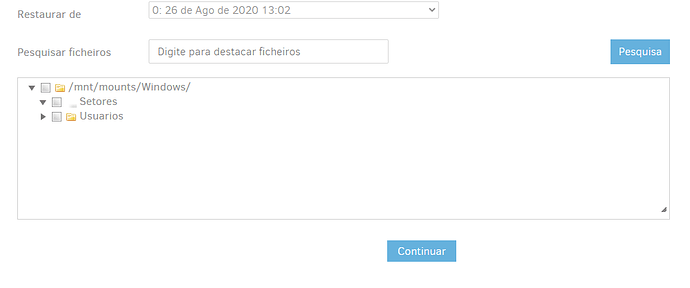

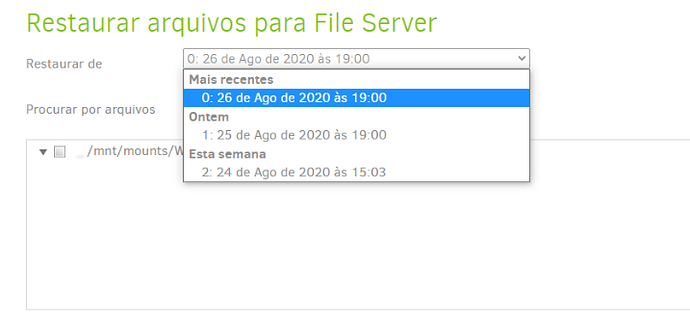

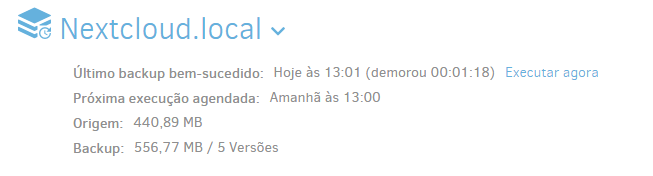

I use smart retention; for now I only have these restore points.

With this other plan, the file listing is instantaneous. Admittedly the files are smaller, but there are still several files to list.

The backups above are on a Linux server. For the one below, I am uploading the same files on a somewhat more robust Windows server to test the directory listing.

When you say you are using 5GB or 1GB blocks I’m guessing you mean the “remote volume size” (the --dblock-size option). Your deduplication block size is probably still 100KiB (the default, and can’t be changed after your first backup). 100KiB dedupe blocks with 160GiB back end means you have maybe 1.6M blocks being tracked by your database. I’m guessing your older processor is the bottleneck. Maybe optimizations will happen in future releases to help with this.

If you are willing to start over, you may be able to improve performance by changing the deduplication block size to 1MiB (the --blocksize option). This would reduce the number of blocks your database has to track to 10% of what it tracks now, and could improve performance.
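To make the arithmetic above concrete, here is a rough sketch of the estimate. It simply divides the back-end size by the block size, so it ignores compression, deduplication, and metadata; the function name `block_count` is made up for illustration and is not part of Duplicati.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB


def block_count(backend_bytes, blocksize_bytes):
    """Rough number of blocks needed to cover backend_bytes (ceiling division)."""
    return -(-backend_bytes // blocksize_bytes)


backend = 160 * GIB  # back-end size mentioned in this thread

default_blocks = block_count(backend, 100 * KIB)  # default --blocksize
larger_blocks = block_count(backend, 1 * MIB)     # suggested --blocksize

ratio = larger_blocks / default_blocks

print(f"100 KiB blocks: {default_blocks:,}")
print(f"1 MiB blocks:   {larger_blocks:,}")
print(f"ratio:          {ratio:.1%}")
```

The real number of rows the database tracks will differ, but the roughly tenfold reduction is the point.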

Good morning. In that case, I created a new backup from scratch with a 1 GB block size, so the blocks really are 1 GB.

The option --blocksize = 1GB

My tests just finished on the more robust server, and the result is the same.

This test used smaller, “standard” blocks.

How does this block issue work, what would be the ideal value for use?

Here’s a write-up on this topic:

Thank you very much, I have read it!

Now regarding the slowness in listing the files, I did the test on 2 different servers and the performance didn’t change.

Does “server” mean

If so, the result is the same because there is no use of that server. The directory listing is normally the Duplicati system querying its local database. This has seemingly had some optimizations already, per

Optimizing load speed on the restore page

but maybe they’re not enough for your situation. You could certainly do the same logging work to see if there’s some SQL query that’s especially long, but it could take some looking (as seen in above topic).

I’m not familiar with the code internals. If you’re lucky enough to have one query that causes most of the delay, running About → Show log → Live → Profiling might show the log pausing for a while on a “Starting” line before the matching end line.

For a serious study, you’d want a log-file with at least log-file-log-level=profiling, and tools to study that.
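As one possible tool for studying such a log file, here is a minimal sketch that finds the longest pauses between consecutive log lines. It assumes each line of interest starts with a `YYYY-MM-DD HH:MM:SS` timestamp; that format, the `largest_gaps` helper, and the sample lines are assumptions for illustration, so adjust the pattern to whatever your log actually contains.

```python
import re
from datetime import datetime

# Assumed timestamp layout at the start of a log line (adjust if yours differs).
TIMESTAMP = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})")


def largest_gaps(lines, top=5):
    """Return the `top` longest pauses as (seconds, line written before the pause)."""
    prev_time = None
    prev_line = None
    gaps = []
    for line in lines:
        match = TIMESTAMP.match(line)
        if not match:
            continue  # skip continuation lines without a timestamp
        stamp = datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S")
        if prev_time is not None:
            gaps.append(((stamp - prev_time).total_seconds(), prev_line.rstrip()))
        prev_time, prev_line = stamp, line
    gaps.sort(key=lambda gap: gap[0], reverse=True)
    return gaps[:top]


# Made-up sample lines, not real Duplicati output.
sample = [
    "2020-08-10 10:00:00 Starting - query A",
    "2020-08-10 10:00:01 Starting - query B",
    "2020-08-10 10:00:45 query B finished",
    "2020-08-10 10:00:46 done",
]

for seconds, line in largest_gaps(sample, top=2):
    print(f"{seconds:8.1f}s after: {line}")
```

To run it on a real file, pass an open file object instead of `sample`, for example `largest_gaps(open("duplicati.log"))`, where the file name is hypothetical. The line printed next to the longest gap is the operation that was running during the pause.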

Listing directories for restore very slow #1715 is a very old GitHub issue with last user report in 2018… Discussion may be useful, as comments there also indicate that this is a local Duplicati and DB issue.

Other suggestions given here are good, and will help other slow spots, but I’m not sure about this spot.

The word “server” is ambiguous. Is Duplicati on the same system both runs? If so, expect same result.

If Duplicati itself moved to a system that’s hugely faster, then no change would certainly be unexpected.

Potentially big performance boost coming up:

Edit: This impacts the actual restore, and not the listing of the files.

Will this improvement come in an upcoming update?

It has been merged and will be part of the next canary release.

Cool, I’ll wait then!