Is there a way to check how much Backup-Source size difference is caused by compression and how much by deduplication?

Also, as a new user, I would like to share some feedback.

The backup progress line, "x files (y GB) to go at z MB/s", was quite misleading for me.

I was calculating an ETA by dividing y by z, and concluded that the backup would take weeks to complete. I noticed later that y goes down about 10 times as fast as z would indicate, so I realized that z is probably just the upload speed.

I think other users could reach the same conclusion and quit because of this, so some kind of processed-data speed or an ETA would be useful there.

Welcome to the forum @Csiz

How is this useful, and what accuracy are you after? You can certainly do experiments, e.g. with different values of this option:

--zip-compression-level (Enumeration): Sets the Zip compression level

This option controls the compression level used. A setting of zero gives no compression, and a setting of 9 gives maximum compression.

* values: 0, 1, 2, 3, 4, 5, 6, 7, 8, 9

* default value: BestCompression

If you turn compression off, then the remaining difference between source size and backup size is what deduplication gave you. You can’t turn deduplication off.
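The arithmetic behind that experiment can be sketched roughly as below. This is only an illustration: the function name and the three inputs are my own, and the result is approximate because backup size also includes some overhead (zip structure, encryption, index and file-list volumes) that is neither compression nor deduplication.

```python
def split_savings(source_bytes, backup_bytes_level0, backup_bytes_default):
    """Roughly attribute the size reduction to deduplication and compression.

    source_bytes         -- size of the source data
    backup_bytes_level0  -- backup size from a run with --zip-compression-level=0
    backup_bytes_default -- backup size from a run with the default compression

    All three values are assumed to come from backups of the same source data.
    """
    # With compression off, whatever shrank is due to deduplication.
    dedup_saved = source_bytes - backup_bytes_level0
    # The further reduction with compression on is due to compression.
    compression_saved = backup_bytes_level0 - backup_bytes_default
    return dedup_saved, compression_saved
```

For example, with made-up sizes of 1000, 800 and 500 bytes, `split_savings(1000, 800, 500)` attributes 200 bytes to deduplication and 300 bytes to compression.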

Another way to estimate compression is from dblock zip files, using a utility that shows compression.

If the backup is encrypted, you would first decrypt the file with AES Crypt or Duplicati’s SharpAESCrypt.exe CLI program.
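If you don't have a zip utility that shows compression, a few lines of Python can do the same thing. This is a minimal sketch using only the standard `zipfile` module; it assumes the dblock file is already decrypted, and the function name is my own.

```python
import zipfile


def zip_compression_summary(path):
    """Return (uncompressed_bytes, compressed_bytes, ratio) for a zip file.

    ratio is compressed / uncompressed, so 0.6 means the entries shrank
    to 60% of their original size. An empty archive reports a ratio of 1.0.
    """
    with zipfile.ZipFile(path) as zf:
        infos = zf.infolist()
        # file_size is the original size, compress_size the stored size.
        raw = sum(info.file_size for info in infos)
        packed = sum(info.compress_size for info in infos)
    ratio = packed / raw if raw else 1.0
    return raw, packed, ratio
```

You would call it with the path of one decrypted dblock zip file. One volume is only a sample, so checking a few of them gives a better estimate.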

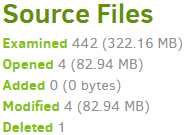

Viewing the log files of a backup job gives aggregate information, but only on source and backup sizes:

The complete log shows "BytesUploaded": 61126381, so it uploaded about 70% of its modified file size. Deduplication can have a big effect on ongoing backups because only changes are uploaded, about 18% here.

The same “ongoing backup” effect is possible here, or was this the first backup? On a non-initial backup, processing happens faster because there may be files that are unchanged, or changed in a way that deduplication helps with.

I think that’s what you get, but processing speed varies with what is found, and future finds are not known, so an ETA for the final completion of processing and then upload can’t be computed early. After processing finishes, an upload ETA might be computable, but by then it’s rather late. The asynchronous-upload-limit default allows 4 files of processed data to be in the upload queue. With the default file size of 50 MB, that is at most about 200 MB queued, so by that point the upload is already near its end.
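To put numbers on that last point, here is a small sketch of the queue arithmetic. The defaults of 4 files and 50 MB are the ones mentioned above; the function names are my own, and treating 1 MB as 1024 × 1024 bytes is an assumption.

```python
MB = 1024 * 1024  # assumption: 1 MB counted as 1024 * 1024 bytes


def max_queued_bytes(queue_files=4, volume_mb=50):
    """Upper bound on processed data waiting in the upload queue."""
    return queue_files * volume_mb * MB


def remaining_upload_seconds(queued_bytes, upload_bytes_per_sec):
    """Time to drain the queue once processing has finished."""
    return queued_bytes / upload_bytes_per_sec
```

With the defaults the queue holds at most 200 MB, so at an upload speed of 1 MB/s only about 200 seconds of uploading remain when processing ends. That is why an ETA computed at that stage arrives too late to be much use.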