Feb 11, 2018 8:08 AM: Failed while executing “Backup” with id: 6

Duplicati.Library.Interface.UserInformationException: Found 73380 remote files that are not recorded in local storage, please run repair

This means that the list of files at your DESTINATION includes 73,380 files that Duplicati didn’t recognize. It’s not a reference to any local storage changes (deletes or otherwise).

Since the local database is used to keep track of what files Duplicati has saved to the destination, that means that either:

- something outside of Duplicati dumped a whole bunch of files into the destination folder (quite possible)

- the Destination folder in the job got changed to point to somewhere that already had a bunch of files (possible, but people usually remember changing a job)

- the local database is corrupted (unlikely that it would cause this specific error but still work fine elsewhere)

- the destination provider is not returning the correct list of files

Things you can check into include:

- connect to the destination outside of Duplicati and see if there are 73k+ files listed (and whether or not they’re all Duplicati related files, meaning dblock, dindex, or dlist files)

- in Duplicati check the job “Show log…” menu, click on the Remote tab, and expand messages such as “list” which should include a chunk of the files the destination reported as having

- check regular messages / logs which might include some of the unexpected files
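
If you can get a listing of the destination (a mounted folder or an exported list of file names), a rough sketch like the one below can tally how many files look like Duplicati files and how many don't. It assumes the file type appears as a dot-separated part of the name (for example something.dblock.zip.aes); the function names are made up for illustration and nothing here is part of Duplicati itself.

```python
from collections import Counter
from pathlib import Path

# Assumption: Duplicati's remote file types show up as a dot-separated
# part of the file name, e.g. "duplicati-....dblock.zip.aes".
KNOWN_KINDS = ("dblock", "dindex", "dlist")


def classify(name: str) -> str:
    """Return 'dblock', 'dindex', 'dlist', or 'other' for one file name."""
    parts = name.lower().split(".")
    for kind in KNOWN_KINDS:
        # Skip the first part so a file literally named "dlist" is not counted.
        if kind in parts[1:]:
            return kind
    return "other"


def summarize(names) -> Counter:
    """Count how many names fall into each category."""
    return Counter(classify(n) for n in names)


def summarize_folder(folder: str) -> Counter:
    """Tally the regular files directly inside a locally reachable folder."""
    return summarize(p.name for p in Path(folder).iterdir() if p.is_file())
```

Calling summarize_folder("/path/to/destination") on a mounted copy of the destination gives a per-type count. A large "other" count would point at something outside Duplicati writing into the folder.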

Sigh — turns out I hadn’t booted my Mac in a long time but when I did (a few days ago), the local Duplicati application launched. But there was already a launchdaemon version with system privileges running.

Guess I’m going to have to start all over again.

Bummer

Why start all over? I assume it’s the non-daemon version complaining about the files, do you even want that one running?

Because when I looked in the two backup folders, there were only about 10 files or so in each of them (dindex and dlist files) with a total file size of about 700M. Given that the source drives have almost 3 TB of data on them, that’s either one hell of a compression rate or a totally broken backup.

Unfortunately, at this point, I may not even bother. I’ve had enough aggravation that the cost of Crashplan for Small Business is looking like a reasonable deal.

Ease of use is definitely one thing CrashPlan currently has over Duplicati.

It’s not the ease of use, it’s the “set it and forget it” aspect so I don’t have to keep checking it all the time.

The thing is, it has been running flawlessly on my wife’s Mac, where I installed Duplicati at around the same time last year, and it is running flawlessly on a Linux VM backing up my Zimbra mail.

I’m going to give it one last shot. I’ve completely wiped the Duplicati files on my environment and started fresh.

So here’s what’s going on. I have started completely fresh. I created a job for which I selected the source as /Volume/Macintosh HD

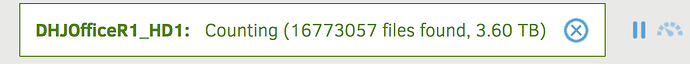

That volume is a 1TB SSD with about 600GB used. However, if you look at the attached image, you’ll see that Duplicati has found 3.6TB of data already.

Now I have other volumes but they’re all mounted under /Volume so they shouldn’t show up under /Volume/Macintosh HD. It may be that there are symbolic links that I’ve forgotten about. So is there a way to tell Duplicati not to follow symbolic links?
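
For tracking down forgotten symbolic links independently of Duplicati, a small sketch along these lines may help. It walks a folder without following links and reports every symlink it finds along with its target. The function name is hypothetical, and on a full system volume some folders will be unreadable without elevated privileges, so those are silently skipped here.

```python
import os


def find_symlinks(root: str):
    """Yield (link_path, target) for every symlink found below root.

    os.walk does not descend into symlinked directories by default
    (followlinks=False), so the walk itself cannot get caught in a loop.
    """
    # onerror swallows unreadable directories instead of aborting the walk.
    for dirpath, dirnames, filenames in os.walk(root, onerror=lambda err: None):
        # Symlinks to directories are listed in dirnames; symlinks to
        # files (and broken links) are listed in filenames.
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                try:
                    target = os.readlink(path)
                except OSError:
                    target = "<unreadable>"
                yield path, target
```

Looping over find_symlinks on the source folder and printing each pair shows which links point outside the volume being backed up.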

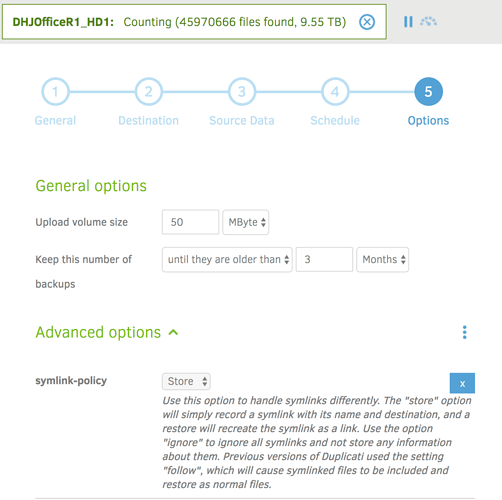

By default I believe when Duplicati finds a symbolic link it’s supposed to just store that a link exists; it doesn’t follow it. Is it possible you set --symlink-policy=follow at some point?

--symlink-policy

Use this option to handle symlinks differently. The “store” option will simply record a symlink with its name and destination, and a restore will recreate the symlink as a link. Use the option “ignore” to ignore all symlinks and not store any information about them. Previous versions of Duplicati used the setting “follow”, which will cause symlinked files to be included and restore as normal files.

Definitely not — I have never set any options - see attachment. Meanwhile it’s still counting and has found 9.5 TB on my 1TB drive.

Question: is there a way I can look at the list of files that it is finding? That would obviously make it easier to figure out what’s going on.

Too bad - that would be some awesome drive compression results!

I’d recommend you use the test-filters command. While it is available via the Command line GUI, some users have reported that running it that way doesn’t always apply the filters correctly, so I’d suggest trying a shell command line as follows:

- In the job menu select “Export …” then “As command-line” then click the “Export” button.

- Copy / paste the resulting string into a text editor and change backup to test-filters, then add --full-result="true" and --log-file="<path>"

- Run the resulting command line which should spit out a file at <path> showing a list of what is being included and excluded

Of course if you are stuck in a recursive loop this command might run for quite a while so you may need to monitor the text file until you start seeing repeating folders then just kill the command.
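
Rather than eyeballing the log for repeating folders, something like the sketch below could flag likely loops. It is only a heuristic: it assumes each log line contains a /-separated path, and it treats any line where the same folder name occurs more than a few times as suspicious. The function names and the threshold are illustrative choices, not anything Duplicati provides.

```python
from collections import Counter


def looks_recursive(path: str, max_repeats: int = 3) -> bool:
    """True if any single path component occurs more than max_repeats times."""
    parts = [p for p in path.split("/") if p]
    if not parts:
        return False
    return max(Counter(parts).values()) > max_repeats


def suspicious_lines(lines, max_repeats: int = 3):
    """Return the lines (stripped) whose path looks like it loops on itself."""
    return [
        line.strip()
        for line in lines
        if looks_recursive(line.strip(), max_repeats)
    ]
```

Opening the log file and passing the file object to suspicious_lines works while the command is still running, since it just reads whatever has been written so far.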

If the command line thing doesn’t work out for you then you could try the GUI Command line option for a normal backup but with --dry-run, --full-result and --log-file parameters added.

Once we figure out where the recursion is happening we can try to determine why Duplicati isn’t obeying the Store parameter.

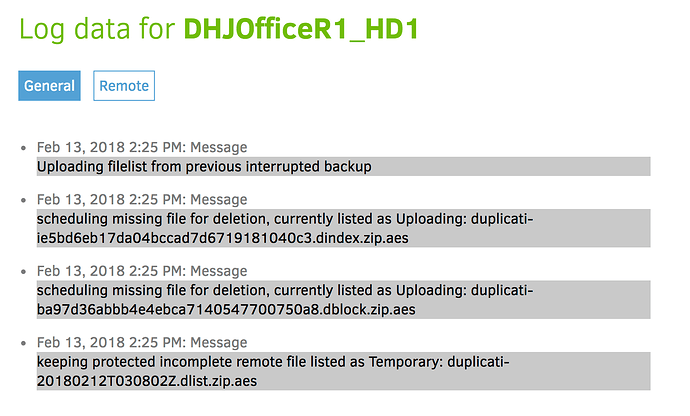

OK - I will try that — in the meantime, I decided to stop the “backup” and the log contained the following (see image). Given that this was a brand new backup, where all files in the remote system were removed before we even started, why would there be these errors in the log?

That’s a very good question. When you stopped the backup, was that the very first time you’d run DHJOfficeR1_HD1, or had there possibly been a start, abort, start again before it got to the super big file count?

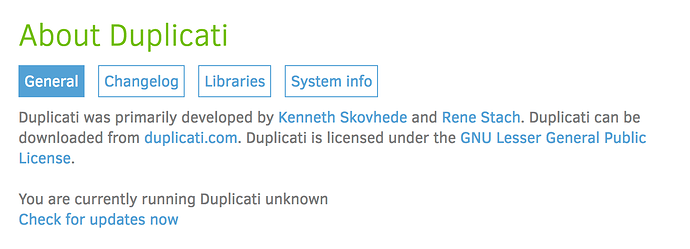

I apologize if you included this but I’m not finding it - what version of Duplicati are you using? I ask in case you’re on the latest 2.0.2.19 canary and maybe found a new bug…

It was the very first time because I completely removed everything (including the database .sqlite files) before I started.

As for the version, that’s a good question (see graphic). Apparently I’m using an “unknown” version, even after doing a Check for Updates Now, but I certainly haven’t updated it since I installed it in August and was certainly not running the Canary version (I was wondering if I should, though).