Hello,

I have a backup of about 1 TB: 157k source files in 9,000 folders.

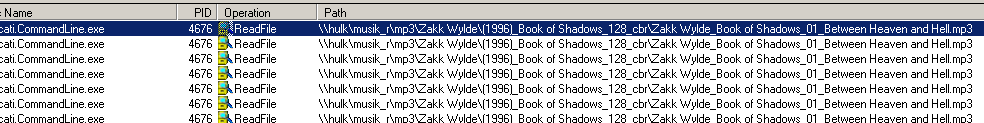

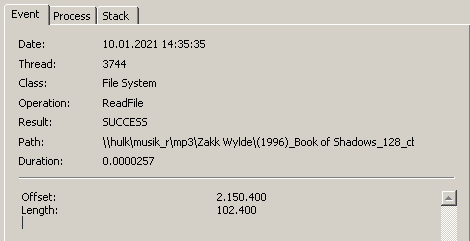

I have set `--check-filetime-only=true`, but the backup is very, very slow even though NO FILES HAVE CHANGED. The “… files need to be examined” status stays visible for HOURS although not a single file has changed. I started Procmon (Sysinternals Suite) to see what Duplicati is doing, filtered the output down to the ReadFile operations, and this is what I see:

So Duplicati reads this file in 100 KB blocks.
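To check from a Procmon capture whether every file really is read end to end, the capture can be exported to CSV and summarised per file. This is a minimal sketch, not a Duplicati or Procmon tool: it assumes the export has the columns `Process Name`, `Operation`, `Path` and `Detail`, and that ReadFile details look like `Offset: 204,800, Length: 102,400, Priority: Normal`. The function names `summarize_reads` and `summarize_csv` are hypothetical.

```python
import csv
import re
from collections import defaultdict

# Assumed shape of Procmon's "Detail" column for ReadFile events, e.g.
# "Offset: 204,800, Length: 102,400, Priority: Normal".
DETAIL_RE = re.compile(r"Offset:\s*([\d,]*\d),\s*Length:\s*([\d,]*\d)")


def summarize_reads(rows, process_name=None):
    """Return {path: (read_count, total_bytes, highest_end_offset)} for ReadFile rows."""
    stats = defaultdict(lambda: [0, 0, 0])
    for row in rows:
        if row.get("Operation") != "ReadFile":
            continue
        # Optionally keep only events from one process (hypothetical filter).
        if process_name and row.get("Process Name") != process_name:
            continue
        match = DETAIL_RE.search(row.get("Detail", ""))
        if not match:
            continue
        offset = int(match.group(1).replace(",", ""))
        length = int(match.group(2).replace(",", ""))
        entry = stats[row.get("Path", "")]
        entry[0] += 1                                # number of ReadFile calls
        entry[1] += length                           # sum of logged lengths
        entry[2] = max(entry[2], offset + length)    # furthest position requested
    return {path: tuple(values) for path, values in stats.items()}


def summarize_csv(csv_path, process_name=None):
    # utf-8-sig also copes with a leading byte-order mark, if the export has one.
    with open(csv_path, newline="", encoding="utf-8-sig") as f:
        return summarize_reads(csv.DictReader(f), process_name)
```

Usage would be something like `summarize_csv("procmon.csv", "Duplicati.GUI.TrayIcon.exe")`, with the file name and process name replaced by whatever the capture actually shows. If the highest end offset of a file is at or beyond its size on disk, that file was read to the end.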

According to the Procmon output, EVERY SINGLE FILE is read completely! This backup has been running for 35 minutes so far and has read about 120 GB from the source file system (a share on a different local Windows machine) through port 445, as measured with NetLimiter. That is a realistic speed of about 50-60 MB/s.
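As a quick back-of-the-envelope check, the figures above fit a full read of the whole source. This sketch assumes decimal units (1 GB = 1000 MB, 1 TB = 1,000,000 MB); `read_estimate` is just an illustrative helper.

```python
def read_estimate(read_gb, elapsed_min, source_tb):
    """Return (throughput in MB/s, hours needed to read the whole source once)."""
    mb_per_s = read_gb * 1000 / (elapsed_min * 60)
    hours = source_tb * 1_000_000 / mb_per_s / 3600
    return mb_per_s, hours


# Figures from this post: ~120 GB read in 35 minutes, ~1 TB of source data.
rate, hours = read_estimate(read_gb=120, elapsed_min=35, source_tb=1)
print(f"{rate:.1f} MB/s, full read of the source: {hours:.1f} h")
# prints "57.1 MB/s, full read of the source: 4.9 h"
```

Roughly five hours for one full pass over the data would match the “… files need to be examined” status staying visible for hours.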

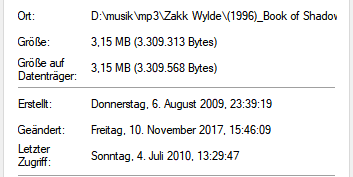

The timestamps of the example file, as shown in CMD:

And in Windows:

This file was already included in the first version of the backup, and neither its size nor its date has changed since that first backup a few days ago.
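For comparison, this is the decision I would expect `--check-filetime-only` to make, based on my reading of the option's description (do not look at metadata or file size when deciding whether to scan a file). It is only an illustrative model, not Duplicati's actual code; `FileEntry` and `needs_scan` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileEntry:
    """Hypothetical per-file record that a backup tool keeps between runs."""
    size: int
    mtime_ns: int
    metadata_hash: str


def needs_scan(previous, current, check_filetime_only):
    """Return True if the file's content should be read again (illustrative model)."""
    if previous is None:
        return True   # file not in the last backup: it must be read
    if current.mtime_ns != previous.mtime_ns:
        return True   # timestamp changed: read it
    if check_filetime_only:
        return False  # timestamp unchanged: size and metadata are not consulted
    return (current.size != previous.size
            or current.metadata_hash != previous.metadata_hash)
```

In this model a file like the example above, with an unchanged timestamp, would never be opened for reading, which is why a full read of every file is unexpected.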

My question: how can I find out why Duplicati reads all of the files in full instead of just checking their timestamps?