I have a backup configured to Azure Blob storage, and it used to work well, but a few days ago I received this error by email:

Failed: The request was aborted: The request was canceled.

Details: Microsoft.WindowsAzure.Storage.StorageException: The request was aborted: The request was canceled. ---> System.Net.WebException: The request was aborted: The request was canceled.

at System.Net.ConnectStream.InternalWrite(Boolean async, Byte[] buffer, Int32 offset, Int32 size, AsyncCallback callback, Object state)

at System.Net.ConnectStream.Write(Byte[] buffer, Int32 offset, Int32 size)

at Microsoft.WindowsAzure.Storage.Core.ByteCountingStream.Write(Byte[] buffer, Int32 offset, Int32 count)

at Microsoft.WindowsAzure.Storage.Core.Util.StreamExtensions.WriteToSync[T](Stream stream, Stream toStream, Nullable`1 copyLength, Nullable`1 maxLength, Boolean calculateMd5, Boolean syncRead, ExecutionState`1 executionState, StreamDescriptor streamCopyState)

at Microsoft.WindowsAzure.Storage.Core.Executor.Executor.ExecuteSync[T](RESTCommand`1 cmd, IRetryPolicy policy, OperationContext operationContext)

--- End of inner exception stack trace ---

at Duplicati.Library.Main.Operation.BackupHandler.HandleFilesystemEntry(ISnapshotService snapshot, BackendManager backend, String path, FileAttributes attributes)

at Duplicati.Library.Main.Operation.BackupHandler.RunMainOperation(ISnapshotService snapshot, BackendManager backend)

at Duplicati.Library.Main.Operation.BackupHandler.Run(String[] sources, IFilter filter)

at Duplicati.Library.Main.Controller.<>c__DisplayClass16_0.<Backup>b__0(BackupResults result)

at Duplicati.Library.Main.Controller.RunAction[T](T result, String[]& paths, IFilter& filter, Action`1 method)

Request Information

RequestID:

RequestDate:

StatusMessage:

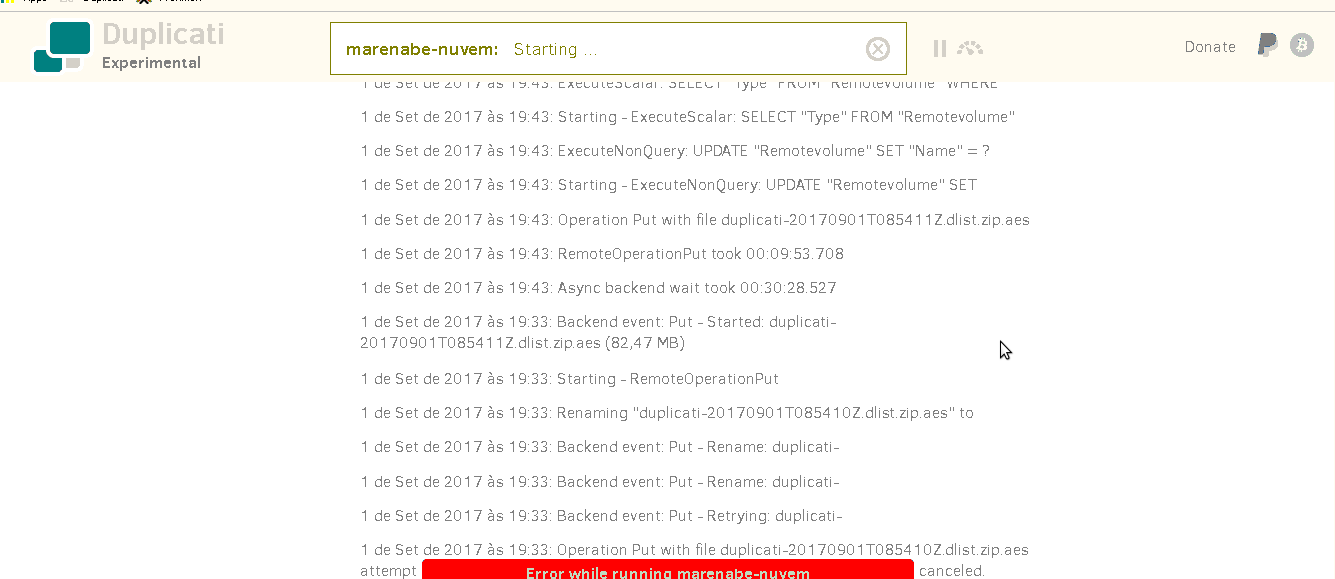

I then deleted the backup and started it from scratch. But again, after uploading 40 GB (the full backup size), I got the same error at the “Waiting for upload” stage.

I tested with a new backup, and it works well with small files. When I added a “big” file (100 MB or more), it gave the same error. On this “test backup”, I solved the issue by changing the “block size” from 50 MB to 20 MB. But on the 40 GB “production backup” this trick doesn’t work.

I noticed that at the end of the backup, Duplicati tries to upload an 80 MB file, then gives the error message, tries to rename the “block”, and the backup fails.

Questions:

Why is Duplicati uploading an 84 MB file if my settings are configured for a 20 MB block size?

How can I solve this, or what additional information can I provide?

Screenshots: