Was it hard to get work done because Duplicati slowed the system? It’s certainly quite slow itself.

Any idea via Task Manager (or otherwise) what system resources were under stress at the time?

Whatever’s being slow here might also affect Direct restore from backup files if it’s ever required.

Duplicati.CommandLine.RecoveryTool.exe

This tool can be used in very specific situations, where you have to restore data from a corrupted backup. The procedure for recovering from this scenario is covered in Disaster Recovery.

Restoring files using the Recovery Tool

So you can probably still get files if it’s worth tying up the storage, but it’d be nicer to work normally, which basically means getting your database back together somehow. Slowness makes this worse.

Do you have a copy of the database before Recreate, or any other information on backup history?

Sometimes one can try to back out the latest changes to the remote, in the hope that the earlier state is still OK.

The error you got is a database error, but what’s unknown is what it’s looking for (and trying so hard to find).

If you have nothing else, Google Drive itself can let you make guesses, if your usage is predictable.

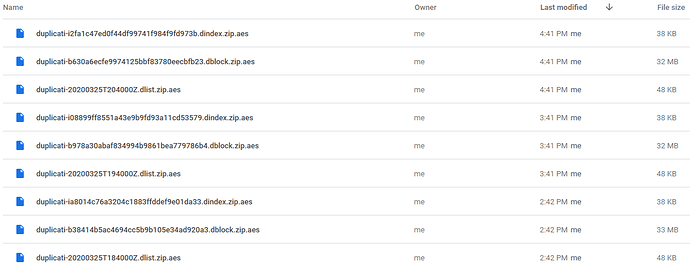

Here’s a generally predictable backup, viewed at drive.google.com:

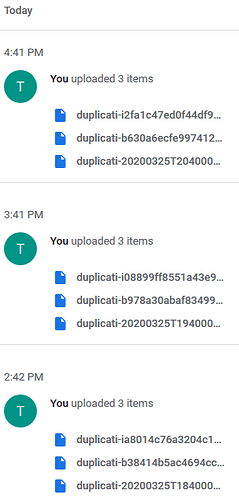

On the right of that is a helpful Activity display:

Seeing no deletes is a good sign, because deletes might be a compact that would complicate things.
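If you can get a list of the remote file names (for example by copying them from the drive.google.com view), a short script can make that guessing a bit more systematic. This is only an illustrative sketch, not a Duplicati tool. It assumes the default `duplicati-` prefix and the usual naming (`duplicati-<timestamp>.dlist.zip`, `duplicati-b<hex>.dblock.zip`, `duplicati-i<hex>.dindex.zip`, each optionally ending in `.aes`), and `summarize` is a hypothetical helper. It counts files by type, flags a dblock/dindex count mismatch, and lists the gaps between dlist times, where an irregular gap may point at an unusual run.

```python
import re
from collections import Counter
from datetime import datetime

# Assumed naming (default "duplicati" prefix, optional ".aes" suffix):
#   duplicati-20240101T020000Z.dlist.zip.aes
#   duplicati-b<hex>.dblock.zip.aes
#   duplicati-i<hex>.dindex.zip.aes
KIND = re.compile(r"^duplicati-.*\.(dlist|dblock|dindex)\.")
DLIST_TIME = re.compile(r"^duplicati-(\d{8}T\d{6}Z)\.dlist\.")


def summarize(names):
    """Hypothetical helper: count files by kind, return dlist times and gaps."""
    kinds = Counter()
    times = []
    for name in names:
        m = KIND.match(name)
        if not m:
            continue  # not a Duplicati file, or hidden by an extra prefix
        kinds[m.group(1)] += 1
        t = DLIST_TIME.match(name)
        if t:
            times.append(datetime.strptime(t.group(1), "%Y%m%dT%H%M%SZ"))
    times.sort()
    # Time between consecutive backups (dlist files), oldest first.
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return kinds, times, gaps


# Made-up example listing: one dindex is absent and one day has no dlist.
names = [
    "duplicati-20240101T020000Z.dlist.zip.aes",
    "duplicati-20240102T020000Z.dlist.zip.aes",
    "duplicati-20240104T020000Z.dlist.zip.aes",
    "duplicati-b1a2b3.dblock.zip.aes",
    "duplicati-i4c5d6.dindex.zip.aes",
    "duplicati-b7e8f9.dblock.zip.aes",
]
kinds, times, gaps = summarize(names)
print(dict(kinds))
if kinds["dblock"] != kinds["dindex"]:
    print("dblock/dindex counts differ:", kinds["dblock"], kinds["dindex"])
for gap in gaps:
    print("gap between dlists:", gap)
```

Since dblock and dindex files should pair up one-for-one, a count mismatch is one of the easier clues to spot this way.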

Regardless, the theory (I think) is that dlist files are your backup file lists, and they say which blocks to use.

Blocks are in dblock files, which are indexed one-for-one by dindex files. If a block somehow goes missing, extensive searching occurs. That can take a while even on a fast system, and the one you posted was far from fast…
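To make the "extensive searching" idea concrete, here is a toy model in Python. It is a simplification of the theory above, not Duplicati’s actual Recreate code: dlist entries name the blocks needed, each dindex says which blocks its dblock holds, and any block the indexes can’t account for forces dblock volumes to be opened one by one. The function and parameter names are hypothetical.

```python
def locate_blocks(needed, dindex, dblocks):
    """Toy model of block lookup during a database Recreate.

    needed:  block hashes referenced by dlist files
    dindex:  {dblock volume name: hashes its dindex says it contains}
    dblocks: {dblock volume name: hashes actually inside the volume};
             opening one of these stands in for a slow download
    Returns (found, volumes_opened, missing).
    """
    # Fast path: build a hash -> volume lookup from the small dindex files.
    index = {h: vol for vol, hashes in dindex.items() for h in hashes}
    found = {h: index[h] for h in needed if h in index}
    remaining = {h for h in needed if h not in index}

    # Slow path: open dblock volumes until every remaining block is found.
    # A block that is truly gone means every volume gets opened.
    opened = 0
    for vol, hashes in dblocks.items():
        if not remaining:
            break
        opened += 1
        hits = remaining & set(hashes)
        for h in hits:
            found[h] = vol
        remaining -= hits
    return found, opened, sorted(remaining)


# Example: b2's dindex is missing, and block h3 exists nowhere.
found, opened, missing = locate_blocks(
    needed=["h1", "h2", "h3"],
    dindex={"b1": ["h1"]},
    dblocks={"b1": ["h1"], "b2": ["h2"], "b3": ["h4"]},
)
print(found, opened, missing)
```

With a complete set of dindex files the slow path never runs. Here, because h3 can’t be found anywhere, every volume gets opened, and with real remote volumes each "open" is a download, which is how one missing block can make a Recreate crawl.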

So – if you copied the DB before the Recreate, there’s a chance it can be fixed, or at least shed light.

Otherwise, there’s a chance that hiding the most recent dlist (by adding a prefix to its name) may help Recreate. However, if it gets to Pass 3 of 3 again, you probably don’t want to wait until the end if it’s still that slow.
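If the backup files happen to be reachable as a local folder (a downloaded copy, or a synced folder), the "hide the most recent dlist" step could be scripted roughly as below. It’s a sketch under assumptions: the default `duplicati-` prefix, the usual `duplicati-<timestamp>.dlist...` naming, and the expectation that a file whose name no longer starts with `duplicati-` gets ignored. `hide_latest_dlist`, `unhide`, and the `hidden-` prefix are hypothetical choices. On Google Drive itself, renaming the file in the web view is the equivalent manual step.

```python
import os
import re

# Assumed dlist naming with the default prefix, e.g.
#   duplicati-20240104T020000Z.dlist.zip.aes
DLIST = re.compile(r"^duplicati-(\d{8}T\d{6}Z)\.dlist\.")


def hide_latest_dlist(folder, prefix="hidden-"):
    """Rename the newest dlist in folder so it no longer matches the
    duplicati- naming. Returns (old_name, new_name), or None if none found."""
    dlists = [n for n in os.listdir(folder) if DLIST.match(n)]
    if not dlists:
        return None
    # The yyyyMMddTHHmmssZ stamp sorts as text in time order, so max() is newest.
    latest = max(dlists, key=lambda n: DLIST.match(n).group(1))
    hidden = prefix + latest
    os.rename(os.path.join(folder, latest), os.path.join(folder, hidden))
    return latest, hidden


def unhide(folder, hidden, original):
    """Undo the rename if the experiment doesn't help."""
    os.rename(os.path.join(folder, hidden), os.path.join(folder, original))
```

Calling `hide_latest_dlist` on the folder and keeping the returned pair makes it easy to put the name back with `unhide` if Recreate still struggles.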

There are some errors, though, that actually benefit from searching all the way. Did you run Canary? That had dindex and other problems for a while. Beta is safest for important backups (but is not perfect). Experimental has been pretty good recently, because it’s been a pre-Beta (largely to test upgrading).