I thought I would provide some feedback here because, for some months and across different Canary releases, one of my servers (running Fedora 42) has reported this same issue every few days for one of its backups. However, this backup uses an SMB share on a local Windows Server 2025 machine as its destination, not an offsite one as reported above.

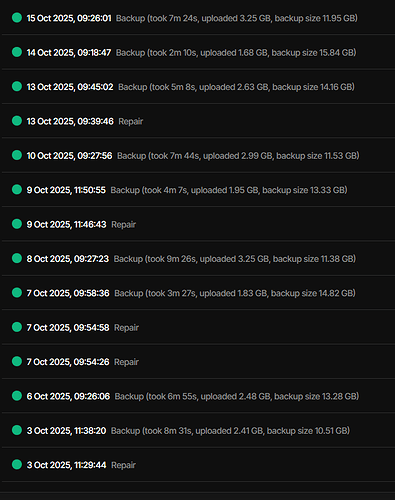

I waited until this morning for the backups to complete after updating to .105 and this is what it reported:

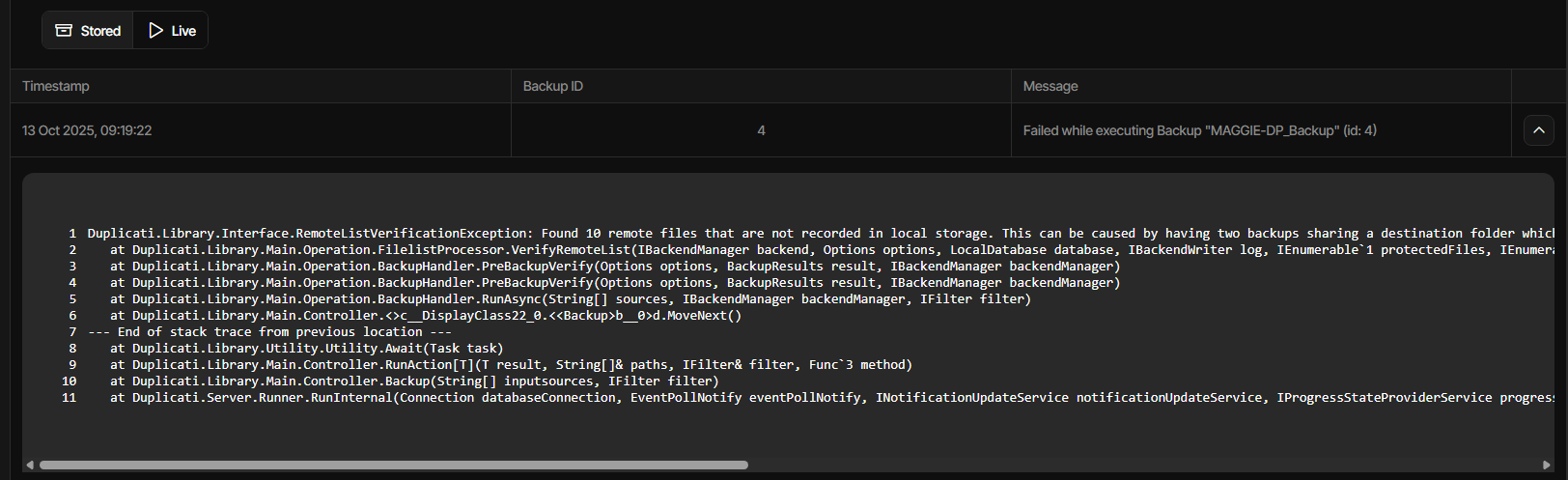

Failed: Found 10 remote files that are not recorded in local storage. This can be caused by having two backups sharing a destination folder which is not supported. It can also be caused by restoring an old database. If you are certain that only one backup uses the folder and you have the most updated version of the database, you can use repair to delete the unknown files.

Details: Duplicati.Library.Interface.RemoteListVerificationException: Found 10 remote files that are not recorded in local storage. This can be caused by having two backups sharing a destination folder which is not supported. It can also be caused by restoring an old database. If you are certain that only one backup uses the folder and you have the most updated version of the database, you can use repair to delete the unknown files.

at Duplicati.Library.Main.Operation.FilelistProcessor.VerifyRemoteList(IBackendManager backend, Options options, LocalDatabase database, IBackendWriter log, IEnumerable`1 protectedFiles, IEnumerable`1 strictExcemptFiles, Boolean logErrors, VerifyMode verifyMode, CancellationToken cancellationToken)

at Duplicati.Library.Main.Operation.BackupHandler.PreBackupVerify(Options options, BackupResults result, IBackendManager backendManager)

at Duplicati.Library.Main.Operation.BackupHandler.PreBackupVerify(Options options, BackupResults result, IBackendManager backendManager)

at Duplicati.Library.Main.Operation.BackupHandler.RunAsync(String[] sources, IBackendManager backendManager, IFilter filter)

at Duplicati.Library.Main.Controller.<>c__DisplayClass22_0.<<Backup>b__0>d.MoveNext()

--- End of stack trace from previous location ---

at Duplicati.Library.Utility.Utility.Await(Task task)

at Duplicati.Library.Main.Controller.RunAction[T](T result, String[]& paths, IFilter& filter, Func`3 method)

The share is a unique folder dedicated to this server, so the destination is not shared with another server. The main share is `\\LISA\MAGGIE`, and two backups on this server use it. The first uses `\\LISA\MAGGIE\DUPLICATI\LOCAL\` as its destination; it rarely fails, and so far never like this. The second uses `\\LISA\MAGGIE\DUPLICATI\DP`. I have things scheduled so that when the first backup finishes, it starts the second one from an after-job script. The second job just backs up the database of the first job.

If I run a repair and then re-run the backup, it usually works, but I’ve had times when it needed a second repair. In the case above, it worked after a single repair.
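As a rough sketch only, the manual repair-then-retry routine described above could be automated along these lines. `run_backup` and `run_repair` are hypothetical placeholders for whatever starts the job and the repair on a given system; they are not Duplicati APIs, and the limit of two repairs is an assumption based on the worst case mentioned above.

```python
from typing import Callable


def backup_with_repair(
    run_backup: Callable[[], bool],   # hypothetical: returns True if the backup succeeded
    run_repair: Callable[[], None],   # hypothetical: runs a repair on the same job
    max_repairs: int = 2,             # assumed limit; the post mentions needing up to two
) -> int:
    """Run the backup; on failure, repair and retry.

    Returns the number of repairs that were needed.
    Raises RuntimeError if the backup still fails after max_repairs repairs.
    """
    repairs = 0
    while not run_backup():
        if repairs == max_repairs:
            raise RuntimeError(f"backup still failing after {repairs} repair(s)")
        run_repair()
        repairs += 1
    return repairs
```

In practice the two callables would wrap whatever commands are used to launch the backup and the repair, and the returned count could be logged to see how often a second repair is really needed.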

My prediction is that it will probably be fine.

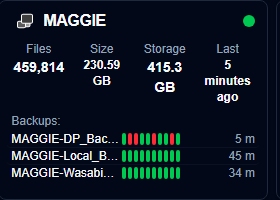

I just realised that the image shows 3 backups. Only two of them use the share, and the run order is Local (scheduled), Wasabi (scripted), DP (scripted), so there is quite a gap between the two backups that use the SMB share.