Hi,

I’ve installed Duplicati 2.0.6.3 in a Docker container (with the official image) on my Synology NAS. Before I had installed the same version natively, but I had to remove Duplicati because it filled up my system partition completely and my NAS got stuck almost fully (I was very lucky to be able to SSH into my NAS after many restarts; the restart after disconnecting my USB devices made me able to SSH into my NAS and delete the sqlite file of 1,3 GB…). Anyway, because of this experience I decided to use Duplicati in a containerized way.

However, my first backup goes extremely slow. When using Duplicati natively I got less than 1 MB/s. I thougt the limited size of my system partition was the evildoer, but it’s not that much better the Docker way: 1,11 - 1,24 MB/s. I backup to a local, though external disk (through USB 2.0). Admitted, I do use encryption (AES), as configured in Duplicati.

I have no RAM issues; CPU usage is okay most of the time, although my NAS can get busy from time to time (however, most of the time it has enough resources available).

I have about 11 TB to backup. Yes, that’s quite a lot, but this amount will certainly increase to 15 and 20 TB within the next 2 or 3 years. I backup to a 18 TB HDD (Seagate) in a 4 bay external disk enclosure (Icy Box). With the current speed it would take 4 to 5 months (!!!) to finish my first backup!

With Hyper Backup (from Synology) that only took a few days! I stopped using Hyper Backup though, because after a few backups the repository got corrupted for one reason or another… So I decided to try on Duplicati (also because OneDrive for Business works with Duplicati!).

FWIW: my remote volume size was 50 MB w<hen using Duplicati natively; now, in Docker, it’s 200 MB.

How can I troubleshoot this? Is this expected behavior? Any tips? Advice? Solutions?

Thanks a lot!

Regards,

Pedro

yes this is expected behaviour, but not duplicatis fault.

its synology and most NAS in general.

they are incrible slow, specially when it comes to large amounts of files. linux in general isnt that great with that, add samba, a slow NAS processor and not many disks and you have a desaster on ur hands

ur icybox jsut makes things worse. what do you expect from an USB enclosure ?

why synology backup works (a tiny bit) better? well because they upload into one file kinda huge junks (but that has a hole lot other issues in the long run) thats easier to digest for that little NAS but still far from good.

best advise i can give. toss those NAS into the bin, no not resell it, they are cursed, bad karma

build ur own nas with an enclosure that can hold all ur disks. even a 10 year old I3 is faster and mroe relyable. for easier handeling you can use FreeNAS

however in any case you can mount ur nas as an ISCSI device instead of an SMB share, that will reduce overhead and makes it a bit better. however that icybox enclosure will make you trouble, a lot.

also careful about the raid level. i assume youll use big consumer disks. dont ever use any kind of RAID5 with that. it will fail even with just one dead disks. either take the risk and use pure stripeset (and gain vperformance) or use freenas or another BSD based nas software that supports raidz

zfs raid will work contrary to raid5, reason is the expecture URE failure rate on large consumer drives is to high to gurantee recovery on a raid5 and with one error the hole array fails. data would be largly recoverable but only manually. so just dont

Synology has a wide variety of models at various price points. They are certainly not all slow. What model NAS are you using? How much RAM does it have?

For such a large backup, it might be best to split it out into different jobs. At a minimum you would want to increase the deduplication block size. The default of 100KB is not suitable for such a huge backup. A rule of thumb we sometimes suggest on the forum is to take your total backup size and divide by 1 million to calculate a recommended block size. For instance, a 10TB backup should probably use a 10MB dedupe block size. Note that you must set this value before your first backup.

That being said, I don’t believe your dedupe block size is the cause of your performance issues. I’m curious to find out the model.

Also, is “enable resource limitation” configured on the container?

Hi,

Thank you for the very useful information!

I have a DS2411+ (12 bay) with an extra 2 GB RAM module (so 3 GB RAM in total). RAM usage is (very) good; CPU usage is, well it depends: sometimes good, sometimes quite high, but it’s not sitting there constantly at 90+% or so.

My Duplicati Docker container uses 34% of the CPU and about 100 MB of RAM (according to Docker). The mono process is the big “evildoer” of course.

I have no resource limitation enabled, as I wanted to give the container a lot of room to do its thing. Is there a reason I should enable this for better Duplicati performance?

The dedup block size is the default I guess: 100 KB. However, I can’t find an environment var to change this. What’s the best way to change this in my particular case (so using the official Duplicati container image)? I have changed stuff inside containers before, but always as a last resort (that’s why I ask about a potential env var).

Thanks again for your help! This is very useful to me!

Grtz!

Pedro

No, I wouldn’t… I was just making sure it wasn’t set and possibly causing the issue.

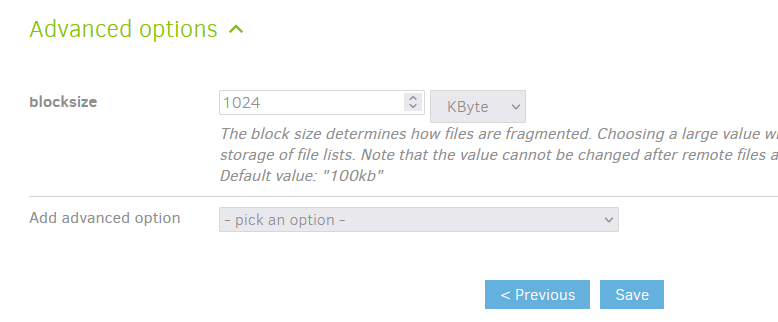

Most settings are configured through the Duplicati web UI. In the case of the deduplication block size, it’s an advanced setting you configure on page 5 of your backup job config. Click the “add advanced option” dropdown and find “blocksize” in the Core options seciton. Then you’ll be able to configure it:

You can read here for more information on this and other size settings: Choosing Sizes in Duplicati - Duplicati 2 User's Manual

Try starting over with a small set of data to back up and confirm that it can complete in a timely fashion and not be too slow. You can then add more data to the backup job (by selecting additional folders) as desired. Or you can split up your backups into multiple jobs if that makes sense for your scenario.

Hi,

Changing the dblock size has certainly improved the situation. However, it’s still much slower than Hyper-Backup (I’ve tested with a plethora of dblock and remote volume sizes). I’m going to split up my backups in such a way that the local backups will occur with Hyper Backup and my (smaller) backups to OneDrive for Business with Duplicati.

With Duplicati, after finding the (approximately) “right” size values, I could achieve speeds of 3 MB/s, max. 4. With Hyper Backup I’m able to double this. OneDrive for Business is another story: Duplicati is able to get 500-700 KB/s. I can’t use Hyper Backup for this, as OneDrive for Business is only supported through a 3rd party proxy (basic-to-sharepoint-auth-http-proxy, which should work), but not in MFA cases (yes, yes, app passwords, but it doesn’t work in this situation). It does work however in Duplicati (although the type “Microsoft OneDrive v2” should be used: it seems the specific OneDrive for Business type isn’t valid anymore according my tests and a source on the Net).

With all this information I should be able to have backups of different types on different locations (local + cloud, online + offline, at home and remote location, etc. etc.). It’s not my ideal setup, but to achieve this I’m afraid I have to upgrade to stuff of a different level (redundant servers, fibre, remote servers, high class NICs/disks/…). What I can do now however is good enough and should cover my needs in a goog to very good way (but as I said, not close-to-perfect). In a nutshell: I could have hoped for more, but in the end I’m satisfied

Thanks for your help, guys! Especially the dblock size tip has made a difference, although at the end I’ll still be using Hyper Backup for local backups because of better performance. But as I said, for OneDrive for Business it will be a great help!

Ciao!

Pedro

I don’t know if Duplicati can achieve the same performance level as HyperBackup as it probably works quite differently under the hood. There is some room for performance improvement in Duplicati: some operations are limited to a single thread, some database queries could be optimized, etc. That being said, if you have time to experiment you could mess around with the concurrency-xxxxx options to see if tweaking those makes any difference for you.

Hi,

concurrency-max-threads already has a default value of 0 and should use the existing hardware capabilities for good performance. I’ve changed this to a fixed number of threads, but I see degradation instead of improvement.

concurrency-block-hashers and concurrency-compressors have a default value of 2. Increasing those to 4 doesn’t seem to make a difference (or at least not a noticeable or noteworthy difference).

I’m not saying that improvements are not possible, but at first glance I don’t succeed. I suspect (very) small improvements can be found, when I would test in a very fine grained way with many, many combinations of different parameter values and in-depth analysis of resource usage, but I don’t think it’s worthwhile for me to do this right now (I’ve already tested multiple value combinations and I don’t see any “opening”…).

However, thanks a lot for your help and insights, @drwtsn32! I’ve decided to use Duplicati for certain backup types (i.e. towards OneDrive for Business), so I’m certainly in (although partly)

Greetz,

Pedro