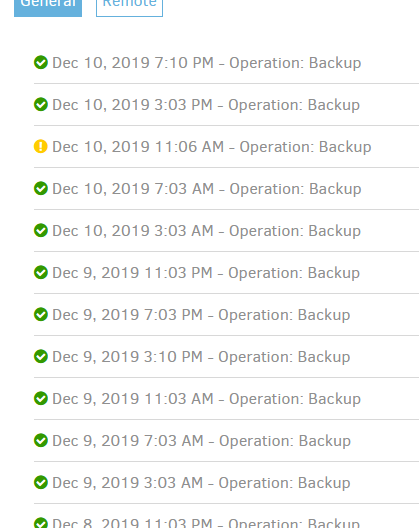

I have a couple of warnings and a couple of errors that show up on two of my backups. They appear even though those backups report that they finished “successfully”. I would like to make sure that what I’m seeing is not serious, or at least doesn’t affect the reliability of the backups.

First, the WARNING. I’m pretty sure it’s innocuous, because the message seems to be about storing empty metadata rather than about the file itself, but I’m not certain. In any case, here is an example of the type of warning I’m seeing:

Nov 27, 2019 12:02 AM: Result

DeletedFiles: 1

DeletedFolders: 0

ModifiedFiles: 1

ExaminedFiles: 36027

OpenedFiles: 12

AddedFiles: 11

SizeOfModifiedFiles: 103710

SizeOfAddedFiles: 982714

SizeOfExaminedFiles: 20194206331

SizeOfOpenedFiles: 1086424

NotProcessedFiles: 0

AddedFolders: 0

TooLargeFiles: 0

FilesWithError: 0

ModifiedFolders: 0

ModifiedSymlinks: 0

AddedSymlinks: 0

DeletedSymlinks: 0

PartialBackup: False

Dryrun: False

MainOperation: Backup

CompactResults:

DeletedFileCount: 2

DownloadedFileCount: 0

UploadedFileCount: 0

DeletedFileSize: 1914

DownloadedFileSize: 0

UploadedFileSize: 0

Dryrun: False

MainOperation: Compact

ParsedResult: Warning

Version: 2.0.4.5 (2.0.4.5_beta_2018-11-28)

EndTime: 11/27/2019 12:01:21 AM (1574830881)

BeginTime: 11/27/2019 12:01:18 AM (1574830878)

Duration: 00:00:02.9266682

Messages: [

2019-11-27 00:00:00 -05 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started,

2019-11-27 00:00:09 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: (),

2019-11-27 00:00:27 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (717 bytes),

2019-11-27 00:01:03 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b4b659c409149408ab68b093bfc360440.dblock.zip.aes (667.76 KB),

2019-11-27 00:01:07 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b4b659c409149408ab68b093bfc360440.dblock.zip.aes (667.76 KB),

...

]

Warnings: [

2019-11-27 00:00:55 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.MetadataGenerator.Metadata-MetadataProcessFailed]: Failed to process metadata for "<<...>>\Documents\BookSmartData\kris birthday book\library\1ea72ce3-548a-4a82-91ac-2cc596a19988.original", storing empty metadata,

2019-11-27 00:00:55 -05 - [Warning-Duplicati.Library.Main.Operation.Backup.FileBlockProcessor.FileEntry-PathProcessingFailed]: Failed to process path: <<...>>\Documents\BookSmartData\kris birthday book\library\1ea72ce3-548a-4a82-91ac-2cc596a19988.original

]
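Every entry in the Messages, Warnings, and Errors lists above has the same visible shape: a local timestamp with a UTC offset, a bracketed `Level-Source-MessageId` tag, and the message text. The Python sketch below splits a line into those parts, which makes it easy to see, for example, that both warnings point at the same `.original` file. The regex is inferred from the pasted lines, not from any Duplicati documentation, and `parse_log_line` and `LogEntry` are hypothetical names.

```python
import re
from dataclasses import dataclass
from datetime import datetime

# Pattern inferred from the pasted log lines (an assumption, not a documented format):
# "<date> <time> <utc offset> - [<Level>-<Source>-<MessageId>]: <message>[,]"
LINE_RE = re.compile(
    r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) ([+-]\d{2}) - \[([^\]]+)\]: (.*?),?$"
)


@dataclass
class LogEntry:
    when: datetime
    level: str
    source: str
    message_id: str
    message: str


def parse_log_line(line: str) -> LogEntry:
    m = LINE_RE.match(line.strip())
    if m is None:
        raise ValueError(f"unrecognized log line: {line!r}")
    stamp, offset, tag, message = m.groups()
    # Turn "-05" into "-0500" so that %z can parse the UTC offset.
    when = datetime.strptime(stamp + offset + "00", "%Y-%m-%d %H:%M:%S%z")
    # Level is before the first hyphen, MessageId after the last one;
    # everything in between is the source component.
    level, rest = tag.split("-", 1)
    source, message_id = rest.rsplit("-", 1)
    return LogEntry(when, level, source, message_id, message)


if __name__ == "__main__":
    sample = (
        "2019-11-27 00:00:55 -05 - [Warning-Duplicati.Library.Main.Operation.Backup."
        "FileBlockProcessor.FileEntry-PathProcessingFailed]: Failed to process path: "
        r"<<...>>\Documents\BookSmartData\kris birthday book\library"
        r"\1ea72ce3-548a-4a82-91ac-2cc596a19988.original"
    )
    entry = parse_log_line(sample)
    print(entry.level, entry.message_id)  # Warning PathProcessingFailed
```

Feeding both warning lines through it shows they share the same path and differ only in `MessageId` (`MetadataProcessFailed` vs. `PathProcessingFailed`).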

And here is an example of the type of error I’m seeing:

DeletedFiles: 0

DeletedFolders: 0

ModifiedFiles: 0

ExaminedFiles: 61044

OpenedFiles: 0

AddedFiles: 0

SizeOfModifiedFiles: 0

SizeOfAddedFiles: 0

SizeOfExaminedFiles: 191264017084

SizeOfOpenedFiles: 0

NotProcessedFiles: 0

AddedFolders: 0

TooLargeFiles: 0

FilesWithError: 0

ModifiedFolders: 0

ModifiedSymlinks: 0

AddedSymlinks: 0

DeletedSymlinks: 0

PartialBackup: False

Dryrun: False

MainOperation: Backup

CompactResults: null

DeleteResults:

DeletedSets: []

Dryrun: False

MainOperation: Delete

CompactResults: null

ParsedResult: Error

Version: 2.0.4.5 (2.0.4.5_beta_2018-11-28)

EndTime: 12/1/2019 2:03:26 AM (1575183806)

BeginTime: 12/1/2019 2:03:09 AM (1575183789)

Duration: 00:00:16.6621065

Messages: [

2019-12-01 02:00:00 -05 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started,

2019-12-01 02:00:10 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: (),

2019-12-01 02:02:16 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (13.27 KB),

2019-12-01 02:03:09 -05 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-StartCheck]: Start checking if backups can be removed,

2019-12-01 02:03:09 -05 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-FramesAndIntervals]: Time frames and intervals pairs: 7.00:00:00 / 1.00:00:00, 28.00:00:00 / 7.00:00:00, 365.00:00:00 / 31.00:00:00,

...

]

Warnings: []

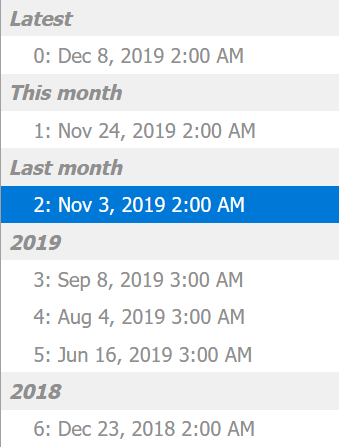

Errors: [

2019-12-01 02:06:33 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20190616T070000Z.dlist.zip.aes,

2019-12-01 02:07:51 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20190804T070002Z.dlist.zip.aes,

2019-12-01 02:09:10 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20190908T070000Z.dlist.zip.aes,

2019-12-01 02:10:31 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20191103T070003Z.dlist.zip.aes,

2019-12-01 02:11:50 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20191124T070000Z.dlist.zip.aes,

...

]

BackendStatistics:

RemoteCalls: 72

BytesUploaded: 0

BytesDownloaded: 790723838

FilesUploaded: 0

FilesDownloaded: 70

FilesDeleted: 0

FoldersCreated: 0

RetryAttempts: 24

UnknownFileSize: 0

UnknownFileCount: 0

KnownFileCount: 13584

KnownFileSize: 177480612080

LastBackupDate: 11/24/2019 2:00:00 AM (1574578800)

BackupListCount: 6

TotalQuotaSpace: 1104880336896

FreeQuotaSpace: 833139431124

AssignedQuotaSpace: -1

ReportedQuotaError: False

ReportedQuotaWarning: False

ParsedResult: Error

Version: 2.0.4.5 (2.0.4.5_beta_2018-11-28)

Messages: [

2019-12-01 02:00:00 -05 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started,

2019-12-01 02:00:10 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: (),

2019-12-01 02:02:16 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (13.27 KB),

2019-12-01 02:03:09 -05 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-StartCheck]: Start checking if backups can be removed,

2019-12-01 02:03:09 -05 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-FramesAndIntervals]: Time frames and intervals pairs: 7.00:00:00 / 1.00:00:00, 28.00:00:00 / 7.00:00:00, 365.00:00:00 / 31.00:00:00,

...

]

Warnings: []

Errors: [

2019-12-01 02:06:33 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20190616T070000Z.dlist.zip.aes,

2019-12-01 02:07:51 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20190804T070002Z.dlist.zip.aes,

2019-12-01 02:09:10 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20190908T070000Z.dlist.zip.aes,

2019-12-01 02:10:31 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20191103T070003Z.dlist.zip.aes,

2019-12-01 02:11:50 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20191124T070000Z.dlist.zip.aes,

...

]

RepairResults: null

TestResults:

MainOperation: Test

Verifications: [

Key: duplicati-20190616T070000Z.dlist.zip.aes

Value: [

Key: Error

Value: Hash mismatch on file "%TEMP%\dup-7d8fcbf4-3aad-4a98-9a43-85b1fc010b0f", recorded hash: UoGtUcOHV9AXWupkOzUW1p+ghPDVwPcq/3ylOu2uBO0=, actual hash L7dD/9ZL9HfT+SXIB22ixOIih1WpEh95+Xom012Y0F4=

],

Key: duplicati-20190804T070002Z.dlist.zip.aes

Value: [

Key: Error

Value: Hash mismatch on file "%TEMP%\dup-f7cb2c4e-90b9-41bf-8eaf-e336f85eddba", recorded hash: UUo6mknmU5uMn9KY3hRT6FkvYkGwPmuKyMJpY7fPEMs=, actual hash xGfSoVH/OBMJcgdBxeuKr1Udy2BzPjoMFXQLi8FqHSg=

],

Key: duplicati-20190908T070000Z.dlist.zip.aes

Value: [

Key: Error

Value: Hash mismatch on file "%TEMP%\dup-549d286e-1591-42c5-98a2-bd402ea2775b", recorded hash: Tblpj6iv2w4ddt+dUP56mkxB66KN4fm2bd7siFlgD1E=, actual hash ikkUhmd/3akqqGDc5uJXCqweoFwEeIW4dQqSaMeo9Qw=

],

Key: duplicati-20191103T070003Z.dlist.zip.aes

Value: [

Key: Error

Value: Hash mismatch on file "%TEMP%\dup-2da56840-61de-4dda-854a-fc216f78803d", recorded hash: OCDN3y9ZDpAwpXkqUdU2DTpZKEY3ScmQsmN7GrX/wsA=, actual hash S3atQ8MOQgRChlU5rl288KDbyYbPIEI93D9OgjeBlNI=

],

Key: duplicati-20191124T070000Z.dlist.zip.aes

Value: [

Key: Error

Value: Hash mismatch on file "%TEMP%\dup-a0da7821-8703-4b24-a803-982674c0df39", recorded hash: f8BPkSosFGEvr7fF+awqkmvTWx4UoVJqv9x7rdhTr8c=, actual hash y/qye18BuEX+KE0+cp6nzGR0L7JulbAT+2Xs+nvIu3c=

],

...

]

ParsedResult: Error

Version: 2.0.4.5 (2.0.4.5_beta_2018-11-28)

EndTime: 12/1/2019 2:25:49 AM (1575185149)

BeginTime: 12/1/2019 2:05:15 AM (1575183915)

Duration: 00:20:34.5288014

Messages: [

2019-12-01 02:00:00 -05 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started,

2019-12-01 02:00:10 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: (),

2019-12-01 02:02:16 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (13.27 KB),

2019-12-01 02:03:09 -05 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-StartCheck]: Start checking if backups can be removed,

2019-12-01 02:03:09 -05 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-FramesAndIntervals]: Time frames and intervals pairs: 7.00:00:00 / 1.00:00:00, 28.00:00:00 / 7.00:00:00, 365.00:00:00 / 31.00:00:00,

...

]

Warnings: []

Errors: [

2019-12-01 02:06:33 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20190616T070000Z.dlist.zip.aes,

2019-12-01 02:07:51 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20190804T070002Z.dlist.zip.aes,

2019-12-01 02:09:10 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20190908T070000Z.dlist.zip.aes,

2019-12-01 02:10:31 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20191103T070003Z.dlist.zip.aes,

2019-12-01 02:11:50 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20191124T070000Z.dlist.zip.aes,

...

]

ParsedResult: Error

Version: 2.0.4.5 (2.0.4.5_beta_2018-11-28)

EndTime: 12/1/2019 2:25:51 AM (1575185151)

BeginTime: 12/1/2019 2:00:00 AM (1575183600)

Duration: 00:25:51.1571890

Messages: [

2019-12-01 02:00:00 -05 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started,

2019-12-01 02:00:10 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: (),

2019-12-01 02:02:16 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (13.27 KB),

2019-12-01 02:03:09 -05 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-StartCheck]: Start checking if backups can be removed,

2019-12-01 02:03:09 -05 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-FramesAndIntervals]: Time frames and intervals pairs: 7.00:00:00 / 1.00:00:00, 28.00:00:00 / 7.00:00:00, 365.00:00:00 / 31.00:00:00,

...

]

Warnings: []

Errors: [

2019-12-01 02:06:33 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20190616T070000Z.dlist.zip.aes,

2019-12-01 02:07:51 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20190804T070002Z.dlist.zip.aes,

2019-12-01 02:09:10 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20190908T070000Z.dlist.zip.aes,

2019-12-01 02:10:31 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20191103T070003Z.dlist.zip.aes,

2019-12-01 02:11:50 -05 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-20191124T070000Z.dlist.zip.aes,

...

]
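All of the errors come from the verification step (`TestHandler`) and are hash mismatches on `.dlist.zip.aes` files. Each recorded/actual value is a 44-character base64 string that decodes to 32 bytes, the size of a SHA-256 digest. The sketch below assumes, without confirmation from the Duplicati source, that the recorded hash is a SHA-256 over the encrypted remote file exactly as uploaded (SHA-256 being, as far as I know, Duplicati's default file hash algorithm). Under that assumption it recomputes the hash for a copy downloaded manually from OneDrive, and it also decodes the UTC timestamp embedded in a dlist name. `dlist_time` and `base64_sha256` are hypothetical helpers, not part of Duplicati.

```python
import base64
import hashlib
from datetime import datetime, timezone


def dlist_time(name: str) -> datetime:
    """Decode the UTC timestamp in a name like duplicati-20191124T070000Z.dlist.zip.aes
    (naming pattern taken from the log above)."""
    stamp = name.split("-", 1)[1].split(".", 1)[0]  # -> "20191124T070000Z"
    return datetime.strptime(stamp, "%Y%m%dT%H%M%SZ").replace(tzinfo=timezone.utc)


def base64_sha256(path: str, chunk_size: int = 1 << 20) -> str:
    """Base64-encoded SHA-256 of a file, read in chunks so large volumes
    do not have to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return base64.b64encode(digest.digest()).decode("ascii")


if __name__ == "__main__":
    # The hash strings in the log decode to 32 bytes, the size of a SHA-256 digest.
    recorded = "f8BPkSosFGEvr7fF+awqkmvTWx4UoVJqv9x7rdhTr8c="
    print(len(base64.b64decode(recorded)))  # 32
    # The newest failing dlist matches LastBackupDate (1574578800) in the log.
    print(int(dlist_time("duplicati-20191124T070000Z.dlist.zip.aes").timestamp()))  # 1574578800
```

One way to use it: download one of the listed dlist files from OneDrive by hand and compare `base64_sha256(path)` with both values from its log entry. If it matches the “actual hash” again, the mismatch is reproducible and the stored file probably differs from what the local database expects; if it matches the “recorded hash”, the earlier download may simply have been damaged in transit. Note that the failing files include the most recent version (the one matching `LastBackupDate`), not just old ones.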

It seems related to the threads “Error Message following successful backup job”, “Database error backup”, and “Errors on backup”, but none of them seems to shed any light on this.

This is a backup to OneDrive. Should I be worried?