I edited the backup job to use an SFTP connection. I ran the backup and got that error message. Do you think it makes more sense to just run the backup straight up, and as long as there are no errors, I’m good?

Yes. I did this already, running the backup with an SFTP connection. I got the error message saying to rebuild the dblock files or purge the files.

Odd, that message comes from Repair. Maybe you set the auto-cleanup advanced option. Regardless, the backup is not totally happy. Turning on the rebuild-missing-dblock-files advanced option for another Repair might help, but that option usually doesn’t work well, which is why it’s not on by default. It may do better on an initial backup.

The message about the purge command is probably talking about the purge-broken-files flavor of it.

Recovering by purging files talks about it. Basically source files whose data is missing get removed from the backup, and next backup will back them up again. I don’t know which method would be faster to try.

One problem with just going for it is that we don’t understand what went wrong with the previous backup which possibly had upload problems too (or Synology is losing files), as there seem to be missing files.

Right. The rebuild-missing-dblock-files option didn’t work.

That option goes with Repair, not Backup, but your backup seemingly ran a repair somehow. Maybe through the auto-cleanup option I named.

Pushing the Repair button might be more reliable, but it sounds like you might be doing the purge plan.

I think I’m just going to run the backend test now with the SFTP setup. I will share results. I think that makes sense. The only thing is that in order to get SFTP on my Synology, I needed to create a new shared folder. For some reason, I can’t just add SFTP access to the folder I used for the last test.

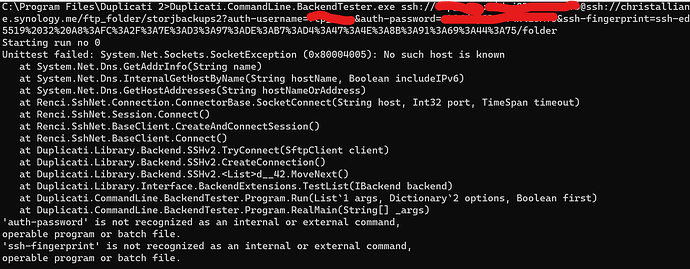

Oh boy. I tried to run the SFTP backup, straight up. It didn’t work. Then I deleted the backup profile and added it again, with SFTP. Still got an error. Tried to run the backend test on the new profile and got an error.

I just want to back up my files.

This isn’t shown, but if you added it again with the same destination, it will complain about extra files, because the new job database expects a fresh start. You would use a clean folder for a brand new job.

Please say what you did and what you got, if you want more explanation on that run.

For the BackendTester run, you seem to have mangled your URL, maybe while editing it to point at an empty folder.

SFTP (SSH) manual says format is like:

URL Format:

ssh://hostname/folder

but yours shows ssh:// twice. I exported one of my actual test jobs and edited some. It looks like

ssh://servername/folderpath?auth-username=REDACTED&auth-password=REDACTED&ssh-fingerprint=REDACTED"

So if you then do the Export As Command-line it “should” come out in just the right format, so then you’d edit the folder path from the active destination to an empty folder, just like you did for WebDAV.

Your URL seems wrong. ‘ssh://’ appears twice; it should appear only once, at the beginning of the URL. How did you get it? By exporting from the Web UI as a command line?

There’s also an at-sign @ that got in somehow. We can’t see it all, but I posted explanation earlier.

EDIT 1:

I’m also not seeing the double quotes around the whole URL, which are necessary as said here.

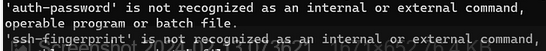

The lack of double quotes makes the Command Prompt think you’re trying to run more commands.

EDIT 2:

![]()

is (as documented) looking for what follows the ssh:// to be a published host name that it can use.

Whatever’s under the red on the first ssh:// is seemingly not, but on the second ssh:// it may be.

Maybe everything to the left of that second one doesn’t belong? Clean up that URL, double quote it.

Part with second ssh:// “appears” close to the format that I described plus required extras, except:

The required extras to the right of the question mark should be left alone. Folder is to the left of that.

to repeat, and please also use double quotes around the whole URL to protect against ampersands.

The Export As Command-line actually quotes it for you, but it looks like I accidentally edited one off.
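The URL checks described above can be sketched in a few lines of Python. This is only an illustration of the advice in this thread, not part of Duplicati: the function names `check_destination_url` and `quote_for_command_prompt` are made up here, and the placeholder values `USER`, `PASS` and `FP` are hypothetical. Flagging the `@` assumes the exported format shown earlier, where the username travels in `auth-username` rather than in front of the host name.

```python
from typing import List
from urllib.parse import urlsplit


def check_destination_url(url: str) -> List[str]:
    """Return a list of problems found in an ssh:// destination URL."""
    problems = []
    # The scheme should appear exactly once, at the very start.
    if not url.startswith("ssh://") or url.count("ssh://") != 1:
        problems.append("'ssh://' should appear exactly once, at the start")
    parts = urlsplit(url)
    # Assumption from this thread: no '@' is expected in the host part.
    if "@" in parts.netloc:
        problems.append("unexpected '@' in the host part")
    # The folder sits between the host name and the question mark.
    if not parts.path.strip("/"):
        problems.append("no folder path between the host and the '?'")
    return problems


def quote_for_command_prompt(url: str) -> str:
    """Wrap the URL in double quotes so '&' is not read as a command separator."""
    return '"' + url + '"'


good = "ssh://servername/folderpath?auth-username=USER&auth-password=PASS&ssh-fingerprint=FP"
bad = "ssh://user@ssh://servername/folderpath?auth-username=USER"

print(check_destination_url(good))
print(check_destination_url(bad))
print(quote_for_command_prompt(good))
```

The first URL should come back with an empty problem list, while the second should be flagged both for the repeated `ssh://` and for the `@`.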

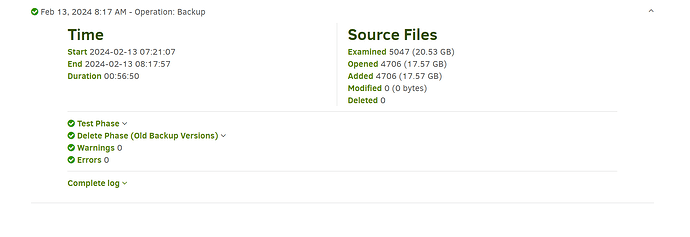

HEEEEEEYYYYYYY! I got a successful backup! No errors! I don’t know what happened, but for some reason it worked!

EDIT:

{

“DeletedFiles”: 0,

“DeletedFolders”: 0,

“ModifiedFiles”: 0,

“ExaminedFiles”: 5047,

“OpenedFiles”: 4706,

“AddedFiles”: 4706,

“SizeOfModifiedFiles”: 0,

“SizeOfAddedFiles”: 18860686175,

“SizeOfExaminedFiles”: 22045495239,

“SizeOfOpenedFiles”: 18860686175,

“NotProcessedFiles”: 0,

“AddedFolders”: 3413,

“TooLargeFiles”: 0,

“FilesWithError”: 0,

“ModifiedFolders”: 0,

“ModifiedSymlinks”: 0,

“AddedSymlinks”: 0,

“DeletedSymlinks”: 0,

“PartialBackup”: false,

“Dryrun”: false,

“MainOperation”: “Backup”,

“CompactResults”: null,

“VacuumResults”: null,

“DeleteResults”: {

“DeletedSetsActualLength”: 0,

“DeletedSets”: [],

“Dryrun”: false,

“MainOperation”: “Delete”,

“CompactResults”: null,

“ParsedResult”: “Success”,

“Version”: “2.0.7.1 (2.0.7.1_beta_2023-05-25)”,

“EndTime”: “2024-02-13T13:13:50.2689916Z”,

“BeginTime”: “2024-02-13T13:13:49.780353Z”,

“Duration”: “00:00:00.4886386”,

“MessagesActualLength”: 0,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 0,

“Messages”: null,

“Warnings”: null,

“Errors”: null,

“BackendStatistics”: {

“RemoteCalls”: 795,

“BytesUploaded”: 15235075496,

“BytesDownloaded”: 53133479,

“FilesUploaded”: 584,

“FilesDownloaded”: 3,

“FilesDeleted”: 113,

“FoldersCreated”: 0,

“RetryAttempts”: 93,

“UnknownFileSize”: 0,

“UnknownFileCount”: 0,

“KnownFileCount”: 815,

“KnownFileSize”: 21128987661,

“LastBackupDate”: “2024-02-13T07:21:07-05:00”,

“BackupListCount”: 3,

“TotalQuotaSpace”: 0,

“FreeQuotaSpace”: 0,

“AssignedQuotaSpace”: -1,

“ReportedQuotaError”: false,

“ReportedQuotaWarning”: false,

“MainOperation”: “Backup”,

“ParsedResult”: “Success”,

“Version”: “2.0.7.1 (2.0.7.1_beta_2023-05-25)”,

“EndTime”: “0001-01-01T00:00:00”,

“BeginTime”: “2024-02-13T12:21:07.3977149Z”,

“Duration”: “00:00:00”,

“MessagesActualLength”: 0,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 0,

“Messages”: null,

“Warnings”: null,

“Errors”: null

}

},

“RepairResults”: null,

“TestResults”: {

“MainOperation”: “Test”,

“VerificationsActualLength”: 3,

“Verifications”: [

{

“Key”: “duplicati-20240213T122107Z.dlist.zip.aes”,

“Value”:

},

{

“Key”: “duplicati-i40b576ef4294419f953d985dacbc1264.dindex.zip.aes”,

“Value”:

},

{

“Key”: “duplicati-bdb7d6feb0bb3403f9cc1aed3527a6700.dblock.zip.aes”,

“Value”:

}

],

“ParsedResult”: “Success”,

“Version”: “2.0.7.1 (2.0.7.1_beta_2023-05-25)”,

“EndTime”: “2024-02-13T13:17:57.2321111Z”,

“BeginTime”: “2024-02-13T13:17:27.81259Z”,

“Duration”: “00:00:29.4195211”,

“MessagesActualLength”: 0,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 0,

“Messages”: null,

“Warnings”: null,

“Errors”: null,

“BackendStatistics”: {

“RemoteCalls”: 795,

“BytesUploaded”: 15235075496,

“BytesDownloaded”: 53133479,

“FilesUploaded”: 584,

“FilesDownloaded”: 3,

“FilesDeleted”: 113,

“FoldersCreated”: 0,

“RetryAttempts”: 93,

“UnknownFileSize”: 0,

“UnknownFileCount”: 0,

“KnownFileCount”: 815,

“KnownFileSize”: 21128987661,

“LastBackupDate”: “2024-02-13T07:21:07-05:00”,

“BackupListCount”: 3,

“TotalQuotaSpace”: 0,

“FreeQuotaSpace”: 0,

“AssignedQuotaSpace”: -1,

“ReportedQuotaError”: false,

“ReportedQuotaWarning”: false,

“MainOperation”: “Backup”,

“ParsedResult”: “Success”,

“Version”: “2.0.7.1 (2.0.7.1_beta_2023-05-25)”,

“EndTime”: “0001-01-01T00:00:00”,

“BeginTime”: “2024-02-13T12:21:07.3977149Z”,

“Duration”: “00:00:00”,

“MessagesActualLength”: 0,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 0,

“Messages”: null,

“Warnings”: null,

“Errors”: null

}

},

“ParsedResult”: “Success”,

“Version”: “2.0.7.1 (2.0.7.1_beta_2023-05-25)”,

“EndTime”: “2024-02-13T13:17:57.3457947Z”,

“BeginTime”: “2024-02-13T12:21:07.3977149Z”,

“Duration”: “00:56:49.9480798”,

“MessagesActualLength”: 1997,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 0,

“Messages”: [

“2024-02-13 07:21:07 -05 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started”,

“2024-02-13 07:21:07 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()”,

“2024-02-13 07:21:09 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (158 bytes)”,

“2024-02-13 07:21:09 -05 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Temporary: duplicati-20240212T234643Z.dlist.zip.aes”,

“2024-02-13 07:21:09 -05 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-20240212T234644Z.dlist.zip.aes”,

“2024-02-13 07:21:09 -05 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-KeepIncompleteFile]: keeping protected incomplete remote file listed as Uploading: duplicati-20240212T235949Z.dlist.zip.aes”,

“2024-02-13 07:21:09 -05 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-Remove incomplete file]: removing incomplete remote file listed as Uploading: duplicati-b814764eb82234314bfa152f805593dd9.dblock.zip.aes”,

“2024-02-13 07:21:09 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Started: duplicati-b814764eb82234314bfa152f805593dd9.dblock.zip.aes (49.94 MB)”,

“2024-02-13 07:21:09 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Completed: duplicati-b814764eb82234314bfa152f805593dd9.dblock.zip.aes (49.94 MB)”,

“2024-02-13 07:21:11 -05 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b3dabc714820c43afbe66f3ea2c3f7c12.dblock.zip.aes”,

“2024-02-13 07:21:11 -05 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-bad9ec0cb8773488886f58ef83b2e99d9.dblock.zip.aes”,

“2024-02-13 07:21:11 -05 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-Remove incomplete file]: removing incomplete remote file listed as Uploading: duplicati-bc67b2c665888492289f0dd70ac92684f.dblock.zip.aes”,

“2024-02-13 07:21:11 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Started: duplicati-bc67b2c665888492289f0dd70ac92684f.dblock.zip.aes (49.98 MB)”,

“2024-02-13 07:21:11 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Completed: duplicati-bc67b2c665888492289f0dd70ac92684f.dblock.zip.aes (49.98 MB)”,

“2024-02-13 07:21:14 -05 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b2c21911e9c37445c8d6791088e445466.dblock.zip.aes”,

“2024-02-13 07:21:14 -05 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-Remove incomplete file]: removing incomplete remote file listed as Uploading: duplicati-be130fc0fa8c14b5987f005a00a283b3e.dblock.zip.aes”,

“2024-02-13 07:21:14 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Started: duplicati-be130fc0fa8c14b5987f005a00a283b3e.dblock.zip.aes (49.96 MB)”,

“2024-02-13 07:21:14 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Completed: duplicati-be130fc0fa8c14b5987f005a00a283b3e.dblock.zip.aes (49.96 MB)”,

“2024-02-13 07:21:16 -05 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-Remove incomplete file]: removing incomplete remote file listed as Uploading: duplicati-bbfec2ecd509f4077be2f0ba1a64ccd02.dblock.zip.aes”,

“2024-02-13 07:21:16 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Delete - Started: duplicati-bbfec2ecd509f4077be2f0ba1a64ccd02.dblock.zip.aes (49.96 MB)”

],

“Warnings”: [],

“Errors”: [],

“BackendStatistics”: {

“RemoteCalls”: 795,

“BytesUploaded”: 15235075496,

“BytesDownloaded”: 53133479,

“FilesUploaded”: 584,

“FilesDownloaded”: 3,

“FilesDeleted”: 113,

“FoldersCreated”: 0,

“RetryAttempts”: 93,

“UnknownFileSize”: 0,

“UnknownFileCount”: 0,

“KnownFileCount”: 815,

“KnownFileSize”: 21128987661,

“LastBackupDate”: “2024-02-13T07:21:07-05:00”,

“BackupListCount”: 3,

“TotalQuotaSpace”: 0,

“FreeQuotaSpace”: 0,

“AssignedQuotaSpace”: -1,

“ReportedQuotaError”: false,

“ReportedQuotaWarning”: false,

“MainOperation”: “Backup”,

“ParsedResult”: “Success”,

“Version”: “2.0.7.1 (2.0.7.1_beta_2023-05-25)”,

“EndTime”: “0001-01-01T00:00:00”,

“BeginTime”: “2024-02-13T12:21:07.3977149Z”,

“Duration”: “00:00:00”,

“MessagesActualLength”: 0,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 0,

“Messages”: null,

“Warnings”: null,

“Errors”: null

}

}

EDIT 2:

I did this. I created a new shared folder with SFTP capability. I also created a brand new folder in that shared folder. I then ran the job. And got errors. But the errors were not described with one encapsulating description. The errors looked like this:

- Feb 12, 2024 7:04 PM: put duplicati-bbfec2ecd509f4077be2f0ba1a64ccd02.dblock.zip.aes
- Feb 12, 2024 7:04 PM: put duplicati-b814764eb82234314bfa152f805593dd9.dblock.zip.aes
- Feb 12, 2024 7:04 PM: put duplicati-bc67b2c665888492289f0dd70ac92684f.dblock.zip.aes
- Feb 12, 2024 7:04 PM: put duplicati-be130fc0fa8c14b5987f005a00a283b3e.dblock.zip.aes
- Feb 12, 2024 7:04 PM: put duplicati-b14ec62c809674cbbb89a6a2c6bfcd173.dblock.zip.aes
- Feb 12, 2024 7:03 PM: put duplicati-bd87b82218f9f4cf7b6c36b61056cfe83.dblock.zip.aes
- Feb 12, 2024 7:03 PM: put duplicati-b5978795de4664a8b9ad915b9429cd851.dblock.zip.aes
- Feb 12, 2024 7:03 PM: put duplicati-i5227bfb8fea7400e9f5064e7161ce2ce.dindex.zip.aes
- Feb 12, 2024 7:03 PM: put duplicati-ba50fab700ff54e0eb56aef9687b83d63.dblock.zip.aes
- Feb 12, 2024 7:03 PM: put duplicati-i0f454174aa434d588ee3c899feeff747.dindex.zip.aes
- Feb 12, 2024 7:03 PM: put duplicati-b69757c02b1f446c9a2f3d7bb21b46a9b.dblock.zip.aes
- Feb 12, 2024 7:03 PM: put duplicati-b75e96394de8b4f53920ae5fe84c0d5e5.dblock.zip.aes
- Feb 12, 2024 7:03 PM: put duplicati-bbd76ac6180434666b0c2c9f5c8111e96.dblock.zip.aes
- Feb 12, 2024 7:03 PM: put duplicati-bebe2d2ea1522413ca684e087ec1447ab.dblock.zip.aes
- Feb 12, 2024 7:03 PM: put duplicati-bc0ad679d3254411789b5ad1508855811.dblock.zip.aes
- Feb 12, 2024 7:03 PM: put duplicati-b2cbef3021e294042a0312bc6a91510d8.dblock.zip.aes
- Feb 12, 2024 7:03 PM: put duplicati-b539d918d35b0424e8c28bf8214411e5a.dblock.zip.aes
- Feb 12, 2024 7:03 PM: put duplicati-b844459a72c7b4d11b2c80f9e406e7978.dblock.zip.aes
- Feb 12, 2024 7:02 PM: put duplicati-b547ff2f4095f413387dfe5880073df5b.dblock.zip.aes
- Feb 12, 2024 7:02 PM: put duplicati-bf939e28981cd4299b5f60cd2c1d5c4b2.dblock.zip.aes
- Feb 12, 2024 7:02 PM: put duplicati-b4068248906bf4143bdb2884b96c950e6.dblock.zip.aes
- Feb 12, 2024 7:02 PM: put duplicati-b5acf551df1944eda8793f45d08482daa.dblock.zip.aes
- Feb 12, 2024 7:00 PM: delete duplicati-b527e552daea0413cbbf581488f03f923.dblock.zip.aes
- Feb 12, 2024 7:00 PM: delete duplicati-b2194bf65fd0a4deda6bae8ae43f1fdb9.dblock.zip.aes
- Feb 12, 2024 7:00 PM: delete duplicati-b416ca5b5df6c473282a5b627dd763816.dblock.zip.aes
- Feb 12, 2024 6:59 PM: delete duplicati-bf499238018254bb5972192d9eeda16e7.dblock.zip.aes
- Feb 12, 2024 6:59 PM: delete duplicati-b3cbcdd0c8257408ab9121885ed971aa5.dblock.zip.aes
- Feb 12, 2024 6:59 PM: delete duplicati-bbfb56f6e6631441fa46cae2a870a7a20.dblock.zip.aes
- Feb 12, 2024 6:59 PM: delete duplicati-bc617c1d50b0e4ddc989d8f03632ecb03.dblock.zip.aes
- Feb 12, 2024 6:59 PM: list

EDIT: But it’s working now. Running a second time to see if the fix sticks.

Good news for sure.

It looks like it must have been a rerun of something that failed, as it had to do some cleanups of files. Generally this should be fine, but it’s a little less certain than a fresh run. It also had transfer troubles:

If an upload or download fails, Duplicati will retry a number of times before failing. Use this to handle unstable network connections better.

so there’s probably still something not quite right (maybe network?), but at least retries saved it once. Increasing the value above 5 is OK if you have to. The 93 retries were probably on different transfers.

I don’t understand what you’re saying here.

EDIT: I understand the retries count number of 93, as it’s mentioned in the log. Set what value above 5? The 93 transfers were on the new backup profile? No? The successful one? No?

Getting a new issue. Backing up to Storj is causing my Malwarebytes to act up. It’s blocking outbound traffic from Duplicati (Duplicati.GUI.TrayIcon.exe).

Suggestions?

EDIT: Website blocked due to malware/riskware. Different IP addresses and port numbers. That’s what the error messages from Malwarebytes look like.

EDIT 2: The outbound connection by that file is being blocked. I never had Malwarebytes before reformatting. Maybe that’s why these error messages are new to me.

EDIT 3: I clicked the stop button “stop after current file finishes” and nothing happened. Then I waited around 5 minutes and nothing happened. I then clicked the button again, but this time the “Stop now” option. Getting the message that the system is waiting for the upload to finish. Seems hung up though.

The default number-of-retries, as documented in the manual, is 5. The manual says it’s per transfer.

The transfers (and possibly some list operations) had 93 retries among them, but no individual transfer needed more than 5.

If your network gets worse, raising the value above 5 under Advanced options on screen 5 (Options) might be a workaround.

Unlike the WebDAV case, you’re perhaps having upload errors this time, but retrying repeatedly gets there.
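To make the per-transfer point concrete, here is a small Python simulation. It is a sketch of the idea only, not Duplicati’s code: `run_transfer` is a made-up name, and the split of 31 transfers with 3 failed attempts each is invented so that the total comes to 93. The log only reports the total, not how the retries were distributed.

```python
from typing import Iterable


def run_transfer(attempt_results: Iterable[bool], number_of_retries: int = 5) -> int:
    """Simulate one transfer and return how many retries it used.

    attempt_results yields True for a successful attempt and False for a
    failed one. The retry limit applies to this single transfer only.
    """
    retries = 0
    for succeeded in attempt_results:
        if succeeded:
            return retries
        if retries == number_of_retries:
            raise RuntimeError("transfer failed after %d retries" % number_of_retries)
        retries += 1
    raise RuntimeError("ran out of simulated attempts")


# Hypothetical distribution: 31 transfers that each fail 3 times, then succeed.
flaky_transfers = [[False, False, False, True] for _ in range(31)]

total_retries = sum(run_transfer(attempts) for attempts in flaky_transfers)
print(total_retries)
```

Every simulated transfer stays under the limit of 5, so the job as a whole succeeds even though the retries add up across transfers. A single transfer that failed six times in a row would raise an error instead.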

Creating a new backup job has directions which also work for Editing an existing backup

Under Advanced options there is an extensive list of options to fine-tune your backup job. Click Pick an option and select which option you want to set. This option is added to a list where you can change the value of that option.

Only you can say where what you posted came from. I’m just quoting from the log that you posted at

Aren’t you supposed to be backing up FROM your drive mapped to Storj TO a NAS with SFTP? It’s difficult to follow what you are doing…

Added another profile. No worries. You got it right. I back up my mapped Storj drive both to Storj itself and to a Synology drive.

By ‘profile’, do you mean another Duplicati job, one where you are backing up a drive mapped to Storj to another directory on Storj? That’s another problem then. I am still not sure that your backup problem with the NAS is solved: 93 retries seems highly problematic (even over the Internet, that would be very unreliable), so it’s better to tackle the original problem first.
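For a rough sense of scale, the figures from the posted log can be put side by side. This is back-of-the-envelope arithmetic, assuming the top-level `Duration`, `BytesUploaded`, `RemoteCalls` and `RetryAttempts` values are the ones quoted in the log above; the duration also covers the verification phase, so the upload rate is only an approximation.

```python
def duration_to_seconds(duration: str) -> float:
    """Convert an 'HH:MM:SS.fraction' string into seconds."""
    hours, minutes, seconds = duration.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


# Values copied from the posted job log.
bytes_uploaded = 15235075496
remote_calls = 795
retry_attempts = 93
total_seconds = duration_to_seconds("00:56:49.9480798")

# Average upload rate over the whole job, in bytes per second.
average_rate = bytes_uploaded / total_seconds
# Retries relative to the number of remote calls.
retry_ratio = retry_attempts / remote_calls

print("average upload rate: %.2f MB/s" % (average_rate / 1e6))
print("retries per remote call: %.1f%%" % (retry_ratio * 100))
```

The ratio is only a crude indicator, since the log does not say which calls were retried.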

What did you install from after reformatting? It might also have come in with some other app installation. Windows Settings Apps listing might be able to give you a date, or maybe not, if it came in on Windows.

Vague. Maybe Malwarebytes support could make some sense of it, but here, some specifics might help. Ordinary website ports would be mostly 443 these days, maybe some still at 80. Storj nodes use 28967, although I think the node operator can choose other ports. There are many sites to check an IP address.

IP Blacklist Check is one. I don’t know if Malwarebytes uses lists, or is actually scanning on your traffic. While I wonder if network monitoring was impacting WebDAV, etc., it apparently wasn’t alerting about it.

Maybe because