What we have on disk are primarily file lists (dlist), which contain only hashes. The blocks those hashes refer to can be anywhere in the dblock files. The dindex files are just that: a way to relate lists and dblocks. When dindex files are missing, we need to read the dblock files to map their content back to the file lists.

The query I tried to optimize starts from temporary tables built from the blocks that are not known from the valid dindex files that were found, and puts the info back into the database tables that map remote data files to file lists. Blocks are read until the existing file lists can be mapped completely to remote data (volumes in Duplicati parlance). If enough file lists have been lost, not all block files will be read: some will be useless because they map to lost file lists. Short of that, all ‘not known’ blocks will be read.

Another possibility is that the damage is not old but new, made while the network was broken.

I tried lining up dindex and dblock files. Normally they’re in State Verified, but not currently, because bailing out early avoids that. I added State Uploaded, and numbers looked better.

Ideally I like IndexBlockLink rows to line up with Verified Index and Blocks. Here that works for Blocks, but not for Index. Are there extra Index? If so, how does that relate to the issue?

5772 IndexBlockLink

5769 Verified Index

11 Uploaded Index

5761 Verified Blocks

11 Uploaded Blocks

The above is just a numeric sanity check. If I suspect new damage, I’ll have to study exact new files.
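The counts above can be gathered with two small queries. This is a minimal sketch against a toy in-memory database, not the real job database: the `Remotevolume` and `IndexBlockLink` table names and the `Type`, `State` and `IndexVolumeID` columns come from this thread, while the `BlockVolumeID` column and all the rows are assumptions for illustration. In the real numbers, Blocks add up (5761 + 11 = 5772 links) while Index is 5769 + 11 = 5780, eight more than the link count.

```python
import sqlite3

def volume_counts(con):
    """Count Remotevolume rows per (Type, State) and count IndexBlockLink rows."""
    per_state = {
        (vol_type, state): n
        for vol_type, state, n in con.execute(
            'SELECT "Type", "State", COUNT(*) FROM "Remotevolume" '
            'GROUP BY "Type", "State"'
        )
    }
    links = con.execute('SELECT COUNT(*) FROM "IndexBlockLink"').fetchone()[0]
    return per_state, links

# Toy stand-in for the job database: 3 dblocks, 4 dindex (one of them extra).
# The BlockVolumeID column is an assumption; only IndexVolumeID appears in the thread.
con = sqlite3.connect(":memory:")
con.executescript("""
CREATE TABLE "Remotevolume" ("ID" INTEGER PRIMARY KEY, "Type" TEXT, "State" TEXT);
CREATE TABLE "IndexBlockLink" ("IndexVolumeID" INTEGER, "BlockVolumeID" INTEGER);
INSERT INTO "Remotevolume" VALUES
  (1, 'Blocks', 'Verified'), (2, 'Blocks', 'Verified'), (3, 'Blocks', 'Uploaded'),
  (4, 'Index', 'Verified'), (5, 'Index', 'Verified'), (6, 'Index', 'Uploaded'),
  (7, 'Index', 'Verified');
INSERT INTO "IndexBlockLink" VALUES (4, 1), (5, 2), (6, 3);
""")

per_state, links = volume_counts(con)
blocks = per_state[("Blocks", "Verified")] + per_state[("Blocks", "Uploaded")]
index = per_state[("Index", "Verified")] + per_state[("Index", "Uploaded")]
# A positive difference means there are index volumes with no link row.
print("links:", links, "blocks:", blocks, "index:", index, "extra index:", index - links)
```

Pointing `sqlite3.connect` at a copy of the job database instead of `:memory:` should give the real figures, assuming the schema matches.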

Database and destination internals was my attempt to describe the file formats. The above sentence is applicable (IIRC) to anything that’s looking for blocks and doesn’t have dindex mapping block to dblock.

The dlist in a blocklist situation references blocklists by hash, but usually dindex has copies in list folder. Are you thinking this is the sort of block it’s looking for, as it could also be second-level, as the blocklist references data and metadata blocks through hashes as well, and all of those things are in dblock files?

The rest of that post still seems to be talking about multi-block files, thus blocklists instead of small files that are single-block. Metadata is typically tiny, so single-block. I’ll have to read through this more times.

Yes, that’s how it works. I think what I would do is create a new copy of the container, update the Environment Variables, get the container running and then install the binaries.

I don’t have any hard limits set on my containers, so it will take whatever the host has available. I’ve got 16GB on the host, and I generally run at about 7.7GB used… so there’s some headroom there.

Yes… I’m on a Dell PowerEdge T320 with DDR3 ECC memory.

Yes, that’s accurate.

I’ve never run a canary build. I generally keep my version static until I get the notification in the front end that there’s an update.

If you run at verbose log level, the custom sqlite variable settings are logged (in this case the memory setting at 200 MB). The experimental dbbuild setting is not logged at all.

The eight extra dindex files are below, but from the tiny size I suspect (not sure) they’re harmless:

SELECT * FROM "Remotevolume" LEFT JOIN "IndexBlockLink" ON "IndexVolumeID" = "ID" WHERE "Type" = "Index" AND "IndexVolumeID" IS NULL

| ID | OperationID | Name | Type | Size | Hash | State | Roughly from |
|---|---|---|---|---|---|---|---|
| 8758 | 361 | duplicati-ieee0634f13e844dd9594e6746a55da0b.dindex.zip.aes | Index | 541 | obmkvFcL4/xvnCepVLaT5GTt7fky/t/HquNm9zqSDs0= | Verified | Feb 28, 2020 |
| 8759 | 361 | duplicati-i69813d34ef414ec599b60f26da3be11f.dindex.zip.aes | Index | 541 | bnqRYj9daaiVpCHGoMvXLX471gkMdWURgPIKdi2y2wg= | Verified | |
| 8760 | 361 | duplicati-if3b74b3f831e4419ab7be9ed59df6ffa.dindex.zip.aes | Index | 541 | 7015gMTPfMUf2o7g9B1ZfC7/QN1uc+CAe9qHaOgY528= | Verified | |
| 8761 | 361 | duplicati-i34741576f3c44c0cbc9c0f5fd6a25d40.dindex.zip.aes | Index | 541 | thTzbBNLprem/fM7IHvy/aRXRu1nTsERv8Y5V8/1iw0= | Verified | |
| 8762 | 361 | duplicati-ifd19934d04564a1c96d7a83ff3b03e52.dindex.zip.aes | Index | 541 | 35MDyBPpJfcu507953FCg9cOeBxzWjG8yNloBDZWvtU= | Verified | |
| 8960 | 384 | duplicati-i3d9177226a5c46368f4c9b72b549eee0.dindex.zip.aes | Index | 541 | Lh0EP0P83PPoHKEwix9zEhd/cm5+l2LOarwq4s9aNhA= | Verified | |
| 10017 | 426 | duplicati-i86a13fdc8c0445f58b26436815ee39be.dindex.zip.aes | Index | 541 | TGI4mNA7sCM5AlD/zOxeNCMARyBQ4bIXQn8HAZk7R90= | Verified | |
| 16179 | 584 | duplicati-i5628e66ebd234dc2b7f6aac9f9e5af9d.dindex.zip.aes | Index | 541 | hBLkrFQ2FPp4lJxM8X4YCahZgtFBYu49arw2nBafF6A= | Verified | Aug 22, 2020 |
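For anyone wanting to repeat that lookup on a copy of their own database, here is a minimal sketch that runs the same join from Python. The toy rows and file names are made up, and `BlockVolumeID` is an assumed column; only the table names and the other columns come from the query and listing above. One deliberate difference: the literal is written as `'Index'` in single quotes, because SQLite only treats a double-quoted `"Index"` as a string through a legacy fallback that some builds disable.

```python
import sqlite3

# Same join as the query above, with 'Index' as a single-quoted string literal.
UNLINKED_INDEX_SQL = """
SELECT "Remotevolume"."ID", "Name", "Size", "State"
FROM "Remotevolume"
LEFT JOIN "IndexBlockLink" ON "IndexVolumeID" = "Remotevolume"."ID"
WHERE "Type" = 'Index' AND "IndexVolumeID" IS NULL
ORDER BY "Remotevolume"."ID"
"""

def unlinked_index_volumes(con):
    """Return index volumes that have no row in IndexBlockLink."""
    return con.execute(UNLINKED_INDEX_SQL).fetchall()

# Toy data (hypothetical names): one dblock, one linked dindex, one unlinked dindex.
con = sqlite3.connect(":memory:")
con.executescript("""
CREATE TABLE "Remotevolume" (
  "ID" INTEGER PRIMARY KEY, "Name" TEXT, "Type" TEXT, "Size" INTEGER, "State" TEXT);
CREATE TABLE "IndexBlockLink" ("IndexVolumeID" INTEGER, "BlockVolumeID" INTEGER);
INSERT INTO "Remotevolume" VALUES
  (1, 'duplicati-b-example1.dblock.zip.aes', 'Blocks', 52428800, 'Verified'),
  (2, 'duplicati-i-example1.dindex.zip.aes', 'Index', 30000, 'Verified'),
  (3, 'duplicati-i-example2.dindex.zip.aes', 'Index', 541, 'Verified');
INSERT INTO "IndexBlockLink" VALUES (2, 1);
""")

rows = unlinked_index_volumes(con)
for row in rows:
    print(row)
```

Only the unlinked dindex row is returned; the dblock row also has no match in the join but is excluded by the `Type` filter.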

Although I’m still studying the code, the situation here looks like the list folder data was incomplete, meaning Duplicati would have to download dblocks to figure out what the value of the blocklist was.

I went searching in GitHub issues. I wonder if this 2020 fix which missed 2.0.5.1_beta_2020-01-18 and shipped in 2.0.6.3_beta_2021-06-17 might have avoided the dblock downloads? Time may tell, assuming database recreate is tested sometime. For old backup, a dindex file exam may shed light.

Yep, 541 byte dindex files are empty. Things that make you go, "Uh-oh." - #9 by drwtsn32

In my experience the newer version only prevents the problem going forwards. If you have a functioning database, you can do the trick where you delete the dindex files and have Duplicati regenerate correct ones. I did that on all my systems back in 2020 (after upgrading to a Canary release that included the fix you linked to). After that I’ve never seen a database recreation require dblocks.

I had been planning on trying out @gpatel-fr experimental rebuild code, but this sounds like it may be a better option?

I need some guidance here on how I should proceed.

Do you have a current healthy database? If yes, don’t try to rebuild it.

Do you have a current healthy database ? If yes, don’t try to rebuild it

@ts678 advised that I should do a rebuild in this post prior to running a backup job. Are you saying I should not have done that?

Are you saying I should not have done that?

Well, you have spent quite some time on fixing it by hand. If your fixes were correct AND you saved this database version, I’d say that it’s better to use it to restart backups. If you remember, I had given you a trick to ignore the blocking error. If you deleted the current database and did not back it up, well, you don’t have a lot of choice. If you first delete the index files, the database recreation will take forever.

I need some guidance here on how I should proceed.

I have not carefully read this entire thread, so I’m not sure this is the way you want to go or not. Judging from your first post it sounds like you may have other issues.

My recommendation applies when Duplicati is functioning fine: no database issues, and backup and restore jobs work. There is silent corruption of dindex files on the back end due, I believe, to the bug in older versions that was mentioned above. I say “silent” because you’ll never notice the problem UNLESS you do a database recreation. The silent dindex corruption will trigger Duplicati into downloading dblocks, something it shouldn’t have to do for a database recreation when everything is correct on the back end.

You can fix this silent corruption by doing the procedure I mentioned. I hesitate to link to a more detailed post since I’m thinking you have something else going on.

Please read what you pointed to, and I hope you did this:

I know you have backup of DB, but testing recreate may also add confidence that restore will work.

If you decide to do this, you can move your DB aside again (keep track of which is which). Repair.

Assuming you saved your old database, the test poses no risk and might detect issues (which it did).

Having given up on recreate as maybe not time-effective (and now that there’s a theory of its cause), you’ve now got several options to backtrack. One might use your “fixed” database, but I worried that because you took the ambitious method, you might not really have everything that the old log needs.

The conservative method would have been to just give up on the old log, which you may not care for.

Another option, which I don’t think was tested, is to take advantage of the May 31 database backup. It is possibly in good shape, without any inconsistencies that might have come in from the June 1 mess, which did manage to upload 5 dblock and 5 dindex files before the network died. Those files can be removed.

The idea of recreating all dindex files is interesting, as it avoids having to find the ones the old bug hurt…

Question is which database to revert to.

Ambitious manual fix may be bad. I’d prefer the conservative manual fix if we’re going to hack on a DB.

Since you have a DB backup from after the May 31 backup (right?), move and note the current DB once again, restore the May 31 database, and do a manual Verify to see which issue it notices first. It might notice the 10 extra files, and suggest a Repair which will delete them. Or it might complain of count inconsistency.

Do you have any good tools for the bucket? Without them, deleting dindex files might be quite a chore.

What I’d really prefer to see anyway is to not delete them (which is kind of permanent), but move them, e.g. to a subfolder whose name isn’t confusingly similar to a regular file’s (i.e. doesn’t start with duplicati-).

I’d rather not damage your very long-lived backup accidentally (but if you don’t care, then just delete it). How’s the replacement backup going BTW?

If your fixes were correct

Not proven.

If you deleted the current database and did not back it up

which is exactly what the Recreate button does. I’d sort of like to find a way to get a backup in there, however that begs the question of how much space to use, and what if someone tries several times?

Moving the old database aside and doing Repair is what I always do, as I still have the old database.

Judging from your first post it sounds like you may have other issues.

There are quite a few to disentangle. Extra files from June 1 network failure, inconsistency in database possibly caused by that, but can’t really say without good logs or testing to see how well May 31 works. Least-manual-hacks approach is May 31. I can say exactly which 10 files are June 1, or just look in B2.

I hope you did this:

I did indeed copy the live database and rename it, so I have that accessible.

move and note the current DB once again, restore the May 31 database, and do a manual Verify to see which issue it notices first.

I can do this, probably this evening.

Do you have any good tools for the bucket?

The only way I’ve ever interacted with data in my buckets is via Duplicati. I’m unaware of any other tools, but I’m certainly willing to learn. My goal here is, at minimum, to be able to reliably restore from that particular backup job. Perhaps best case would be to switch back to that particular job for daily backups, but I admit there is limited practical benefit of that approach (right?).

I’d rather not damage your very long-lived backup accidentally (but if you don’t care, then just delete it).

I definitely care. Protecting those backups, and making them functional for restore purposes, is my primary goal at this point.

How’s the replacement backup going BTW?

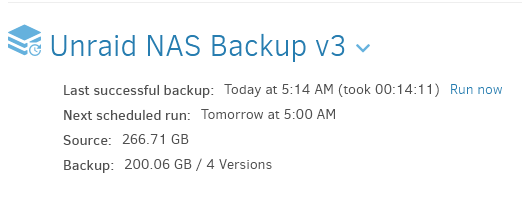

So far, so good:

So, my plan of attack at this point is:

- Take a copy of the current sqlite file

- Restore the May 31st sqlite file

- Run “Advanced > Verify files”

- Report back the results

Do I have that right?
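The first step of that plan (keeping a labelled copy of the database before swapping another one in) can be sketched as below. This is only an illustration under assumptions: the file name `EXAMPLE.sqlite`, the `copy_aside` helper and the label are hypothetical, and it presumes Duplicati is not writing to the database while the copy is made.

```python
import shutil
import tempfile
import time
from pathlib import Path

def copy_aside(db_path, label):
    """Copy a database file next to itself under a labelled, timestamped name."""
    src = Path(db_path)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    dst = src.with_name(f"{src.stem}.{label}.{stamp}{src.suffix}")
    if dst.exists():
        # Never overwrite an earlier copy; keep track of which is which.
        raise FileExistsError(dst)
    shutil.copy2(src, dst)  # copy2 also preserves the modification time
    return dst

# Demo on a throwaway file; EXAMPLE.sqlite is a hypothetical name.
with tempfile.TemporaryDirectory() as tmp:
    db = Path(tmp) / "EXAMPLE.sqlite"
    db.write_bytes(b"not a real database")
    copy = copy_aside(db, "current")
    copy_name = copy.name
    copy_ok = copy.read_bytes() == db.read_bytes() and db.exists()
    print(copy_name)
```

Putting a label such as `current` or `may31` in the name is one way to keep the several database versions in this thread apart.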

Perhaps best case would be to switch back to that particular job for daily backups

As I think about this more, I did update some settings on the new backup based on previous comments in this thread. Namely, I set the blocksize to 500 Kbyte, and the remote volume size to 200 Mbyte. With that in mind, it’s probably not worth trying to get back to using the original backup job going forward.

I’m unaware of any other tools, but I’m certainly willing to learn.

Tool options still look rather limited. Maybe you can use Cyberduck.

S3 compatible buckets get others, but your bucket might be too old.

Protecting those backups, and making them functional for restore purposes, is my primary goal at this point.

You could certainly do the least possible, just fix, test, and “retire” it.

The cost of switching to a new job for new backups is its space use.

Do I have that right?

That’s the idea. Technically the recreate problem is present, but the B2 backup of the database will save you from at least that problem. If Verify can be made happy, then you can test some other restores. Be sure to use no-local-blocks. A direct restore will probably fail (or be very slow), so you’re sort of relying on the B2 backup of DB…

If we try to fix the recreate slowness by deleting dindex files (step 1), there’s more of a chance of hurting your old backup even if careful… In theory, one approach is to copy old job destination to a new folder. Deleting dindex files in the copy is safe, as old destination still exists.

I think About → Show log → Live → Warning will name its extra files. Main popup might just give a count. Ones I think uploaded on June 1:

duplicati-b6c5fe3944904450fb6fa1eb7ca13fd90.dblock.zip.aes

duplicati-b7e4c406c1063427b91aea4bcfdfab208.dblock.zip.aes

duplicati-bd9bc6057a26247629ddff7e52af3e477.dblock.zip.aes

duplicati-be87da23ff56e4ff082a2f04ee673b151.dblock.zip.aes

duplicati-bf18efeafa6504da3a839895061a98955.dblock.zip.aes

duplicati-i69aec3fa47e648e7982efacae6ebcb60.dindex.zip.aes

duplicati-i6cd5c5ee591b45abbd078daec9ad2b35.dindex.zip.aes

duplicati-i72d5fabae80347839f6fb35684c15374.dindex.zip.aes

duplicati-i85c1161be6994d2f829cfc73f5004852.dindex.zip.aes

duplicati-if0acff0eeeb14b2b93356678a946836e.dindex.zip.aes

If Cyberduck can sort the files by date, these should be easy to spot.
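The "move, don’t delete" idea can be sketched for a destination that is reachable as a local folder. That is an assumption: a B2 bucket would need a client such as Cyberduck instead. The `move_aside` helper and the `moved-aside` subfolder name are hypothetical; the two file names in the demo are taken from the list above.

```python
import shutil
import tempfile
from pathlib import Path

def move_aside(dest_dir, names, subfolder="moved-aside"):
    """Move the named files into a subfolder instead of deleting them."""
    if subfolder.startswith("duplicati-"):
        raise ValueError("subfolder name should not look like a Duplicati file")
    dest = Path(dest_dir)
    target = dest / subfolder
    target.mkdir(exist_ok=True)
    moved, missing = [], []
    for name in names:
        src = dest / name
        if src.is_file():
            shutil.move(str(src), str(target / name))
            moved.append(name)
        else:
            missing.append(name)  # report rather than fail, so the list can be reviewed
    return moved, missing

# Demo in a temporary folder: one listed file is present, one is not.
with tempfile.TemporaryDirectory() as tmp:
    present = "duplicati-b6c5fe3944904450fb6fa1eb7ca13fd90.dblock.zip.aes"
    absent = "duplicati-i69aec3fa47e648e7982efacae6ebcb60.dindex.zip.aes"
    (Path(tmp) / present).write_bytes(b"placeholder")
    moved, missing = move_aside(tmp, [present, absent])
    still_in_root = (Path(tmp) / present).exists()
    now_in_subfolder = (Path(tmp) / "moved-aside" / present).is_file()
    print("moved:", moved, "missing:", missing)
```

Because nothing is deleted, backing out is just a matter of moving the files back.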

Be sure to use no-local-blocks. A direct restore will probably fail (or be very slow), so you’re sort of relying on the B2 backup of DB…

Can you expand on this point a bit more? Generally when I test restore, I pick a handful of files and restore them to a temporary folder, then verify that the files are functional. Should I be testing this in a different manner?

Can you expand on this point a bit more?

Previously expanded:

One interesting test would be to try to restore the version of the log file that you think you put back, as possibly some bit of it is missing. If you test that, use no-local-blocks to prevent its use of local blocks:

Duplicati will attempt to use data from source files to minimize the amount of downloaded data. Use this option to skip this optimization and only use remote data.

so if you allow Duplicati to use blocks from source to put in the restore, it’s a much worse restore test.

In terms of quantity, more is better, but also more time. There’s at least a file hash check after restore, meaning if it restores without problems, it should be the exact same file that was originally backed up.
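If the original file still exists unchanged, an independent comparison after a test restore is also easy to do by hand. The sketch below assumes SHA-256 encoded as Base64, which is what the 44-character hashes elsewhere in this thread look like; it is not taken from Duplicati’s code, and `file_hash_b64` and `same_content` are hypothetical helper names.

```python
import base64
import hashlib
import tempfile
from pathlib import Path

def file_hash_b64(path, chunk_size=1 << 20):
    """SHA-256 of a file, Base64-encoded (assumed hash format, see note above)."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in chunks so large files do not have to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return base64.b64encode(digest.digest()).decode("ascii")

def same_content(original, restored):
    """True when both files hash to the same value."""
    return file_hash_b64(original) == file_hash_b64(restored)

# Demo with throwaway files standing in for a source file and two restores.
with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "source.log"
    good = Path(tmp) / "restored-good.log"
    bad = Path(tmp) / "restored-bad.log"
    source.write_bytes(b"line 1\nline 2\n")
    good.write_bytes(b"line 1\nline 2\n")
    bad.write_bytes(b"line 1\n")  # simulates a restore with a missing piece
    sample_hash = file_hash_b64(source)
    good_matches = same_content(source, good)
    bad_matches = same_content(source, bad)
    print(sample_hash, good_matches, bad_matches)
```

This only helps for files whose source has not changed since the backed-up version; for anything else, the hash check Duplicati does after restore is the one to rely on.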

Ahh, ok, I understand now. Sorry for my slowness… there’s a LOT of information in this thread to digest, and I’m doing my best to make sense of it all!

Thank you kindly (everyone) for your patience in walking me through this whole ordeal. I really appreciate it!

I’ll report back after restoring the 5/31 database and doing a “Verify files”

The result of “Verify files” is:

2023-06-08 07:09:29 -07 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (11.31 KB)

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-b1652f5b5236143779399e4cfe80ef190.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-b349e00c8fe9a4ea8b7636519fd31ca57.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-b3cf40c45c1b24e88a93f4f9a911ad71d.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-b46ce0c4f3a5d4615a2599f3f9ede63f7.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-b59384f0c4cd14d568b61e9a2673860e1.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-b6c5fe3944904450fb6fa1eb7ca13fd90.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-b7cba89f7d512448289551ef2cbefc199.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-b7e4c406c1063427b91aea4bcfdfab208.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-b803ff07f7abf4ed0951516197f484cd7.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-b91ec91fef36244ec8c9a3a5f7d3b31b0.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-b9a247030289e4c08815fd8e9ab1b3e75.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-bd9bc6057a26247629ddff7e52af3e477.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-be87da23ff56e4ff082a2f04ee673b151.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-bee5a33b259bb4ef7aaa80f081c36763c.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-bf18efeafa6504da3a839895061a98955.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-bfbaafe077ba646ee8d6723894403e581.dblock.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-i13fc68c45edc47d4bfc3cd026cd409a4.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-i17554fa916114466a1825e9a618a538d.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-i43ede28e400345efb3580225050ce719.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-i51787c5fe0c34d1cac8012931e7bee40.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-i69aec3fa47e648e7982efacae6ebcb60.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-i6cd5c5ee591b45abbd078daec9ad2b35.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-i72d5fabae80347839f6fb35684c15374.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-i7344d88a889a4b62b753a76eb463e67c.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-i85c1161be6994d2f829cfc73f5004852.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-i878fb27c10a64c1f93bb307ee5905c63.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-i8c9c7830c5df4b4abf05b54e4f7c0660.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-iaca4ed1e03324b8da3e4e08281803c65.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-ica8a4f8dcae240c5920da7a2b1e3b11c.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-if03bf0f179ac47bbbf629e36e5389f54.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-if0acff0eeeb14b2b93356678a946836e.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-if7a52d99f0eb4c3196096c2f7fd0bee5.dindex.zip.aes

2023-06-08 07:09:31 -07 - [Error-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraRemoteFiles]: Found 32 remote files that are not recorded in local storage, please run repair
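A log like this is easier to compare against the expected June 1 list when the names are pulled out mechanically. Here is a minimal sketch that extracts the `Extra unknown file` names, counts them by kind, and reports any that are not on an expected list. The three sample lines and the two expected names are copied from this thread; the function names are hypothetical. In the full log above the same tally comes to 16 dblock and 16 dindex files, matching the 32 in the error line.

```python
import re
from collections import Counter

EXTRA_RE = re.compile(r"ExtraUnknownFile\]: Extra unknown file: (duplicati-\S+)")
KIND_RE = re.compile(r"\.(dblock|dindex|dlist)\.")

def extra_files(log_text):
    """Return the file names from 'Extra unknown file' warnings, in log order."""
    return EXTRA_RE.findall(log_text)

def count_by_kind(names):
    """Tally names by volume kind taken from the file name."""
    counts = Counter()
    for name in names:
        match = KIND_RE.search(name)
        counts[match.group(1) if match else "other"] += 1
    return counts

# Three lines copied from the log above, as sample input.
sample_log = """\
2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-b1652f5b5236143779399e4cfe80ef190.dblock.zip.aes
2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-b6c5fe3944904450fb6fa1eb7ca13fd90.dblock.zip.aes
2023-06-08 07:09:31 -07 - [Warning-Duplicati.Library.Main.Operation.FilelistProcessor-ExtraUnknownFile]: Extra unknown file: duplicati-i69aec3fa47e648e7982efacae6ebcb60.dindex.zip.aes
"""

# Two of the ten names guessed earlier in the thread as June 1 uploads.
expected = {
    "duplicati-b6c5fe3944904450fb6fa1eb7ca13fd90.dblock.zip.aes",
    "duplicati-i69aec3fa47e648e7982efacae6ebcb60.dindex.zip.aes",
}

names = extra_files(sample_log)
kinds = count_by_kind(names)
not_expected = sorted(set(names) - expected)
print(dict(kinds))
print(not_expected)
```

Names that show up in `not_expected` are the ones worth a closer look before anything is moved or deleted.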

Should I go ahead and run repair as it suggests? Or do I need to manually delete those remote files?

EDIT: I was pretty sure that the right move was to delete the files in the remote store, so I first copied the 32 files into a folder (using Cyberduck), and then deleted the files from the bucket root. I’m now running Verify Files again.

That’s probably right. Part of my confusion was not noticing that you ran backup twice on June 1. There seems to be a scheduled daily backup at 5 AM local, but June 1 got another run at 6:19…

Regardless, between saving the destination files and having backup of the DB, anything heading in the wrong direction should be possible to back out. With some luck, Verify just run will end well.