Should I delete my old backup and just start fresh?

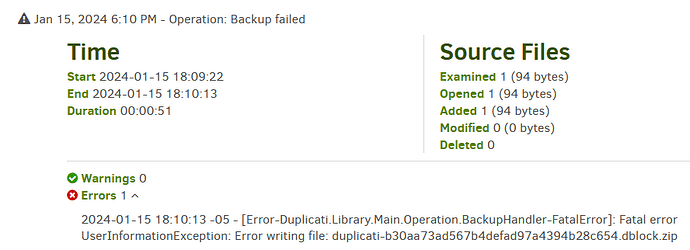

Here’s the error log:

{

“DeletedFiles”: 627325,

“DeletedFolders”: 918,

“ModifiedFiles”: 1,

“ExaminedFiles”: 312793,

“OpenedFiles”: 309649,

“AddedFiles”: 78145,

“SizeOfModifiedFiles”: 0,

“SizeOfAddedFiles”: 181822540622,

“SizeOfExaminedFiles”: 891916544419,

“SizeOfOpenedFiles”: 369995431801,

“NotProcessedFiles”: 0,

“AddedFolders”: 15030,

“TooLargeFiles”: 0,

“FilesWithError”: 0,

“ModifiedFolders”: 0,

“ModifiedSymlinks”: 0,

“AddedSymlinks”: 0,

“DeletedSymlinks”: 154,

“PartialBackup”: false,

“Dryrun”: false,

“MainOperation”: “Backup”,

“CompactResults”: null,

“VacuumResults”: null,

“DeleteResults”: null,

“RepairResults”: null,

“TestResults”: null,

“ParsedResult”: “Fatal”,

“Interrupted”: false,

“Version”: “2.0.7.100 (2.0.7.100_canary_2023-12-27)”,

“EndTime”: “2024-01-11T18:03:05.120397Z”,

“BeginTime”: “2024-01-11T15:58:35.831325Z”,

“Duration”: “02:04:29.2890720”,

“MessagesActualLength”: 109,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 1,

“Messages”: [

“2024-01-11 10:58:35 -05 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started”,

“2024-01-11 10:59:34 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()”,

“2024-01-11 11:01:08 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (36.54 KB)”,

“2024-01-11 11:01:09 -05 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Temporary: duplicati-20240109T150000Z.dlist.zip.aes”,

“2024-01-11 13:00:44 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b01aac2432baa4a11ac59942636dcf1f2.dblock.zip.aes (49.91 MB)”,

“2024-01-11 13:00:44 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b87cba52e0dc442a4918e03fd2209e10f.dblock.zip.aes (49.94 MB)”,

“2024-01-11 13:00:44 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b2012900345134317ba0feeea274c30ee.dblock.zip.aes (49.92 MB)”,

“2024-01-11 13:00:45 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b45b98b2f71ea40479b17ca6bd1e3bec4.dblock.zip.aes (49.93 MB)”,

“2024-01-11 13:01:05 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Retrying: duplicati-b45b98b2f71ea40479b17ca6bd1e3bec4.dblock.zip.aes (49.93 MB)”,

“2024-01-11 13:01:05 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Retrying: duplicati-b01aac2432baa4a11ac59942636dcf1f2.dblock.zip.aes (49.91 MB)”,

“2024-01-11 13:01:05 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Retrying: duplicati-b87cba52e0dc442a4918e03fd2209e10f.dblock.zip.aes (49.94 MB)”,

“2024-01-11 13:01:05 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Retrying: duplicati-b2012900345134317ba0feeea274c30ee.dblock.zip.aes (49.92 MB)”,

“2024-01-11 13:01:15 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Rename: duplicati-b01aac2432baa4a11ac59942636dcf1f2.dblock.zip.aes (49.91 MB)”,

“2024-01-11 13:01:15 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Rename: duplicati-b87cba52e0dc442a4918e03fd2209e10f.dblock.zip.aes (49.94 MB)”,

“2024-01-11 13:01:15 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Rename: duplicati-b45b98b2f71ea40479b17ca6bd1e3bec4.dblock.zip.aes (49.93 MB)”,

“2024-01-11 13:01:15 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Rename: duplicati-b460a46dd4a7b45ae87e163422791ec75.dblock.zip.aes (49.91 MB)”,

“2024-01-11 13:01:15 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Rename: duplicati-b2012900345134317ba0feeea274c30ee.dblock.zip.aes (49.92 MB)”,

“2024-01-11 13:01:15 -05 - [Information-Duplicati.Library.Main.Operation.Backup.BackendUploader-RenameRemoteTargetFile]: Renaming "duplicati-b01aac2432baa4a11ac59942636dcf1f2.dblock.zip.aes" to "duplicati-b460a46dd4a7b45ae87e163422791ec75.dblock.zip.aes"”,

“2024-01-11 13:01:15 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Rename: duplicati-b2f150dd2a5ad4441b3100cbb7b6886d0.dblock.zip.aes (49.94 MB)”,

“2024-01-11 13:01:15 -05 - [Information-Duplicati.Library.Main.Operation.Backup.BackendUploader-RenameRemoteTargetFile]: Renaming "duplicati-b87cba52e0dc442a4918e03fd2209e10f.dblock.zip.aes" to "duplicati-b2f150dd2a5ad4441b3100cbb7b6886d0.dblock.zip.aes"”

],

“Warnings”: [],

“Errors”: [

“2024-01-11 13:03:05 -05 - [Error-Duplicati.Library.Main.Operation.BackupHandler-FatalError]: Fatal error\nUserInformationException: Error writing file: duplicati-b3cabb6eda7e04e1b8fcc68ee5cdfad27.dblock.zip.aes”

],

“BackendStatistics”: {

“RemoteCalls”: 25,

“BytesUploaded”: 0,

“BytesDownloaded”: 0,

“FilesUploaded”: 0,

“FilesDownloaded”: 0,

“FilesDeleted”: 0,

“FoldersCreated”: 0,

“RetryAttempts”: 20,

“UnknownFileSize”: 0,

“UnknownFileCount”: 0,

“KnownFileCount”: 37412,

“KnownFileSize”: 978449070468,

“LastBackupDate”: “2024-01-03T10:14:20-05:00”,

“BackupListCount”: 13,

“TotalQuotaSpace”: 0,

“FreeQuotaSpace”: 0,

“AssignedQuotaSpace”: -1,

“ReportedQuotaError”: false,

“ReportedQuotaWarning”: false,

“MainOperation”: “Backup”,

“ParsedResult”: “Success”,

“Interrupted”: false,

“Version”: “2.0.7.100 (2.0.7.100_canary_2023-12-27)”,

“EndTime”: “0001-01-01T00:00:00”,

“BeginTime”: “2024-01-11T15:58:35.831634Z”,

“Duration”: “00:00:00”,

“MessagesActualLength”: 0,

“WarningsActualLength”: 0,

“ErrorsActualLength”: 0,

“Messages”: null,

“Warnings”: null,

“Errors”: null

}

}