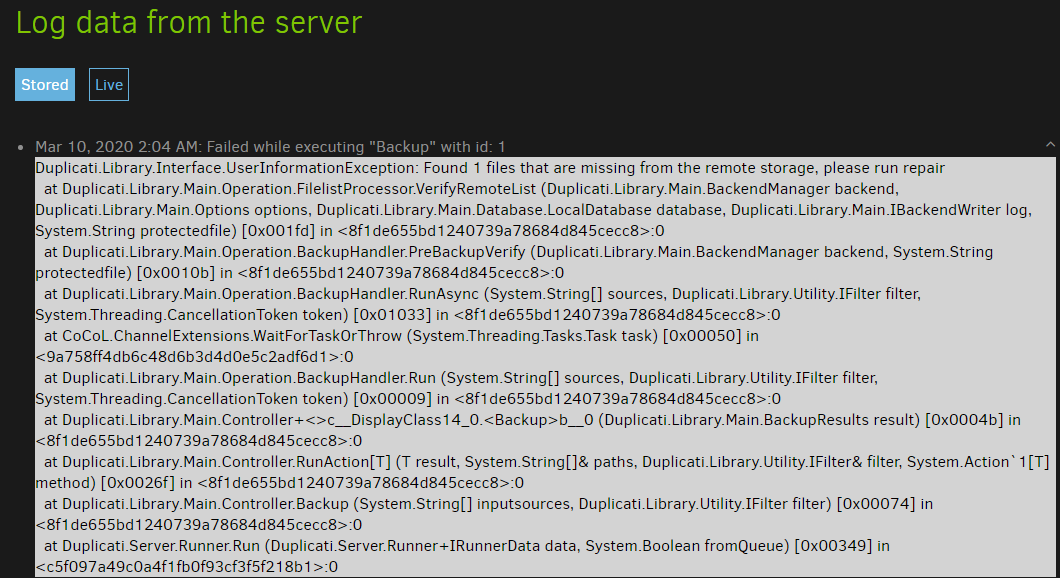

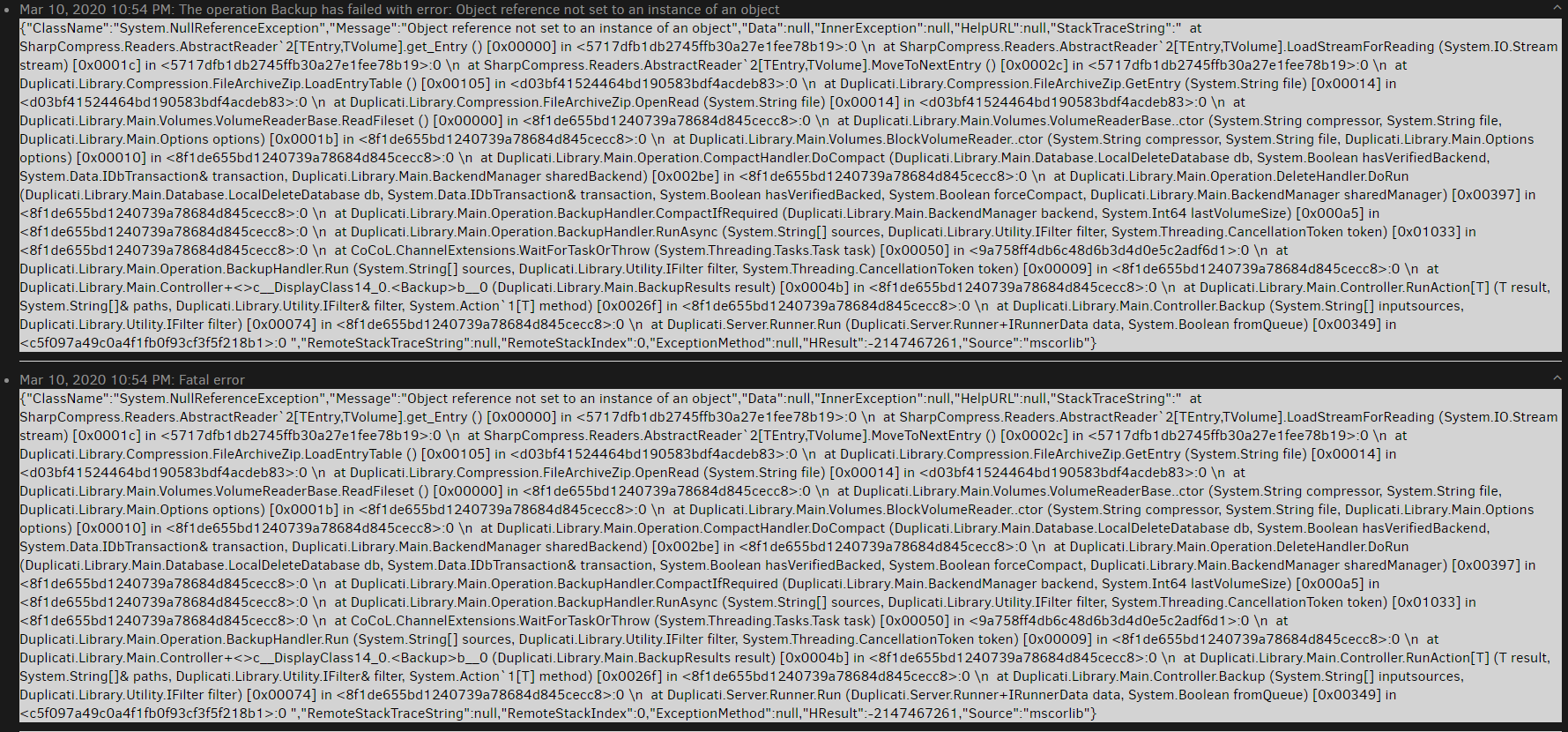

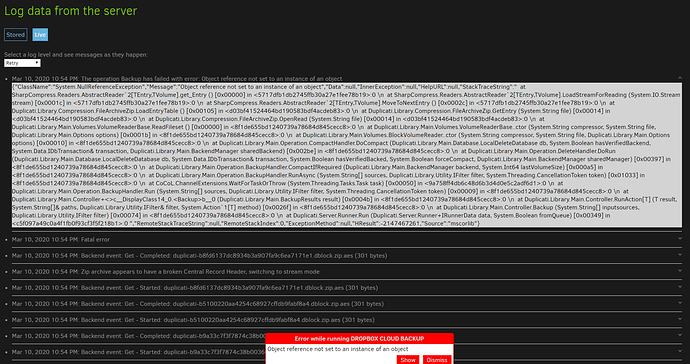

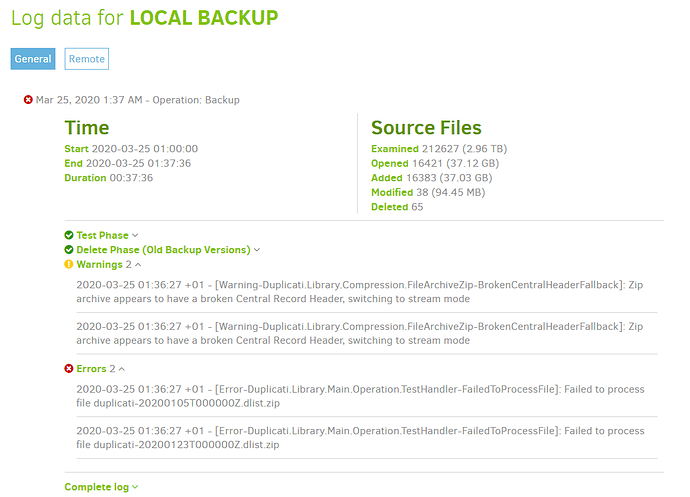

There seems to be some sort of file corruption problem, but the one-line summaries in the log don’t give details.

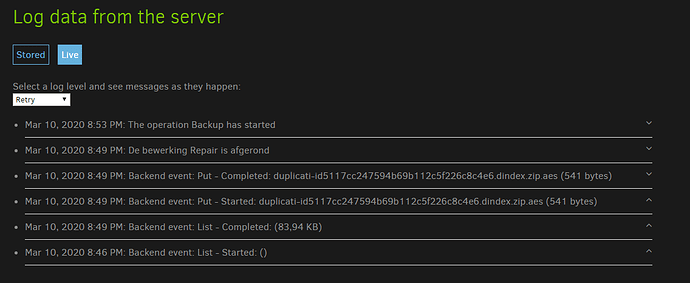

About → Show log → Live → Warning should pick up those lines, then I think clicking a line will expand it.

For the Errors, it at least names the file, so you can test, maybe with unzip -t for an integrity opinion.

I’m pretty sure FailedToProcessFile has some details in it, unlike the below which may be nearby…

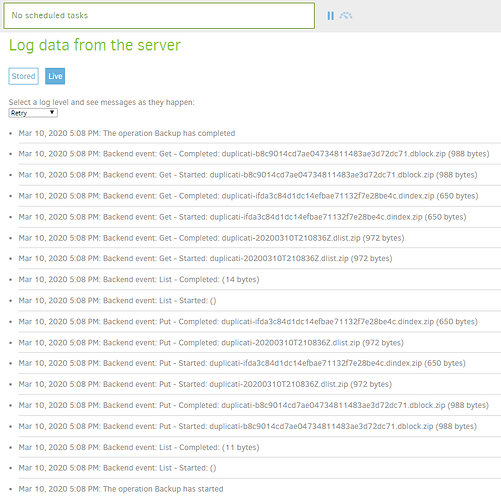

For Warnings, Cant complete backup or database compress is an example of what you might observe, where it looks like a file got through Get fine (and its name is visible), but turned out to be a broken zip.

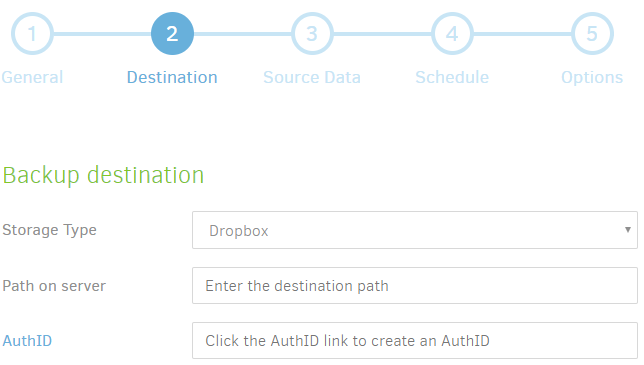

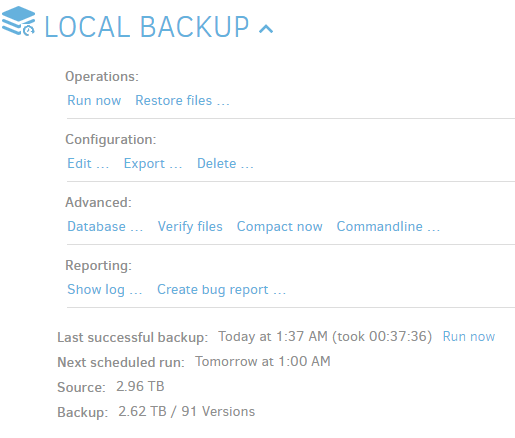

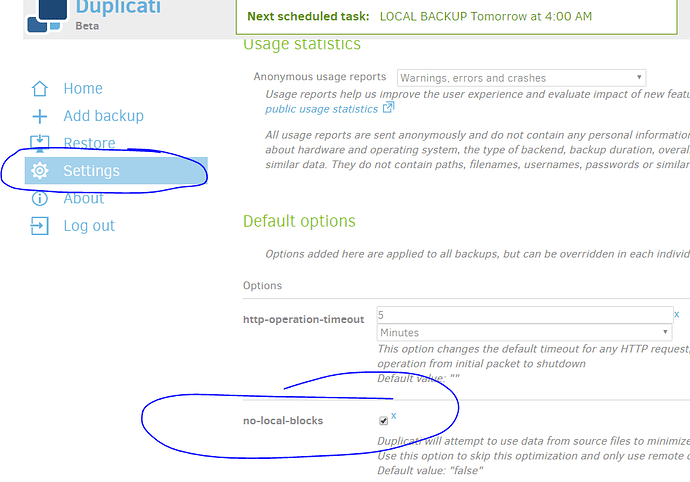

Is this even with --no-local-blocks added and checkmarked in Advanced options of Options screen? Remember, you’re not doing a restore from the backup itself (which I assume you want) without that… Explained further below, you also might not be using the files with the problem (some names still TBD).

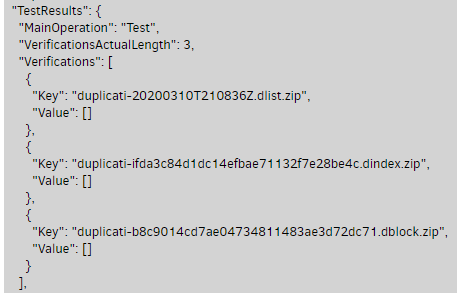

TestHandler samples are chosen somewhat randomly (but balanced), so might affect another Restore.

See above for more information on Duplicati testing. Good news here is that zip files are very easy to integrity test (doesn’t mean they’re just right, but Central Record Header errors will probably be seen).

Using the unzip command with either a shell wildcard (watch out for command length limits), a loop, or xargs could be a do-it-yourself integrity test on all the SATA files to see if a pattern of problems exists.
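If unzip isn’t handy, here is a rough Python sketch of the same do-it-yourself check. It assumes the files are plain (unencrypted) .zip files sitting directly in one folder. The names check_zip and scan_folder are made up for this example and are not part of Duplicati. It relies on zipfile’s testzip, which reads every member and checks its CRC, so as with unzip -t, a pass doesn’t prove the contents are what Duplicati expects.

```python
import zipfile
import zlib
from pathlib import Path


def check_zip(path):
    """Return None if the archive reads cleanly, else a short problem description."""
    try:
        with zipfile.ZipFile(path) as zf:
            # testzip() reads every member and returns the first name with a bad CRC
            bad_member = zf.testzip()
    except (zipfile.BadZipFile, zlib.error, EOFError, OSError) as exc:
        return f"cannot open or read: {exc}"
    if bad_member is not None:
        return f"CRC failure in member {bad_member}"
    return None


def scan_folder(folder):
    """Test every *.zip directly inside folder; return {filename: problem} for bad ones."""
    problems = {}
    for path in sorted(Path(folder).glob("*.zip")):
        result = check_zip(path)
        if result is not None:
            problems[path.name] = result
    return problems
```

Calling scan_folder on the destination folder and printing the returned dictionary should show whether the bad files cluster by type (dlist, dblock, dindex) or by date.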

More thorough Duplicati-style testing would use its own test command with the all option for all files.

Currently, the extent of the file issue is not known, but you’ve got at least a couple of troublesome files.

Bad dlist files don’t matter as long as the Database is intact. If you ever Recreate, they will be needed. What generated two Warnings isn’t seen, but I see the time is the same as the dlists, so maybe those.

Given an intact DB, you can rebuild bad dlist files by deleting them, then running a Database Repair. Your DB is probably intact enough to recreate those two files if the Restore dropdown looks OK for those dates. Note that the time on the dlist filename is UTC, but the time in your Restore dropdown would be local.
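To line up a dlist filename with an entry in the Restore dropdown, the UTC-to-local conversion can be sketched in Python. This assumes the name has the shape prefix-YYYYMMDDTHHMMSSZ.dlist.zip, and the function names are made up for illustration.

```python
import re
from datetime import datetime, timezone

# Assumed name shape: <prefix>-YYYYMMDDTHHMMSSZ.dlist.<extension>
DLIST_TIME = re.compile(r"-(\d{8}T\d{6})Z\.dlist\.")


def dlist_time_utc(filename):
    """Parse the timestamp embedded in a dlist filename as an aware UTC datetime."""
    match = DLIST_TIME.search(filename)
    if match is None:
        raise ValueError(f"no dlist timestamp found in {filename!r}")
    parsed = datetime.strptime(match.group(1), "%Y%m%dT%H%M%S")
    return parsed.replace(tzinfo=timezone.utc)


def dlist_time_local(filename):
    """Same instant, expressed in this machine's local time zone."""
    return dlist_time_utc(filename).astimezone()
```

For a hypothetical name such as duplicati-20200102T030405Z.dlist.zip, dlist_time_local gives the time to look for in the dropdown, provided the machine running the script uses the same time zone as the one showing the dropdown.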

Instead of actually deleting files, it would be good to rename them with a prefix to hide them, or move them into a different directory. This will allow examination if needed, or a put-back if that seems better.
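A file manager or mv works fine for this, but if there are many files, the move-aside step could be scripted along these lines. This is only a sketch: quarantine is a made-up helper name, the quarantine folder is wherever you choose, and it refuses to overwrite so an earlier copy is never lost.

```python
import shutil
from pathlib import Path


def quarantine(file_path, quarantine_dir):
    """Move a suspect file into quarantine_dir, keeping its name for a later put-back."""
    src = Path(file_path)
    dest_dir = Path(quarantine_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)
    dest = dest_dir / src.name
    if dest.exists():
        # Never overwrite something that was already set aside
        raise FileExistsError(f"{dest} already exists")
    shutil.move(str(src), str(dest))
    return dest
```

Putting a file back is the same move in the other direction, which is the advantage over deleting.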