Hello!

My current problem

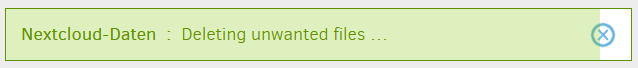

After the task finished, it remains a very long time in the step “Deleting unwanted files”

First I thought it hangs, but even after seval hours there is ressource usage like CPU on the system. It also seems to delete files on OneDrive on every run:

The marked one was just created a few seconds ago, cause I was able to watch in realtime what seems to happen: Hours after staying in “Deleting unwanted files”, I got a popup that the connection was gone and the UI trys to reconnect in I guess 15 seconds (Screenshot removed for post limit)

I wondered what’s the reason for that. I thought it was a server-side restart, but the container is running since three days:

# docker-ps | grep duplicati

duplicati_duplicati_1 Up 3 days 3 days ago linuxserver/duplicati:latest

After the waiting time, it looks like the job is started again: I see_Backup will be started_ and the Nextcloud data taskname in the header (screenshot removed for post limit)

Then it verifies the backend data, processes the files on the server and see that there are no (or just a few small) changes, so this process is finished after just a few minutes. But now it stucks again in Deleting unwanted files.

This seems to happen in an endless loop, I looked from time to time on that backup and it’s the second time now I could watch the described disconnect-process with restart in realtime. According to the OneDrive recycle bin screenshot above, just today since 0AM there were 23 files deleted. Since everyone except the first one at 02:01 AM are 12,9MB in size and have a newer timestamp in the filename, I guess its the same file which he first uploads and later deletes.

I noticed that the Duplicati container logs shows a exception very often:

[ERROR] FATAL UNHANDLED EXCEPTION: System.ArgumentNullException: Value cannot be null.

Parameter name: path

at System.IO.FileStream..ctor (System.String path, System.IO.FileMode mode, System.IO.FileAccess access, System.IO.FileShare share, System.Int32 bufferSize, System.Boolean anonymous, System.IO.FileOptions options) [0x00014] in <254335e8c4aa42e3923a8ba0d5ce8650>:0

at System.IO.FileStream..ctor (System.String path, System.IO.FileMode mode, System.IO.FileAccess access, System.IO.FileShare share) [0x00000] in <254335e8c4aa42e3923a8ba0d5ce8650>:0

at (wrapper remoting-invoke-with-check) System.IO.FileStream..ctor(string,System.IO.FileMode,System.IO.FileAccess,System.IO.FileShare)

at Duplicati.Library.Main.Volumes.VolumeReaderBase.LoadCompressor (System.String compressor, System.String file, Duplicati.Library.Main.Options options, System.IO.Stream& stream) [0x00000] in <8f1de655bd1240739a78684d845cecc8>:0

at Duplicati.Library.Main.Volumes.VolumeReaderBase..ctor (System.String compressor, System.String file, Duplicati.Library.Main.Options options) [0x00007] in <8f1de655bd1240739a78684d845cecc8>:0

at Duplicati.Library.Main.Volumes.IndexVolumeReader..ctor (System.String compressor, System.String file, Duplicati.Library.Main.Options options, System.Int64 hashsize) [0x00000] in <8f1de655bd1240739a78684d845cecc8>:0

at Duplicati.Library.Main.BackendManager.RenameFileAfterError (Duplicati.Library.Main.BackendManager+FileEntryItem item) [0x0018a] in <8f1de655bd1240739a78684d845cecc8>:0

at Duplicati.Library.Main.BackendManager.ThreadRun () [0x002de] in <8f1de655bd1240739a78684d845cecc8>:0

at System.Threading.ThreadHelper.ThreadStart_Context (System.Object state) [0x00014] in <254335e8c4aa42e3923a8ba0d5ce8650>:0

at System.Threading.ExecutionContext.RunInternal (System.Threading.ExecutionContext executionContext, System.Threading.ContextCallback callback, System.Object state, System.Boolean preserveSyncCtx) [0x00071] in <254335e8c4aa42e3923a8ba0d5ce8650>:0

at System.Threading.ExecutionContext.Run (System.Threading.ExecutionContext executionContext, System.Threading.ContextCallback callback, System.Object state, System.Boolean preserveSyncCtx) [0x00000] in <254335e8c4aa42e3923a8ba0d5ce8650>:0

at System.Threading.ExecutionContext.Run (System.Threading.ExecutionContext executionContext, System.Threading.ContextCallback callback, System.Object state) [0x0002b] in <254335e8c4aa42e3923a8ba0d5ce8650>:0

at System.Threading.ThreadHelper.ThreadStart () [0x00008] in <254335e8c4aa42e3923a8ba0d5ce8650>:0

It was much more often in the logs, I just put out a few for better readability and to fit the thread limit here. It seems to be always the same, and it appears after the crash. So I guess the following happens:

During cleanup, something went wrong which cause the System.ArgumentNullException. Since it’s unhandled, the application inside the Docker container is re-started quickly. It seems so quick that Docker doesn’t notice it and things the container is still running. This causes the connection abort in the UI. Since Duplicati notices that it was exited before the Nextcloud data task was finished, it re-starts the task and everything starts from the beginning.

My setup

I have a selfhosted Nextcloud instance with currently 632GB of data in the filesystem. My account is the biggest with 423G, the other space is spread across some other users (family/friends). It’s running on Docker and I’d like to use Duplicat to backup the data directory securely encrypted to OneDrive personal. I also have some other things to backup in several tasks, from 8GB to 40GB. These smaller ones works well, but the Nextcloud data task never worked without issues yet.

docker-compose.yml from Duplicati

version: '2'

services:

duplicati:

image: linuxserver/duplicati

mem_limit: 4G

volumes:

- duplicati-config:/config

- /storage/duplicati-temp:/backups

- /storage/duplicati-temp/tmp:/tmp

- /storage:/source:ro

- /etc/docker/container:/src/docker-container:ro

- /vms/docker-lib/volumes/:/src/docker-volumes:ro

networks:

- my-net

environment:

#- PUID=1000

#- PGID=1000

- TZ=Europe/Berlin

- PUID=0

- PGID=0

labels:

- "traefik.port=8200"

- "traefik.frontend.rule=Host:my.domain"

- "traefik.frontend.entryPoints=http"

networks:

my-net:

external: true

volumes:

duplicati-config:

I made sure to pull the latest version avaliable.

Exported job config

{

"CreatedByVersion": "2.0.5.1",

"Schedule": {

"ID": 3,

"Tags": [

"ID=3"

],

"Time": "2021-03-02T03:30:00Z",

"Repeat": "1D",

"LastRun": "2021-03-01T10:50:20Z",

"Rule": "AllowedWeekDays=Monday,Tuesday,Wednesday,Thursday,Friday,Saturday,Sunday",

"AllowedDays": [

"mon",

"tue",

"wed",

"thu",

"fri",

"sat",

"sun"

]

},

"Backup": {

"ID": "3",

"Name": "Nextcloud-Daten",

"Description": "",

"Tags": [],

"TargetURL": "onedrivev2://backups/nas-duplicati/nextcloud-data/",

"DBPath": "/config/XLGOTEDXNE.sqlite",

"Sources": [

"/source/nextcloud_data-html/"

],

"Settings": [

{

"Filter": "",

"Name": "encryption-module",

"Value": "aes",

"Argument": null

},

{

"Filter": "",

"Name": "compression-module",

"Value": "zip",

"Argument": null

},

{

"Filter": "",

"Name": "dblock-size",

"Value": "2GB",

"Argument": null

},

{

"Filter": "",

"Name": "retention-policy",

"Value": "1W:1D,4W:1W,12M:1M",

"Argument": null

}

],

"Filters": [],

"Metadata": {

"LastErrorDate": "20210301T105043Z",

"LastErrorMessage": "Found 1 files that are missing from the remote storage, please run repair",

"LastBackupDate": "20210301T105130Z",

"BackupListCount": "4",

"TotalQuotaSpace": "1104880336896",

"FreeQuotaSpace": "119831723888",

"AssignedQuotaSpace": "-1",

"TargetFilesSize": "874866981263",

"TargetFilesCount": "891",

"TargetSizeString": "814,78 GB",

"SourceFilesSize": "672284328220",

"SourceFilesCount": "138763",

"SourceSizeString": "626,11 GB",

"LastBackupStarted": "20210301T105130Z",

"LastBackupFinished": "20210301T110756Z",

"LastBackupDuration": "00:16:26.0346620",

"LastCompactDuration": "00:00:53.9963860",

"LastCompactStarted": "20210101T043856Z",

"LastCompactFinished": "20210101T043950Z"

},

"IsTemporary": false

},

"DisplayNames": {

"/source/nextcloud_data-html/": "nextcloud_data-html"

}

}

As you can see, I changed the blocksize to 2GB. I thought this make sense, because I have a large amount of data and a relatively fast upload speed (40Mbit/s). And I guess that too much files could potentially cause problems. I’ll now try to reduce it to 1GB, which is recommended in Choosing Sizes in Duplicati • Duplicati

Another noticeble thing is, that my ISP do a reconnect every 24 hours. By the large amount of data, I think that Duplicati will be interupted at least one time during a full backup. I don’t know if that’s a problem, I’d expect that Duplicati detect this since it’s not a uncommon scanario, and continue the download, or if this is not possible re-start the volume upload.

History

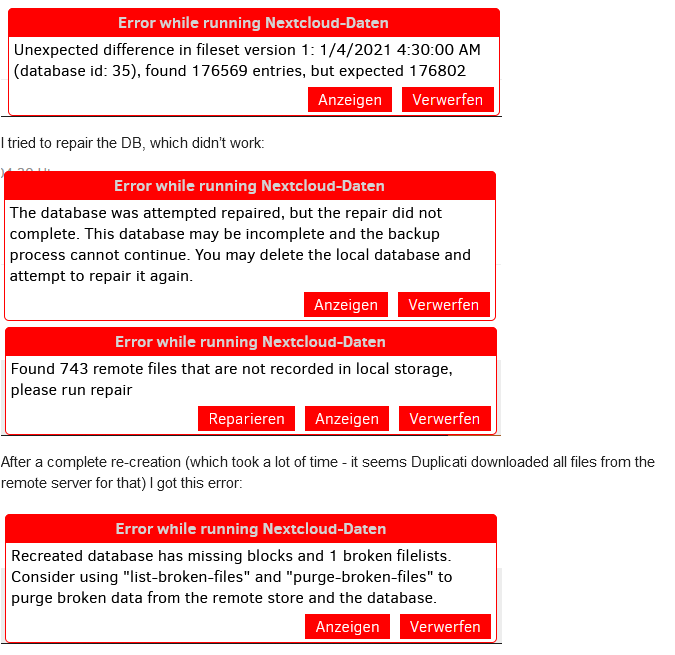

My first problem after the first run was, that there seems to be some inconsistences in the avaliabla data vs the expected:

Finally I purged the broken files and this leads me to the situation where I’m currently: The backup itself seems to work, but it takes long on “Deleting unwanted files” and then starts an endless loop.

Where is the problem?

I’ll now try to reduce the volume size to 1GB and maybe even to 512MB if 1GB doesn’t help, maybe many Duplicati users have much smaller sizes and I ran into issues by choosing larger ones. Maybe I also have to delete the existing server backup and re-upload everything.

I made this thread for ideas how to fix it, and on the other side to get feedback if I’m doing something wrong. Since I had a lot of problems with the Nextcloud data backup task, I think more and more that I’m doing something wrong.

Or is Duplicati not designed for such a large amount of data?

Is increasing the block size to better use my connection speed maybe not as good as it sounds from my understanding?