I have just under 2.5 TB of data on my computer that I’m backing up to Backblaze B2 with Duplicati. I started the backup, and it says I have 55 TB to go?! The weirdest thing is that I tried IDrive before, and it said the same thing! Where is Duplicati getting this number from, and will it actually upload 55 TB of data? I definitely won’t be able to pay for that. The file count is accurate, but the size is way off.


(Screenshot from Duplicati)

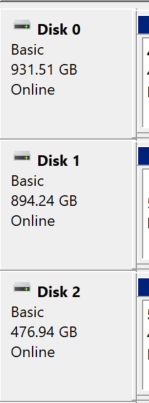

(Screenshot from Disk Management of all my drives)

Any chance you have sparse files? OneDrive creates those. Also, are deduplication and compression enabled? It would take roughly 20x dedup (55 TB vs. 2.5 TB) to explain the gap, which would be a killer ratio to get. Do you have any SMB shares mounted under NTFS?

So OneDrive is off, but there are files in the OneDrive folder, including many shortcuts. I excluded all OneDrive folders and any files with “onedrive” in the name. Would the sparse files be in those folders, or elsewhere?

I believe dedup and compression are enabled by default, no? I haven’t turned them off, that’s for sure.

And I don’t have any SMB shares.

Is there a way to rescan my computer to see whether the upload size changes?

I suppose you could use TreeSize or GetFoldersize to analyse disk usage. Pay attention to file size vs. size on disk.
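If you'd rather script the comparison than click through a GUI, here is a minimal sketch, assuming Python 3 is installed. It walks a folder and flags files whose logical size (what a backup tool counts) is at least `ratio` times larger than the space actually allocated on disk. On Windows it asks `GetCompressedFileSizeW` for the on-disk size; elsewhere it uses `st_blocks`. The function names and the `ratio` / `min_logical` thresholds are my own illustrative choices, not anything from Duplicati.

```python
import os
import sys


def size_on_disk(path: str) -> int:
    """Bytes actually allocated for a file (the "Size on disk" idea)."""
    if sys.platform == "win32":
        # Windows: GetCompressedFileSizeW reports the on-disk size of
        # NTFS-compressed and sparse files. Errors are not checked here.
        import ctypes
        from ctypes import wintypes

        func = ctypes.windll.kernel32.GetCompressedFileSizeW
        func.argtypes = [wintypes.LPCWSTR, ctypes.POINTER(wintypes.DWORD)]
        func.restype = wintypes.DWORD
        high = wintypes.DWORD(0)
        low = func(path, ctypes.byref(high))
        return (high.value << 32) | low
    # POSIX: st_blocks counts 512-byte units that are really allocated.
    return os.stat(path, follow_symlinks=False).st_blocks * 512


def find_inflated(root: str, ratio: int = 100, min_logical: int = 1 << 20):
    """List (path, logical, on_disk) for files with logical >= ratio x on_disk."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                logical = os.stat(path, follow_symlinks=False).st_size
                on_disk = size_on_disk(path)
            except OSError:
                continue  # unreadable file: skip it
            if logical >= min_logical and on_disk * ratio <= logical:
                hits.append((path, logical, on_disk))
    hits.sort(key=lambda h: h[1], reverse=True)  # biggest offenders first
    return hits


if __name__ == "__main__":
    target = sys.argv[1] if len(sys.argv) > 1 else "."
    for path, logical, on_disk in find_inflated(target):
        print(f"{logical / 1e9:10.2f} GB logical  {on_disk / 1e3:10.1f} KB on disk  {path}")
```

Run it against a drive or folder (for example `python find_inflated.py G:\`). Anything it lists is a file that looks huge to a backup tool but barely exists on disk.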

Recently, one of my ZFS datasets was using three times the space of its files, and I confirmed it with multiple tools, which was driving me nuts. I was on the verge of buying more drives before I realised I had set `copies=3` on that dataset.

If deduplication is in use, you should see file sizes greater than size on disk.

Try creating one job per drive and see which one shows the size mismatch. That will help you narrow down the issue.
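A quicker way to do the same narrowing without setting up backup jobs is to total the logical file sizes per top-level folder and then drill into the largest one. This is a minimal sketch, assuming Python 3; it assumes (as this thread suggests) that the backup tool's estimate is based on logical file sizes, and `folder_total` / `sizes_by_child` are hypothetical helper names.

```python
import os
import sys


def folder_total(path: str) -> int:
    """Sum of logical file sizes (what a backup tool counts) under path."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(path):
        for name in filenames:
            try:
                total += os.stat(os.path.join(dirpath, name), follow_symlinks=False).st_size
            except OSError:
                pass  # skip files we cannot stat
    return total


def sizes_by_child(root: str):
    """Logical size of each immediate child of root, largest first."""
    totals = {}
    with os.scandir(root) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                totals[entry.name] = folder_total(entry.path)
            elif entry.is_file(follow_symlinks=False):
                totals[entry.name] = entry.stat(follow_symlinks=False).st_size
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    for name, size in sizes_by_child(root):
        print(f"{size / 1e12:8.3f} TB  {name}")
```

Start at a drive root, then rerun it on whichever entry tops the list; a folder whose total is wildly larger than what WinDirStat or TreeSize reports as used space is the one to look at.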

Narrowed it down to drive G:, which I use primarily for storing my phone’s data, as well as a backup of Google Photos. Both WinDirStat and TreeSize say the drive has 383 GB of data, which is accurate. When I try backing it up, though, Duplicati says it’s 54 TB. I’m going to try backing up individual folders within the drive to narrow it down further.

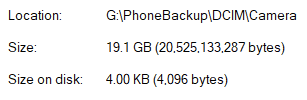

Found the problem! I have some files backed up from my phone that I compressed with File Explorer’s “compress contents…”. Each photo and video claims to be 20 GB but takes only 4 KB of disk space. ~2,500 “20 GB” files add up to 50 TB…
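The arithmetic checks out: the reported (logical) sizes account for the bulk of the backup estimate, while the same files occupy only about 10 MB on disk (using decimal units and the approximate counts above):

$$2{,}500 \times 20\ \text{GB} = 50{,}000\ \text{GB} = 50\ \text{TB}$$

$$2{,}500 \times 4\ \text{KB} = 10{,}000\ \text{KB} = 10\ \text{MB}$$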

In addition, almost none of them actually open: they throw a “Can’t read file header” error. I have no idea how that happened, but at least now I know what’s going on. I can exclude this broken folder from the backup until I figure out what happened and, hopefully, recover the files.

Thanks @RaptorG for your help and suggestions!

Hi,

It sounds like a calculation error. Check Duplicati’s FAQ or their support forum for troubleshooting tips. Also, review your Backblaze B2 settings to ensure everything’s correct. It’s unlikely you’ll actually upload 55 TB.