My C# reading skills are limited, but I was wondering about the end of that chunk. Why are there two awaits?

I keep a profiling log and other debug records in case something breaks. Here is a sample:

2023-03-02 07:38:13 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Backup.UploadRealFilelist-UpdateChangeStatistics]: UpdateChangeStatistics took 0:00:00:00.929

2023-03-02 07:38:13 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Backup.UploadRealFilelist-UploadNewFileset]: Starting - Uploading a new fileset

2023-03-02 07:38:13 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 21.31 MB in 00:00:05.6437223, 3.78 MB/s

2023-03-02 07:38:14 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b5e15e007b4d545bf8ea82654e73b6bba.dblock.zip.aes (21.31 MB)

2023-03-02 07:38:14 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitAfterUpload

2023-03-02 07:38:17 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: CommitAfterUpload took 0:00:00:02.807

2023-03-02 07:38:17 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteScalarInt64]: Starting - ExecuteScalarInt64: INSERT INTO "Remotevolume" ("OperationID", "Name", "Type", "State", "Size", "VerificationCount", "DeleteGraceTime") VALUES (825, "duplicati-i6d465ff4ae49429f9033225d96d0769f.dindex.zip.aes", "Index", "Temporary", -1, 0, 0); SELECT last_insert_rowid();

2023-03-02 07:38:17 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteScalarInt64]: ExecuteScalarInt64: INSERT INTO "Remotevolume" ("OperationID", "Name", "Type", "State", "Size", "VerificationCount", "DeleteGraceTime") VALUES (825, "duplicati-i6d465ff4ae49429f9033225d96d0769f.dindex.zip.aes", "Index", "Temporary", -1, 0, 0); SELECT last_insert_rowid(); took 0:00:00:00.000

2023-03-02 07:38:17 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitUpdateRemoteVolume

2023-03-02 07:38:17 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: CommitUpdateRemoteVolume took 0:00:00:00.303

2023-03-02 07:38:17 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i6d465ff4ae49429f9033225d96d0769f.dindex.zip.aes (228.78 KB)

2023-03-02 07:38:18 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 228.78 KB in 00:00:00.4808930, 475.74 KB/s

2023-03-02 07:38:18 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i6d465ff4ae49429f9033225d96d0769f.dindex.zip.aes (228.78 KB)

2023-03-02 07:38:18 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitAfterUpload

2023-03-02 07:38:18 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: CommitAfterUpload took 0:00:00:00.172

2023-03-02 07:38:18 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Backup.UploadRealFilelist-UploadNewFileset]: Uploading a new fileset took 0:00:00:05.343

2023-03-02 07:38:18 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitUpdateRemoteVolume

2023-03-02 07:38:19 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: CommitUpdateRemoteVolume took 0:00:00:00.142

2023-03-02 07:38:19 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-20230302T122030Z.dlist.zip.aes (915.34 KB)

2023-03-02 07:38:20 -05 - [Profiling-Duplicati.Library.Main.Operation.Backup.BackendUploader-UploadSpeed]: Uploaded 915.34 KB in 00:00:01.1729199, 780.39 KB/s

2023-03-02 07:38:20 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-20230302T122030Z.dlist.zip.aes (915.34 KB)

2023-03-02 07:38:20 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitAfterUpload

2023-03-02 07:38:20 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: CommitAfterUpload took 0:00:00:00.117

2023-03-02 07:38:20 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitAfterUpload

2023-03-02 07:38:20 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: CommitAfterUpload took 0:00:00:00.000

2023-03-02 07:38:20 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: Starting - ExecuteReader: SELECT "IsFullBackup", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC

2023-03-02 07:38:20 -05 - [Profiling-Timer.Finished-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteReader]: ExecuteReader: SELECT "IsFullBackup", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC took 0:00:00:00.000

2023-03-02 07:38:20 -05 - [Information-Duplicati.Library.Main.Operation.DeleteHandler:RetentionPolicy-StartCheck]: Start checking if backups can be removed

This can be compared to the OP's log, if there is indeed more of it. As expected, the backup ends with the dblock and dindex uploads, which comprise the data, followed by the dlist, which gives the files and the blocks in each.
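To make that comparison less of an eyeball exercise, here is a rough Python sketch that pulls the backend events out of a log and reports which volume types finished uploading, in order. The regular expression is only inferred from the line layout in my sample above, and the names `EVENT_RE`, `volume_type`, `backend_events`, and `completed_uploads` are made up for illustration; none of this is part of Duplicati.

```python
import re

# Pattern inferred from the sample lines in this post (an assumption, not an
# official Duplicati log specification):
# "<date> <time> <tz> - [<level>-<source>-BackendEvent]: Backend event: <op> - <state>: <file> (<size>)"
EVENT_RE = re.compile(
    r"^(?P<time>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) \S+ - "
    r"\[[^\]]+-BackendEvent\]: Backend event: "
    r"(?P<op>\w+) - (?P<state>\w+): (?P<name>\S+) \((?P<size>[^)]+)\)"
)


def volume_type(name):
    """Return the part after the first dot, e.g. 'dblock', 'dindex' or 'dlist'."""
    parts = name.split(".")
    return parts[1] if len(parts) > 1 else "unknown"


def backend_events(lines):
    """Collect (time, operation, state, volume type, size) for each backend event line."""
    events = []
    for line in lines:
        m = EVENT_RE.match(line.strip())
        if m:
            events.append(
                (m["time"], m["op"], m["state"], volume_type(m["name"]), m["size"])
            )
    return events


def completed_uploads(lines):
    """Volume types of completed Put events, in log order."""
    return [e[3] for e in backend_events(lines) if e[1] == "Put" and e[2] == "Completed"]


# A few lines copied from the sample above; the profiling line is skipped.
SAMPLE = [
    "2023-03-02 07:38:14 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-b5e15e007b4d545bf8ea82654e73b6bba.dblock.zip.aes (21.31 MB)",
    "2023-03-02 07:38:14 -05 - [Profiling-Timer.Begin-Duplicati.Library.Main.Operation.Common.DatabaseCommon-CommitTransactionAsync]: Starting - CommitAfterUpload",
    "2023-03-02 07:38:17 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-i6d465ff4ae49429f9033225d96d0769f.dindex.zip.aes (228.78 KB)",
    "2023-03-02 07:38:18 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-i6d465ff4ae49429f9033225d96d0769f.dindex.zip.aes (228.78 KB)",
    "2023-03-02 07:38:20 -05 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Completed: duplicati-20230302T122030Z.dlist.zip.aes (915.34 KB)",
]

if __name__ == "__main__":
    for event in backend_events(SAMPLE):
        print(*event)
```

Running `completed_uploads` over a whole log file (for example, over `open(path)`) gives the order of finished uploads. If a log stops before a dlist shows up in that list, that would suggest the backup did not reach the final upload.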

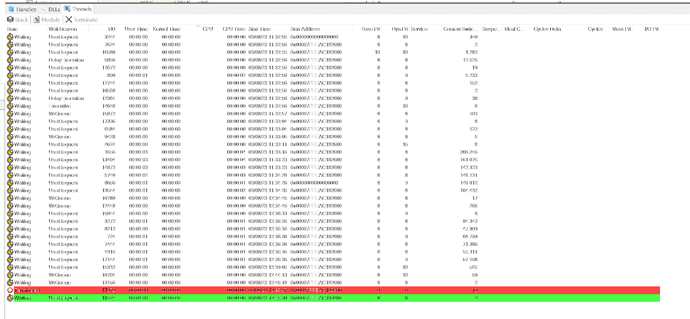

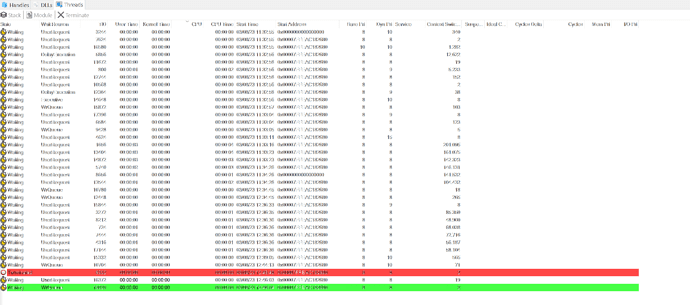

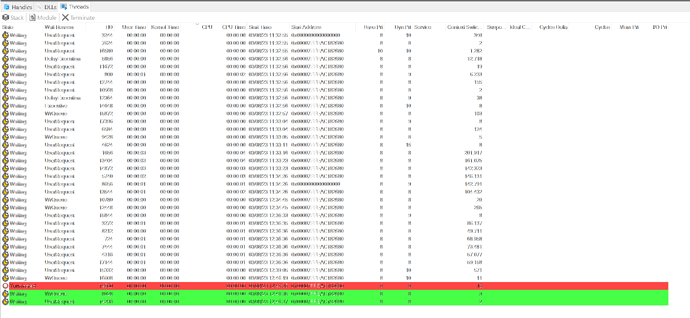

Actually, the OP's log looks like a log file, so maybe we can see a bit more of it to compare with my sample?

What’s there, though, is enough to show that it’s missing quite a few lines, unless they were edited out.

EDIT:

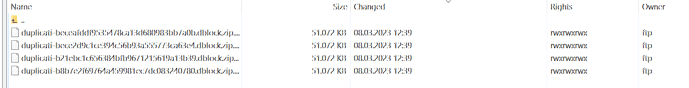

What are the last couple of files at the destination? That would be another clue to how far things got.