Hi All,

A few days ago I moved to TrueNAS Scale 24.04. I was able to install Duplicati as an app and successfully migrated several of the backups. Each time, Duplicati recreated the database and started backing up normally.

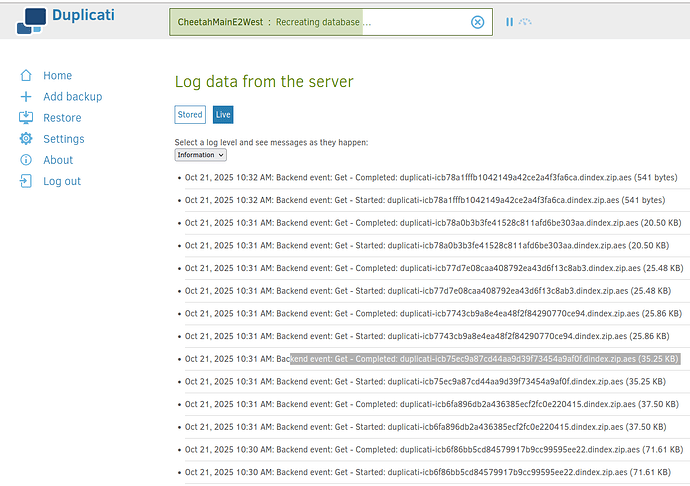

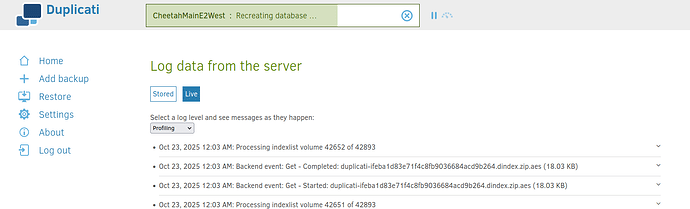

The challenge started when I decided to go ahead with the big backup (almost 2 TB in the cloud, on E2). The database recreation has been running for close to 48 hours and is still not done.

- Duplicati is installed as an app (it is Docker-based, but I just downloaded it as-is, with no customization).

- I can see the DB it has created so far; judging by the disk space, it is less than 3 GB.

- Everything is on spinning disks (no SSD).

- Currently the database restore is still working; it hasn't crashed yet.

- All the data had to move: previously the computer (Pop!_OS) backed up data from a network drive; now the data is on TrueNAS, so Duplicati sees it as local.

- I also had to reconsider what I am backing up to the cloud and, as a result, selected a bit less than originally (that is, less than what is already in the cloud).

- The TrueNAS machine has 8 GB of RAM.

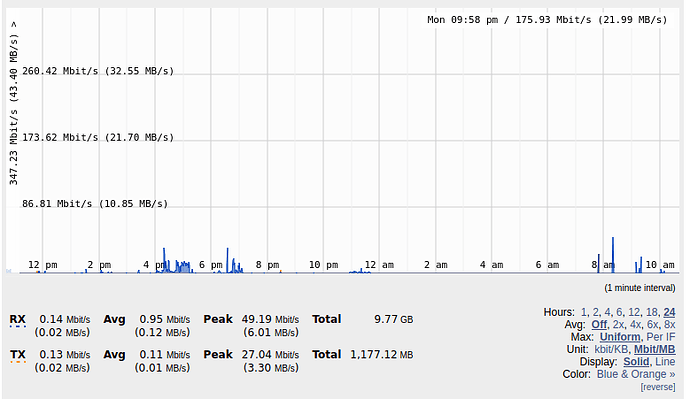

What puzzles me is the performance:

- The app reports only 1-2% CPU utilization.

- RAM used is roughly 300 MB.

- Network utilization is next to nothing.

- Disk reads/writes: less than 5 MB/s max for reads and less than 43 MB/s for writes. However, this is the total for the whole system, including my regular access to the server, and I am sure the server can do much more when it is pushed.
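
One number missing from the list above is I/O wait. Low CPU use together with low MB/s can still mean the spinning disks are the limit, if the work is mostly small random reads and writes rather than large sequential ones. Here is a minimal sketch for checking that, assuming a Linux host where `/proc/stat` is readable and Python 3 is available in the shell. The function names (`parse_cpu_line`, `iowait_fraction`, `measure`) are made up for illustration and are not part of Duplicati or TrueNAS.

```python
import time


def parse_cpu_line(line):
    """Return the counters from the aggregate 'cpu' line of /proc/stat."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    return [int(value) for value in fields[1:]]


def iowait_fraction(before, after):
    """Share of CPU time spent waiting on I/O between two 'cpu' samples."""
    deltas = [b - a for a, b in zip(parse_cpu_line(before), parse_cpu_line(after))]
    # Field order: user nice system idle iowait irq softirq steal guest guest_nice.
    # guest and guest_nice are already counted in user and nice, so sum only 8.
    total = sum(deltas[:8])
    if total <= 0:
        return 0.0
    return deltas[4] / total


def measure(interval=5.0, path="/proc/stat"):
    """Sample /proc/stat twice, `interval` seconds apart (assumes Linux)."""
    with open(path) as stat_file:
        first = stat_file.readline()
    time.sleep(interval)
    with open(path) as stat_file:
        second = stat_file.readline()
    return iowait_fraction(first, second)
```

Calling `print(f"{measure():.1%}")` while the recreation is running gives a rough figure. A consistently high value would point at disk latency rather than CPU, RAM, or network; a low value would suggest the bottleneck is somewhere else.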

If it were a question of resources, I expect I would have seen it, but it does not look like it. Does anybody have experience with such a configuration? Are there any areas that I may be overlooking?

Most likely I will let the process continue, but I expect it will take at least another 24-48 hours, in addition to the close to 48 hours already spent. That seems like quite a lot for a DB recreation.