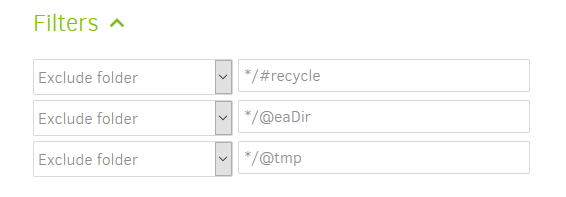

Synology DSM seems to treat folders whose names start with “@” as hidden system folders. Not only do these folders not show up in File Station, they also don’t show up in SMB shares. Because of the latter, I did not have to filter out the “@eaDir” folders in the Windows Duplicati job. Only after I moved the job over to Duplicati in Docker on the Synology did I notice the extra files.
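To see which files a Duplicati job running in Docker would pick up that DSM normally hides, here is a minimal Python sketch that walks a folder and skips every directory whose name starts with “@”, assuming that is the rule DSM applies. It is not Duplicati's filter syntax; it only shows which paths such an exclude filter has to cover. The folder layout in the usage part is hypothetical.

```python
import os
import tempfile
from pathlib import Path


def visible_files(root: str) -> list:
    """List files under root (relative, POSIX-style), skipping "@"-prefixed folders."""
    found = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Assumption: DSM hides any folder whose name starts with "@" (e.g. "@eaDir").
        # Pruning dirnames in place stops os.walk from descending into them.
        dirnames[:] = [d for d in dirnames if not d.startswith("@")]
        for name in filenames:
            rel = os.path.relpath(os.path.join(dirpath, name), root)
            found.append(Path(rel).as_posix())
    return sorted(found)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        # Hypothetical layout: a photo plus a DSM-style thumbnail folder next to it.
        thumbs = os.path.join(root, "pictures", "@eaDir", "a.jpg")
        os.makedirs(thumbs)
        Path(root, "pictures", "a.jpg").touch()
        Path(thumbs, "thumb.jpg").touch()
        print(visible_files(root))  # ['pictures/a.jpg']
```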

Now that I know about it, I can exclude these folders manually, but the Windows version is easier to handle in this regard. It also treats the “#recycle” folders as hidden and thus excludes them when the corresponding option is set in the backup job.

Not sure what you mean by temp files. Do you mean the third DOS/Windows attribute, “archive”? Linux has no equivalent for that either.
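For reference, Windows stores these flags as bits in a per-file attribute mask, and besides hidden, system and archive it also defines a separate temporary bit. A minimal Python sketch that decodes such a mask; the `FILE_ATTRIBUTE_*` constants come from Python's standard `stat` module, while the dot-file fallback for Linux is only the usual naming convention, not a real attribute.

```python
import os
import stat

# Windows attribute bits of interest; the stat module defines these constants
# on every platform, but only Windows fills in st_file_attributes.
ATTRIBUTE_NAMES = {
    stat.FILE_ATTRIBUTE_HIDDEN: "hidden",
    stat.FILE_ATTRIBUTE_SYSTEM: "system",
    stat.FILE_ATTRIBUTE_ARCHIVE: "archive",
    stat.FILE_ATTRIBUTE_TEMPORARY: "temporary",
}


def describe_attributes(bits: int) -> list:
    """Decode a Windows attribute bitmask into readable names."""
    return [name for flag, name in ATTRIBUTE_NAMES.items() if bits & flag]


def attributes_of(path: str) -> list:
    """Attributes of a real file; on Linux fall back to the dot-file convention."""
    bits = getattr(os.stat(path), "st_file_attributes", None)
    if bits is None:
        # Linux has no attribute mask: "hidden" is just a leading dot in the name.
        return ["hidden"] if os.path.basename(path).startswith(".") else []
    return describe_attributes(bits)


print(describe_attributes(stat.FILE_ATTRIBUTE_HIDDEN | stat.FILE_ATTRIBUTE_SYSTEM))
# ['hidden', 'system']
```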

The Windows version offers the following “Exclude” options:

- Hidden files
- System files
- Temporary files
- Files larger than: (a size you enter)

I assume that “Temporary files” automatically excludes files based on certain file-name features, like a .tmp extension or names beginning with ~. Unfortunately, I have not found any documentation on that yet.
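If the option does work by file name as assumed here (unconfirmed; it could just as well rely on Windows' own temporary file attribute), a name-based check might look like this Python sketch. The patterns are hypothetical examples, not taken from Duplicati.

```python
import fnmatch
import os

# Hypothetical name patterns for "temporary" files; NOT Duplicati's actual rules.
TEMP_PATTERNS = ["*.tmp", "*.temp", "~*"]


def looks_temporary(path: str) -> bool:
    """True if the file name matches one of the hypothetical temp patterns."""
    name = os.path.basename(path).lower()  # compare case-insensitively
    return any(fnmatch.fnmatch(name, pattern) for pattern in TEMP_PATTERNS)


for p in ["/photo/pictures/holiday.jpg", "/photo/pictures/upload.TMP", "/photo/~lock.docx"]:
    print(p, looks_temporary(p))
```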

You can find everything under “Computer” in the Source Data tab of the backup job. With the Docker version of Duplicati, you are presented with a view of the filesystem as the container sees it, which isn’t the same as the host NAS device. The only host folders you’ll see in the Docker view of the filesystem are the folders you’ve mapped into the container in the Docker configuration.
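Conceptually, the container only sees host folders through its volume mappings. A minimal Python sketch of that translation; the mappings below (`/volume1/photo` mounted as `/source/photo`, and so on) are hypothetical examples of what one might configure for the Duplicati container.

```python
from pathlib import PurePosixPath
from typing import Optional

# Hypothetical volume mappings (host folder -> folder inside the container).
VOLUMES = {
    "/volume1/photo": "/source/photo",
    "/volume1/homes": "/source/homes",
}


def container_path(host_path: str) -> Optional[str]:
    """Where a host path appears inside the container, or None if it is not mapped."""
    path = PurePosixPath(host_path)
    for host_root, container_root in VOLUMES.items():
        try:
            inside = path.relative_to(host_root)  # raises ValueError if not below host_root
        except ValueError:
            continue
        return str(PurePosixPath(container_root) / inside)
    return None  # unmapped host folders are invisible to the container


print(container_path("/volume1/photo/pictures/a.jpg"))  # /source/photo/pictures/a.jpg
print(container_path("/volume1/video/movie.mkv"))       # None
```

Comparing path components with `relative_to` rather than string prefixes keeps `/volume1/photos` from being mistaken for a subfolder of `/volume1/photo`.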

Yes, of course. But everything under “Computer” is a hard-coded path inside the backup job. On Windows, everything under “User Data” has the advantage that its path can be changed externally: when Duplicati is set to back up “My Documents” or “My Pictures”, I can change the location of these folders in Windows and Duplicati will still back them up without any change to the backup job.
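The difference between a hard-coded path and a symbolic folder can be sketched in Python. The `%NAME%` placeholder syntax and the `known_folders` lookup here are hypothetical stand-ins for whatever Duplicati and Windows actually use; the point is only that a symbolic entry is resolved each time the backup runs, while a hard-coded one is used exactly as stored.

```python
def resolve_source(entry: str, known_folders: dict) -> str:
    """Resolve a source entry: %NAME% is looked up at run time, anything else is literal."""
    if len(entry) > 2 and entry.startswith("%") and entry.endswith("%"):
        # Symbolic entry (hypothetical syntax): follow the folder wherever it lives now.
        return known_folders[entry[1:-1]]
    # Hard-coded entry: used exactly as stored in the job.
    return entry


job_sources = ["%MY_PICTURES%", "P:\\pictures"]
before = {"MY_PICTURES": "C:\\Users\\me\\Pictures"}
after = {"MY_PICTURES": "D:\\Pictures"}  # the user moved the folder in Windows
print([resolve_source(s, before) for s in job_sources])
print([resolve_source(s, after) for s in job_sources])
```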

Everything under “Computer” looks different on different computers, and even more so on different operating systems. On Linux/Synology you see shares (like /photo); on Windows you see drive letters (like P:).

Because of this I opted for the third alternative, adding a path directly. On Windows I added `\\nas-ip\share\folder`, which points to the very same location as `/photo/pictures/`, `P:\pictures` or “My Pictures” would. But if I move the job to another PC on the same network, it works out of the box with the same NAS IP, instead of my having to change the source first.

Why is this relevant? Because changing the source in Duplicati likely causes it to treat all files as new, even though the old and new “Source” entries point to the very same folders on the NAS. I am still testing this, and I realize that the behavior may change with any new Duplicati version anyway.
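A simplified Python model of why a changed source could look like all-new data, under the assumption (not verified against Duplicati's database) that previously backed-up files are remembered by their full source path. Keying by the path relative to the source root instead makes both snapshots match. Both roots are hypothetical examples.

```python
def new_files(previous: set, current: set) -> set:
    """Files in the current snapshot that the previous one does not know about."""
    return current - previous


def relative_keys(paths: set, root: str) -> set:
    """Strip the source root so only the path inside the source remains."""
    prefix = root.rstrip("/") + "/"
    return {p[len(prefix):] if p.startswith(prefix) else p for p in paths}


old_root = "//nas-ip/share/folder"  # hypothetical UNC-style source
new_root = "/source/photo"          # hypothetical container path, same files
inside = ["2023/a.jpg", "2023/b.jpg"]
previous = {f"{old_root}/{r}" for r in inside}
current = {f"{new_root}/{r}" for r in inside}

print(len(new_files(previous, current)))  # 2: every file looks new
print(len(new_files(relative_keys(previous, old_root),
                    relative_keys(current, new_root))))  # 0
```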

I’m not sure what you mean by this. You should be selecting your source folders under “Computer” in the Source Data tab. Host mode networking in Docker is unrelated.

“Host” mode networking makes the Docker container reachable at the same IP address as the Synology NAS. Instead of an isolated 172.17.0.0/16 network, you can reach it via your local subnet (usually 192.168.x.0/24). This way the container should reach the NAS at the very same IP as any Duplicati installation outside the container would (like 192.168.x.y), so the backup job can be moved in and out of containers without changing the “Source”.
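The two address ranges can be told apart with Python's standard `ipaddress` module. In this sketch 172.17.0.0/16 is the default bridge range mentioned above, while 192.168.1.0/24 and the example addresses are hypothetical stand-ins for a home network.

```python
import ipaddress

DOCKER_BRIDGE = ipaddress.ip_network("172.17.0.0/16")  # Docker's default bridge subnet
LAN = ipaddress.ip_network("192.168.1.0/24")            # hypothetical home subnet


def network_of(address: str) -> str:
    """Classify an address as the isolated Docker bridge, the LAN, or something else."""
    ip = ipaddress.ip_address(address)
    if ip in DOCKER_BRIDGE:
        return "docker-bridge"
    if ip in LAN:
        return "lan"
    return "other"


# Bridge mode: the container gets its own address on the internal bridge.
print(network_of("172.17.0.2"))    # docker-bridge
# Host mode: the container shares the NAS's own LAN address.
print(network_of("192.168.1.10"))  # lan
```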

Running the container with “host” networking also exposes the Duplicati web UI on port 8200 of the host IP.
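To confirm that the web UI actually answers on the host IP, a quick Python check can try to open a TCP connection. The NAS address below is a hypothetical example; 8200 is the port mentioned above.

```python
import socket


def web_ui_reachable(host: str, port: int = 8200, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, ...
        return False


if __name__ == "__main__":
    print(web_ui_reachable("192.168.1.10"))  # hypothetical NAS IP
```

This only proves that something is listening on the port, not that it is Duplicati.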

Again I’m not really sure what you are trying to do here. But Duplicati is correct. Linux doesn’t use backslashes like Windows does. The path separator character is the forward slash.
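Python's standard library ships both path rule sets, which makes the difference easy to see. A small sketch using the UNC path from this thread: under Windows rules the `\\server\share` prefix acts like a drive, while under POSIX rules a backslash is an ordinary filename character.

```python
import ntpath
import posixpath

unc = r"\\nas-ip\share\folder"

# Windows path rules: the UNC prefix \\server\share is treated like a drive.
print(ntpath.splitdrive(unc))  # ('\\\\nas-ip\\share', '\\folder')
# POSIX path rules: no "/" at all, so the whole string is one relative name,
# not a network location.
print(posixpath.split(unc))    # ('', '\\\\nas-ip\\share\\folder')
print(posixpath.isabs(unc))    # False
```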

I am trying to add a path that includes an IP address pointing to an SMB share. That share is on the very same NAS the container is running on, but since the container cannot access SMB shares, I cannot use this. It was worth a try, though.

In general I would not back up data via CIFS/SMB shares. It’s better to install Duplicati on the local machine/device and back up the normal local drive letters (Windows) or paths (Linux).

The drawback of that approach is that each backup job is only valid on the machine that created it. If you want to move backup jobs between machines without starting the whole backup/upload over, there are likely more or less painful ways of doing it right. I am trying different possibilities to find a “best practice” for when the need arises in the future.

I’m not familiar with the throttling feature of Duplicati, as I control/prioritize my outbound internet traffic a different way. But Docker should be no different in this regard than any other install of Duplicati. I don’t recall whether you can change the throttling on the fly, or whether it doesn’t go into effect until it starts the next file.

It seems that the backup job has to be restarted for Duplicati throttling to take effect, regardless of whether it’s set up in the job or in the general settings.

Synology’s “Traffic Control” can throttle Docker containers that use the BRIDGE interface. Containers on the HOST interface may work too, if the specific port is set to be throttled. I have yet to test whether this can be changed while an upload is running.

On my Windows PC I am using a program called “cFosSpeed” that lets me set up both priorities and bandwidth throttling. Unfortunately, priorities do not help much with Duplicati’s upload to SharePoint; I have to use throttling, otherwise my occasional online gaming traffic stalls (even though Duplicati has low priority and the game high priority). That is still a local control, though, whereas on the NAS I would first have to log into DSM to change it on the fly. Not much more work, just different.
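When picking a throttle value, the trade-off against upload duration is simple arithmetic. A small Python helper; the 100 GB and 10 Mbit/s figures are hypothetical, and decimal units (1 GB = 10^9 bytes, 1 Mbit = 10^6 bits) are assumed.

```python
def upload_hours(size_gb: float, limit_mbit_s: float) -> float:
    """Hours needed to upload size_gb gigabytes at a cap of limit_mbit_s megabits/s."""
    bits = size_gb * 8 * 10**9             # decimal gigabytes -> bits
    seconds = bits / (limit_mbit_s * 10**6)
    return seconds / 3600


print(round(upload_hours(100, 10), 1))  # 22.2
```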