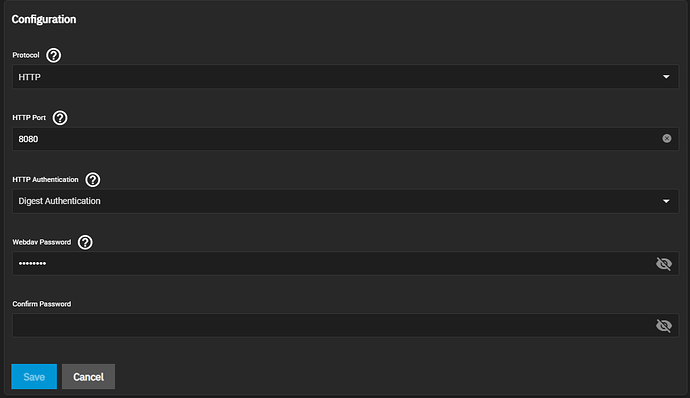

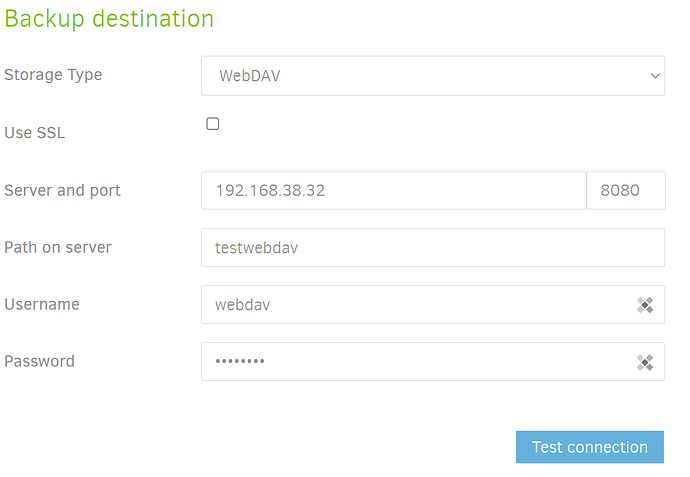

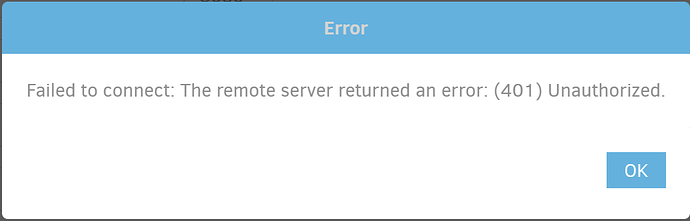

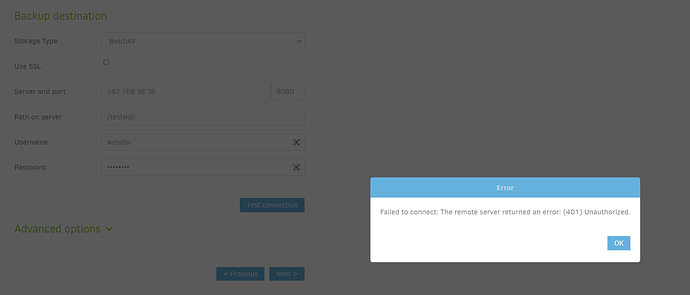

My first problem was that I was using digest auth on the server and basic auth on the client. I ran tcpdump on the server, loaded the capture into Wireshark, and found the answer that way.
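For anyone hitting the same mismatch, a quicker check than a packet capture might be to look at the `WWW-Authenticate` header the server sends back on an unauthenticated request. This is only a rough sketch using the Python standard library: the function names are made up for illustration, it assumes the server answers an unauthenticated `OPTIONS` request with a 401, and it assumes each header line carries a single challenge.

```python
import urllib.error
import urllib.request


def challenge_schemes(header_values):
    """Return the lowercased scheme name from each WWW-Authenticate value.

    Assumes one challenge per header line, e.g. 'Digest realm="x", nonce="y"'.
    """
    schemes = []
    for value in header_values:
        parts = value.strip().split(None, 1)
        if parts:
            schemes.append(parts[0].lower())
    return schemes


def advertised_schemes(url):
    """Send an unauthenticated OPTIONS request and list the schemes a 401 offers."""
    req = urllib.request.Request(url, method="OPTIONS")
    try:
        urllib.request.urlopen(req, timeout=10)
    except urllib.error.HTTPError as err:
        if err.code == 401:
            return challenge_schemes(err.headers.get_all("WWW-Authenticate") or [])
        raise
    return []  # no 401: the server did not ask for credentials
```

If `advertised_schemes` returns only `["digest"]` while the client is configured for basic auth, that would match the problem described above.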

So I now have a connection - yay

The backup still doesn't work, but it's a different issue now: it's not writing any files anywhere I can find. The progress goes through the first few files, but then stops and just hangs, as if it's trying to write but for whatever reason can't.

I have stopped and started Duplicati a couple of times, and I got the following warning when I started the job and then stopped it quickly:

{
  "DeletedFiles": 0,
  "DeletedFolders": 0,
  "ModifiedFiles": 0,
  "ExaminedFiles": 6,
  "OpenedFiles": 5,
  "AddedFiles": 5,
  "SizeOfModifiedFiles": 0,
  "SizeOfAddedFiles": 742539289,
  "SizeOfExaminedFiles": 1100146149,
  "SizeOfOpenedFiles": 742539289,
  "NotProcessedFiles": 0,
  "AddedFolders": 0,
  "TooLargeFiles": 0,
  "FilesWithError": 0,
  "ModifiedFolders": 0,
  "ModifiedSymlinks": 0,
  "AddedSymlinks": 0,
  "DeletedSymlinks": 0,
  "PartialBackup": true,
  "Dryrun": false,
  "MainOperation": "Backup",
  "CompactResults": null,
  "VacuumResults": null,
  "DeleteResults": null,
  "RepairResults": null,
  "TestResults": null,
  "ParsedResult": "Warning",
  "Version": "2.0.6.3 (2.0.6.3_beta_2021-06-17)",
  "EndTime": "2023-01-16T21:43:29.426349Z",
  "BeginTime": "2023-01-16T21:42:51.476583Z",
  "Duration": "00:00:37.9497660",
  "MessagesActualLength": 45,
  "WarningsActualLength": 2,
  "ErrorsActualLength": 0,
  "Messages": [
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Backup has started",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: ()",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-RemoteUnwantedMissingFile]: removing file listed as Temporary: duplicati-20230116T200052Z.dlist.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-KeepIncompleteFile]: keeping protected incomplete remote file listed as Temporary: duplicati-20230116T213455Z.dlist.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b86bc8df1bd454dee9b04e748354199d5.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b90d742dc7bf849709caf0663ad414cb9.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-bf3c9b8711e70460bbdc8530350c00bf1.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b32b709b633a0428b940ca7523a039ceb.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b3a4690478cc44d89a9f633e7f7d288d2.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b6216bcda0a37419cb6f69a5909e8cd2b.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b236fc62d79254efab120c102de297fa2.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b4eacccbc648b422f87b0de0c5954381f.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b8ca56466177349cbb1a400780b16d32b.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b43bf2fba0260432b89f9d5fb36832010.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b05f9e779cac34de5941a869dde22e41e.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-ba572be775b1a419499ca843234da1306.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b92b020a75c6b4048b1f00dbdff0b807b.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-ba028c8cb45114803b74d378363958c64.dblock.zip.aes",
    "2023-01-16 21:42:51 +00 - [Information-Duplicati.Library.Main.Operation.FilelistProcessor-SchedulingMissingFileForDelete]: scheduling missing file for deletion, currently listed as Uploading: duplicati-b160ef538ce1e4d10a6a19b4b7e55c970.dblock.zip.aes"
  ],
  "Warnings": [
    "2023-01-16 21:42:51 +00 - [Warning-Duplicati.Library.Main.Operation.Backup.UploadSyntheticFilelist-MissingTemporaryFilelist]: Expected there to be a temporary fileset for synthetic filelist (1, duplicati-20230116T213455Z.dlist.zip.aes), but none was found?",
    "2023-01-16 21:43:29 +00 - [Warning-Duplicati.Library.Main.Operation.BackupHandler-CancellationRequested]: Cancellation was requested by user."
  ],
  "Errors": [],
  "BackendStatistics": {
    "RemoteCalls": 2,
    "BytesUploaded": 0,
    "BytesDownloaded": 0,
    "FilesUploaded": 0,
    "FilesDownloaded": 0,
    "FilesDeleted": 0,
    "FoldersCreated": 0,
    "RetryAttempts": 0,
    "UnknownFileSize": 0,
    "UnknownFileCount": 0,
    "KnownFileCount": 0,
    "KnownFileSize": 0,
    "LastBackupDate": "0001-01-01T00:00:00",
    "BackupListCount": 1,
    "TotalQuotaSpace": 0,
    "FreeQuotaSpace": 0,
    "AssignedQuotaSpace": -1,
    "ReportedQuotaError": false,
    "ReportedQuotaWarning": false,
    "MainOperation": "Backup",
    "ParsedResult": "Success",
    "Version": "2.0.6.3 (2.0.6.3_beta_2021-06-17)",
    "EndTime": "0001-01-01T00:00:00",
    "BeginTime": "2023-01-16T21:42:51.477099Z",
    "Duration": "00:00:00",
    "MessagesActualLength": 0,
    "WarningsActualLength": 0,
    "ErrorsActualLength": 0,
    "Messages": null,
    "Warnings": null,
    "Errors": null
  }
}
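A report this long is easier to read once it is boiled down to a few numbers. The following is a minimal sketch, not anything Duplicati ships: the function names are hypothetical, and it assumes the report was saved as text, possibly with the quotes curled by the forum software. It counts the message IDs and pulls out the upload counters.

```python
import json
import re


def load_pasted_report(text):
    """Parse a report pasted from a forum, where quotes may have been curled."""
    straight = text.replace("\u201c", '"').replace("\u201d", '"')
    return json.loads(straight)


def summarize(report):
    """Count message IDs and pull out the fields that show whether anything uploaded."""
    # Log tags look like [Level-Some.Namespace.Class-MessageId]; capture MessageId.
    tag = re.compile(r"\[(\w+)-[^\]]*-(\w+)\]")
    counts = {}
    for line in report.get("Messages") or []:
        match = tag.search(line)
        if match:
            message_id = match.group(2)
            counts[message_id] = counts.get(message_id, 0) + 1
    stats = report.get("BackendStatistics") or {}
    return {
        "partial": report.get("PartialBackup"),
        "bytes_uploaded": stats.get("BytesUploaded"),
        "files_uploaded": stats.get("FilesUploaded"),
        "warnings": len(report.get("Warnings") or []),
        "message_ids": counts,
    }
```

In the report above, the fields this pulls out are `PartialBackup` (true), `BytesUploaded` and `FilesUploaded` (both 0), and a run of `SchedulingMissingFileForDelete` messages for files still listed as Uploading.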

I suspect the important bit is under Warnings. I think that, for whatever reason, the upload is failing.

I have checked by connecting to the WebDAV server with WinSCP, and I can write files correctly.

I should add that I am using the same Duplicati instance to upload to Storj via S3.

If I run the job from the command line I get:

Backup started at 01/16/2023 21:53:15
Checking remote backup ...
Listing remote folder ...
Listing remote folder ...
Scanning local files ...
20 files need to be examined (3.12 GB) (still counting)
7750 files need to be examined (44.43 GB) (still counting)
15243 files need to be examined (101.34 GB) (still counting)
15243 files need to be examined (101.34 GB) (still counting)
22539 files need to be examined (103.01 GB) (still counting)
29114 files need to be examined (104.66 GB) (still counting)
34494 files need to be examined (105.66 GB) (still counting)
37655 files need to be examined (106.45 GB) (still counting)
40949 files need to be examined (107.20 GB) (still counting)
44004 files need to be examined (107.89 GB) (still counting)
Uploading file (49.94 MB) ...
47230 files need to be examined (108.60 GB) (still counting)
Uploading file (49.99 MB) ...
Uploading file (50.00 MB) ...
Uploading file (49.91 MB) ...
59701 files need to be examined (111.08 GB) (still counting)
75633 files need to be examined (114.59 GB) (still counting)
93504 files need to be examined (118.63 GB) (still counting)
118481 files need to be examined (124.51 GB) (still counting)
147194 files need to be examined (130.70 GB) (still counting)
173143 files need to be examined (136.26 GB) (still counting)
200631 files need to be examined (142.28 GB) (still counting)
227813 files need to be examined (148.17 GB) (still counting)
254939 files need to be examined (153.69 GB) (still counting)
282265 files need to be examined (159.68 GB) (still counting)
312651 files need to be examined (166.36 GB) (still counting)
347529 files need to be examined (174.23 GB) (still counting)
384656 files need to be examined (182.04 GB) (still counting)
423658 files need to be examined (190.39 GB) (still counting)
452765 files need to be examined (199.38 GB) (still counting)
488940 files need to be examined (211.37 GB) (still counting)
525726 files need to be examined (223.64 GB) (still counting)
561808 files need to be examined (235.12 GB) (still counting)
598016 files need to be examined (246.49 GB) (still counting)
634209 files need to be examined (258.44 GB) (still counting)
670579 files need to be examined (269.69 GB) (still counting)
674020 files need to be examined (270.94 GB)

The job hangs at this point.

There is no sign of the files appearing at the remote end.