Hi guys,

I have a backup job (Film) with two folders, for a total of 5TB of data.

The DB size is 9GB.

The backup is fine and has been complete for months…

Today I moved 300GB from one folder to another and started the job so that it would dedup the files in the new folder…

The problem is that it is taking too much time (in my opinion) to dedup these files.

I’m talking about large files (>2GB each).

Is there a way to speed up deduplication?

If no file contents are changed then moving the files should not cause any new data to be uploaded.

Additionally, Duplicati doesn’t really use any resources or time deduplicating because it’s not something it actively has to “work on”. It just looks through all the files for changes, then hashes the changed file pieces and uploads a piece only if its hash doesn’t already exist.
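To make the idea concrete, here is a minimal sketch of hash-based block deduplication. It is not Duplicati's actual code: the function names, the tiny block size, and the in-memory set standing in for the database are all assumptions made for illustration.

```python
import hashlib

BLOCK_SIZE = 4  # tiny on purpose, for illustration; real backups use far larger blocks


def split_blocks(data: bytes, block_size: int = BLOCK_SIZE):
    """Yield fixed-size pieces of data (the last piece may be shorter)."""
    for offset in range(0, len(data), block_size):
        yield data[offset:offset + block_size]


def backup_file(data: bytes, known_hashes: set) -> int:
    """Hash every block and count only blocks whose hash is new.

    Returns the number of bytes that would be uploaded.
    """
    uploaded = 0
    for block in split_blocks(data):
        digest = hashlib.sha256(block).hexdigest()
        if digest not in known_hashes:
            known_hashes.add(digest)  # hypothetical stand-in for the local database
            uploaded += len(block)
    return uploaded


store = set()
first = backup_file(b"abcdefghijkl", store)  # new content: every block is uploaded
moved = backup_file(b"abcdefghijkl", store)  # same content seen again under a new path
print(first, moved)  # prints "12 0"
```

Note that in this sketch the second pass uploads nothing, yet it still has to read and hash every byte of the file to find that out.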

However, 9GB is a fairly large database, so you may be seeing poor performance due to the size of the database.

What part of the backup is taking a long time?

It’s not uploading files… it is deduplicating a folder moved from one source to the other (in the same job, obviously).

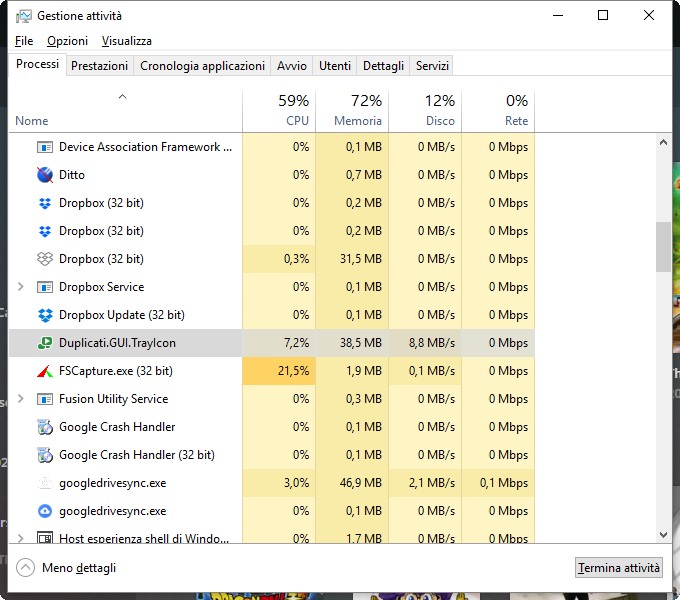

What I see in Resource Monitor is a constant read of the moved files (8MB/s) to dedup them in the new folder…

What I’m trying to figure out is why it is taking so long to dedup 300GB of files…

8MB per second does not seem very fast.

When Duplicati needs to determine if the files have changed it checks the metadata, but after moving the files it probably has to hash each file.

You can’t hash the file without reading it, and at 8MB/sec it takes over 10 hours to read 300GB.
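For anyone who wants to check the arithmetic, here is a quick sketch. The helper name `read_hours` is made up for this post, and the result shifts a little depending on whether GB and MB are read as decimal or binary units, so all three readings are shown.

```python
def read_hours(total_bytes: float, bytes_per_second: float) -> float:
    """Hours needed to read total_bytes at a constant rate."""
    return total_bytes / bytes_per_second / 3600


GB, MB = 10**9, 10**6      # decimal units
GiB, MiB = 2**30, 2**20    # binary units

decimal = read_hours(300 * GB, 8 * MB)    # both sizes decimal
binary = read_hours(300 * GiB, 8 * MiB)   # both sizes binary
mixed = read_hours(300 * GiB, 8 * MB)     # binary data size, decimal transfer rate

print(round(decimal, 1), round(binary, 1), round(mixed, 1))  # prints "10.4 10.7 11.2"
```

Whichever way the units are read, a full pass over the moved files at this speed takes most of a day of continuous reading.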

I agree…

I’ll see if I can do something to make the reads faster…

Nothing I can do… the read is still slow…

Any suggestions?

uppp

How can I speed up reading?

This is my config for video files: compression disabled and deduplication simplified.

--zip-compression-level=0

--blocksize=1MB
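A rough sketch of why a larger block size helps on big video files: fewer blocks means fewer hashes for the local database to track. The 100KB figure below is an assumption about the default block size at the time, the units are assumed to be 1024-based, and `block_count` is just a helper made up for this post.

```python
def block_count(total_bytes: int, block_size: int) -> int:
    """Number of fixed-size blocks needed to cover total_bytes (rounded up)."""
    return -(-total_bytes // block_size)  # integer ceiling division


TOTAL = 5 * 10**12               # the 5TB source size from the first post
SMALL_BLOCK = 100 * 1024         # assumed default block size of 100KB
LARGE_BLOCK = 1024 * 1024        # --blocksize=1MB

small = block_count(TOTAL, SMALL_BLOCK)
large = block_count(TOTAL, LARGE_BLOCK)

print(f"100KB blocks: {small:,}")
print(f"1MB blocks:   {large:,}")
print(f"ratio:        {small / large:.1f}x fewer blocks to track")
```

Under those assumptions the smaller block size produces tens of millions of blocks for a backup this size, which would go some way toward explaining a multi-gigabyte database.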

Unfortunately I can’t change the block size on a completed backup…

I’ll try disabling compression, but I think it is applied only to new files…