No, it is not necessary to initialize from scratch.

Duplicacy has two ways of storing the settings of each repository (the local folders) and of the storages to which each repository sends its backups:

- a “.duplicacy” folder inside each repository (impractical …)

- a centralized folder with all configurations of all repositories.

I use the second format. In this format, each repository contains only a small file with a single line: the path to its configuration in the centralized folder.

To make this clearer:

I have the folder d:\myprojects that I want to back up (it’s a “repository” in Duplicacy’s nomenclature);

I have a centralized settings folder, with one subfolder for each repository:

    \centralized_configs
    ├── my…
    ├── my…
    ├── myprojects
    └── my…

In the repository (d:\myprojects) there is only a .duplicacy file with this single line:

    \centralized_configs\myprojects

(no key, no password, nothing else, just the path)
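To make the two layouts concrete, here is a minimal Python sketch of how a script could find where a repository’s settings live, assuming exactly the behavior described above: a `.duplicacy` folder holds the settings itself, while a `.duplicacy` file holds one line with the path to the centralized subfolder. The function name `resolve_preferences_dir` is hypothetical; this is not Duplicacy’s own code.

```python
from pathlib import Path


def resolve_preferences_dir(repository: Path) -> Path:
    """Return the folder that holds a repository's Duplicacy settings.

    Hypothetical helper modelling the two layouts described in the post:
    - <repository>/.duplicacy is a folder: the settings live inside it;
    - <repository>/.duplicacy is a file: its single line is the path to
      the repository's subfolder in the centralized configuration folder.
    """
    marker = repository / ".duplicacy"
    if marker.is_dir():
        return marker
    if marker.is_file():
        # One line, just the path (no key, no password).
        return Path(marker.read_text(encoding="utf-8").strip())
    raise FileNotFoundError(f"no .duplicacy folder or file in {repository}")
```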

So in the script I just create this small file inside the temporary folder into which I’m going to restore the test files.
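That step can be sketched in Python as below. It assumes the centralized layout shown above; the `\centralized_configs\myprojects` path is the one from this post, and `make_temp_restore_folder` is a hypothetical name. The file is written with just the path, matching the one-line content described above.

```python
import tempfile
from pathlib import Path

# Centralized settings for d:\myprojects, as in the example above.
CENTRAL_PREFS = Path(r"\centralized_configs\myprojects")


def make_temp_restore_folder(prefs_dir: Path = CENTRAL_PREFS) -> Path:
    """Create an empty temporary folder whose .duplicacy file points at an
    existing centralized configuration, so restores can run from there."""
    folder = Path(tempfile.mkdtemp(prefix="duplicacy_test_"))
    # Only the path goes in the file: no key, no password.
    (folder / ".duplicacy").write_text(str(prefs_dir), encoding="utf-8")
    return folder
```

The caller is responsible for deleting the folder (for example with `shutil.rmtree`) once the restored test files have been checked.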

Then you just run:

    duplicacy restore -storage "storage_where_my_file_is" file1.txt

(without worrying about the subfolder, etc.)

If you run this command from the original folder, it restores the file to its original subfolder (the original location). If you run it from a temporary folder, it restores into that temporary folder, rebuilding the folder structure for the file.
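Because the working directory decides where restored files land, a script can pick the destination simply by choosing `cwd`. A minimal Python sketch, assuming `duplicacy` is on the PATH; the helper names are hypothetical. It also passes `-r <revision>`, since, as far as I know, `duplicacy restore` needs the revision number of the snapshot to restore from.

```python
import subprocess
from pathlib import Path


def restore_command(storage: str, revision: int, *files: str) -> list[str]:
    """Build the restore command line for one storage and snapshot revision."""
    return ["duplicacy", "restore", "-r", str(revision), "-storage", storage, *files]


def restore_into(folder: Path, storage: str, revision: int, *files: str) -> None:
    """Run the restore from `folder`: the original repository restores in
    place, a temporary folder receives the files with their subfolders."""
    subprocess.run(restore_command(storage, revision, *files), cwd=folder, check=True)
```

For example, `restore_into(folder, "storage_where_my_file_is", 3, "file1.txt")` with the folder created for the test; the revision `3` is only a placeholder.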

Or use patterns (see the Duplicacy guide “Include Exclude Patterns”).
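With patterns, the file names are replaced by include/exclude patterns placed after `--`, which ends the option list. The sketch below only builds the argument list; the `+` prefix marks an include pattern in the syntax of the “Include Exclude Patterns” guide, but check that guide for how multiple patterns and folders are matched. `restore_with_patterns` and the patterns in the example are hypothetical.

```python
def restore_with_patterns(storage: str, revision: int, patterns: list[str]) -> list[str]:
    """Build a restore command that selects files by pattern instead of by name."""
    # "--" marks the end of the options, so patterns starting with "-"
    # (exclude patterns) are not mistaken for flags.
    return ["duplicacy", "restore", "-r", str(revision), "-storage", storage, "--", *patterns]


# Hypothetical example: restore two test files by include pattern.
example = restore_with_patterns("storage_where_my_file_is", 3, ["+file1.txt", "+file2.txt"])
```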

For these scripts (Duplicacy backups), my philosophy is this:

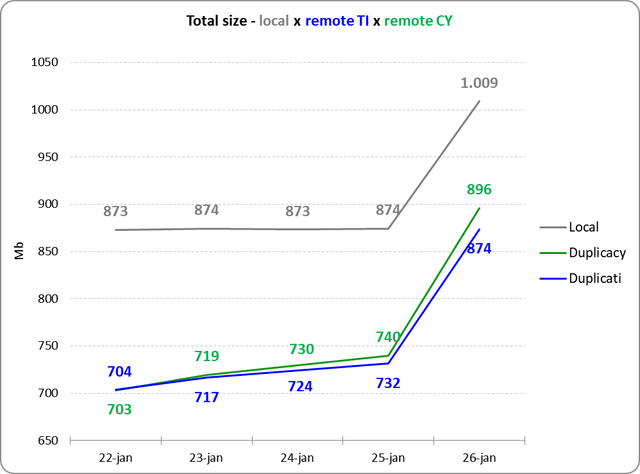

“let’s put in a file all the commands that I would have to type, and schedule the execution of this file”
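That philosophy translates directly into a script: a list of the commands you would type, run in order, with the scheduler calling the script. A minimal Python sketch; the storage name `my_storage` is hypothetical, and stopping at the first failure is my own choice, not something Duplicacy requires.

```python
import subprocess
import sys
from pathlib import Path

# The commands you would otherwise type by hand: (working folder, command).
# "my_storage" is a hypothetical storage name.
COMMANDS = [
    (Path(r"d:\myprojects"), ["duplicacy", "backup", "-storage", "my_storage"]),
]


def run_all(commands) -> int:
    """Run each command from its working folder, in order.

    Stops at the first failing command and returns its exit code;
    returns 0 when every command succeeds.
    """
    for cwd, argv in commands:
        print(f"[{cwd}] {' '.join(argv)}", file=sys.stderr, flush=True)
        result = subprocess.run(argv, cwd=cwd)
        if result.returncode != 0:
            return result.returncode
    return 0
```

To schedule it, end the file with `sys.exit(run_all(COMMANDS))` and register the script with the system scheduler (Task Scheduler on Windows, cron elsewhere).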