No, but you already found out that it's 100 KB by default. Blocksize is the value that can't be changed later.

If you think your maximum backup ever will be 30 TB, blocksize should be something like 10 - 30 MB.
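One way to read the numbers above is "keep the block count somewhere around a million." The 100 KB default gives about a million blocks at 100 GB, and 30 MB gives about a million at 30 TB. The sketch below is a hypothetical helper built on that assumed target, using decimal units for simplicity. It is not a documented Duplicati rule or function.

```python
# Hypothetical sizing helper. ASSUMPTION: aim for roughly 1 million blocks,
# inferred from the 30 TB -> 10-30 MB guidance; decimal units for simplicity.
KB = 1000
MB = 1000 * KB
TB = 1000 ** 4

DEFAULT_BLOCKSIZE = 100 * KB  # Duplicati's default, approximated in decimal units


def suggest_blocksize(backup_bytes: int, target_blocks: int = 1_000_000) -> int:
    """Return a blocksize in bytes, never smaller than the default."""
    needed = -(-backup_bytes // target_blocks)  # ceiling division
    return max(DEFAULT_BLOCKSIZE, needed)


def block_count(backup_bytes: int, blocksize: int) -> int:
    """Rough block count; ignores deduplication and each file's partial last block."""
    return -(-backup_bytes // blocksize)


print(suggest_blocksize(30 * TB) // MB)      # 30 (MB)
print(block_count(30 * TB, 100 * KB))        # 300000000 blocks at the default
print(block_count(30 * TB, 30 * MB))         # 1000000 blocks at 30 MB
```

At the default blocksize, a 30 TB backup would need about 300 times as many blocks as the assumed target. That is the reason to pick a bigger value up front, since blocksize can't be changed later.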

Choosing sizes in Duplicati discusses both sizes. However, remote volume size can be changed later (though compact may or may not repackage existing files), so I'm less worried about it now.

If you don't like the 50 MB remote volume size, make it bigger. That might be a good idea anyway for a big backup.

The sizes guide above explains why making it larger can hurt you: restores become less efficient. Keeping it small, however, creates a lot of files in the backup folder. Maybe subfolders will exist someday.
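Here is a quick way to see the file-count side of that tradeoff. The sketch assumes roughly one dindex file per dblock volume. It ignores dlist files and compression, and it uses decimal units, so treat the results as ballpark figures only.

```python
# Rough count of remote files for a given remote volume (dblock) size.
# ASSUMPTIONS: about one dindex file per dblock file; dlist files and
# compression ignored; decimal units.
MB = 1000 ** 2
GB = 1000 ** 3
TB = 1000 ** 4


def remote_file_count(stored_bytes: int, volume_size: int, index_per_volume: bool = True) -> int:
    dblocks = -(-stored_bytes // volume_size)  # ceiling division
    return dblocks * 2 if index_per_volume else dblocks


for vol in (50 * MB, 500 * MB, 2 * GB):
    print(f"{vol // MB} MB volumes: {remote_file_count(30 * TB, vol)} files")
```

Going from 50 MB to 2 GB volumes cuts the count by a factor of 40. The cost is that any restore needing even one block from a volume has to download that whole volume.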

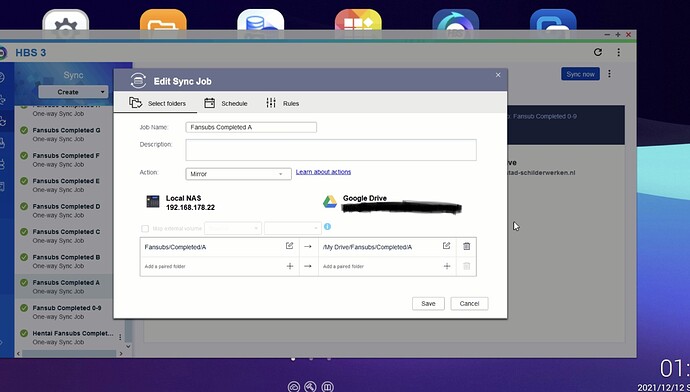

You could consider splitting your backup into a few smaller ones, if there’s a reasonable way to do that.

This will reduce the need to increase all the sizes in order to ease strain on Duplicati, Google Drive, etc.

You know about Google Drive’s 750 GB per day upload limit, right? If you have fast Internet, beware of it.
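To put "fast Internet" into numbers, the sketch below assumes the 750 GB limit is in decimal gigabytes and that the uploaded data doesn't compress. It computes the sustained upload speed that reaches the limit in one day, and the minimum number of days an initial 30 TB upload would take.

```python
# Back-of-envelope numbers for the 750 GB/day upload limit.
# ASSUMPTIONS: decimal units; backup data is already incompressible.
GB = 1000 ** 3
TB = 1000 ** 4
DAILY_LIMIT = 750 * GB
SECONDS_PER_DAY = 24 * 60 * 60


def limit_hit_mbps() -> float:
    """Sustained upload speed (Mbit/s) that reaches the daily limit in 24 hours."""
    return DAILY_LIMIT * 8 / SECONDS_PER_DAY / 1e6


def min_days_for_initial_upload(total_bytes: int) -> int:
    """Fewest whole days needed to upload total_bytes under the daily limit."""
    return -(-total_bytes // DAILY_LIMIT)


print(round(limit_hit_mbps(), 1))             # 69.4 Mbit/s
print(min_days_for_initial_upload(30 * TB))   # 40 days
```

Any connection that can sustain more than about 70 Mbit/s upload can hit the limit within a day.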

You might be missing the reason for blocks. Among other things, they provide deduplication and small uploads when small changes are made, as opposed to, say, uploading an entire slightly changed large file again.
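A toy model shows both effects. It uses fixed-size blocks keyed by SHA-256, with a tiny 4-byte blocksize so the output is easy to follow. It is a simplified illustration of block-based deduplication, not Duplicati's actual code.

```python
import hashlib

BLOCKSIZE = 4  # tiny for illustration; Duplicati's default is 100 KB


def split_blocks(data: bytes, blocksize: int = BLOCKSIZE) -> list:
    """Cut data into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + blocksize] for i in range(0, len(data), blocksize)]


class BlockStore:
    """Keeps each unique block once, keyed by its hash."""

    def __init__(self) -> None:
        self.blocks = {}

    def backup(self, data: bytes) -> int:
        """Store a file's blocks; return how many new blocks had to be uploaded."""
        new = 0
        for block in split_blocks(data):
            digest = hashlib.sha256(block).hexdigest()
            if digest not in self.blocks:
                self.blocks[digest] = block
                new += 1
        return new


store = BlockStore()
print(store.backup(b"AAAABBBBCCCCDDDD"))  # 4: first backup uploads every block
print(store.backup(b"AAAABBBBCCCXDDDD"))  # 1: one changed byte, one new block
print(store.backup(b"BBBBAAAA"))          # 0: another file made of known blocks
```

With fixed-size blocks, a changed byte costs one block. An inserted byte would shift the boundaries of every later block in that file.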

Features talks about this and other things. Block-based backup is what most advanced backup programs do now. The drawback is complexity. Some people prefer to use lots of space and keep many full copies of their files.

The backup process explained gives a simple explanation. How the backup process works provides technical detail.

If you’re saying that you want a zip file per source file, that’s totally contrary to a deduplicated block-based backup where a single .zip file has a group of blocks that may be part of a number of different source files.
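The sketch below illustrates why one .zip per source file doesn't fit. It stores unique fixed-size blocks in order and fills size-limited volumes, which stand in for the remote .zip files. For each volume, it reports which source files contributed blocks. It is a hypothetical toy with made-up file names and sizes, not Duplicati's real packing logic.

```python
import hashlib


def pack_volumes(files: dict, blocksize: int, volume_size: int) -> list:
    """Toy packer: store each unique block once, filling volumes in order.

    Returns, per volume, the sorted names of source files whose blocks
    were first stored in that volume.
    """
    volumes = [set()]
    seen = set()
    used = 0
    for name, data in files.items():
        for i in range(0, len(data), blocksize):
            block = data[i:i + blocksize]
            digest = hashlib.sha256(block).hexdigest()
            if digest in seen:
                continue  # duplicate block: already stored in an earlier volume
            seen.add(digest)
            if used + len(block) > volume_size and used > 0:
                volumes.append(set())  # current volume is full: start another
                used = 0
            volumes[-1].add(name)
            used += len(block)
    return [sorted(v) for v in volumes]


files = {"a.txt": b"AAAABBBB", "b.txt": b"CCCCAAAA", "c.txt": b"DDDD"}
print(pack_volumes(files, blocksize=4, volume_size=12))
# [['a.txt', 'b.txt'], ['c.txt']]
```

The first volume holds blocks from two files. b.txt's second block is never stored again because a.txt already supplied an identical one, so the volumes don't line up with source files at all.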

If Duplicati is ever able to add subfolders, they likely won't be source folder names. Source folders are stored much like files, so they get timestamps and security attributes, and they have no character restrictions that depend on the destination (you can back up file and folder names the destination can't write).

How does hbs3 back up different versions of a file? As complete files? What about the rest of the unchanged files? Some backup programs at least don't upload the complete set of files for every backup, but they might upload complete copies of changed files.

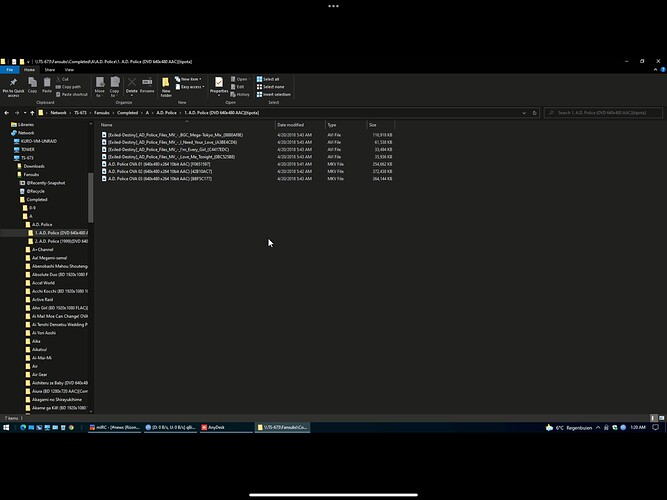

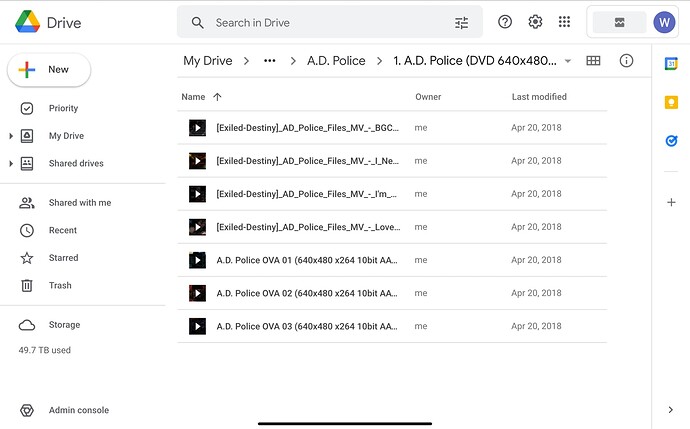

What you’re talking about with keeping the source tree shape at the destination is more what a sync does.

From the Overview:

> Duplicati is not:
>
> - A file synchronization program.