I’m skeptical that this is so. Got a good citation? My guess is it’s a file-filtered version of the usual image backup.

Image backups these days can do better than copying all sectors, whether used or free space. Generally they know about specific filesystems and know how to back up only the needed data, meaning on Windows they probably study the NTFS Master File Table and file cluster information.

I used to use Macrium Reflect Free for occasional image backup, for the full coverage that frequent Duplicati file backup didn’t get. Macrium to a USB drive ran fast, so much so that I think Macrium must be optimizing for physical hard drive head position to avoid seeking, which kills throughput.
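To show why read order would matter on a spinning drive, here is a toy sketch. It is not how Macrium works (I don't know its internals); the file names and starting clusters are made up, and "seek distance" is just the difference between cluster numbers.

```python
def total_seek_distance(start_clusters):
    """Sum of head movements (in clusters) when reading in the given order."""
    position = 0
    distance = 0
    for cluster in start_clusters:
        distance += abs(cluster - position)
        position = cluster
    return distance


# Hypothetical files mapped to the cluster where each one starts on disk.
files = {"a.txt": 9000, "b.txt": 100, "c.txt": 5000, "d.txt": 300}

# A filesystem-unaware tool reads in directory-walk order.
walk_order = list(files.values())
# A filesystem-aware tool could sort by on-disk position first.
disk_order = sorted(files.values())

walk_distance = total_seek_distance(walk_order)
disk_distance = total_seek_distance(disk_order)

if __name__ == "__main__":
    print("walk order seek distance:", walk_distance)
    print("disk order seek distance:", disk_distance)
```

With these made-up numbers the sorted order moves the head about a third as far, which is the kind of saving a tool that reads the MFT could plausibly get.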

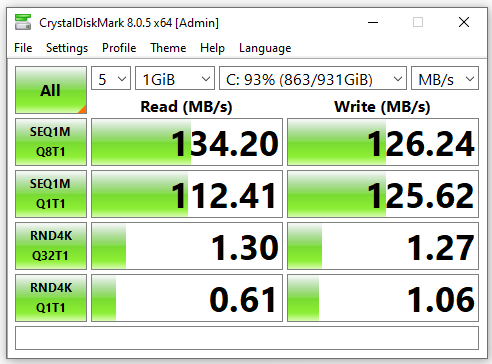

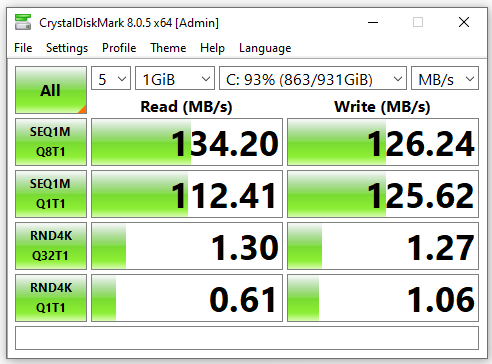

Look at a drive benchmark, or benchmark your own. Here’s my hard drive on Crystal DiskMark:

So there’s an example of how much faster sequential is. Trying to achieve that is worthwhile, but normal programs are not filesystem-aware. They let the OS abstract all of that away.
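If you want a rough do-it-yourself comparison, something like the sketch below reads the same temporary file once in order and once in shuffled order. Caveats: the file size and block size are arbitrary choices of mine, and the OS cache will hide most of the difference on a small, freshly written file, so treat it as an illustration of the access patterns and not as a replacement for a real benchmark tool.

```python
import os
import random
import tempfile
import time

BLOCK = 4096  # bytes per read; an arbitrary choice for this sketch


def read_blocks(path, offsets):
    """Read one BLOCK at each offset and return throughput in MiB/s."""
    start = time.perf_counter()
    total = 0
    with open(path, "rb", buffering=0) as f:
        for off in offsets:
            f.seek(off)
            total += len(f.read(BLOCK))
    elapsed = time.perf_counter() - start
    return total / (1024 * 1024) / max(elapsed, 1e-9)


def compare(size_mb=8):
    """Return (sequential, random) read throughput for a temp file."""
    size = size_mb * 1024 * 1024
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(os.urandom(size))
        path = tmp.name
    try:
        sequential = list(range(0, size, BLOCK))
        shuffled = sequential[:]
        random.shuffle(shuffled)
        return read_blocks(path, sequential), read_blocks(path, shuffled)
    finally:
        os.remove(path)


if __name__ == "__main__":
    seq, rnd = compare()
    print(f"sequential: {seq:.1f} MiB/s, random: {rnd:.1f} MiB/s")
```

To see hard-drive behavior you would need a file much larger than RAM, or a way to bypass the cache, which is what the dedicated benchmark tools do.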

I tend to agree for the image backup, and am wondering if file backup is just an image subset.

Windows Explorer shell integration appears very similar between the two backup types. If you know, did File and Folder .mrbak get the same speed as partition .mrimg? That would be some evidence.

Macrium Reflect Free is gone, and I’m still looking for a good replacement for the same use. I never had a paid version, so I can’t speak to File and Folder, differential, or incremental. Duplicati still does OK running my frequent smaller backups. I don’t like to do all-permissible-file backups on anything because it’s an overnight job even on restic, and Duplicati is slower, though not enormously so.

I do like having an offsite backup of the smaller selected data, as it’s better disaster protection. Having multiple backups is a good idea too. I’m not sure how to get all I want in any single tool.

If your use case is on-site-only at Macrium speeds, you might be hard pressed to find even an image backup program that can match that (based on seeing reports of others who have tried).

I’m currently trying Paragon image, and it’s slower and not as nice, but file backup is inherently slower still, because AFAIK file backup programs are all non-filesystem-aware and so suffer from random access.

Some of this is SQLite cache size related, and can be configured around, but it’s a bit awkward.

`CUSTOMSQLITEOPTIONS_DUPLICATI` is the environment variable, and the forum has examples.
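For a feel of what such a setting does at the SQLite level, here is a small sketch using Python's built-in `sqlite3`. It assumes the variable's value is a semicolon-separated list of PRAGMA settings such as `cache_size=-200000`; that is my understanding from forum posts, so check there before relying on it. The `apply_options` helper and the example value are mine, not a recommendation.

```python
import sqlite3


def apply_options(conn, options):
    """Apply semicolon-separated 'name=value' options as SQLite PRAGMAs."""
    for opt in options.split(";"):
        opt = opt.strip()
        if opt:
            conn.execute(f"PRAGMA {opt}")


conn = sqlite3.connect(":memory:")
default_cache = conn.execute("PRAGMA cache_size").fetchone()[0]

# A negative cache_size is a size in KiB, so this asks for roughly 200 MB.
apply_options(conn, "cache_size=-200000")
new_cache = conn.execute("PRAGMA cache_size").fetchone()[0]

if __name__ == "__main__":
    print("default cache_size:", default_cache)
    print("new cache_size:", new_cache)
```

The setting only lasts for the connection it was applied to, which is why it has to be passed in through the environment each time the program starts.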