I might have missed an easy UI for this, but so far the bad news is that every option looks tedious. The good news is that nothing in the process actually demands it: the blocksize is picked up and remembered as the default blocksize. You can see this in a Profiling-level log, where the value goes into the per-job database. Example:

2020-07-07 08:49:38 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteNonQuery]: Starting - ExecuteNonQuery: INSERT INTO "Configuration" ("Key", "Value") VALUES ("blocksize", "125952")

Ordinarily that value would be 102400 (100 KB), the built-in default, rather than 125952 (123 KB), but it looks like recreating the DB sets a new default for the job.
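
If you want to pull that number out of a log without reading it by eye, here is a minimal Python sketch. The helper name `blocksize_from_log_line` and the regex are my own illustration, not anything from Duplicati; it only assumes the INSERT statement has the shape shown in the log line above, and it tolerates either straight or curly quotes.

```python
import re

# Matches the VALUES ("blocksize", "<digits>") part of the INSERT shown above.
# Straight and typographic double quotes are both accepted, because forum
# software sometimes converts one into the other.
_QUOTE = '["\u201c\u201d]'
_BLOCKSIZE_RE = re.compile(
    r"VALUES\s*\(\s*" + _QUOTE + r"blocksize" + _QUOTE
    + r"\s*,\s*" + _QUOTE + r"(\d+)" + _QUOTE
)

def blocksize_from_log_line(line):
    """Return the blocksize in bytes found in a log line, or None if absent."""
    match = _BLOCKSIZE_RE.search(line)
    return int(match.group(1)) if match else None

line = ('ExecuteNonQuery: INSERT INTO "Configuration" ("Key", "Value") '
        'VALUES ("blocksize", "125952")')
size = blocksize_from_log_line(line)
# Print the byte count, the size in whole KB, and whether it is an exact KB multiple.
print(size, size // 1024, size % 1024 == 0)
```

The last line is just the arithmetic behind the two numbers: 123 × 1024 = 125952 and 100 × 1024 = 102400.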

The blocksize is (as always) used to sanity-check the DB against oversized blocks, so there’s also this:

2020-07-07 08:50:24 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.Database.ExtensionMethods-ExecuteScalarInt64]: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM "Block" WHERE "Size" > 125952
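
The same two queries can be run by hand against a copy of the job database. Below is a rough Python sketch using the standard `sqlite3` module. The table and column names ("Configuration", "Key", "Value", "Block", "Size") are taken from the log lines above; the function names are hypothetical, and the demo uses a throwaway in-memory database with only those columns, so it says nothing about the rest of the real schema.

```python
import sqlite3
from pathlib import Path

def open_read_only(db_path):
    """Open an SQLite file read-only so the job database cannot be modified."""
    uri = Path(db_path).resolve().as_uri() + "?mode=ro"
    return sqlite3.connect(uri, uri=True)

def read_blocksize(conn):
    """Return the blocksize stored in the Configuration table, or None."""
    row = conn.execute(
        'SELECT "Value" FROM "Configuration" WHERE "Key" = ?', ("blocksize",)
    ).fetchone()
    return int(row[0]) if row else None

def count_oversized_blocks(conn, blocksize):
    """Mirror the logged sanity check: count blocks larger than blocksize."""
    return conn.execute(
        'SELECT COUNT(*) FROM "Block" WHERE "Size" > ?', (blocksize,)
    ).fetchone()[0]

# Demo on an in-memory stand-in (assumption: only the columns seen in the log).
demo = sqlite3.connect(":memory:")
demo.execute('CREATE TABLE "Configuration" ("Key" TEXT, "Value" TEXT)')
demo.execute('CREATE TABLE "Block" ("Size" INTEGER)')
demo.execute(
    'INSERT INTO "Configuration" ("Key", "Value") VALUES (?, ?)',
    ("blocksize", "125952"),
)
demo.executemany('INSERT INTO "Block" ("Size") VALUES (?)', [(125952,), (4096,)])

blocksize = read_blocksize(demo)
print(blocksize, count_oversized_blocks(demo, blocksize))
```

For a real job you would call `open_read_only()` with the path shown on the Database screen, ideally on a copy of the file and while no backup is running.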

To detail the DB recreate above: I made a backup with --blocksize=123 KB, deleted it, re-typed it without the --blocksize option, used Repair, and ran a backup to continue from where I left off. No problems so far, apart from not being able to find my 123 KB in Commandline or Export As Command-line so that I could re-enter it as an explicit --blocksize. Instead, I tried a different explicit blocksize (the original default) and found my answer:

I just wanted “back to previous”, so I set --blocksize to 123 KB. Everything works, and I now see a --blocksize option.

So your choices in roughly increasing order of “tedious” here seem to be:

-

Don’t bother explicitly setting the blocksize. Let it be detected and be the new default for the backup.

-

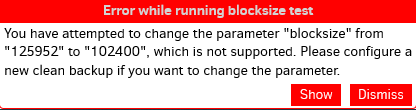

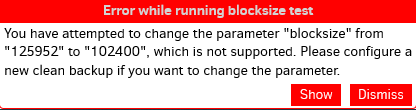

Rely on new default initially, then try setting a different blocksize, and let error message give default, then set default explicitly just to get the blocksize on the record in case you export job configuration.

-

Use the Database screen to find its path, then look at Configuration table to find the blocksize value. DB Browser for SQLite is one example of a DB browser, but there are other such browsers around.

-

Unzip remote file, and read its manifest text file. This is quite simple if the remote is not encrypted, however if it’s decrypted you can use AES Crypt or Duplicati’s SharpAESCrypt.exe (CLI) to decrypt.

-

Use Profiling log –log-file and –log-file-log-level=profiling, or About → Show log → Live → Profiling which unfortunately gets big fast unless you can find a short operation that shows you needed data.
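
For the unzip-and-read-the-manifest route, here is a small Python sketch. It rests on two assumptions you should check against your own files: that the volume is an unencrypted (or already decrypted) zip containing an entry named `manifest`, and that the manifest is JSON with a `Blocksize` field. The function name is made up for illustration, and the demo builds a fake volume in memory rather than touching a real backup.

```python
import io
import json
import zipfile

def blocksize_from_manifest(archive):
    """Return the Blocksize value from a zip volume's manifest, or None.

    `archive` may be a file path or a binary file-like object.
    Assumes an entry named "manifest" holding JSON (not verified here).
    """
    with zipfile.ZipFile(archive) as volume:
        raw = volume.read("manifest")
    # utf-8-sig also strips a byte-order mark if one happens to be present.
    manifest = json.loads(raw.decode("utf-8-sig"))
    return manifest.get("Blocksize")

# Demo: build a stand-in volume in memory with a hypothetical manifest.
fake_volume = io.BytesIO()
with zipfile.ZipFile(fake_volume, "w") as volume:
    volume.writestr("manifest", json.dumps({"Blocksize": 125952}))
fake_volume.seek(0)

print(blocksize_from_manifest(fake_volume))
```

If the call raises `KeyError` or `zipfile.BadZipFile`, the file is probably still encrypted or laid out differently from what this sketch assumes.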

The issue “Unable to restore backup: blocksize mismatch in manifest #2323” and some others talk about automatic detection. That issue is closed, so I hope everything goes as smoothly for you as it seemed to go for me.