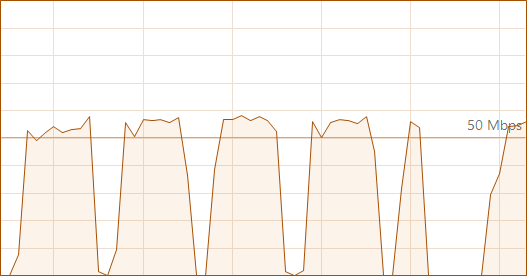

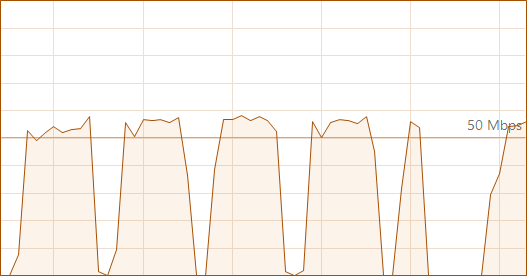

Testing a small video recovery (5GB) and I see this peculiar download bandwidth cycle, from full capacity to zero…

Is this normal?

Possibly. It doesn’t have concurrent downloads yet. Concurrent uploads are in Canary and Experimental. Jottacloud got concurrent downloads but it’s sort of a special case, and it’s also not in a Beta release yet.

You can view the live log at About → Show logs → Live → Information to see whether the network activity matches file actions.

This was a single file… not a series of files, but it seems to be uploaded in pieces as opposed to a steady flow.

v2.0.4.22_canary_2019-06-30

Block-based storage engine is the simplified version of how files are broken into blocks for backup.

How the backup process works is a more technical explanation.

Overview from Duplicati 2 User’s Manual:

Duplicati is not:

- A file synchronization program.

Duplicati is a block based backup solution. Files are split up in small chunks of data (blocks), which are optionally encrypted and compressed before they are sent to the backup location. In backup location, Duplicati uploads not original files but files that contain blocks of original files and other necessary data that allows Duplicati to restore stored files to its original form by restoration process. This block based backup system allows features like file versioning and deduplication. If you need to be able to access your files directly from the backup location, you will need file synchronization software, not block based backup software like Duplicati.

That explanation is helpful and accounts for the cyclical bandwidth I observed… still, it comes at an apparent upload penalty of roughly 35% unused bandwidth.

Perhaps “block #2” isn’t encrypted until “block #1” upload is complete (surely this is not the case)… either that or the time to encrypt exceeds the time to upload, such that “block #1” upload completes more quickly than “block #2” encryption.

Hopefully there is a means to narrow the gap between uploaded blocks.

Thank you.

Did we switch from downloads to uploads? Uploads are supposed to be concurrent (if you install the right pre-Beta Canary or Experimental release), so in theory they should do better at keeping the network full. Before that there was (and still will be) some advance preparation, configurable by --asynchronous-upload-limit, which does:

--asynchronous-upload-limit = 4

When performing asynchronous uploads, Duplicati will create volumes that can be uploaded. To prevent Duplicati from generating too many volumes, this option limits the number of pending uploads. Set to zero to disable the limit.
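The idea of capping pending uploads can be illustrated with a bounded producer/consumer queue. This is only a minimal sketch of the general technique, not Duplicati's actual code: `run_pipeline`, the `volume-N` names, and the list standing in for the remote upload are all hypothetical.

```python
import queue
import threading


def run_pipeline(volume_count, pending_limit):
    """Produce volumes while at most `pending_limit` of them wait for upload.

    Returns (largest observed queue size, list of "uploaded" volumes).
    """
    # For queue.Queue, maxsize=0 means unbounded, which mirrors
    # "set to zero to disable the limit" in the option text above.
    pending = queue.Queue(maxsize=pending_limit)
    uploaded = []

    def uploader():
        while True:
            volume = pending.get()
            if volume is None:  # sentinel: the producer is finished
                break
            uploaded.append(volume)  # stand-in for a real remote upload

    worker = threading.Thread(target=uploader)
    worker.start()

    max_pending = 0
    for i in range(volume_count):
        # put() blocks while the queue is full, so the producer cannot
        # run arbitrarily far ahead of the uploader.
        pending.put(f"volume-{i}")
        max_pending = max(max_pending, pending.qsize())

    pending.put(None)
    worker.join()
    return max_pending, uploaded
```

Calling `run_pipeline(20, 4)` prepares 20 hypothetical volumes while never letting more than 4 sit in the queue at once. The uploader always has something ready as long as preparation keeps up, which is what helps keep the network busy.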

Did we switch from downloads to uploads?

No, this is downloads… I got things reversed reading your note on upload encryption. So it's the same thing: blocks download with interruptions between them, presumably to decrypt? I'm not sure why the bandwidth is wasted, which goes back to my OP.

So I can infer that Duplicati is unable to download blocks without interruption as the bandwidth record in the OP illustrates?

Yes, it takes some time to process the downloaded block. Decryption, rehydration of deduplicated data, etc. So this is “normal.” It may be improved in the future if a concurrent download feature is implemented.

In general any backup program that uses deduplication will take longer to do the restore. That’s one of the tradeoffs for deduplication (trading processing time for space savings).
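As a rough model of why non-overlapped processing leaves bandwidth idle: if each volume is transferred and then processed before the next request starts, the link is busy only for the transfer part of each cycle. The sketch below is simple arithmetic under that assumption; the function names and the example numbers are hypothetical, not measurements from this thread.

```python
def link_utilization(transfer_s, idle_s):
    """Fraction of each cycle the link is busy when transfer and processing do not overlap."""
    if transfer_s < 0 or idle_s < 0:
        raise ValueError("durations must be non-negative")
    total = transfer_s + idle_s
    if total == 0:
        raise ValueError("cycle length must be positive")
    return transfer_s / total


def idle_time_for(transfer_s, idle_fraction):
    """Idle seconds per cycle that would produce the given idle fraction."""
    if not 0 <= idle_fraction < 1:
        raise ValueError("idle_fraction must be in [0, 1)")
    return transfer_s * idle_fraction / (1 - idle_fraction)
```

For example, `idle_time_for(10.0, 0.35)` gives the per-volume pause that would leave a link 35% idle if each transfer took 10 seconds. Comparing that figure with the gaps actually seen in a profiling log shows whether processing time alone could explain the dips.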

Out of curiosity, have you changed any settings in Duplicati, like deduplication block size (default 100KB) or remote volume size (default 50MB)? Also, what are you restoring from? If it's over the internet, what is your max circuit download speed?

Non-concurrent means there will be some amount of gap. In this testing, the gap is less than 1 second:

2019-09-11 09:14:24 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.BackendManager-RemoteOperationGet]: Starting - RemoteOperationGet

2019-09-11 09:14:24 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b7a1971a459e04113bebec45f0501855e.dblock.zip.aes (49.98 MB)

2019-09-11 09:14:35 -04 - [Profiling-Duplicati.Library.Main.BackendManager-DownloadSpeed]: Downloaded and decrypted 49.98 MB in 00:00:10.6568414, 4.69 MB/s

2019-09-11 09:14:35 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-b7a1971a459e04113bebec45f0501855e.dblock.zip.aes (49.98 MB)

2019-09-11 09:14:35 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.BackendManager-RemoteOperationGet]: RemoteOperationGet took 0:00:00:10.656

2019-09-11 09:14:35 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.BackendManager-RemoteOperationGet]: Starting - RemoteOperationGet

2019-09-11 09:14:35 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-bfee093d669f4478bb2074841ee0ec4fb.dblock.zip.aes (49.96 MB)

2019-09-11 09:14:44 -04 - [Profiling-Duplicati.Library.Main.BackendManager-DownloadSpeed]: Downloaded and decrypted 49.96 MB in 00:00:09.4144392, 5.31 MB/s

2019-09-11 09:14:44 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-bfee093d669f4478bb2074841ee0ec4fb.dblock.zip.aes (49.96 MB)

2019-09-11 09:14:44 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.BackendManager-RemoteOperationGet]: RemoteOperationGet took 0:00:00:09.414

2019-09-11 09:14:44 -04 - [Profiling-Timer.Begin-Duplicati.Library.Main.BackendManager-RemoteOperationGet]: Starting - RemoteOperationGet

2019-09-11 09:14:44 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b928f5836df494d07a0b0be7204747919.dblock.zip.aes (32.78 MB)

2019-09-11 09:14:51 -04 - [Profiling-Duplicati.Library.Main.BackendManager-DownloadSpeed]: Downloaded and decrypted 32.78 MB in 00:00:06.9104868, 4.74 MB/s

2019-09-11 09:14:51 -04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Completed: duplicati-b928f5836df494d07a0b0be7204747919.dblock.zip.aes (32.78 MB)

2019-09-11 09:14:51 -04 - [Profiling-Timer.Finished-Duplicati.Library.Main.BackendManager-RemoteOperationGet]: RemoteOperationGet took 0:00:00:06.911

You can run your own --log-file at --log-file-log-level=profiling to see if you can catch a larger gap between requests. Also keep in mind that the service (in this case it's Backblaze B2) might need some time to respond. Getting high-resolution timing of the gaps at the network level could be done by packet analysis, e.g. with Wireshark.
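To scan a longer log for gaps without reading it by eye, something like the following could work. It is a minimal sketch that assumes the log lines look exactly like the `Backend event: Get - Started/Completed` lines quoted above; `download_gaps` is a hypothetical helper, and because these timestamps have one-second resolution, gaps under a second show up as 0.

```python
import re
from datetime import datetime

# Matches the "Backend event: Get - Started/Completed" lines shown above.
# The "\S+" after the timestamp skips the UTC offset field (e.g. "-04").
EVENT = re.compile(
    r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) \S+ - "
    r"\[Information-Duplicati\.Library\.Main\.BasicResults-BackendEvent\]: "
    r"Backend event: Get - (Started|Completed): (\S+)"
)


def download_gaps(lines):
    """Yield (file_name, gap_seconds) for each Get that starts after an earlier Get completed."""
    last_completed = None
    for line in lines:
        match = EVENT.match(line)
        if not match:
            continue  # profiling timers and other events are ignored
        stamp = datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S")
        state, name = match.group(2), match.group(3)
        if state == "Completed":
            last_completed = stamp
        elif last_completed is not None:
            yield name, (stamp - last_completed).total_seconds()
```

Passing an open log file (which iterates line by line) to `download_gaps` and filtering for gaps above a chosen threshold would point at the volumes where the pause was longest.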

I’m not sure how to close the gap unless and until concurrent download is implemented. That should also help with high-speed, high-latency (or even normal-latency) links, where a single connection has inherent speed limits due to speed-of-light limitations, acknowledgments, and the amount of data allowed to be “in-transit” at one time: