Some days ago, one of my three daily jobs (backups to local drives) finished with an error. I tried a repair, and later a delete and rebuild of the database. Now the backup finishes successfully but still shows this error:

```
2025-04-08 13:33:39 +02 - [Error-Duplicati.Library.Main.Operation.TestHandler-FailedToProcessFile]: Failed to process file duplicati-i6bc89956eb164f21891de31ed4500973.dindex.zip.aes CryptographicException: Invalid header value: 2E-65-6E-63-6F
2025-04-08 13:33:41 +02 - [Error-Duplicati.Library.Main.Operation.TestHandler-Test results]: Verified 10 remote files with 1 problem(s)
```

The error has persisted for 5 runs, and the database was recreated 2 times. Any help appreciated.

What OS? Got any tools to look at the start of that file? You can compare to others.

Linux has hexdump or od. On Windows, sometimes notepad can sort of suffice…

AES Crypt Stream Format

says the file should start with the three letters AES, but that’s not what yours shows.
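For anyone without hexdump at hand, here is a minimal Python sketch (the helper names are made up for illustration) that prints the first five bytes in the same hyphenated hex style as Duplicati's error and checks for the `AES` magic. Decoded as ASCII, the reported header 2E-65-6E-63-6F is `.enco`, which already suggests plain text rather than ciphertext.

```python
import sys

# Per the AES Crypt stream format, a file starts with the ASCII bytes "AES",
# followed by a version byte and a reserved byte (5 header bytes in total).
AES_MAGIC = b"AES"


def header_hex(path, n=5):
    """Return the first n bytes as hyphenated hex, e.g. '41-45-53-02-00'."""
    with open(path, "rb") as f:
        head = f.read(n)
    return "-".join(f"{b:02X}" for b in head)


def looks_like_aescrypt(path):
    """True if the file begins with the AES Crypt magic bytes."""
    with open(path, "rb") as f:
        return f.read(len(AES_MAGIC)) == AES_MAGIC


if __name__ == "__main__":
    # Usage: python check_header.py <file> [<file> ...]
    for p in sys.argv[1:]:
        verdict = "AES Crypt" if looks_like_aescrypt(p) else "NOT AES Crypt"
        print(p, header_hex(p), verdict)
```

Running it over several `.aes` files in the destination makes the odd one out easy to spot.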

You can also try using the AES Crypt (GUI) or Duplicati (CLI) tool to decrypt that file.

It’s on Win10. Source and Destination on one machine, two drives.

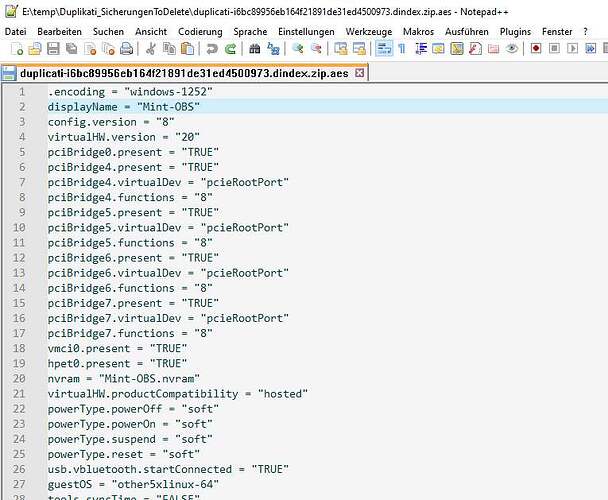

The file is unencrypted (viewed in Notepad++).

The content might belong to VMware VM running on the Win10 host.

Other .aes files are encrypted.

How might it have gotten in? That file is doubly wrong: it is not encrypted, and decrypting a proper one should give a .zip.
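A quick way to test whether a decrypted volume really is a .zip is Python's standard `zipfile` module. This sketch (function name hypothetical) checks for a zip structure and then verifies the CRC of every member with `testzip()`.

```python
import zipfile


def check_decrypted_volume(path):
    """Return (is_zip, first_bad_member) for a decrypted volume.

    first_bad_member is None when the file is not a zip at all, or when
    every member's CRC checks out.
    """
    if not zipfile.is_zipfile(path):
        return False, None
    with zipfile.ZipFile(path) as zf:
        # testzip() reads every member and returns the first corrupt name, or None.
        return True, zf.testzip()
```

A healthy dindex should give `(True, None)`; a text file like the one seen in Notepad++ gives `(False, None)`.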

Does the date on that file give you any clue as to its source, e.g. does it match a backup?

If you want to get kind of technical, the database might have records, especially if the file isn’t older than 30 days. You can (maybe) browse it yourself with DB Browser for SQLite or similar, or use Create bug report, put the result somewhere, and post a link. If the file is older, the info has aged off.
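For a first look at what a job database contains without risking changes to it, a small sketch like this opens it read-only and counts the rows in each table. The helper name is made up; it only uses generic SQLite catalog queries, nothing Duplicati-specific.

```python
import sqlite3
from pathlib import Path


def table_row_counts(db_path):
    """Open an SQLite database read-only and return {table name: row count}."""
    # A file: URI with mode=ro prevents accidental writes; as_uri() also
    # percent-escapes spaces, e.g. in "Sicherung KNQHBMKLMC 20250405100443.sqlite".
    uri = Path(db_path).resolve().as_uri() + "?mode=ro"
    con = sqlite3.connect(uri, uri=True)
    try:
        names = [row[0] for row in con.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
        return {name: con.execute(f'SELECT COUNT(*) FROM "{name}"').fetchone()[0]
                for name in names}
    finally:
        con.close()
```

Working on a copy of the `.sqlite` file rather than the live one is still the safer habit.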

Do you recall what error it gave, or are you saying it was the same error on same file?

Deleting and rebuilding the database would have wiped the prior history, and it would have gotten the idea that the file is supposed to exist.

Since it’s not at all a useful Duplicati index file, if all else fails, maybe move it aside and recreate the database again, but it would be nice to know how it got corrupted that way.

Not sure how this can happen, but I am confident that Duplicati will never write a file with that content. Can you perhaps look at the timestamps to see if it looks like the file has been modified/overwritten after Duplicati looked at it?
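To compare the file's timestamps with the backup run times, it helps to see them in UTC. A minimal sketch (hypothetical helper) using `os.stat`; it reports only modification and access time, since creation time is platform dependent.

```python
import os
from datetime import datetime, timezone


def _utc(ts):
    """Format a POSIX timestamp as an ISO 8601 UTC string."""
    return datetime.fromtimestamp(ts, tz=timezone.utc).isoformat(timespec="seconds")


def file_times_utc(path):
    """Return size plus last-modified and last-accessed times in UTC."""
    st = os.stat(path)
    return {
        "size": st.st_size,
        "modified": _utc(st.st_mtime),
        "accessed": _utc(st.st_atime),
    }
```

The log above is in +02, so 13:33 local corresponds to 11:33 UTC.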

Oddly, there should also be a complaint from Duplicati that the remote file size does not match the expected file size. If the two sizes are the same, I would assume that the contents of the file are truncated or become binary at some point.

In any case, I would guess that something on the storage is causing problems.

Maybe there was such a complaint in the past, but “recreated 2 times” probably lost the original information. To test this, I used Notepad to overwrite a dindex file's contents with the text “overwrite”, ran Recreate, and ignored a message; afterwards the Remotevolume table thinks the proper size is 9 bytes, because that’s what it had seen.
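The size check can also be done by hand against the job database. This sketch assumes the `Remotevolume` table has `Name`, `Size`, `Hash` and `State` columns (verify that in DB Browser first). As the Notepad test shows, after a Recreate the recorded size simply reflects whatever file was present, so a match proves little on its own.

```python
import os
import sqlite3
from pathlib import Path


def compare_with_remotevolume(db_path, volume_path):
    """Compare a volume file on disk with the size recorded in the job database.

    ASSUMPTION: Remotevolume has Name, Size, Hash and State columns.
    """
    uri = Path(db_path).resolve().as_uri() + "?mode=ro"  # read-only access
    con = sqlite3.connect(uri, uri=True)
    try:
        row = con.execute(
            'SELECT "Size", "Hash", "State" FROM "Remotevolume" WHERE "Name" = ?',
            (os.path.basename(volume_path),),
        ).fetchone()
    finally:
        con.close()
    recorded, hash_, state = row if row else (None, None, None)
    actual = os.path.getsize(volume_path)
    return {"recorded": recorded, "actual": actual, "hash": hash_,
            "state": state, "match": recorded == actual}
```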

Sorry for coming back so late with a reply. I will certainly try to assist as much as I can.

My setup is 3 daily tasks saving data to a second local HDD on the same machine. Later in the day, 3 scheduled rclone tasks sync the Duplicati data to pCloud Drive. I do this because direct backup to pCloud was not reliable.

Unfortunately, I only looked at the error one day after it occurred, so a sync of the corrupted data to pCloud had already run.

About the error:

I’m sure the original error was about encryption as well. It applied to the same file that now has unencrypted content. I still have a copy of that file. Its timestamp and file size are the same, but it is encrypted.

I tried to decrypt it:

```
c:\Program Files\Duplicati 2>Duplicati.CommandLine.SharpAESCrypt.exe d xxxxxxxxxxxxxx e:\temp\duplicati-i6bc89956eb164f21891de31ed4500973.dindex.zip.aes-bad e:\temp\duplicati.badfile.txt
Error: Invalid header value: 00-00-00-00-00
```

Database files currently available:

| Name | Size (bytes) | Date (DD.MM.YYYY) | Time | Attributes |
|---|---:|---|---|---|
| `control_dir_v2\` | `<DIR>` | 13.04.2025 | 11:19 | ---- |
| Duplicati-server.sqlite | 339,968 | 13.04.2025 | 13:33 | -a-- |
| KNQHBMKLMC.sqlite | 778,125,312 | 13.04.2025 | 13:33 | -a-- |
| MBMXFKQZSU.sqlite | 635,617,280 | 13.04.2025 | 13:20 | -a-- |
| XBHOUZBAIL.sqlite | 312,029,184 | 13.04.2025 | 13:10 | -a-- |
| Sicherung Duplicati-server 20250405100348.sqlite | 339,968 | 05.04.2025 | 10:03 | -a-- |
| machineid.txt | 247 | 05.04.2025 | 10:03 | -a-- |
| installation.txt | 283 | 05.04.2025 | 10:03 | -a-- |
| Duplicati-server20250405-100348.bak | 339,968 | 05.04.2025 | 10:02 | -a-- |
| Sicherung KNQHBMKLMC 20250405100443.sqlite | 737,644,544 | 05.04.2025 | 00:24 | -a-- |
| Sicherung MBMXFKQZSU 20250405012000.sqlite | 635,617,280 | 04.04.2025 | 13:20 | -a-- |
| Sicherung XBHOUZBAIL 20250405011137.sqlite | 312,029,184 | 04.04.2025 | 13:10 | -a-- |
| UXTWVWRFVA.sqlite | 265,793,536 | 07.12.2023 | 05:30 | -a-- |
| YDQOMYUYRG.sqlite | 167,936 | 07.12.2023 | 05:15 | -a-- |
| KMHSSXJHJH.sqlite | 627,335,168 | 07.12.2023 | 05:00 | -a-- |
| dbconfig.json | 2,715 | 08.03.2022 | 09:02 | -a-- |

Sicherung KNQHBMKLMC 20250405100443.sqlite seems to be related to the corrupted database. I opened it with DB Browser. The integrity check is OK; I have no clue what else I can check.

However, this seems to be a backup made after I updated to the latest Duplicati version, hoping to fix my problem.

But I have a Veeam backup of the machine from 02.04.2025 containing this file set:

| Name | Size (bytes) | Date (DD.MM.YYYY) | Time | Attributes |
|---|---:|---|---|---|
| `control_dir_v2\` | `<DIR>` | 13.04.2025 | 14:14 | ---- |
| KNQHBMKLMC.sqlite | 814,891,008 | 02.04.2025 | 13:34 | -a-- |
| MBMXFKQZSU.sqlite | 635,617,280 | 02.04.2025 | 13:20 | -a-- |
| KMHSSXJHJH.sqlite | 627,335,168 | 07.12.2023 | 05:00 | -a-- |
| XBHOUZBAIL.sqlite | 312,029,184 | 02.04.2025 | 13:10 | -a-- |
| UXTWVWRFVA.sqlite | 265,793,536 | 07.12.2023 | 05:30 | -a-- |
| Duplicati-server.sqlite | 339,968 | 02.04.2025 | 13:34 | -a-- |
| YDQOMYUYRG.sqlite | 167,936 | 07.12.2023 | 05:15 | -a-- |
| dbconfig.json | 2,715 | 08.03.2022 | 09:02 | -a-- |

This should contain a database from before the first error. If you are willing to investigate this, let me know; I’ll gladly assist you.

Is this the WebDAV version? We have added pCloud in the canary builds, and if this is not reliable, I would love to hear about it.

Slightly different header there, now it is all zeroes? Is the file perhaps only filled with zeroes?
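To answer that without a hex editor, this sketch (hypothetical helper) scans the file in chunks and returns the offset of the first non-zero byte, or None when the whole file is zeroes. A non-None offset also shows where real data starts if only the front of the file was zeroed.

```python
def first_nonzero_offset(path, chunk_size=1 << 20):
    """Return the offset of the first non-zero byte, or None if the file is all zeroes."""
    offset = 0
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            stripped = chunk.lstrip(b"\x00")
            if stripped:
                # Bytes removed by lstrip are the leading zeroes in this chunk.
                return offset + len(chunk) - len(stripped)
            offset += len(chunk)
    return None  # empty file, or nothing but 0x00 bytes
```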

The timestamp indicates that it is from before the Duplicati update. Does the UTC timestamp correspond to the time the backup ran? If so, the correct file is KNQHBMKLMC.sqlite, but as @ts678 mentions, a “Recreate” will delete the database and recreate it.

The recreated file does not have the log we are looking for. In the database, look at the table “RemoteOperation”; there you can see each operation that has been performed against the remote storage. What we are looking for is an entry where the operation is “put” and the filename matches the broken filename.
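The same lookup can be scripted. This is a sketch under assumptions: it guesses the `RemoteOperation` columns as `ID`, `Timestamp`, `Operation`, `Path` and `Data`, and that `Timestamp` holds Unix seconds; check the actual schema before relying on it. It opens the database read-only and returns every row whose path equals, or whose data mentions, the file.

```python
import sqlite3
from datetime import datetime, timezone
from pathlib import Path


def remote_operations_for(db_path, filename):
    """Return RemoteOperation rows whose Path equals, or Data mentions, filename.

    ASSUMPTIONS: columns ID, Timestamp, Operation, Path, Data;
    Timestamp is in Unix epoch seconds.
    """
    uri = Path(db_path).resolve().as_uri() + "?mode=ro"  # never write to the DB
    con = sqlite3.connect(uri, uri=True)
    try:
        rows = con.execute(
            'SELECT "ID", "Timestamp", "Operation", "Path", "Data" FROM "RemoteOperation" '
            'WHERE "Path" = ? OR instr(CAST("Data" AS TEXT), ?) > 0 ORDER BY "ID"',
            (filename, filename),
        ).fetchall()
    finally:
        con.close()
    return [
        {
            "id": op_id,
            "utc": datetime.fromtimestamp(ts, tz=timezone.utc).isoformat(timespec="seconds"),
            "operation": operation,
            "path": path,
            "data": data,
        }
        for op_id, ts, operation, path, data in rows
    ]
```

Filtering the result with `r["operation"] == "put"` leaves the upload entries.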

If you find that one, you can note the size and hash value (SHA256).
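To get comparable values for the file on disk, a sketch like this computes its size and SHA-256. It outputs the digest both as hex and as Base64, because I am not certain which encoding the database uses (Base64 is my assumption); compare against whichever form the recorded hash is in.

```python
import base64
import hashlib
import os


def size_and_sha256(path, chunk_size=1 << 20):
    """Return the file size and its SHA-256 digest as both hex and Base64."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):  # stream, so large volumes are fine
            h.update(chunk)
    digest = h.digest()
    return {
        "size": os.path.getsize(path),
        "sha256_hex": digest.hex(),
        "sha256_base64": base64.b64encode(digest).decode("ascii"),
    }
```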

We can then compare the values with the defective file to see whether it was broken before or after upload. Also, any subsequent "list" operations that mention the file are valuable for seeing whether its size changes at any time.
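For the list operations, here is a rough sketch under a loud assumption: that each list row's `Data` is a JSON array of objects with `Name` and `Size` keys. Feed it (timestamp, data) pairs, oldest first, for example taken from the `RemoteOperation` rows whose operation is “list”. Both function names are hypothetical.

```python
import json


def sizes_seen_in_listings(list_rows, filename):
    """Collect (timestamp, size) for every listing that contains filename.

    ASSUMPTION: data is a JSON array of objects with "Name" and "Size" keys.
    list_rows: iterable of (timestamp, data) pairs, oldest first.
    """
    history = []
    for ts, data in list_rows:
        if isinstance(data, bytes):
            data = data.decode("utf-8")
        for entry in json.loads(data or "[]"):  # NULL data counts as empty listing
            if entry.get("Name") == filename:
                history.append((ts, entry.get("Size")))
    return history


def size_changes(history):
    """Keep only the entries where the size differs from the previous listing."""
    changes, last = [], object()  # sentinel never equals a real size
    for ts, size in history:
        if size != last:
            changes.append((ts, size))
            last = size
    return changes
```

If `size_changes` returns more than one entry, the file changed size on the storage between two listings.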

Bit of detective work unfortunately…

Thanks for the time you are spending on this.


No, I'm not using the WebDAV version. I ran into problems about 2 years ago and learned that pCloud WebDAV is not officially supported, so I switched to the two-step solution with a local backup destination for Duplicati. It has been working fine for a long time.

Sorry, but I could not find the requested file in the database, so I think this can be closed here. After deleting the spoiled .aes file, the backup worked for the last few days, until today. But more about that severe, reproducible problem in a new topic.