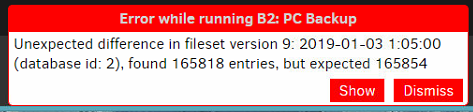

Sigh… so after I cancelled the Compact Now operation, last night’s scheduled backup ran automatically and completed successfully. But then, after running a different backup set (and having to force-cancel and restart Duplicati to get it to unlock the database), when I run the original B2 backup job again I get the dreaded “Unexpected difference in fileset” error:

Duplicati.Library.Interface.UserInformationException: Unexpected difference in fileset version 11: 2018-09-21 2:05:00 (database id: 397), found 163435 entries, but expected 163440

(I’m on the 2.0.4.12 canary on this machine - the auto-updater doesn’t seem to be working at the moment).

Edit: urghh… I’ve tried running Repair on the database, only to be told:

Destination and database are synchronized, not making any changes

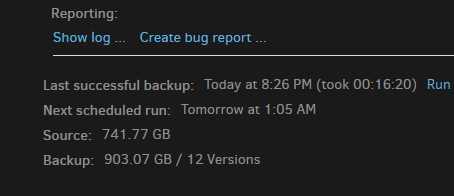

Meanwhile, running the job fails instantly with the same error message. I don’t want to have to do a Recreate on this database, as the total backup set is over 800GB. Any suggestions?

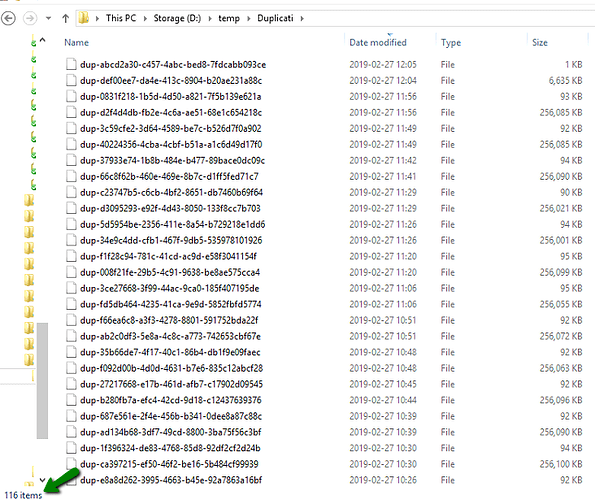

Edit 2: following the directions from an old thread about this error, I ran the “delete” command line tool to delete Version 11. Now when I try to run the backup, I get the same error, but with different mismatch numbers and pointing to Version 10. I’m running delete on that one too, now. How many versions will I need to delete??

Edit 3: Deleted version 10, now it’s reporting an error with version 9. I give up for now.

Edit 4: I decided to go for broke and run a recreate. I had reasonable luck with this on my smaller backup job when I got this error previously. Crossing my fingers.

Edit 5: Ugh… the recreate is still running (over 7 hours later) and seemingly downloading every dblock file from B2… this is gonna cost me $10 in extra bandwidth fees :-/

Edit 6 (and final): The recreate finished overnight; luckily it only needed to download 17 or so more gigs, so nowhere near the whole 800 GB fileset. It had me worried there for a bit.