I have been using Duplicati on a Windows VM for quite some time. Since I now use that VM only for Duplicati, I want to move to Duplicati in a Docker container on a Linux host (Unraid). Of course I want to keep the existing backups, so I exported the config files and imported them into the Duplicati container. When I run the imported backups, I get the following error:

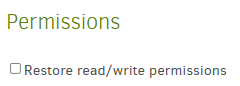

“The backup contains files that belong to another operating system. Proceeding with a backup would cause the database to contain paths from two different operation systems, which is not supported. To proceed without losing remote data, delete all filesets and make sure the --no-auto-compact option is set, then run the backup again to re-use the existing data on the remote store.”
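As far as I understand the message, the issue is the path format itself: the existing backup recorded Windows-style paths, while the container would add Linux-style paths for the same files. A small Python sketch of the difference (the example paths are made up, and this only illustrates the mismatch; I am not claiming this is how Duplicati checks it internally):

```python
from pathlib import PurePosixPath, PureWindowsPath

# Hypothetical example paths, not taken from my actual backup.
windows_source = PureWindowsPath(r"C:\Users\me\Documents\report.txt")
linux_source = PurePosixPath("/source/Documents/report.txt")


def describe(path):
    """Return the root anchor and the remaining components of a path."""
    return path.anchor, path.parts[1:]


win_anchor, win_parts = describe(windows_source)
nix_anchor, nix_parts = describe(linux_source)

# The same file ends up with a different root and a different separator,
# so the two path strings can never be equal.
print(win_anchor, win_parts)
print(nix_anchor, nix_parts)
```

So even if the data behind both paths is identical, the old and new entries would look like two unrelated files.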

I have read in other posts (some of them many years old) that Duplicati does not handle a change of operating system well.

Has a solution for this problem become available in the meantime?

EDIT:

I have now carried out another test. If I run Duplicati on the new server in a (completely new) Windows VM instead of a Docker container, the backup works fine: it simply continues with the old settings and the old remote data.

So the change of OS appears to be the cause.