Welcome to the forum @besweeet

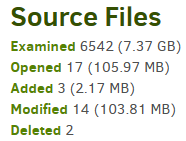

Seen how? On an initial backup or an update? If an update, how many files changed? Log example:

Are these changing much (a variation of the question above)? If not, the time difference is probably in the scan.

`usn-policy` can avoid the ordinary folder scan, but since scans on an SSD are usually fast, you’d have to test whether it helps, if you’d like to try it.
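To show why a scan costs time even when little has changed: it has to visit every file and compare it with what was recorded last time. Below is a minimal Python sketch of such a metadata-only change check, assuming that comparing modification time and size against the previous run is a fair stand-in for the ordinary scan. `take_snapshot` and `changed_files` are hypothetical names, not Duplicati code. The USN approach avoids this full walk by asking the NTFS change journal which files changed instead.

```python
import os
from typing import Dict, List, Tuple

# Hypothetical record per file from the previous backup: (mtime in ns, size in bytes).
Snapshot = Dict[str, Tuple[int, int]]


def take_snapshot(root: str) -> Snapshot:
    """Walk every folder under root and record each file's mtime and size."""
    snap: Snapshot = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            st = os.stat(path)
            snap[path] = (st.st_mtime_ns, st.st_size)
    return snap


def changed_files(previous: Snapshot, current: Snapshot) -> List[str]:
    """Files that are new, or whose mtime or size differs from the previous snapshot."""
    return sorted(p for p, meta in current.items() if previous.get(p) != meta)
```

Note that `take_snapshot` touches every file whether or not it changed, so its cost grows with the file count, not with the amount of change.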

What OS is this, and does it have any performance tools for checking typical performance metrics?

How large is large? Is it an initial backup or an update? Try to set `blocksize` so the backup uses fewer than about a million blocks, because database operations slow down dramatically with too many blocks. The default is good for about 100 GB.
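The arithmetic behind that advice, as a rough Python sketch. It assumes the 100 KB default blocksize that the 100 GB figure implies (100 GB / 100 KB is about a million blocks), counts source size in decimal gigabytes, and ignores deduplication and partial last blocks. The function names are hypothetical.

```python
KB = 1024        # blocksize units (assumed KiB)
GB = 10 ** 9     # source size in decimal gigabytes


def _ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, exact for large sizes."""
    return -(-a // b)


def block_count(source_bytes: int, blocksize: int) -> int:
    """Approximate number of blocks if the whole source is cut into blocksize chunks."""
    return _ceil_div(source_bytes, blocksize)


def suggest_blocksize(source_bytes: int, max_blocks: int = 1_000_000,
                      base: int = 100 * KB) -> int:
    """Smallest multiple of base that keeps the block count at or under max_blocks."""
    multiplier = max(1, _ceil_div(source_bytes, max_blocks * base))
    return multiplier * base
```

For example, a 2 TB source gives `suggest_blocksize(2000 * GB) == 2000 * KB`, so something around 2 MB. Keep in mind that the blocksize can't be changed once a backup has been created, so this has to be decided up front.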

How many cores does the CPU have? There are two Advanced options to set concurrency that you could test.

There are some I/O and CPU priority controls, but I don’t know if you’d want to prioritize your backup.

During a backup, Duplicati looks for files that changed (by folder scan or USN), reads those files to find what changed in them, then accumulates the changes for upload in remote volumes (50 MB by default).
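A simplified Python sketch of the read-then-accumulate part of that pipeline. It assumes fixed-size blocks hashed with SHA-256, skips blocks whose hash is already known, and starts a new volume once the current one reaches the volume size (50 MB here, the default mentioned above; in Duplicati that is the remote volume size, `dblock-size`). Compression, encryption, and the actual upload are left out, and `split_blocks` and `fill_volumes` are hypothetical names.

```python
import hashlib
from typing import Iterable, Iterator, List, Set

BLOCKSIZE = 100 * 1024        # assumed block size in bytes
VOLUME_SIZE = 50 * 1024 ** 2  # default remote volume size from the text (50 MB)


def split_blocks(data: bytes, blocksize: int = BLOCKSIZE) -> Iterator[bytes]:
    """Cut a file's contents into fixed-size blocks (the last one may be shorter)."""
    for i in range(0, len(data), blocksize):
        yield data[i:i + blocksize]


def fill_volumes(files: Iterable[bytes], known: Set[str],
                 blocksize: int = BLOCKSIZE,
                 volume_size: int = VOLUME_SIZE) -> List[List[bytes]]:
    """Hash each block, skip ones already stored, and group new blocks into volumes."""
    volumes: List[List[bytes]] = []
    current: List[bytes] = []
    current_bytes = 0
    for data in files:
        for block in split_blocks(data, blocksize):
            digest = hashlib.sha256(block).hexdigest()
            if digest in known:
                continue  # block already in the backup: nothing new to upload
            known.add(digest)
            current.append(block)
            current_bytes += len(block)
            if current_bytes >= volume_size:
                volumes.append(current)  # a full volume: this is where an upload would begin
                current, current_bytes = [], 0
    if current:
        volumes.append(current)  # final, partially filled volume
    return volumes
```

The point of the sketch is that little changed data means few new blocks, so volumes fill slowly and uploads start late, even if reading is fast.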

The GUI shows what’s being read. If a path reaches 100% and sits there, Duplicati is probably searching for the next changed file. When a volume fills, it gets uploaded, and I think the first upload is what starts the upload speed display in the status bar. Once uploads have begun, the average speed declines until another volume fills and gets uploaded…
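I don’t know exactly how the status bar computes its number. This Python sketch assumes a plain cumulative average (bytes uploaded since the first upload began, divided by elapsed time), which is enough to show why the figure sags between volumes: the byte count stays flat while the elapsed time keeps growing. `average_speeds` is a hypothetical helper.

```python
from typing import List, Tuple


def average_speeds(uploads: List[Tuple[float, int]], sample_times: List[float],
                   start: float = 0.0) -> List[float]:
    """Cumulative average upload speed (bytes/s) at each sample time.

    uploads: (finish_time_s, size_bytes) for each completed volume upload.
    start:   time the first upload began.
    """
    speeds = []
    for t in sample_times:
        done = sum(size for finish, size in uploads if finish <= t)
        elapsed = t - start
        speeds.append(done / elapsed if elapsed > 0 else 0.0)
    return speeds
```

With two 50 MB volumes finishing at 10 s and 40 s, the average reads 5 MB/s at 10 s, drops to 2.5 MB/s by 20 s while the next volume is still filling, and only recovers when that volume finishes.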

You can watch this live, in a not-so-human-friendly form, under About → System info, in the Server state properties:

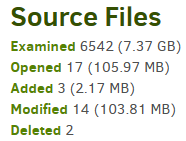

`lastPgEvent : {"BackupID":"2","TaskID":176,"BackendAction":"Put","BackendPath":"ParallelUpload","BackendFileSize":47621623,"BackendFileProgress":47621623,"BackendSpeed":3366342,"BackendIsBlocking":false,"CurrentFilename":null,"CurrentFilesize":0,"CurrentFileoffset":0,"CurrentFilecomplete":false,"Phase":"Backup_WaitForUpload","OverallProgress":0,"ProcessedFileCount":5,"ProcessedFileSize":102088889,"TotalFileCount":6542,"TotalFileSize":7911004076,"StillCounting":false}`
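If you copy that `lastPgEvent` JSON out, a few lines of Python can turn it into something easier to read. This is a sketch: it assumes `BackendSpeed` is in bytes per second and that the `Backend*` size fields describe the volume currently being uploaded, which fits the numbers above but isn’t confirmed. `summarize_progress` is a hypothetical helper.

```python
import json
from typing import Any, Dict

MIB = 1024 ** 2


def _ratio(part: float, whole: float) -> float:
    """Safe fraction; 0.0 when the total is zero or missing."""
    return part / whole if whole else 0.0


def summarize_progress(event_json: str) -> Dict[str, Any]:
    """Turn a lastPgEvent JSON string into a few human-readable figures."""
    ev = json.loads(event_json)
    return {
        "phase": ev.get("Phase"),
        # Progress of the remote volume being uploaded (assumed meaning).
        "upload_fraction": _ratio(ev.get("BackendFileProgress", 0), ev.get("BackendFileSize", 0)),
        # Assumes BackendSpeed is bytes per second.
        "upload_mib_per_s": ev.get("BackendSpeed", 0) / MIB,
        "files_fraction": _ratio(ev.get("ProcessedFileCount", 0), ev.get("TotalFileCount", 0)),
        "bytes_fraction": _ratio(ev.get("ProcessedFileSize", 0), ev.get("TotalFileSize", 0)),
        "still_counting": ev.get("StillCounting", False),
    }
```

For the event shown, that gives a fully sent volume (47621623 of 47621623 bytes), about 3.2 MiB/s, and 5 of 6542 files processed, roughly 1.3% of the source bytes.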

The Complete log in the job’s log has lots of statistics on what was found in the source, plus the BackendStatistics.

Does “storage mediums” refer to the destination? What else are you trying? Was the SSD an attempt to speed things up?