I suspect something else will be the limit first, especially on a backup that actually writes a lot of data.

First there’s preparation of compressed .zip files of blocks in a temporary area, then encryption, transmission, and storage somewhere. There are also a lot of database actions of varying complexity.

How the backup process works reminds me that for many backups where little has changed, much of the time can go to walking the filesystem to see which files look like they were modified. Using usn-policy on Windows can sometimes help with that, but the walk is likely not an issue here, with 5 files.
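To get a feel for how much a filesystem walk costs on a given source tree, here is a minimal Python sketch. It is not how Duplicati does its scan; the function name `find_modified_since` and the plain modification-time comparison are my own assumptions for illustration. It just walks a folder, stats every file, and reports how long that took.

```python
import os
import time


def find_modified_since(root, since_epoch):
    """Walk root and stat every file (hypothetical helper, not Duplicati code).

    Returns (paths with mtime newer than since_epoch, files examined, seconds spent).
    """
    modified = []
    examined = 0
    start = time.perf_counter()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            try:
                st = os.stat(full)
            except OSError:
                # File vanished or is unreadable; skip it in this sketch.
                continue
            examined += 1
            if st.st_mtime > since_epoch:
                modified.append(full)
    return modified, examined, time.perf_counter() - start


# Example use (the path is a placeholder):
# changed, count, secs = find_modified_since(r"D:\source", time.time() - 86400)
# print(f"{count} files examined in {secs:.2f} s, {len(changed)} look modified")
```

With only 5 files the walk time should be negligible, which is the point above. It tends to matter on trees with very many small files.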

The current case is atypical IMO because it’s source-read-intensive, not really backing up that much.

BTW there’s a progress bar on the home page that should show exactly how long it takes on one file.

This would go well with the test I suggested, which is how long it takes to read a big file to find changes.
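If it helps, a raw read test can be done outside Duplicati with a few lines of Python. This is only a rough sketch under my own assumptions (the function name `measure_read_throughput` and the 1 MiB chunk size are arbitrary), and it measures plain sequential reading only, not the hashing, compression, or database work that a backup does.

```python
import time


def measure_read_throughput(path, chunk_size=1024 * 1024):
    """Read a file sequentially and return (bytes_read, seconds, MB_per_second).

    Hypothetical helper for a rough source-read test; chunk_size is arbitrary.
    """
    total = 0
    start = time.perf_counter()
    # buffering=0 avoids Python's own buffering; the OS may still cache the file.
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    rate = total / elapsed / 1e6 if elapsed > 0 else float("inf")
    return total, elapsed, rate


# Example use (the path is a placeholder):
# size, secs, mb_s = measure_read_throughput(r"\\server\share\bigfile.bin")
# print(f"read {size} bytes in {secs:.1f} s = {mb_s:.1f} MB/s")
```

A second run on the same file may look much faster because of the operating system's file cache, so the first run on a freshly mounted or rebooted system is the more honest number. Comparing that rate with the time shown by the progress bar gives a hint whether source reading is the limit.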

Stated that way, what’s the point? Maximum hardware is not affordable, and time/skill is hugely limited.

I once worked for a company where performance-crown-of-the-year helped sales. Doesn’t apply here.

If the statement is to do analysis (e.g. profiling) to tune code, that’s different, but just to get a max rate?

Main thing I can think of is it would help in chases like this, if a fastest-possible-speed-this-year existed.

There are definitely comparisons contributed occasionally of Duplicati versus other backups. There’s a dedicated forum category called Comparison that gets some of this (and others might exist elsewhere).

Big Comparison - Borg vs Restic vs Arq 5 vs Duplicacy vs Duplicati is a recent one, done on a desktop.

Anybody is most welcome to look for a max speed. Maybe we’re doing that here, but without the skills to identify the bottlenecks – which is why I suggest changing the configuration to see if local SSD is faster. Maybe it doesn’t match the actual backup needs, where the drive is remote. It would be to understand…

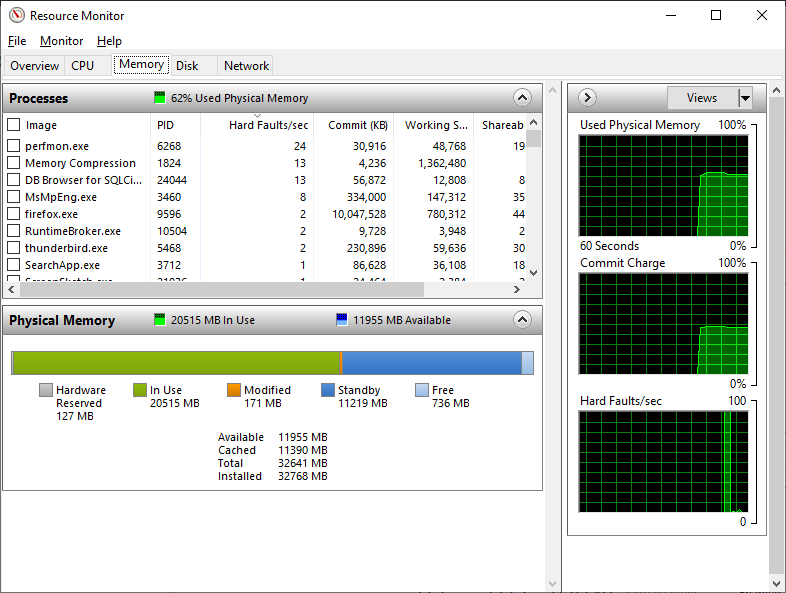

There are other things to potentially look into, e.g. is memory limited so that programs are page faulting. Task Manager shows these if you turn on the PF Delta column. Most are soft page faults, which (I think) are resolved from memory without touching the disk. You can open Resource Monitor from Task Manager to watch hard faults.

On the Disk tab, one can watch C:\pagefile.sys load when hard faults get high. How fast is the drive it’s on?

The answer is easy for this desktop. Everything is on a local mechanical drive, so no need to ask any further.