Aloha fellow Duplicati people,

I’m having a rather difficult time understanding why some of my backups are taking a looooong time to finish.

We’ve got a Hyper-V machine that runs Microsoft Exchange, total size is 1.20 TB.

The machine is stored on both an iSCSI disk on a TrueNAS machine, connected at 10 Gbit/s, and a locally attached SSD.

The backup is taking 8 hours and 38 minutes.

However, according to the log, the Test phase took 12 seconds, the Compact phase took 1 minute and 10 seconds, and the Delete phase took 4 minutes and 48 seconds.
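To put numbers on that, here is a quick back-of-the-envelope sketch in Python. It assumes the 1.20 TB is decimal terabytes and that the whole machine is read once per run; both are my assumptions, not something the log confirms. The function and variable names are just for illustration.

```python
from datetime import timedelta

# Durations as reported above.
TOTAL = timedelta(hours=8, minutes=38)
PHASES = {
    "Test": timedelta(seconds=12),
    "Compact": timedelta(minutes=1, seconds=10),
    "Delete": timedelta(minutes=4, seconds=48),
}

# Assumption: 1.20 TB means 1.20 * 10^12 bytes, all of it read during the run.
SOURCE_BYTES = 1.20e12


def unaccounted_time(total, phases):
    """Time left over once the listed phases are subtracted from the total."""
    accounted = sum(phases.values(), timedelta())
    return total - accounted


def average_mb_per_s(num_bytes, duration):
    """Average throughput in decimal MB/s over the given duration."""
    return num_bytes / duration.total_seconds() / 1e6


remaining = unaccounted_time(TOTAL, PHASES)
print("Listed phases:", sum(PHASES.values(), timedelta()))
print("Unaccounted:  ", remaining)
print(f"Average rate:  {average_mb_per_s(SOURCE_BYTES, remaining):.1f} MB/s")
```

So the three listed phases add up to a bit over 6 minutes, and roughly 8.5 hours are spent elsewhere, at an average of about 39 MB/s if the full 1.20 TB really is read each time.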

The backup is written to an SMB share on the same TrueNAS, also reached at 10 Gbit/s. (There’s one 10 Gbit/s switch between the Hyper-V host and the TrueNAS, same rack, no latency issues.)

So what in God’s name is the server doing that’s taking such a long time?

The log in its entirety can be found here: EXCHANGE_DUPLICATI_LOG - Pastebin.com

Another server is facing a similar issue. It’s connected to the TrueNAS at 1 Gbit/s, but when I look at Duplicati it shows:

Current action: Backup_ProcessingFiles

4 files (202.84GB) to go at 3.00 MB/s

However, when I look at the physical server itself during “Backup_ProcessingFiles”, it’s often receiving more data than it’s uploading. Yesterday it was receiving hundreds of Mbit/s while Duplicati was in “Backup_ProcessingFiles”.
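For scale, a small sketch of what the status line implies, assuming Duplicati's "GB" and "MB/s" are decimal units (I have not checked whether it actually reports binary units). The helper names are made up for this example.

```python
def eta_hours(remaining_gb, rate_mb_s):
    """Hours needed to process remaining_gb at rate_mb_s (decimal units assumed)."""
    remaining_mb = remaining_gb * 1000
    return remaining_mb / rate_mb_s / 3600


def mb_s_to_mbit_s(rate_mb_s):
    """Convert megabytes per second to megabits per second."""
    return rate_mb_s * 8


remaining_gb = 202.84  # from the status line
rate_mb_s = 3.00       # from the status line
link_mbit_s = 1000     # the 1 Gbit/s connection to the TrueNAS

rate_mbit_s = mb_s_to_mbit_s(rate_mb_s)
print(f"ETA at current rate: {eta_hours(remaining_gb, rate_mb_s):.1f} hours")
print(f"Reported rate:       {rate_mbit_s:.0f} Mbit/s")
print(f"Share of link:       {rate_mbit_s / link_mbit_s:.1%}")
```

At 3.00 MB/s the remaining 202.84 GB would take close to 19 hours, and 3.00 MB/s is only 24 Mbit/s, far below both the 1 Gbit/s link and the hundreds of Mbit/s the server is receiving.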

Exactly what is Duplicati doing during that time?

How can I troubleshoot where the slowdowns are happening?
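One thing that could help rule out the destination is timing a plain sequential write to the SMB share, outside of Duplicati. Below is a minimal sketch of that idea; it is not a Duplicati feature, the function name is made up, and the share path in the usage comment is a placeholder. It writes incompressible random data and forces it to disk before stopping the clock, so it only measures raw write speed, not anything Duplicati does with hashing, compression, or encryption.

```python
import os
import time


def write_throughput_mib_s(directory, total_mib=256, chunk_mib=4):
    """Write a temporary file into `directory` and return the rate in MiB/s.

    Random data is used so that compression on the storage side does not
    inflate the result. The temporary file is removed afterwards.
    """
    chunk = os.urandom(chunk_mib * 1024 * 1024)
    chunks = total_mib // chunk_mib
    path = os.path.join(directory, "throughput_test.tmp")
    start = time.perf_counter()
    try:
        with open(path, "wb") as f:
            for _ in range(chunks):
                f.write(chunk)
            f.flush()
            os.fsync(f.fileno())  # make sure the data actually left the cache
        elapsed = time.perf_counter() - start
    finally:
        if os.path.exists(path):
            os.remove(path)
    return (chunks * chunk_mib) / elapsed


# Example call (placeholder path, replace with the real backup share):
# print(write_throughput_mib_s(r"\\truenas\backups", total_mib=1024))
```

If this reports hundreds of MiB/s while the backup crawls along at a few MB/s, the destination share is probably not the bottleneck, and the time is more likely going into reading or processing the source.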

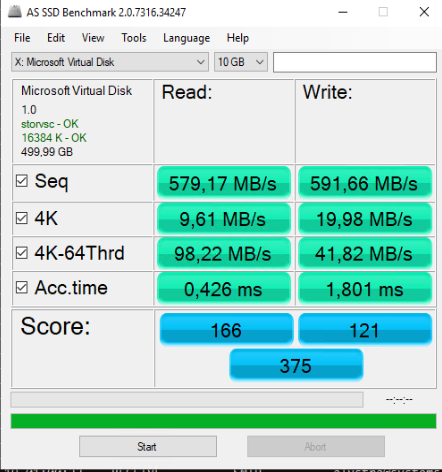

I’ve run SSD benchmarks on the iSCSI disks from the Exchange guest OS, and the results were just fine: